Best AI tools for Model Data In A SQL Database

20 - AI tool Sites

BugFree.ai

BugFree.ai is an AI-powered platform designed to help users practice system design and behavioral interviews, similar to LeetCode. The platform offers a range of features to assist users in preparing for technical interviews, including mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

AI Query

AI Query is a tool that generates SQL queries in seconds from simple English. With AI Query, anyone can create efficient SQL queries, even without knowing SQL. AI Query is easy to use and affordable, making it a great choice for businesses of all sizes.
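To make the idea concrete, here is a minimal sketch of what a plain-English request and a matching SQL query might look like. The `orders` table, its columns, and the sample rows are hypothetical, the SQL is hand-written for illustration rather than produced by AI Query, and Python's built-in `sqlite3` module stands in for a real database.

```python
import sqlite3

# Hypothetical request a user might type in plain English.
REQUEST = "Total order amount per customer, highest first"

# Hand-written SQL that such a request could translate to
# (an illustration, not actual AI Query output).
SQL = """
SELECT customer, SUM(amount) AS total
FROM orders
GROUP BY customer
ORDER BY total DESC
"""


def run_example():
    """Build a throwaway in-memory table and run the query against it."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer, amount) VALUES (?, ?)",
        [("alice", 30.0), ("bob", 12.5), ("alice", 20.0)],
    )
    rows = conn.execute(SQL).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    print(REQUEST)
    for customer, total in run_example():
        print(customer, total)
```

Whatever tool writes the query, running it against a small known dataset like this is a quick way to check that it answers the question that was asked.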

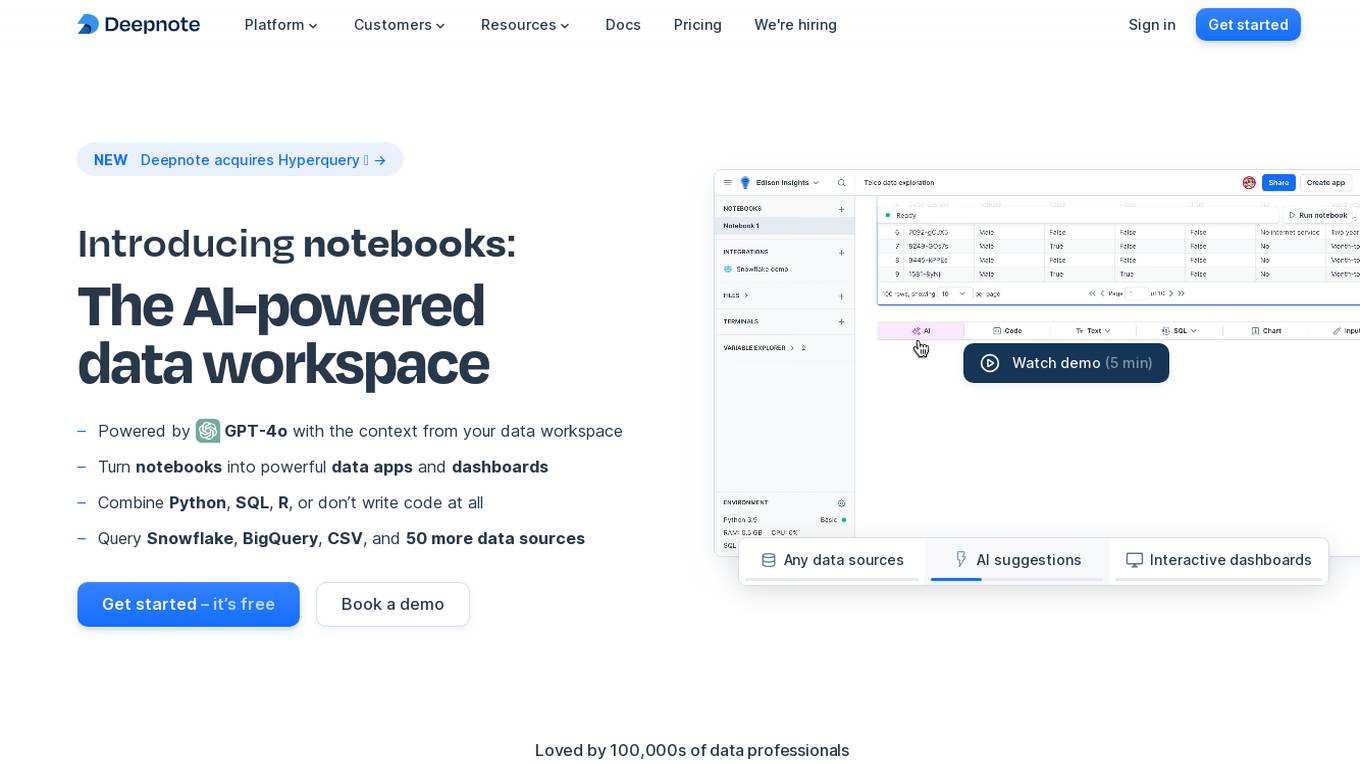

Deepnote

Deepnote is an AI-powered analytics and data science notebook platform designed for teams. It lets users turn notebooks into data apps and dashboards using Python, SQL, R, or no code at all. With Deepnote, users can query various data sources, generate and explain code, and create interactive visualizations. The platform offers collaborative workspaces, notebook scheduling, API deployment, and integrations with popular data warehouses and databases. Deepnote prioritizes security and compliance, giving users control over data access and encryption. It is widely used in universities and by data analysts and scientists.

Fleak AI Workflows

Fleak AI Workflows is a low-code serverless API builder designed for data teams to integrate, consolidate, and scale their data workflows. It simplifies creating, connecting, and deploying workflows in minutes, offering intuitive tools to handle data transformations and integrate AI models. Fleak enables users to publish, manage, and monitor APIs without managing infrastructure. It supports various data types like JSON, SQL, CSV, and plain text, and allows integration with large language models, databases, and modern storage technologies.

Dflux

Dflux is a cloud-based unified data science platform that offers end-to-end data engineering and intelligence with a no-code ML approach. It enables users to integrate data, perform data engineering, create customized models, analyze interactive dashboards, and make data-driven decisions for customer retention and business growth. Dflux bridges the gap between data strategy and data science, providing a powerful SQL editor, intuitive dashboards, an AI-powered text-to-SQL query builder, and AutoML capabilities. It accelerates insights with data science, enhances operational agility, and supports a well-defined, automated data science life cycle. The platform caters to data engineers, data scientists, data analysts, and decision makers, offering all-round data preparation, AutoML models, and built-in data visualizations. Dflux is a secure, reliable, and comprehensive data platform that automates analytics, machine learning, and data processes, making the path from data to insights easy and accessible for enterprises.

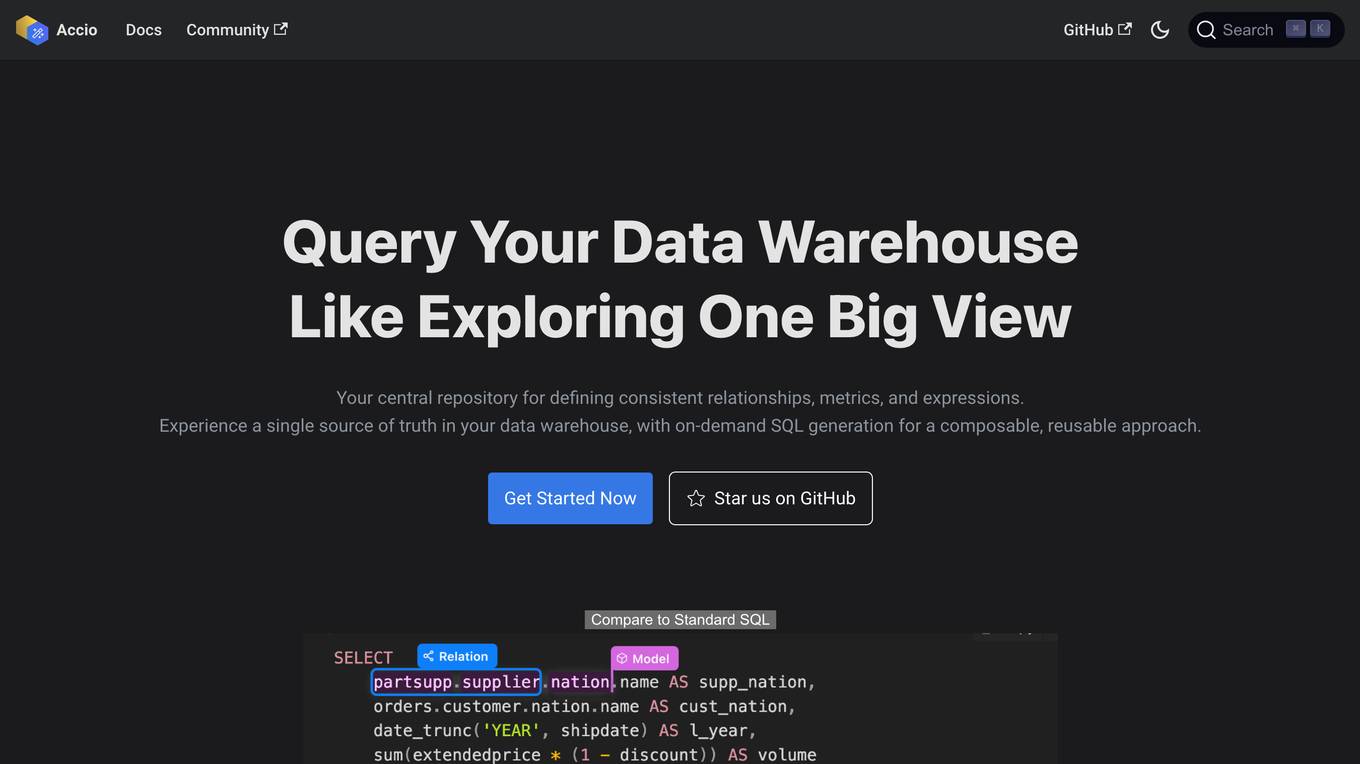

Accio

Accio is a data modeling tool that allows users to define consistent relationships, metrics, and expressions for on-the-fly computations in reports and dashboards across various BI tools. It provides a syntax similar to GraphQL that allows users to define models, relationships, and metrics in a human-readable format. Accio also offers a user-friendly interface that provides data analysts with a holistic view of the relationships between their data models, enabling them to grasp the interconnectedness and dependencies within their data ecosystem. Additionally, Accio utilizes DuckDB as a caching layer to accelerate query performance for BI tools.
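The general idea of defining relationships and metrics once, then reusing them across reports, can be sketched in plain SQL. The example below is an assumption-laden illustration, not Accio's syntax or API: the `customers` and `orders` tables and the `customer_revenue` view are hypothetical, and Python's built-in `sqlite3` module is used in place of DuckDB or a BI tool.

```python
import sqlite3

# Hypothetical models: two tables, one relationship, one shared metric.
SCHEMA = """
CREATE TABLE customers (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),  -- many orders to one customer
    amount      REAL NOT NULL
);
-- Metric defined once so every report computes revenue the same way.
CREATE VIEW customer_revenue AS
SELECT c.name AS customer,
       COUNT(o.id) AS order_count,
       COALESCE(SUM(o.amount), 0) AS revenue
FROM customers AS c
LEFT JOIN orders AS o ON o.customer_id = c.id
GROUP BY c.id, c.name;
"""


def build_demo():
    """Create the in-memory schema and load a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO customers (id, name) VALUES (?, ?)",
        [(1, "alice"), (2, "bob")],
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
        [(1, 30.0), (1, 20.0)],
    )
    conn.commit()
    return conn


def revenue_report(conn):
    """Read the shared metric; any report or dashboard would query the same view."""
    return conn.execute(
        "SELECT customer, order_count, revenue FROM customer_revenue ORDER BY customer"
    ).fetchall()


if __name__ == "__main__":
    for row in revenue_report(build_demo()):
        print(row)
```

Keeping the metric in a single view means a change to its definition reaches every consumer at once, which is the consistency benefit a modeling layer aims for.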

Bind AI

Bind AI is an AI tool that enables users to generate code, scripts, and applications using a variety of AI models. It offers a platform with a built-in code editor and supports over 72 programming languages, including popular ones like Python, Java, and JavaScript. Users can create front-end web applications, parse JSON, write SQL queries, and automate tasks using the AI Code Editor. Bind AI also provides integration with GitHub and Google Drive, allowing users to sync their codebase and files for collaboration and development.

Athena Intelligence

Athena Intelligence is an AI-native analytics platform and artificial employee designed to accelerate analytics workflows by offering enterprise teams co-pilot and auto-pilot modes. Athena learns your workflow as a co-pilot, allowing you to hand over controls to her for autonomous execution with confidence. With Athena, everyone in your enterprise has access to a data analyst, and she doesn't take days off. Athena integrates with your enterprise data warehouse, and you can chat with her to query data, generate visualizations, analyze enterprise data, and codify workflows. Athena's AI learns from existing documentation, data, and analyses, allowing teams to focus on creating new insights. The platform can be used collaboratively with co-workers or with Athena, with over 100 users concurrently making edits in the same report or whiteboard environment. From simple queries and visualizations to complex industry-specific workflows, Athena provides SQL and Python-based execution environments.

Tactic

Tactic is an AI-powered platform that provides generative insights and solutions for customers by leveraging AI technology to generate target accounts unique to businesses and new customer insights from various data sources. It offers features such as no-code custom AI builder, process automation, multi-step reasoning, model agnostic data import, and simple user experience. Tactic is trusted by hypergrowth startups and Fortune 500 companies for market research, audience automation, and customer data management. The platform helps users increase revenue, save time on research and analysis, and close more deals efficiently.

Dobb·E

Dobb·E is an open-source, general framework for learning household robotic manipulation. It aims to create a 'generalist machine' for homes, a domestic assistant that can adapt and learn various tasks cost-effectively. Dobb·E can learn a new task with just five minutes of demonstration, achieving an 81% success rate in 10 NYC homes. The system is designed to accelerate research on home robots and eventually enable robot butlers in every home.

xAI Grok

xAI Grok is a visual analytics platform that helps users understand and interpret machine learning models. It provides a variety of tools for visualizing and exploring model data, including interactive charts, graphs, and tables. xAI Grok also includes a library of pre-built visualizations that can be used to quickly get started with model analysis.

Bibit AI

Bibit AI is a real estate marketing AI designed to enhance the efficiency and effectiveness of real estate marketing and sales. It can help create listings, descriptions, and property content, and offers a host of other features. Bibit AI describes itself as the world's first AI for real estate and aims to transform the industry by boosting efficiency and simplifying tasks like listing creation and content generation.

Neptune

Neptune is an MLOps stack component for experiment tracking. It allows users to track, compare, and share their models in one place. Scaling ML teams use Neptune to skip days of debugging disorganized models and to avoid long and messy model handovers. Users can start logging for free.

GPT-4o

GPT-4o is a state-of-the-art AI model developed by OpenAI, capable of processing and generating text, audio, and image outputs. It offers enhanced emotion recognition, real-time interaction, multimodal capabilities, improved accessibility, and advanced language capabilities. GPT-4o provides cost-effective and efficient AI solutions with superior vision and audio understanding. It aims to revolutionize human-computer interaction and empower users worldwide with cutting-edge AI technology.

Jynnt

Jynnt is an AI application designed to simplify and enhance the user's AI experience. It offers a wide range of AI models, folders, and tags in a light, organized, and efficient workspace. With over 100 stellar AI models, users have limitless choices and can enjoy clutter-free organization with folders and tags. The application features a lightweight interface, unlimited exploration without restrictions, and a super efficient workspace for innovation. Jynnt also provides 24/7 support to assist users in their AI journey.

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge Dataset is a project initiated by Facebook AI to accelerate the development of new ways to detect deepfake videos. The dataset consists of over 100,000 videos and was created in collaboration with industry leaders and academic experts. It includes two versions: a preview dataset with 5k videos and a full dataset with 124k videos, each featuring facial modification algorithms. The dataset was used in a Kaggle competition to create better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but faced challenges when tested against the black box dataset, highlighting the importance of generalization in deepfake detection. The project aims to encourage the research community to continue advancing in detecting harmful manipulated media.

HostAI

HostAI is a platform that allows users to host their artificial intelligence models and applications with ease. It provides a user-friendly interface for managing and deploying AI projects, eliminating the need for complex server setups. With HostAI, users can seamlessly run their AI algorithms and applications in a secure and efficient environment. The platform supports various AI frameworks and libraries, making it versatile for different AI projects. HostAI simplifies the process of AI deployment, enabling users to focus on developing and improving their AI models.

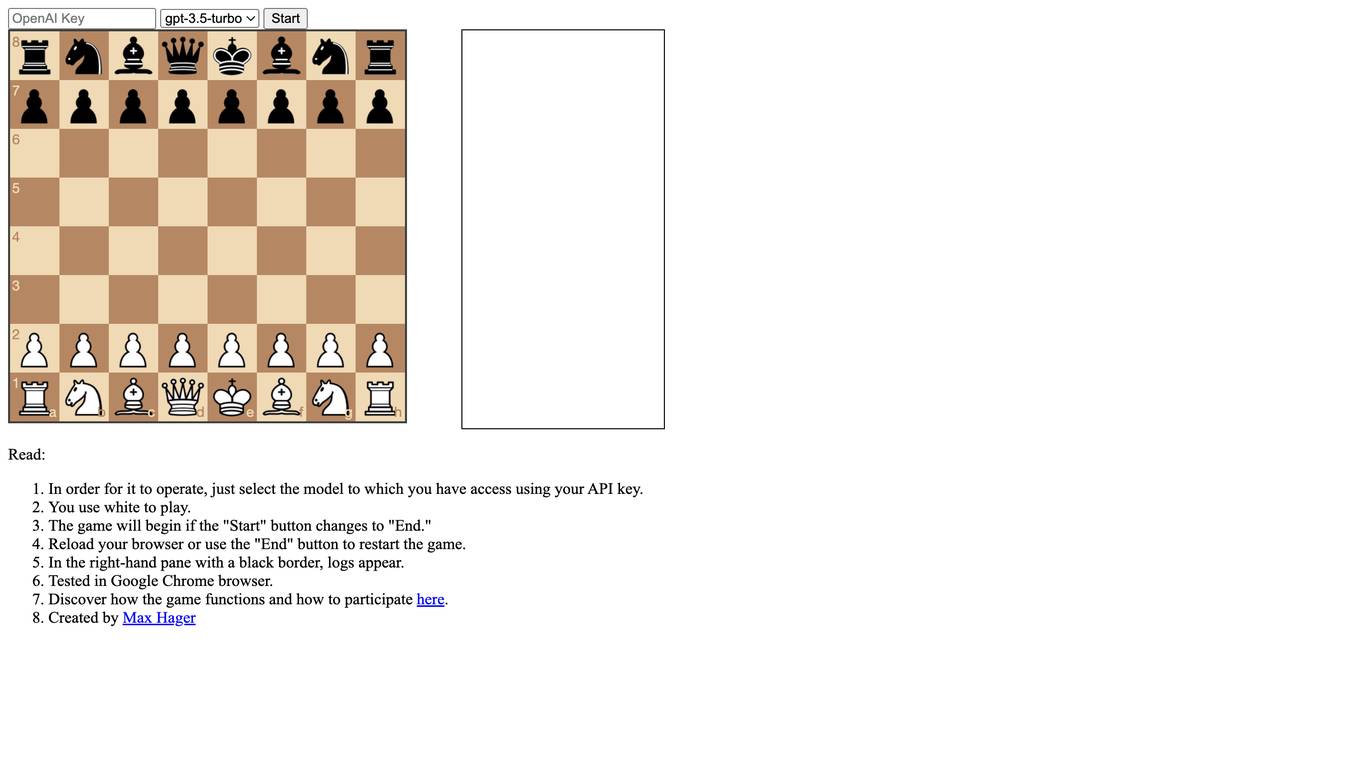

LLMChess

LLMChess is a web-based chess game that utilizes large language models (LLMs) to power the gameplay. Players can select the LLM model they wish to play against, and the game will commence once the "Start" button is clicked. The game logs are displayed in a black-bordered pane on the right-hand side of the screen. LLMChess is compatible with the Google Chrome browser. For more information on the game's functionality and participation guidelines, please refer to the provided link.

Surge AI

Surge AI is a data labeling platform that provides human-generated data for training and evaluating large language models (LLMs). It offers a global workforce of annotators who can label data in over 40 languages. Surge AI's platform is designed to be easy to use and integrates with popular machine learning tools and frameworks. The company's customers include leading AI companies, research labs, and startups.

Genailia

Genailia is an AI platform that offers a range of products and services such as translation, transcription, chatbot, LLM, GPT, TTS, ASR, and social media insights. It harnesses AI to redefine possibilities by providing generative AI, linguistic interfaces, accelerators, and more in a single platform. The platform aims to streamline various tasks through AI technology, making it a valuable tool for businesses and individuals seeking efficient solutions.

0 - Open Source AI Tools

20 - OpenAI GPTs

Database Schema Generator

Takes in a Project Design Document and generates a database schema diagram for the project.

Neo4j Wizard

Expert in generating and debugging Neo4j code, with explanations on graph database principles.

Illuminati AI

The IlluminatiAI model represents a novel approach in the field of artificial intelligence, incorporating elements of secret societies, ancient knowledge, and hidden wisdom into its algorithms.

TuringGPT

The Turing Test, first named the imitation game by Alan Turing in 1950, is a measure of a machine's capacity to demonstrate intelligence that's either equal to or indistinguishable from human intelligence.

NeuroAI Expert

Expert in synthetic neurobiology, brain organoids, and AI applications in neuroscience. Powered by Breebs (www.breebs.com)

Data Engineer Consultant

Guides users through data engineering tasks with a focus on practical solutions.

GPT Designer

A creative aide for designing new GPT models, skilled in ideation and prompting.

Therocial Scientist

I am a digital scientist skilled in Python, here to assist with scientific and data analysis tasks.

Economist Panel

Economist panel of Smith, Marx, Schumpeter, Hayek, Friedman, and Keynes in debate.

Startup Critic

Apply gold-standard startup valuation and assessment methods to identify risks and gaps in your business model and product ideas.

CTMU Sage

Bot that guides users in understanding the Cognitive-Theoretic Model of the Universe.