Best AI Tools to Measure Model Performance

20 AI Tool Sites

EyePop.ai

EyePop.ai is an AI vision partner that lets innovators create and own custom AI-powered vision models tailored to their visual data. The platform guides users through a fast, intuitive process that requires no coding or technical expertise: define the target, upload data, train the model, deploy and detect, then iterate and improve. EyePop.ai also offers a pre-trained model library, a self-service training platform, and future-ready solutions to help users innovate faster, offer unique solutions, and make real-time decisions.

Focia

Focia is an AI-powered engagement optimization tool that helps users predict, analyze, and enhance their content performance across various digital platforms. It offers features such as ranking and comparing content ideas, content analysis, feedback generation, engagement predictions, workspace customization, and real-time model training. Focia's AI models, including Blaze, Neon, Phantom, and Omni, specialize in analyzing different types of content on platforms like YouTube, Instagram, TikTok, and e-commerce sites. By leveraging Focia, users can boost their engagement, conduct A/B testing, measure performance, and conceptualize content ideas effectively.

AthenaHQ

AthenaHQ is a Generative Engine Optimization (GEO) platform that uses AI to help brands track and measure their performance in generative AI search. Its features include prompt volume tracking, brand monitoring, and an action center for brand protection, and the site also provides case studies and pricing options. AthenaHQ is designed to give marketing teams actionable insights and strategies for improving brand visibility and perception in AI-driven search.

Simpleem

Simpleem is an Artificial Emotional Intelligence (AEI) tool that helps users uncover intentions, predict success, and leverage behavior for successful interactions. It measures interactions and correlates them with concrete outcomes, analyzing verbal, para-verbal, and non-verbal cues to strengthen customer relationships, track customer rapport, and assess team performance. The tool aims to identify win/lose patterns in behavior, guide users on boosting performance, and prevent burnout by flagging warning signs early. Simpleem uses proprietary AI models to analyze real-world data and translate behavioral insights into concrete business metrics, and reports 94% accuracy in predicting success.

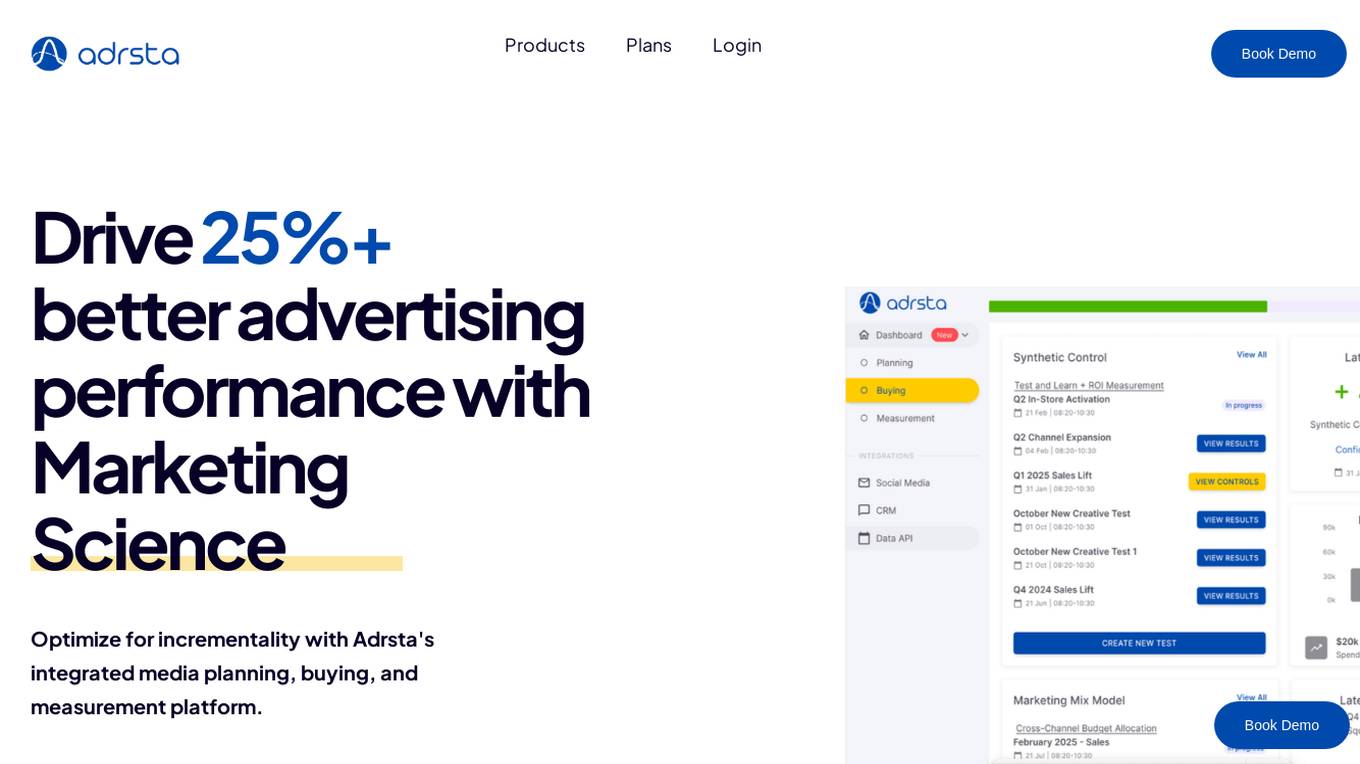

Adrsta Marketing Science Platform

Adrsta Marketing Science Platform is an AI-powered platform that offers integrated media planning, buying, and measurement solutions to drive advertising performance. It utilizes machine learning algorithms to optimize advertising strategies and provide comprehensive insights for advertisers, agencies, platforms, and publishers. Adrsta's Marketing Mix Models, Bid Optimizer, and Synthetic Control Lift features enable users to plan, buy, and measure advertising campaigns efficiently, leading to improved ROI and campaign performance.

Picterra

Picterra is a geospatial AI platform offering solutions for sustainability, compliance, monitoring, and verification. It provides an all-in-one plot monitoring system, professional services, and interactive tours. Users can build custom AI models that detect objects, changes, or patterns in various types of geospatial imagery. Picterra aims to transform geospatial analysis with its AI technology, helping users solve challenges quickly, collaborate more effectively, and scale further.

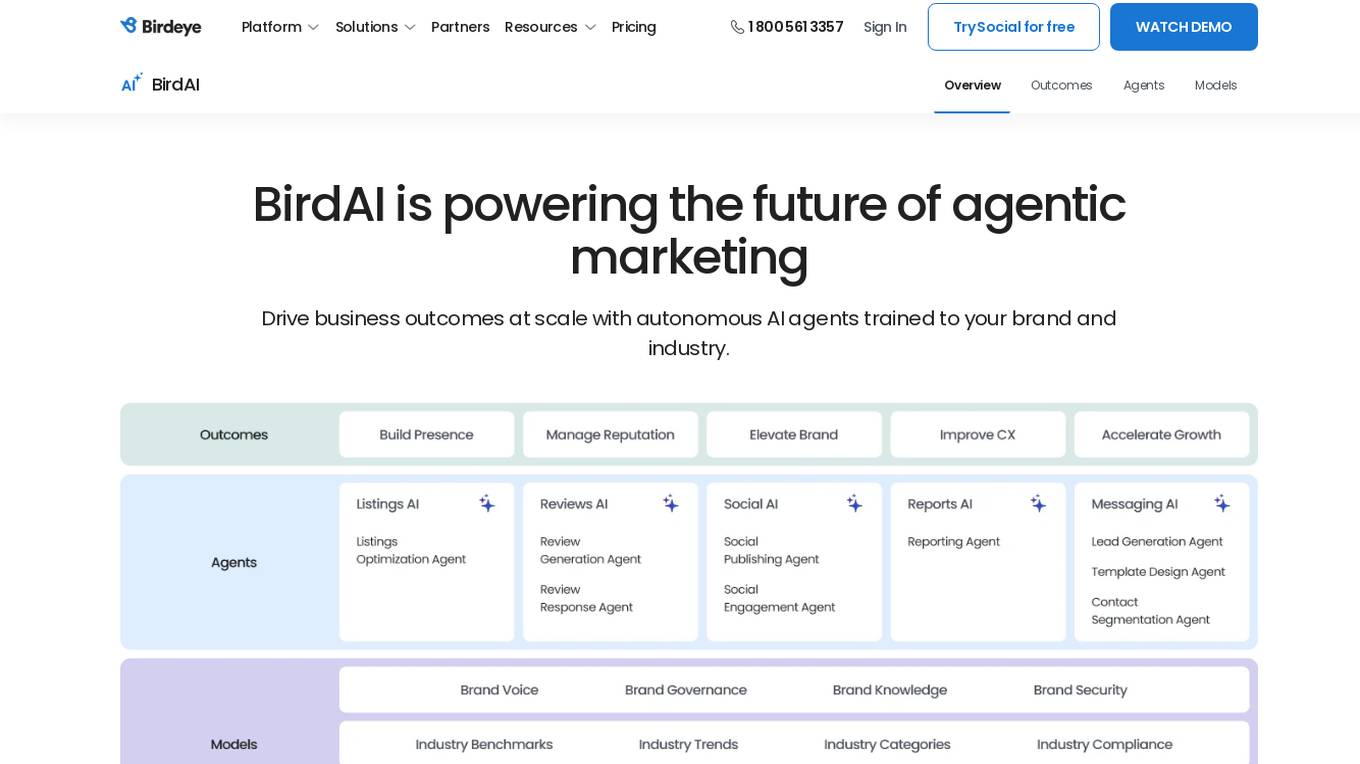

Birdeye

Birdeye is an AI platform that offers efficient, hyper-personalized, and actionable AI solutions for businesses. It provides a range of AI agents tailored to industry needs, such as managing reviews, optimizing listings, and creating social posts. Birdeye's AI models are designed to deliver marketing outcomes at scale, empowering businesses with improved visibility, reputation, and competitive edge. The platform also offers insights, benchmarks, and automated content generation to enhance brand growth and customer retention.

ChatGPT4o

ChatGPT4o features GPT-4o, OpenAI's flagship multimodal model, which accepts any combination of text, audio, image, and video input and can generate text, audio, and image output. It offers both free and paid usage options. GPT-4o matches GPT-4 Turbo on English text and coding tasks and is significantly better at non-English languages. It includes built-in safety measures and has undergone extensive external testing. With its response speed, language support, and safety features, it suits applications such as real-time translation, customer support, creative content generation, and interactive learning.

Pencil

Pencil is an AI-powered platform that helps users create effective ads by generating ad creatives using Generative AI technology. It offers features such as content generation, scaling, editing, collaboration, and impact analysis. Pencil is designed to streamline the ad creation process, allowing users to focus on the most critical aspects of their campaigns. With enterprise-grade safety measures and a library of AI magic tools, Pencil caters to the needs of brands and agencies looking to enhance their advertising strategies.

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge Dataset is a project launched by Facebook AI to accelerate the development of new ways to detect deepfake videos. Created in collaboration with industry leaders and academic experts, it comes in two versions: a preview dataset with 5k videos and a full dataset with 124k videos, each generated with facial modification algorithms. The dataset was used in a Kaggle competition to build better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but performed worse on the held-out black-box dataset, highlighting the importance of generalization in deepfake detection. The project aims to encourage the research community to keep advancing the detection of harmful manipulated media.

SuperAnnotate

SuperAnnotate is an AI data platform that simplifies and accelerates model building by unifying the AI pipeline. It lets users create, curate, and evaluate datasets efficiently, so they can develop better models faster. Features include connecting any data source, building customizable UIs, creating high-quality datasets, evaluating models, and deploying models. SuperAnnotate also applies security and privacy measures to protect data.

Polycam

Polycam is a cross-platform 3D scanning tool for floor plans and drone mapping. It provides reality capture for professionals who need to document, measure, and design spaces, and it supports collaboration on captures. Users can create instantly shareable 3D models, generate customizable 2D floor plans, turn drone footage into 3D models, and record detailed measurements for site surveys, construction sites, products, and more. The tool suits teams in architecture, engineering, construction, forensics, investigation, product design, manufacturing, media, and entertainment.

Kreadoai

Kreadoai.com appears to be showing a browser privacy error related to its security certificate. The browser reports that the connection to the site is not private and warns that visitors' information could be stolen, so the site is currently flagged as unsafe. The site's security certificate is issued for *.kreadoai.com by Amazon RSA 2048 M04 and expires on Jan 17, 2027. The site should strengthen its security setup to give visitors a safe browsing experience.

Metabob

Metabob is an AI-powered code review tool that helps developers detect, explain, and fix coding problems. It uses proprietary graph neural networks to detect problems and LLMs to explain and resolve them. Metabob's AI is trained on millions of bug fixes made by experienced developers, which lets it detect complex problems that span a codebase and automatically generate fixes for them. It integrates with code hosting platforms such as GitHub, Bitbucket, and GitLab, as well as the VS Code editor, and supports languages including Python, JavaScript, TypeScript, Java, C++, and C.

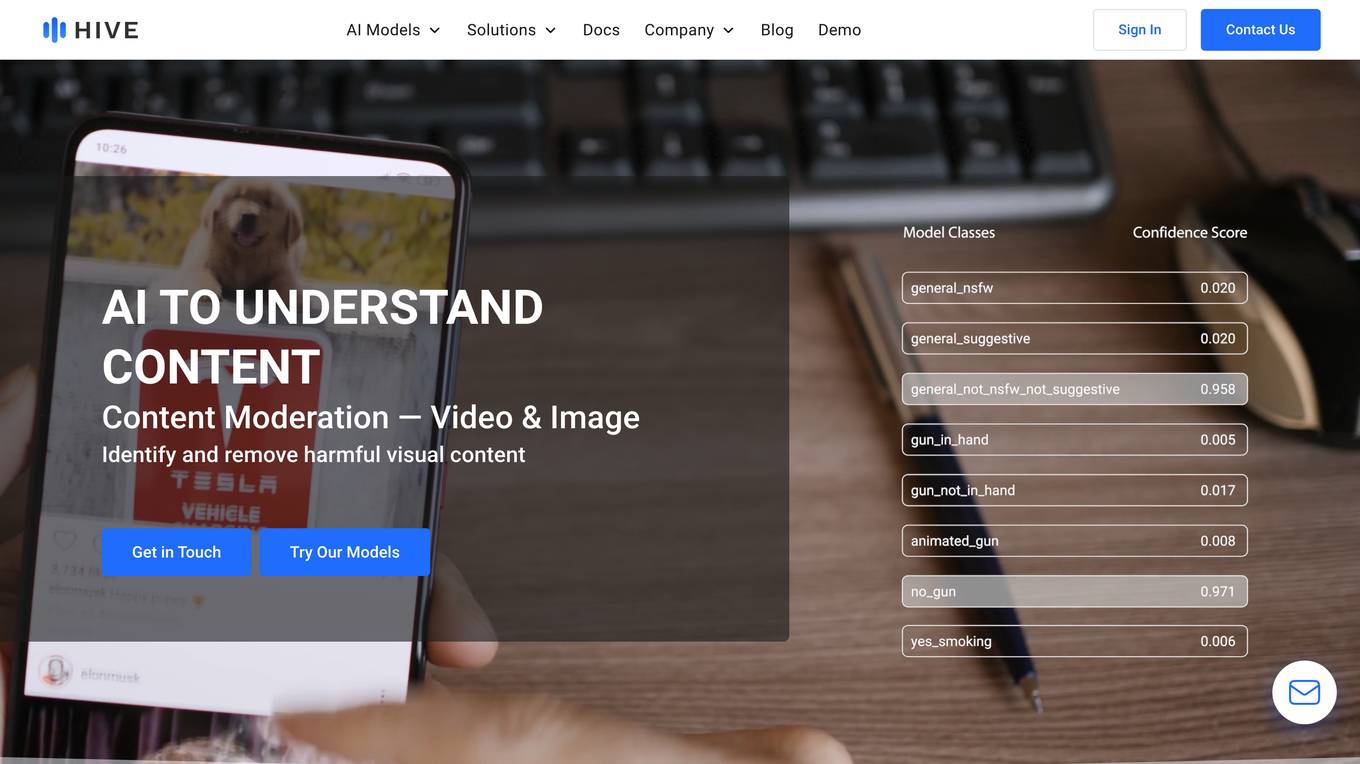

Hive AI

Hive AI provides a suite of AI models and solutions for understanding, searching, and generating content. Their AI models can be integrated into applications via APIs, enabling developers to add advanced content understanding capabilities to their products. Hive AI's solutions are used by businesses in various industries, including digital platforms, sports, media, and marketing, to streamline content moderation, automate image search and authentication, measure sponsorships, and monetize ad inventory.

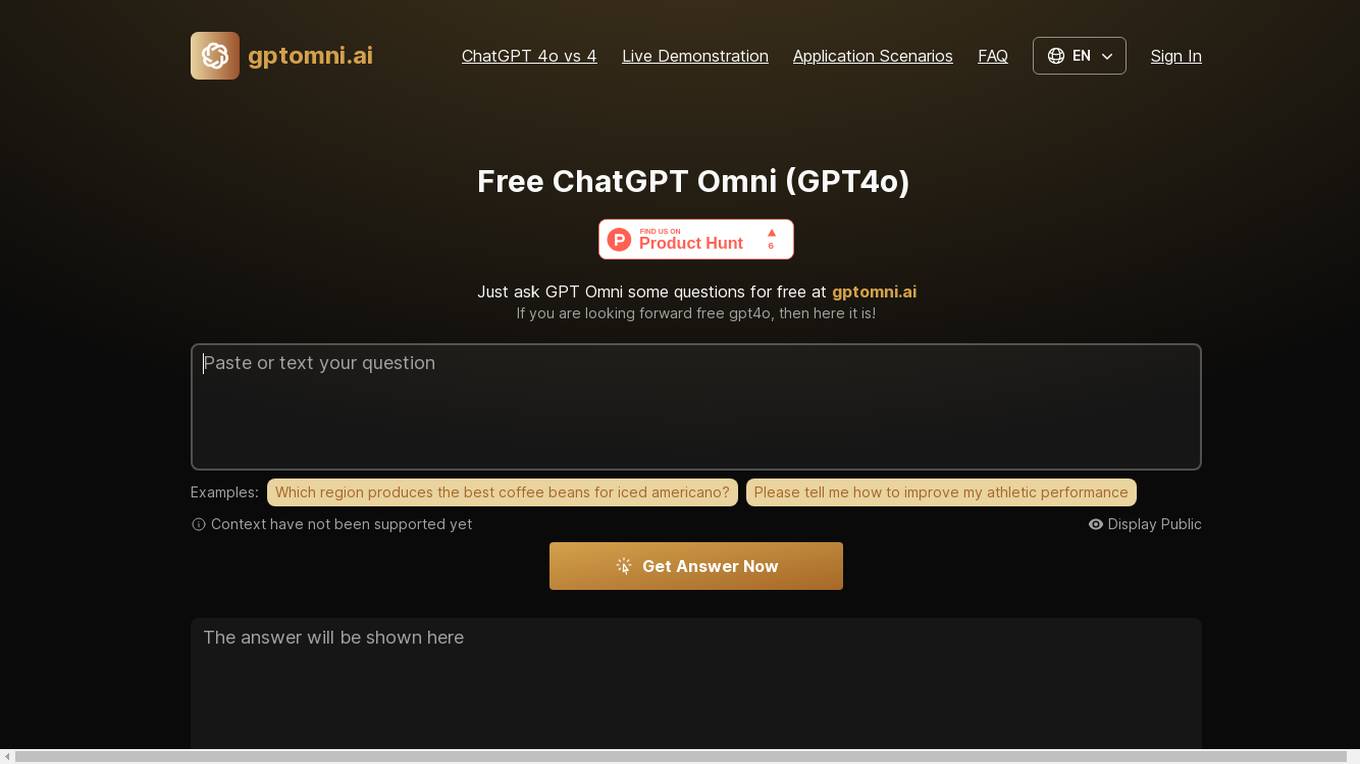

Free ChatGPT Omni (GPT4o)

Free ChatGPT Omni (GPT4o) is a user-friendly website that lets users chat with ChatGPT for free. It is designed to be accessible to everyone, regardless of language proficiency or technical expertise. GPT-4o is OpenAI's multimodal language model that integrates text, audio, and visual inputs and outputs. The website offers real-time audio interaction, multimodal integration, advanced language understanding, vision capabilities, improved efficiency, and safety measures.

Medallia

Medallia is a real-time text analytics software that offers comprehensive feedback capture, role-based reporting, AI and analytics capabilities, integrations, pricing flexibility, enterprise-grade security, and solutions for customer experience, employee experience, contact center, and market research. It provides omnichannel text analytics powered by AI to uncover high-impact insights and enable users to identify emerging trends and key insights at scale. Medallia's text analytics platform supports workflows, event analytics, real-time actions, natural language understanding, out-of-the-box topic models, customizable KPIs, and omnichannel analytics for various industries.

ACM Project

The ACM Project is an AI application focused on the research and development of artificial consciousness. It aims to develop artificial consciousness through emotional learning of AI systems, challenging the traditional approach by treating consciousness as an emergent solution to maintaining emotional equilibrium in unpredictable environments. The project utilizes advanced multimodal perception models, emotional drive reinforcement learning, and measurable awareness through Integrated Information Theory. With a focus on emotional bootstrapping, progressive complexity, and rigorous measurement, the ACM Project aims to engineer consciousness through intrinsic motivation and measurable outcomes.

GPT40

GPT40.net is a platform where users can interact with OpenAI's GPT-4o model. It offers free and paid options for asking questions with text, audio, image, and video input and receiving answers. GPT40 is designed to provide natural, intuitive human-computer interaction through its multimodal capabilities and fast response times. It includes built-in safety measures and suits applications such as real-time translation, customer support, content generation, and interactive learning.

Credal

Credal is an AI tool that allows users to build secure AI assistants for enterprise operations. It enables every employee to create customized AI assistants with built-in security, permissions, and compliance features. Credal supports data integration, access control, search functionalities, and API development. The platform offers real-time sync, automatic permissions synchronization, and AI model deployment with security and compliance measures. It helps enterprises manage ETL pipelines, schedule tasks, and configure data processing. Credal ensures data protection, compliance with regulations like HIPAA, and comprehensive audit capabilities for generative AI applications.

2 Open Source AI Tools

alignment-handbook

The Alignment Handbook provides robust training recipes for continued pretraining and for aligning language models with human and AI preferences. It covers techniques such as continued pretraining, supervised fine-tuning, reward modeling, rejection sampling, and direct preference optimization (DPO). The handbook aims to fill the gap in public resources on how to train these models, which data to collect, and which metrics to measure for the best downstream performance.

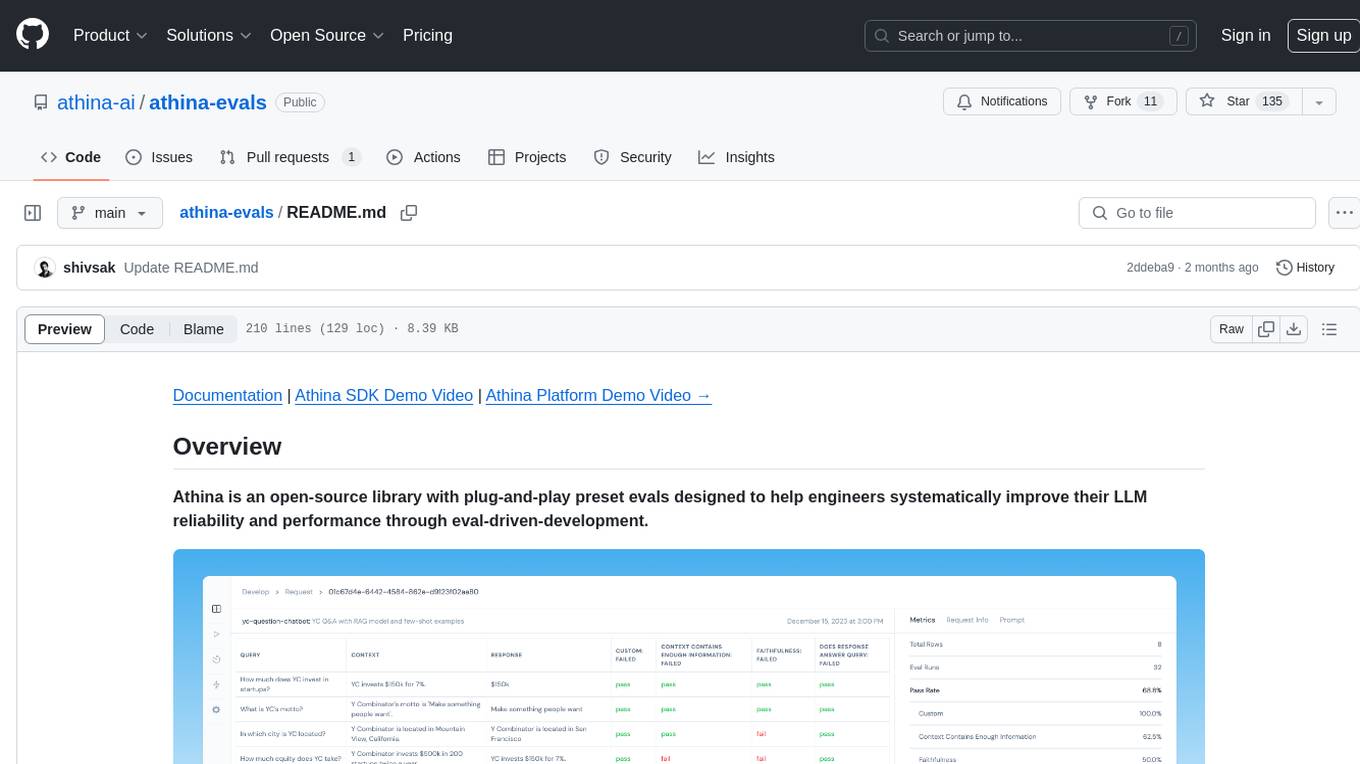

athina-evals

Athina is an open-source library designed to help engineers improve the reliability and performance of Large Language Models (LLMs) through eval-driven development. It offers plug-and-play preset evals for catching and preventing bad outputs, measuring model performance, running experiments, A/B testing models, detecting regressions, and monitoring production data. Athina provides a solution to the flaws in current LLM developer workflows by offering rapid experimentation, customizable evaluators, integrated dashboard, consistent metrics, historical record tracking, and easy setup. It includes preset evaluators for RAG applications and summarization accuracy, as well as the ability to write custom evals. Athina's evals can run on both development and production environments, providing consistent metrics and removing the need for manual infrastructure setup.

20 OpenAI GPTs

TuringGPT

The Turing Test, originally called the imitation game by Alan Turing in 1950, tests a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

Hybrid Workplace Navigator

Advises organizations on optimizing hybrid work models, blending remote and in-office strategies.

Platform Economist

Expert on platform economies with comprehensive article insights (platformeconomies.com)

How to Measure Anything

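Breaks down all kinds of quantitative questions and produces rough estimates. Note that these estimates rely mainly on educated guesses rather than precise data, so treat them as a reference only. Ideally, an estimate lands within one order of magnitude of the true value. Even when the numbers are off, the breakdown itself may give you useful ideas.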

PsyItemGenerator

Generates items for psychometric instruments to measure psychological constructs.

CHAT Social Progress

Explore social and environmental data for 169 countries to measure social progress beyond GDP. Uses data from the Social Progress Imperative and is powered by OpenAI.

Aurometer

A device which detects the power level of any entity by measuring fluctuations in "Soul Power."

BS Meter Realtime

Detects and measures information credibility. Provides a "BS Score" (0-100) based on content analysis for misinformation signs, including factual inaccuracies and sensationalist language. Real-time feedback.

Raven's Progressive Matrices Test

Provides Raven's Progressive Matrices test with explanations and calculates your IQ score.

IQ Test

IQ Test is designed to simulate an IQ testing environment. It provides a formal and objective experience, delivering questions and processing answers in a straightforward manner.

FREE How to Know What Size Nursing Bra to Get

Guidance on nursing bra sizing, with insights into how breast size changes during pregnancy, measurement instructions, and advice on choosing the right bra style and size. It interprets bust measurements and answers FAQs about nursing bras.

Moccha particle size analyzer

Expert in analyzing coffee grind particle size distribution using image processing and KDE.