Best AI tools for < Manage Server Load >

20 - AI tool Sites

OpenResty

The website appears to be displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often encountered when trying to access a webpage without proper permissions or when the server is misconfigured. The message 'openresty' suggests that the server may be using the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, commonly used for building dynamic web applications. It provides a powerful and flexible way to create web services and APIs.

OpenResty Server Manager

The website seems to be experiencing a 403 Forbidden error, which typically indicates that the server is denying access to the requested resource. This error is often caused by incorrect permissions or misconfigurations on the server side. The message 'openresty' suggests that the server may be using the OpenResty web platform. Users encountering this error may need to contact the website administrator for assistance in resolving the issue.

OpenResty Server

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' message suggests that the server is using the OpenResty web platform, which is based on NGINX and the Lua programming language. Users encountering this error may need to contact the website administrator for assistance in resolving the issue.

VoteMinecraftServers

VoteMinecraftServers is a modern Minecraft voting website that provides real-time live analytics to help users stay ahead in the game. It utilizes advanced AI and ML technologies to deliver accurate and up-to-date information. The website is free to use and offers a range of features, including premium commands for enhanced functionality. VoteMinecraftServers is committed to data security and user privacy, ensuring a safe and reliable experience.

WebServerPro

The website is a platform that provides web server hosting services. It helps users set up and manage their web servers efficiently. Users can easily deploy their websites and applications on the server, ensuring a seamless online presence. The platform offers a user-friendly interface and reliable hosting solutions to meet various needs.

502 Bad Gateway

The website seems to be experiencing technical difficulties at the moment, showing a '502 Bad Gateway' error message. This error typically occurs when a server acting as a gateway or proxy receives an invalid response from an upstream server. The 'nginx' reference in the error message indicates that the server is using the Nginx web server software. Users encountering this error may need to wait for the issue to be resolved by the website's administrators or try accessing the site at a later time.

403 Forbidden Resolver

The website is currently displaying a '403 Forbidden' error, which means that the server understood the request but refuses to authorize it. This could be due to various reasons such as insufficient permissions, server misconfiguration, or a problem with the client's request. The 'openresty' message indicates that the server is using the OpenResty web platform. It is important to troubleshoot and resolve the issue to regain access to the website.

503 Service Temporarily Unavailable

The website is currently experiencing a temporary service outage, resulting in a 503 Service Temporarily Unavailable error message. This error typically occurs when the server is unable to handle the request due to maintenance, overload, or other issues. Users may encounter this message when trying to access the website, indicating a temporary disruption in service. It is recommended to wait for the issue to be resolved by the website administrators before attempting to access the site again.

403 Forbidden

The website seems to be experiencing a 403 Forbidden error, which indicates that the server understood the request but refuses to authorize it. This error is often caused by incorrect permissions on the server or misconfigured security settings. The message 'openresty' suggests that the server may be running on the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, known for its high performance and scalability. Users encountering a 403 Forbidden error on a website may need to contact the website administrator or webmaster for assistance in resolving the issue.

403 Forbidden

The website appears to be displaying a '403 Forbidden' error message, indicating that access to the page is restricted or denied. This error is commonly encountered when the server understands the request but refuses to authorize it. The message '403 Forbidden' is a standard HTTP status code that communicates this refusal to the client. It may be due to insufficient permissions, IP blocking, or other security measures. The 'openresty' mentioned in the text is likely the software or server platform being used to host the website.
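The status codes that recur across these listings (403, 502, 503) fall into two standard classes: 4xx codes signal a problem with the request, and 5xx codes signal a problem on the server side. A minimal Python sketch of that distinction, using only the standard library's `http.HTTPStatus`; the helper name `describe` is made up for illustration:

```python
from http import HTTPStatus


def describe(code: int) -> str:
    """Return the standard reason phrase and error class for an HTTP status code."""
    status = HTTPStatus(code)  # raises ValueError for codes the library does not know
    if 400 <= code < 500:
        kind = "client error"
    elif 500 <= code < 600:
        kind = "server error"
    else:
        kind = "not an error"
    return f"{code} {status.phrase} ({kind})"


if __name__ == "__main__":
    for code in (403, 502, 503):
        print(describe(code))
```

Note that the standard phrase for 503 is "Service Unavailable"; wording such as "Service Temporarily Unavailable" is chosen by the individual server.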

Ticket AI

Ticket AI is a Discord bot that automates customer support by answering tickets with AI. It simplifies support by allowing users to upload training data, such as support documents, and then using that data to answer customer questions. Ticket AI is easy to use, with no coding experience required, and it offers features such as custom support channels, ephemeral replies, and 24/7 availability. With Ticket AI, businesses can save time and improve the efficiency of their customer support.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is often encountered when trying to access a webpage without the necessary permissions. The 'openresty' mentioned in the text is likely the software running on the server. It is a web platform based on NGINX and LuaJIT, known for its high performance and scalability in handling web traffic. The website may be using OpenResty to manage its server configurations and handle incoming requests.

503 Server Error

The website is currently experiencing a 503 Server Error, indicating that the service requested is unavailable at the moment. Users encountering this error are advised to try again in 30 seconds. The website may be undergoing maintenance or experiencing technical difficulties, leading to the temporary unavailability of the service.
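The "try again in 30 seconds" advice can be sketched as a small retry loop. This is only an illustration under stated assumptions: `fetch_with_retry` and its parameters are hypothetical names, and `fetch` stands in for whatever function actually performs the request and returns a status code and body.

```python
import time
from typing import Callable, Tuple


def fetch_with_retry(
    fetch: Callable[[], Tuple[int, str]],
    delay_seconds: float = 30.0,
    max_attempts: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Call fetch() until it stops returning 503 or the attempts run out."""
    status, body = fetch()
    for _ in range(max_attempts - 1):
        if status != 503:
            break  # success or a different error: retrying will not help
        sleep(delay_seconds)  # wait before asking the server again
        status, body = fetch()
    return status, body
```

The `sleep` parameter is injectable so the loop can be exercised without actually waiting; a client that receives a `Retry-After` header would normally prefer that value over a fixed delay.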

LiteSpeed Web Server

The website is powered by LiteSpeed Web Server, a platform that handles web server operations efficiently. Its vendor, LiteSpeed Technologies Inc., is not a web hosting company and does not have control over the content found on the site.

Server Error Handler

The website encountered a server error, preventing it from fulfilling the user's request. The error message indicates a temporary issue that may be resolved by trying again after 30 seconds.

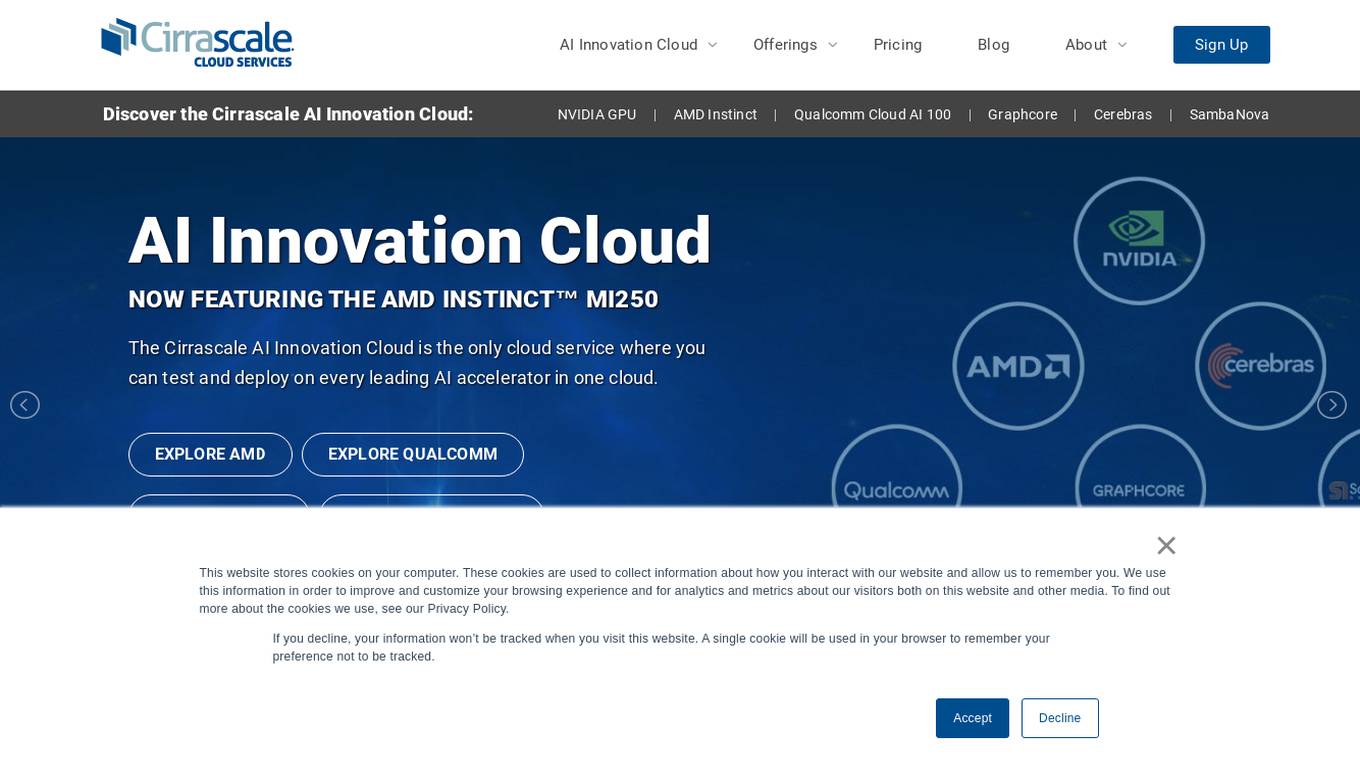

Cirrascale Cloud Services

Cirrascale Cloud Services is an AI tool that offers cloud solutions for Artificial Intelligence applications. The platform provides a range of cloud services and products tailored for AI innovation, including NVIDIA GPU Cloud, AMD Instinct Series Cloud, Qualcomm Cloud, Graphcore, Cerebras, and SambaNova. Cirrascale's AI Innovation Cloud enables users to test and deploy on leading AI accelerators in one cloud, democratizing AI by delivering high-performance AI compute and scalable deep learning solutions. The platform also offers professional and managed services, tailored multi-GPU server options, and high-throughput storage and networking solutions to accelerate development, training, and inference workloads.

WebDB

WebDB is an open-source and efficient Database IDE that focuses on providing a secure and user-friendly platform for database management. It offers features such as automatic DBMS discovery, credential guessing, a time machine for database version control, a powerful query editor with autocomplete and documentation, AI assistant integration, NoSQL structure management, intelligent data generation, and more. With a modern ERD view and support for various databases, WebDB aims to simplify database management tasks and enhance productivity for users.

Softbuilder

Softbuilder is a software development company that focuses on creating innovative database tools. Their products include ERBuilder Data Modeler, a database modeling software for high-quality data models, and AbstraLinx, a powerful metadata discovery tool for Salesforce. Softbuilder aims to provide straightforward tools that utilize the latest technology to help users be more productive and focus on delivering solutions rather than learning complicated tools.

AI Table Talk

AI Table Talk is an AI application designed to help busy restaurants manage incoming calls efficiently. It provides around-the-clock responsiveness, seamless reservation and order flow, instant information and smart suggestions, and multilingual, natural conversations. The application aims to free up staff, increase revenue, offer effortless scalability, and maintain the human touch by complementing the restaurant team.

N/A

The website seems to be experiencing technical difficulties as indicated by the error message '502 Bad Gateway'. This error typically occurs when a server acting as a gateway or proxy receives an invalid response from an upstream server. The message 'openresty' suggests that the server may be using the OpenResty web platform. Users encountering a 502 Bad Gateway error may need to wait for the issue to be resolved by the website's administrators or try accessing the site at a later time.

1 - Open Source AI Tools

paddler

Paddler is an open-source load balancer and reverse proxy designed specifically for optimizing servers running llama.cpp. It overcomes typical load balancing challenges by maintaining a stateful load balancer that is aware of each server's available slots, ensuring efficient request distribution. Paddler also supports dynamic addition or removal of servers, enabling integration with autoscaling tools.
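The idea of a stateful, slot-aware balancer can be illustrated with a toy sketch. This is not Paddler's implementation or API; the class and method names below (`Backend`, `SlotAwareBalancer`, `register`, `acquire`, `release`) are hypothetical, and the routing rule (pick the server with the most idle slots) is an assumption chosen for simplicity.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Backend:
    """Hypothetical record for one upstream server."""
    name: str
    slots_idle: int  # free processing slots currently tracked for this server


class SlotAwareBalancer:
    """Toy stateful balancer that routes to the backend with the most idle slots."""

    def __init__(self) -> None:
        self.backends: Dict[str, Backend] = {}

    def register(self, name: str, slots_idle: int) -> None:
        # Servers can be added at any time, for example by an autoscaler.
        self.backends[name] = Backend(name, slots_idle)

    def deregister(self, name: str) -> None:
        self.backends.pop(name, None)

    def acquire(self) -> Optional[str]:
        """Reserve a slot on the least-loaded backend; return None if all are busy."""
        candidates = [b for b in self.backends.values() if b.slots_idle > 0]
        if not candidates:
            return None
        best = max(candidates, key=lambda b: b.slots_idle)
        best.slots_idle -= 1
        return best.name

    def release(self, name: str) -> None:
        # Called when a request finishes; ignore servers removed in the meantime.
        if name in self.backends:
            self.backends[name].slots_idle += 1
```

Because the balancer tracks slots itself, it can decline a request (return `None`) when every server is full, rather than forwarding it to a server that would have to queue it.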

20 - OpenAI GPTs

SQL Server assistant

Expert in SQL Server for database management, optimization, and troubleshooting.

Baci's AI Server

An AI waiter for Baci Bistro & Bar, knowledgeable about the menu and ready to assist.

Software expert

Server admin expert in cPanel, Softaculous, WHM, WordPress, and Elementor Pro.

アダチさん13号(SQLServer篇)

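Ask questions about the SQL Server knowledge and know-how (SQL Server 2000/2005/2008/2012) that Koichi Adachi (安達孝一) accumulated during his time as a systems engineer. It can also create general-purpose example questions for ChatGPT (GPT-4) based on the conversation.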

BashEmulator GPT

BashEmulator GPT: A Virtualized Bash Environment for Linux Command Line Interaction. It virtualizes all network interfaces and local network