Best AI Tools for Managing Language Models

20 AI Tool Sites

Entry Point AI

Entry Point AI is a modern AI optimization platform for fine-tuning proprietary and open-source language models. It provides a user-friendly interface to manage prompts, fine-tunes, and evaluations in one place. The platform enables users to optimize models from leading providers, train across providers, work collaboratively, write templates, import/export data, share models, and avoid common pitfalls associated with fine-tuning. Entry Point AI simplifies the fine-tuning process, making it accessible to users without the need for extensive data, infrastructure, or insider knowledge.

NinjaChat

NinjaChat is an all-in-one AI platform that offers a suite of premium AI chatbots, an AI image generator, an AI music generator, and more, all integrated into a single platform. It provides access to more than nine AI apps, including popular models such as GPT-4, Claude 3, and Mixtral, as well as PDF analysis, image generation, and music composition. Users can chat with documents, generate images, and interact with multiple language models under one subscription.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Stockpulse

Stockpulse is an AI-powered platform that analyzes financial news and online financial communities. It supports operational decision-making by collecting, filtering, and converting unstructured data into processable information. With extensive coverage of financial media sources worldwide, Stockpulse offers unique historical data, sentiment analysis, and AI-driven insights for various sectors of the financial markets.

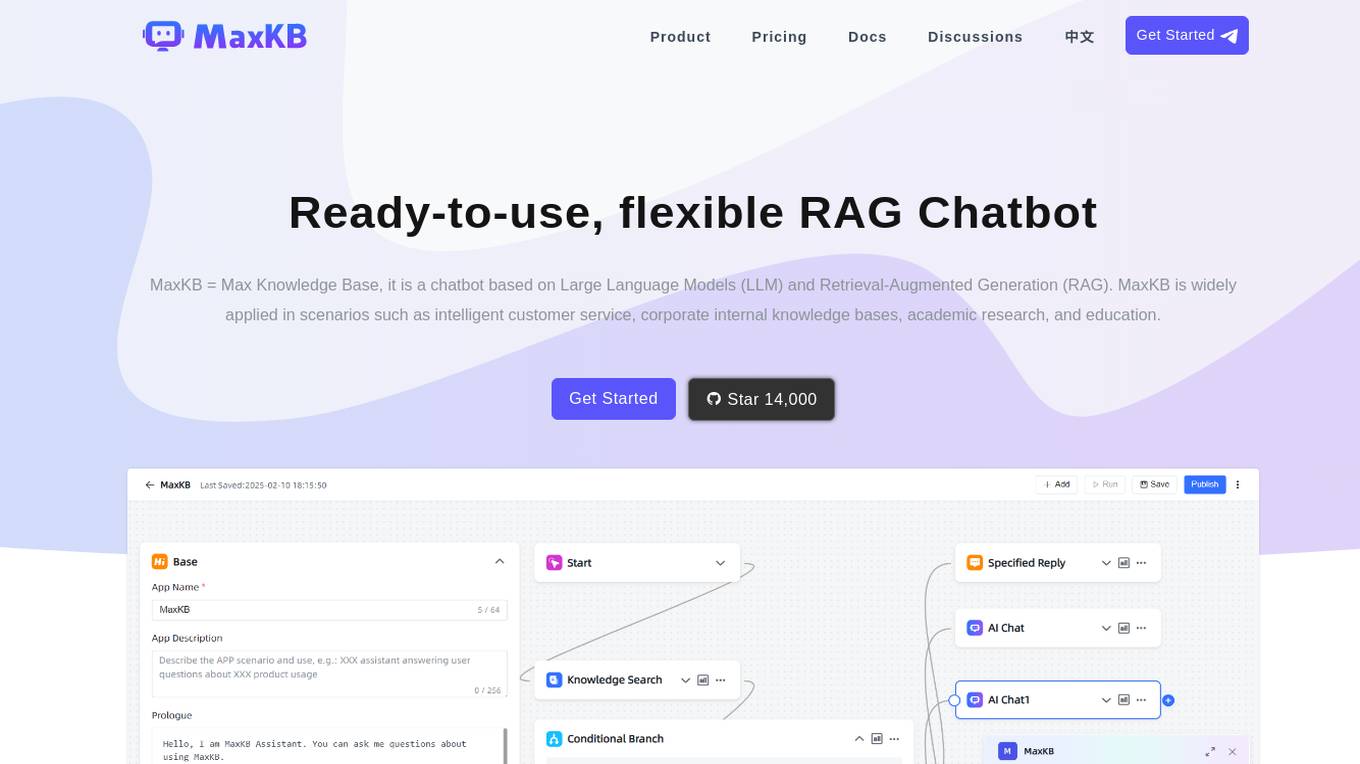

MaxKB

MaxKB is a ready-to-use, flexible chatbot built on large language models (LLMs) and retrieval-augmented generation (RAG). It is widely applied in scenarios such as intelligent customer service, corporate internal knowledge bases, academic research, and education. MaxKB supports direct document uploads, automatic crawling of online documents, automatic text splitting, vectorization, and RAG-based question answering. It also offers flexible workflow orchestration, integration into third-party systems, and support for a variety of large language models.

Spellbook

Spellbook is a comprehensive AI tool designed for commercial lawyers to review, draft, and manage legal contracts efficiently. It offers features such as redline contract review, drafting from scratch or saved libraries, quick answers to complex questions, comparing contracts to industry standards, and multi-document workflows. Spellbook is powered by OpenAI's GPT-4o and other large language models, providing accurate and reliable performance. It ensures data privacy with Zero Data Retention agreements, making it a secure and private solution for law firms and in-house legal teams worldwide.

AiFA Labs

AiFA Labs is an AI platform that offers a comprehensive suite of generative AI products and services for enterprises. The platform enables businesses to create, manage, and deploy generative AI applications responsibly and at scale. With a focus on governance, compliance, and security, AiFA Labs provides a range of AI tools to streamline business operations, enhance productivity, and drive innovation. From AI code assistance to chat interfaces and data synthesis, AiFA Labs empowers organizations to leverage the power of AI for various use cases across different industries.

Gen AI For Enterprise

Gen AI For Enterprise is an AI application that offers a secure, scalable, and customizable platform for enterprises. It provides a private chat feature, enhanced knowledge retrieval, and custom business case development. The application is trusted by various industries and empowers teams to work better by leveraging advanced AI models and APIs. It ensures robust security, compliance with industry standards, and simplified user management. Gen AI For Enterprise aims to transform businesses by providing innovative AI solutions.

Dust

Dust is a customizable and secure AI assistant platform that helps businesses amplify their teams' potential. It allows companies to deploy leading large language models, connect Dust to their team's data, and give teams assistants tailored to their specific needs. Dust is highly modular and adapts to each organization's requirements as they change. It supports multiple data sources and models, including proprietary and open-source models from OpenAI, Anthropic, and Mistral. Dust also helps businesses identify their most creative and driven team members and spread those members' experience with AI throughout the company. It promotes collaboration through shared conversations, @mentions in discussions, and Slackbot integration. Dust prioritizes security and data privacy, keeping data private and providing enterprise-grade controls for managing data access policies.

ModelOp

ModelOp is the leading AI Governance software for enterprises, providing a single source of truth for all AI systems, automated process workflows, real-time insights, and integrations to extend the value of existing technology investments. It helps organizations safeguard AI initiatives without stifling innovation, ensuring compliance, accelerating innovation, and improving key performance indicators. ModelOp supports generative AI, Large Language Models (LLMs), in-house, third-party vendor, and embedded systems. The software enables visibility, accountability, risk tiering, systemic tracking, enforceable controls, workflow automation, reporting, and rapid establishment of AI governance.

Trieve

Trieve is an AI-first infrastructure API that offers search, recommendations, and RAG capabilities by combining language models with tools for fine-tuning ranking and relevance. It helps companies build unfair competitive advantages through their discovery experiences, powering over 30,000 discovery experiences across various categories. Trieve supports semantic vector search, BM25 & SPLADE full-text search, hybrid search, merchandising & relevance tuning, and sub-sentence highlighting. The platform is built on open-source models, ensuring data privacy, and offers self-hostable options for sensitive data and maximum performance.

Milo

Milo is an AI-powered co-pilot for parents, designed to help them manage the chaos of family life. It uses the GPT-4 large language model to sort and organize information, send reminders, and provide updates. Milo is designed to be accurate and to solve complex problems, and it improves based on user feedback. It can handle tasks such as adding items to a grocery list, giving updates on the week's schedule, and processing screenshots of birthday invitations that users send it.

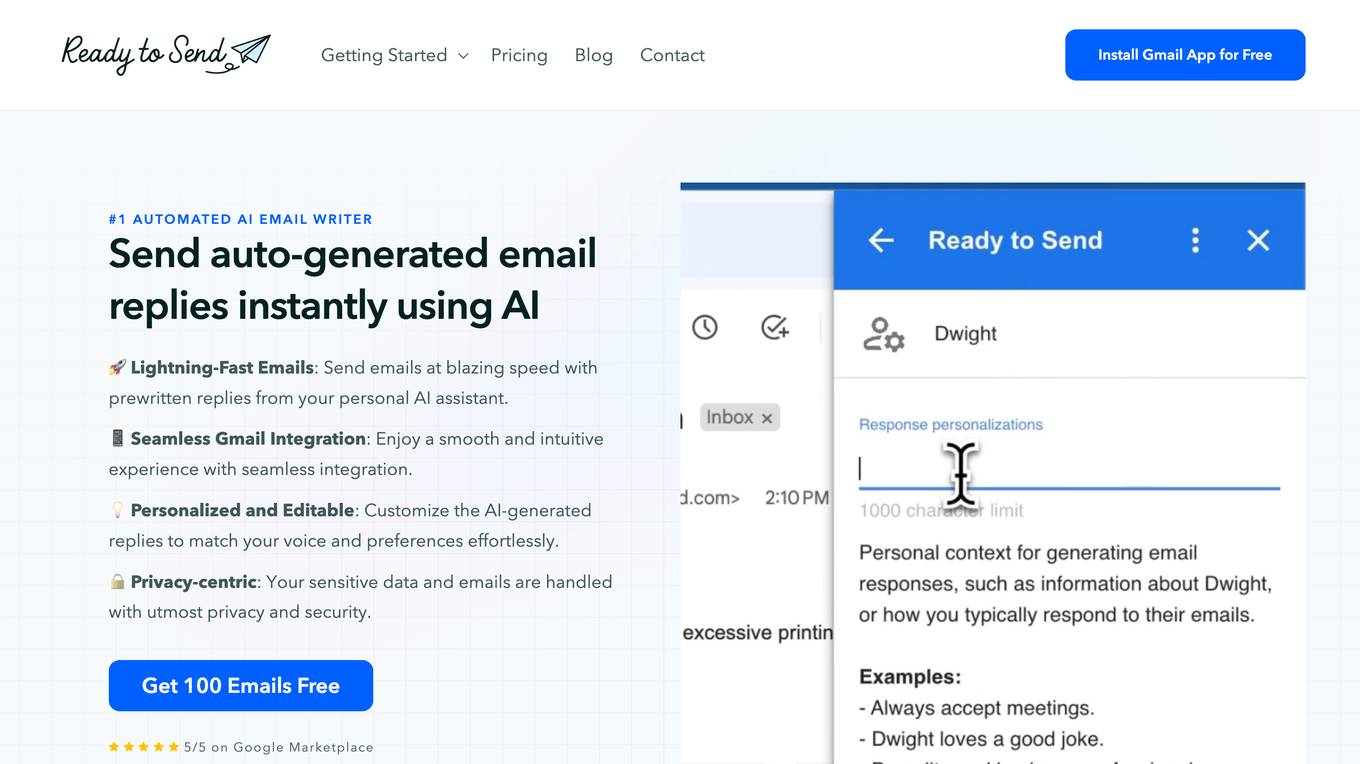

Ready to Send

Ready to Send is an AI-powered Gmail assistant that automates the process of generating personalized email responses. It seamlessly integrates with Gmail to provide lightning-fast email replies, personalized and editable responses, and privacy-centric handling of sensitive data. The application leverages AI technology to craft contextual responses in the user's voice, transforming inbox management from a chore to a delight. With support for multiple languages and advanced language models, Ready to Send offers a secure and efficient solution for enhancing email productivity.

Azure AI Platform

Azure AI Platform by Microsoft offers a comprehensive suite of artificial intelligence services and tools for developers and businesses. It provides a unified platform for building, training, and deploying AI models, as well as integrating AI capabilities into applications. With a focus on generative AI, multimodal models, and large language models, Azure AI empowers users to create innovative AI-driven solutions across various industries. The platform also emphasizes content safety, scalability, and agility in managing AI projects, making it a valuable resource for organizations looking to leverage AI technologies.

Fleak AI Workflows

Fleak AI Workflows is a low-code, serverless API builder designed for data teams to integrate, consolidate, and scale their data workflows. It lets users create, connect, and deploy workflows in minutes, with intuitive tools for handling data transformations and integrating AI models. Fleak enables users to publish, manage, and monitor APIs without managing any infrastructure. It supports data formats such as JSON, SQL, CSV, and plain text, and integrates with large language models, databases, and modern storage technologies.

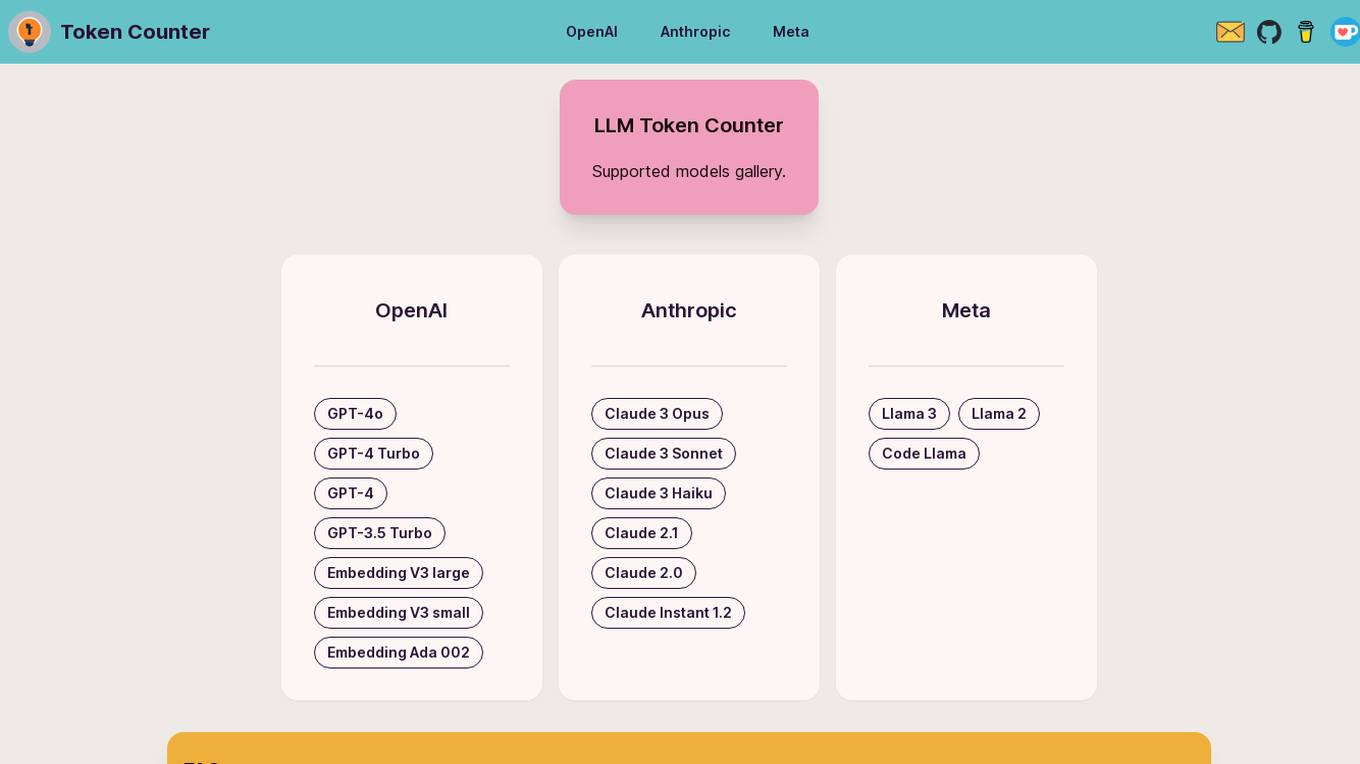

LLM Token Counter

The LLM Token Counter is a tool designed to help users manage token limits for various large language models (LLMs) such as GPT-3.5, GPT-4, Claude-3, Llama-3, and more. It uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library, to calculate token counts client-side. Because prompts are never transmitted to external servers, the tool preserves data privacy.

LexEdge

LexEdge is an AI-powered legal practice management solution that revolutionizes how legal professionals handle their responsibilities. It offers advanced features like case tracking, client communications, AI chatbot assistance, document automation, task management, and detailed reporting and analytics. LexEdge enhances productivity, accuracy, and client satisfaction by leveraging technologies such as AI, large language models (LLM), retrieval-augmented generation (RAG), fine-tuning, and custom model training. It caters to solo practitioners, small and large law firms, and corporate legal departments, providing tailored solutions to meet their unique needs.

Stack Spaces

Stack Spaces is an intelligent all-in-one workspace designed to boost productivity by providing a central workspace and dashboard for product development. It offers a platform to manage knowledge, tasks, documents, and schedules in an organized, centralized, and simplified way. The application integrates GPT-4 to tailor the workspace to each user, letting users take advantage of large language models and customizable widgets. Users can centralize all their apps and tools, ask questions, and run intelligent searches to find relevant answers and insights. Stack Spaces aims to streamline workflows, eliminate context-switching, and improve efficiency.

Athina AI

Athina AI is a comprehensive platform designed to monitor, debug, analyze, and improve the performance of Large Language Models (LLMs) in production environments. It provides a suite of tools and features that enable users to detect and fix hallucinations, evaluate output quality, analyze usage patterns, and optimize prompt management. Athina AI supports integration with various LLMs and offers a range of evaluation metrics, including context relevancy, harmfulness, summarization accuracy, and custom evaluations. It also provides a self-hosted solution for complete privacy and control, a GraphQL API for programmatic access to logs and evaluations, and support for multiple users and teams. Athina AI's mission is to empower organizations to harness the full potential of LLMs by ensuring their reliability, accuracy, and alignment with business objectives.

Olympia

Olympia is an AI-powered consultancy platform that offers smart and affordable AI consultants to help businesses with tasks such as business strategy, online marketing, content generation, legal advice, software development, and sales. The platform features continuous learning, real-time research, email integration, vision capabilities, and more. Olympia aims to streamline operations, reduce expenses, and boost productivity for startups, small businesses, and solopreneurs by providing expert AI teams powered by advanced language models such as GPT-4 and Claude 3. The platform offers secure communication, no rate limits, long-term memory, and outbound email capabilities.

2 Open-Source AI Tools

ChatOpsLLM

ChatOpsLLM is a project designed to empower chatbots with effortless DevOps capabilities. It provides an intuitive interface and streamlined workflows for managing and scaling language models. The project incorporates robust MLOps practices, including CI/CD pipelines with Jenkins and Ansible, monitoring with Prometheus and Grafana, and centralized logging with the ELK stack. Developers can find detailed documentation and instructions on the project's website.

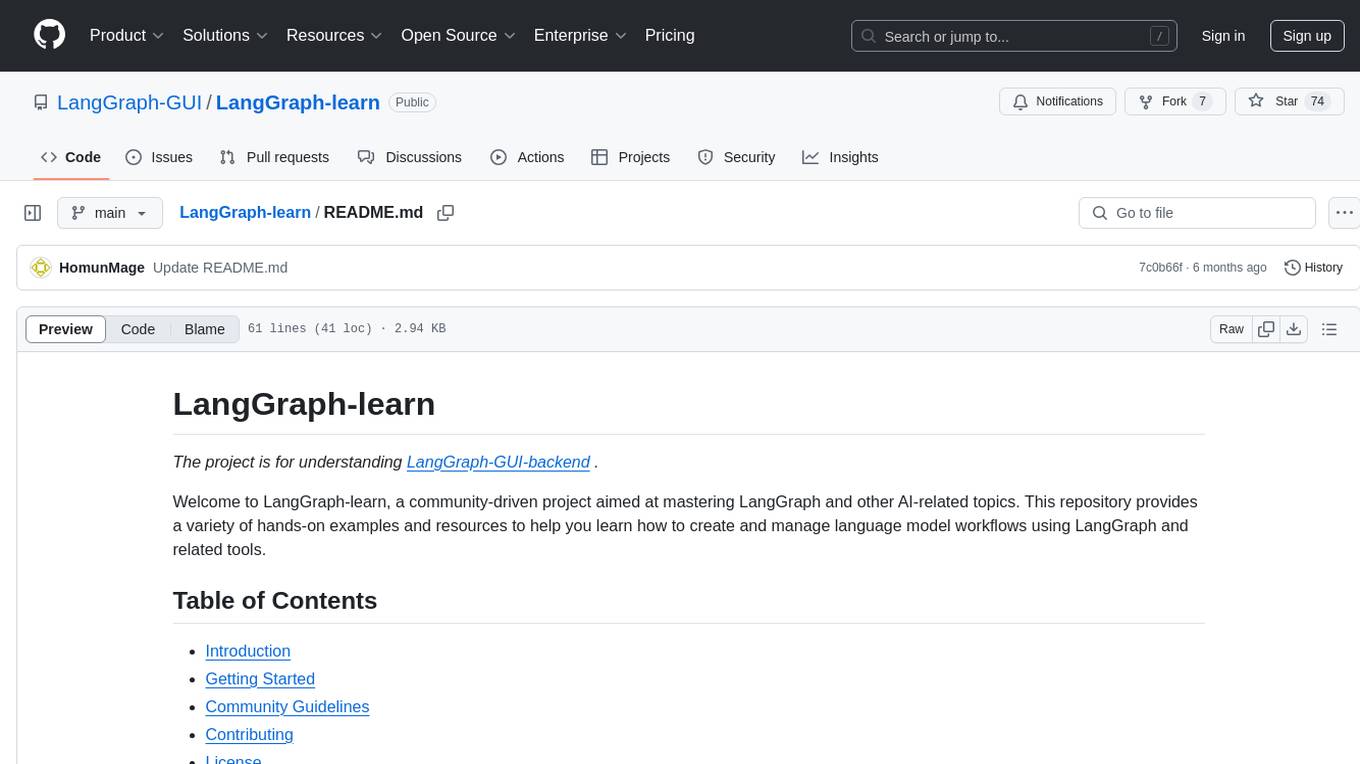

LangGraph-learn

LangGraph-learn is a community-driven project focused on mastering LangGraph and other AI-related topics. It provides hands-on examples and resources to help users learn how to create and manage language model workflows using LangGraph and related tools. The project aims to foster a collaborative learning environment for individuals interested in AI and machine learning by offering practical examples and tutorials on building efficient and reusable workflows involving language models.

20 OpenAI GPTs

Instructor GCP ML

A tutor for the GCP ML Engineer certification, with detailed answers and explanations.

PROMPT for Brands GPT

Helps you learn to work better and faster with language models, drawing on lessons from PROMPT for Brands (https://prompt.mba/).

GPT Architect

Expert in designing GPT models and translating user needs into technical specs.

GPT Designer

A creative aide for designing new GPT models, skilled in ideation and prompting.

HuggingFace Helper

A witty yet succinct guide to Hugging Face that offers technical help with using the platform, based on its Learning Hub.

Enough

As the smallest language model (SLM) chatbot in existence, Enough responds with only one word.