Best AI tools for < Manage Kubernetes Cluster >

20 - AI tool Sites

Framer

Framer is a design tool used for creating interactive prototypes and designs. It allows users to visually design interfaces, interactions, and animations. With Framer, designers and developers can collaborate seamlessly to bring their ideas to life. The tool provides a range of features to streamline the design process and enhance creativity.

KubeHelper

KubeHelper is an AI-powered tool designed to reduce Kubernetes downtime by providing troubleshooting solutions and command searches. It seamlessly integrates with Slack, allowing users to interact with their Kubernetes cluster in plain English without the need to remember complex commands. With features like troubleshooting steps, command search, infrastructure management, scaling capabilities, and service disruption detection, KubeHelper aims to simplify Kubernetes operations and enhance system reliability.

Rafay

Rafay is an AI-powered platform that accelerates cloud-native and AI/ML initiatives for enterprises. It provides automation for Kubernetes clusters, cloud cost optimization, and AI workbenches as a service. Rafay enables platform teams to focus on innovation by automating self-service cloud infrastructure workflows.

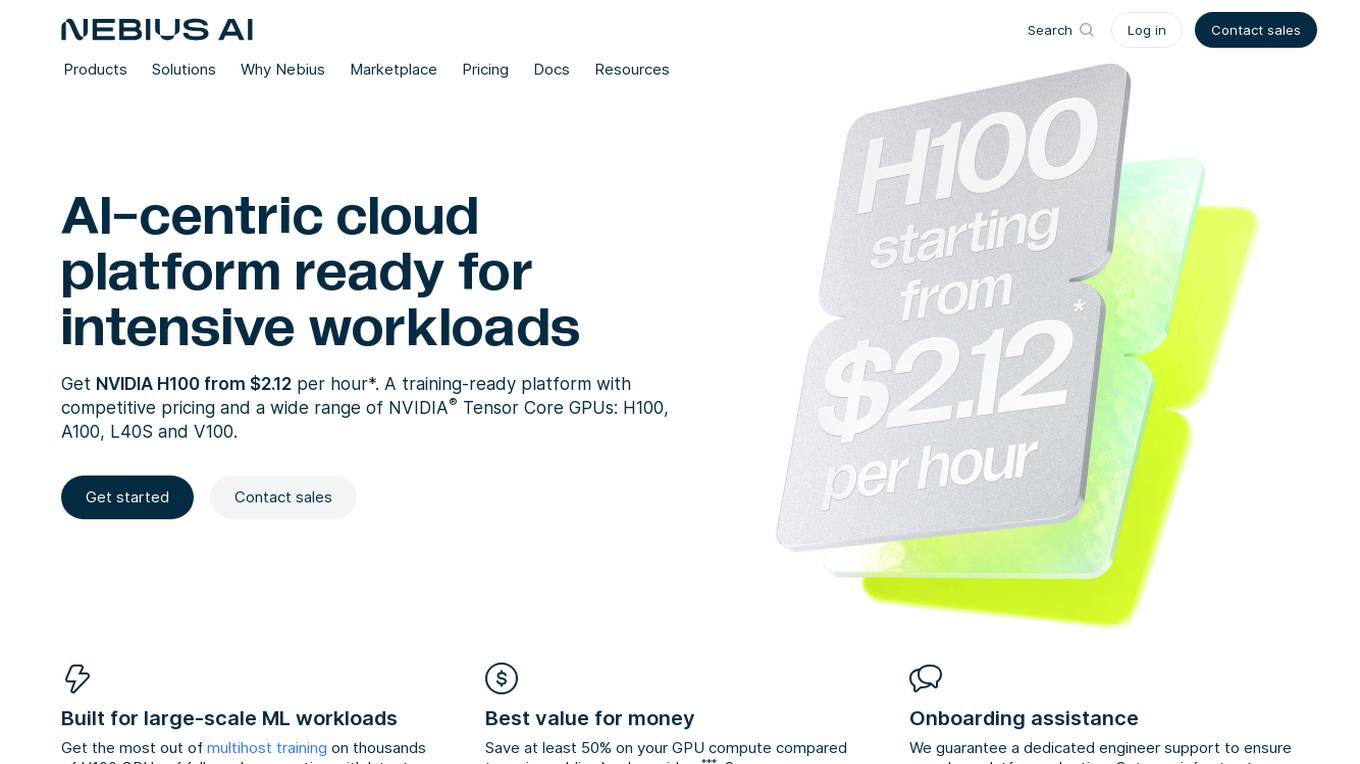

Nebius AI

Nebius AI is an AI-centric cloud platform designed to handle intensive workloads efficiently. It offers a range of advanced features to support various AI applications and projects. The platform ensures high performance and security for users, enabling them to leverage AI technology effectively in their work. With Nebius AI, users can access cutting-edge AI tools and resources to enhance their projects and streamline their workflows.

Nebius

Nebius is the ultimate cloud for AI explorers, designed to democratize AI infrastructure and empower builders everywhere. It offers flexible architecture to seamlessly scale AI from a single GPU to pre-optimized clusters with thousands of NVIDIA GPUs. Nebius is engineered for demanding AI workloads, integrating NVIDIA GPU accelerators, high-performance InfiniBand, and Kubernetes or Slurm orchestration for peak efficiency. The platform provides long-term value by optimizing every layer of the stack, delivering substantial customer value over competitors.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

Lambda Docs

Lambda Docs is an AI tool that provides cloud and hardware solutions for individuals, teams, and organizations. It offers services such as Managed Kubernetes, Preinstalled Kubernetes, Slurm, and access to GPU clusters. The platform also provides educational resources and tutorials for machine learning engineers and researchers to fine-tune models and deploy AI solutions.

Webb.ai

Webb.ai is an AI-powered platform that offers automated troubleshooting for Kubernetes. It is designed to assist users in identifying and resolving issues within their Kubernetes environment efficiently. By leveraging AI technology, Webb.ai provides insights and recommendations to streamline the troubleshooting process, ultimately improving system reliability and performance. The platform is user-friendly and caters to both beginners and experienced users in the field of Kubernetes management.

Kubeflow

Kubeflow is an open-source machine learning (ML) toolkit that makes deploying ML workflows on Kubernetes simple, portable, and scalable. It provides a unified interface for model training, serving, and hyperparameter tuning, and supports a variety of popular ML frameworks including PyTorch, TensorFlow, and XGBoost. Kubeflow is designed to be used with Kubernetes, a container orchestration system that automates the deployment, management, and scaling of containerized applications.

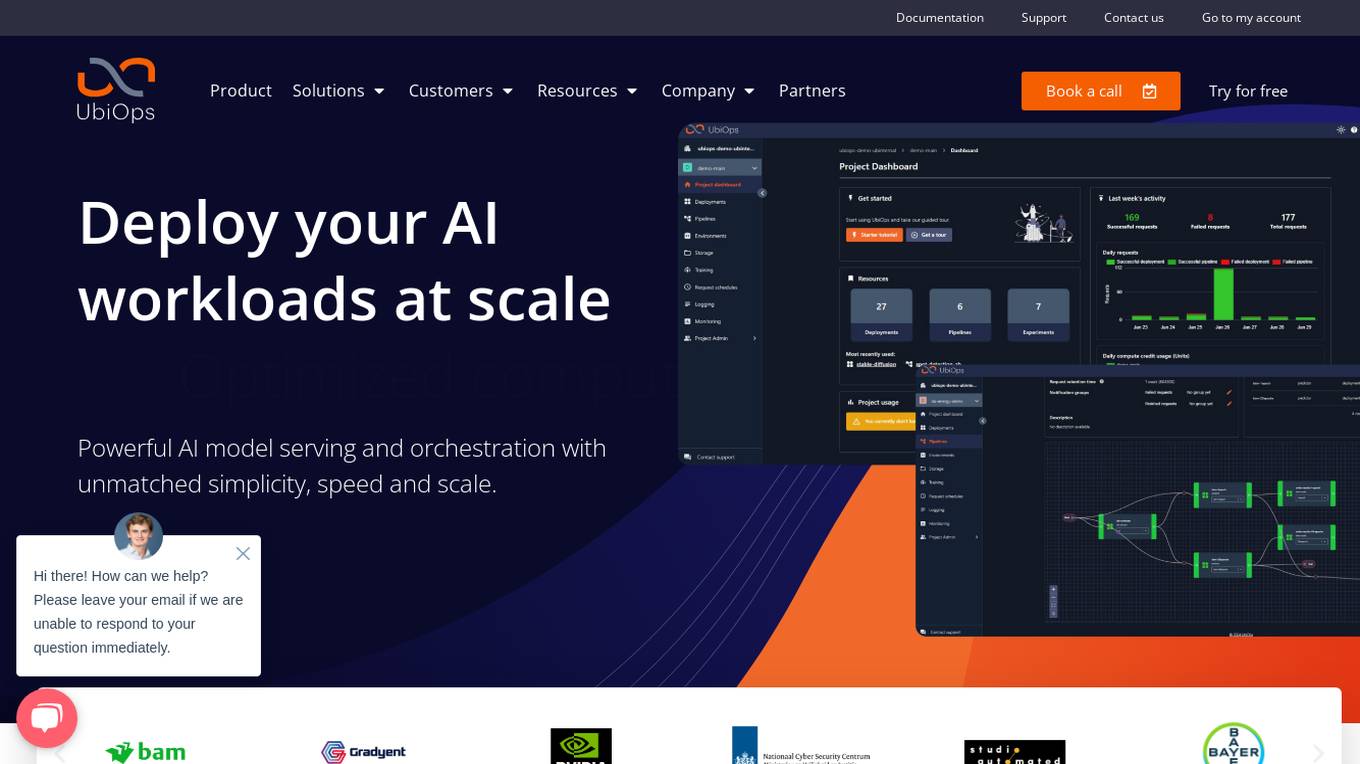

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI & ML workloads as reliable and secure microservices. It offers powerful AI model serving and orchestration with unmatched simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications with a unique workflow management system.

Operant

Operant is a cloud-native runtime protection platform that offers instant visibility and control from infrastructure to APIs. It provides an AI security shield for applications, API threat protection, Kubernetes security, automatic microsegmentation, and DevSecOps solutions. Operant helps defend APIs, protect Kubernetes, and shield AI applications by detecting and blocking various attacks in real time. It simplifies security for cloud-native environments with zero instrumentation, application code changes, or integrations.

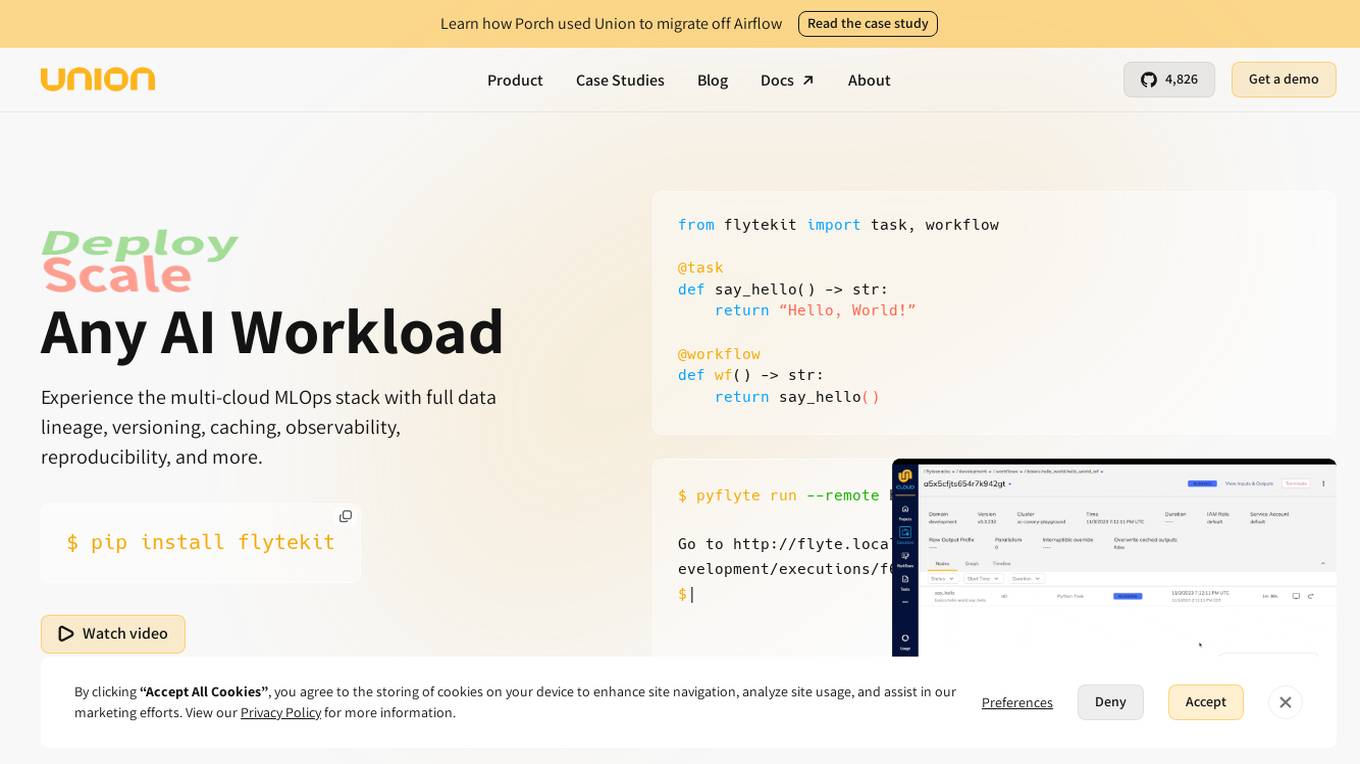

Union.ai

Union.ai is an infrastructure platform designed for AI, ML, and data workloads. It offers a scalable MLOps platform that optimizes resources, reduces costs, and fosters collaboration among team members. Union.ai provides features such as declarative infrastructure, data lineage tracking, accelerated datasets, and more to streamline AI orchestration on Kubernetes. It aims to simplify the management of AI, ML, and data workflows in production environments by addressing complexities and offering cost-effective strategies.

PrimeOrbit

PrimeOrbit is an AI-driven cloud cost optimization platform designed to empower operations and boost ROI for enterprises. The platform focuses on streamlining operations and simplifying cost management by delivering quality-centric solutions. It offers AI-driven optimization recommendations, automated cost allocation, and tailored FinOps for optimal efficiency and control. PrimeOrbit stands out by providing a user-centric approach, superior AI recommendations, customization, and flexible enterprise workflows. It supports major cloud providers including AWS, Azure, and GCP, with full support for GCP and Kubernetes coming soon. The platform ensures complete cost allocation across cloud resources, empowering decision-makers to optimize cloud spending efficiently and effectively.

Microtica

Microtica is an AI-powered cloud delivery platform that offers a comprehensive suite of DevOps tools to help users build, deploy, and optimize their infrastructure efficiently. With features like AI Incident Investigator, AI Infrastructure Builder, Kubernetes deployment simplification, alert monitoring, pipeline automation, and cloud monitoring, Microtica aims to streamline the development and management processes for DevOps teams. The platform provides real-time insights, cost optimization suggestions, and guided deployments, making it a valuable tool for businesses looking to enhance their cloud infrastructure operations.

Lacework

Lacework is a cloud security platform that provides comprehensive security solutions for DevOps, containers, and cloud environments. It offers features such as Code Security, Workload Protection, Identities and Entitlements management, Posture Management, Kubernetes Security, Data Posture Management, Infrastructure as Code security, Software Composition Analysis, Application Security Testing, and Edge Security. Lacework empowers users to secure their entire cloud infrastructure, prioritize risks, protect workloads, and stay compliant by leveraging AI-driven technologies and behavior-based threat detection. The platform helps automate compliance reporting, fix vulnerabilities, and reduce alerts, ultimately enhancing cloud security and operational efficiency.

SocialBee

SocialBee is an AI-powered social media management tool that helps businesses and individuals manage their social media accounts efficiently. It offers a range of features, including content creation, scheduling, analytics, and collaboration, to help users plan, create, and publish engaging social media content. SocialBee also provides insights into social media performance, allowing users to track their progress and make data-driven decisions.

Height

Height is an autonomous project management tool designed for teams involved in designing and building projects. It automates manual tasks to provide space for collaborative work, focusing on backlog upkeep, spec updates, and bug triage. With project intelligence and collaboration features, Height offers a customizable workspace with autonomous capabilities to streamline project management. Users can discuss projects in context and benefit from an AI assistant for creating better stories. The tool aims to revolutionize project management by offloading routine tasks to an intelligent system.

Moning

Moning is a platform designed to help users manage and boost their wealth easily. It provides tools for a global view of wealth, making better investment decisions, avoiding costly mistakes, and increasing performance. With features like AI Analysis, Dividends calendar, and Dividend and Growth Safety Scores, Moning offers a mix of Human & Artificial Intelligence to enhance investment knowledge and decision-making. Users can track and manage their wealth through a comprehensive dashboard, access detailed information on stocks, ETFs, and cryptos, and benefit from quick screeners to find the best investment opportunities.

Legitt AI

Legitt AI is an AI-powered Contract Lifecycle Management platform that offers a comprehensive solution for managing contracts at scale. It combines automation and intelligence to revolutionize contract management, ensuring efficiency, accuracy, and compliance with legal standards. The platform streamlines contract creation, signing, tracking, and management processes by embedding intelligence in every step. Legitt AI enhances contract review processes, contract tracking, and contract intelligence at scale, providing users with insights, recommendations, and automated workflows. With robust security measures, scalable infrastructure, and integrations with popular business tools, Legitt AI empowers businesses to manage contracts with precision and efficiency.

Social Places

Social Places is a leading franchise marketing agency that provides a suite of tools to help businesses with multiple locations manage their online presence. The platform includes tools for managing listings, reputation, social media, ads, and bookings. Social Places also offers a conversational AI chatbot and a custom feedback form builder.

0 - Open Source AI Tools

20 - OpenAI Gpts

BASHer GPT || Your Bash & Linux Shell Tutor!

Adaptive and clear Bash guide with command execution. Learn by poking around in the code interpreter's isolated Kubernetes container!

FODMAPs Dietician

Dietician that helps those with IBS manage their symptoms via FODMAPs. FODMAP stands for fermentable oligosaccharides, disaccharides, monosaccharides and polyols. These are the chemical names of 5 naturally occurring sugars that are not well absorbed by your small intestine.

Cognitive Behavioral Coach

Provides cognitive-behavioral and emotional therapy guidance, helping users understand and manage their thoughts, behaviors, and emotions.

1ACulma - Management Coach

Cross-cultural management. Useful for those who relocate to another country or manage cross-cultural teams.

Finance Butler(ファイナンス・バトラー)

I manage finances securely with encryption and user authentication.

GroceriesGPT

I manage your grocery lists to help you stay organized. *1/ Tell me what to add to a list. 2/ Ask me to add all ingredients for a recipe. 3/ Upload a receipt to remove items from your lists. 4/ Add an item by simply uploading a picture. 5/ Ask me what items I would recommend you add to your lists.*

Family Legacy Assistant

Helps users manage and preserve family heirlooms with empathy and practical advice.

AI Home Doctor (Guided Care)

Give me your symptoms and I will provide instructions for how to manage your illness.

MixerBox ChatGSlide

Your AI Google Slides assistant! Effortlessly locate, manage, and summarize your presentations!