Best AI Tools to Manage Data Permissions

20 AI Tool Sites

SID

SID is a data ingestion, storage, and retrieval pipeline that provides real-time context for AI applications. It connects to various data sources, handles authentication and permission flows, and keeps information up-to-date. SID's API allows developers to retrieve the right piece of data for a given task, enabling them to build AI apps that are fast, accurate, and scalable. With SID, developers can focus on building their products and leave the data management to SID.

Credal

Credal is an AI tool that allows users to build secure AI assistants for enterprise operations. It enables every employee to create customized AI assistants with built-in security, permissions, and compliance features. Credal supports data integration, access control, search functionalities, and API development. The platform offers real-time sync, automatic permissions synchronization, and AI model deployment with security and compliance measures. It helps enterprises manage ETL pipelines, schedule tasks, and configure data processing. Credal ensures data protection, compliance with regulations like HIPAA, and comprehensive audit capabilities for generative AI applications.

Blaze

Blaze is a no-code platform that enables teams to build web applications and internal tools without writing code. It offers a variety of features, including a visual creator, prebuilt integrations, user permissions, and enterprise security. Blaze is trusted by Fortune 500 companies and healthcare organizations and is HIPAA compliant.

Superblocks

Superblocks is an AI-powered platform that unites engineers, business teams, and IT professionals to collaboratively build secure internal applications. The platform offers features such as generating apps with AI, visual editing, extending with code, centralized governance, integrations, authentication, permissions, and audit logs. Superblocks aims to enable enterprises to accelerate app development, streamline workflows, and ensure data security within their VPC.

Airtable

Airtable is a next-gen app-building platform that enables teams to create custom business apps without the need for coding. It offers features like AI integration, connected data, automations, interface design, and data visualization. Airtable allows users to manage security, permissions, and data protection at scale. The platform also provides integrations with popular tools like Slack, Google Drive, and Salesforce, along with an extension marketplace for additional templates and apps. Users can streamline workflows, automate processes, and gain insights through reporting and analytics.

Amplication

Amplication is an AI-powered platform for .NET and Node.js app development that bills itself as the world's fastest way to build backend services. It provides developers with customizable, production-ready backend services without vendor lock-in. Users can define data models, extend and customize with plugins, generate boilerplate code, and modify the generated code freely. The platform supports role-based access control, microservices architecture, continuous Git sync, and automated deployment. Amplication is SOC 2 certified, supporting data security and compliance.

GitBook

GitBook is a knowledge management platform that helps engineering teams centralize, access, and add to their technical knowledge in the tools they use every day. With GitBook, teams can capture knowledge from conversations, code, and meetings, and turn it into useful, readable documentation. GitBook also offers a variety of features to help teams collaborate on documentation, including a branch-based workflow, real-time editing, and user permissions.

Globalese by memoQ

Globalese by memoQ is a robust platform for training AI-powered custom machine translation models. It lets enterprises and language service providers create high-quality translation engines from their own data, tailored to their specific needs. The platform integrates with popular translation management systems and offers an API for workflow integration. With features like custom prompts, advanced tag handling, translation tool integration, support for over 130 language combinations, granular permissions, and flexible deployment options, Globalese by memoQ streamlines the translation process with AI-powered custom models.

Velotix

Velotix is an AI-powered data security platform that offers groundbreaking visual data security solutions to help organizations discover, visualize, and use their data securely and compliantly. The platform provides features such as data discovery, permission discovery, self-serve data access, policy-based access control, AI recommendations, and automated policy management. Velotix aims to empower enterprises with smart and compliant data access controls, ensuring data integrity and compliance. The platform helps organizations gain data visibility, control access, and enforce policy compliance, ultimately enhancing data security and governance.

Qypt AI

Qypt AI is an advanced tool designed to elevate privacy and empower security through secure file sharing and collaboration. It offers end-to-end encryption, AI-powered redaction, and privacy-preserving queries to ensure confidential information remains protected. With features like zero-trust collaboration and client confidentiality, Qypt AI is built by security experts to provide a secure platform for sharing sensitive data. Users can easily set up the tool, define sharing permissions, and invite collaborators to review documents while maintaining control over access. Qypt AI is a cutting-edge solution for individuals and businesses looking to safeguard their data and prevent information leaks.

Glean

Glean is an AI-powered work assistant and enterprise search platform that enables teams to harness generative AI to make better decisions faster. It connects all company data, provides advanced personalization, and retrieves the most relevant information. Glean offers responsible AI solutions that scale with businesses, respecting permissions and providing secure, private, and fully referenceable answers. With turnkey deployment and a variety of platform tools, Glean helps teams move faster and be more productive.

Venice

Venice is a permissionless AI application that offers an alternative to popular AI apps by prioritizing user privacy and delivering uncensored, unbiased machine intelligence. It utilizes leading open-source AI technology to ensure privacy while providing intelligent responses. Venice aims to empower users with open intelligence and a censorship-free experience.

Hatchet

Hatchet is an AI companion designed to assist on-call engineers in incident response by providing intelligent insights and suggestions based on logs, communication channels, and code analysis. It helps save time and money by automating triage and investigation during critical incidents. The tool is built by engineers with a focus on data security, offering self-hosted deployments, permissions, audit trails, SSO, and version control. Hatchet aims to streamline incident resolution for tier-1 services, enabling faster response and helping engineers resolve problems.

Knostic AI

Knostic AI is an AI application that focuses on Copilot Readiness for Enterprise AI Security. It helps organizations locate and remediate data leaks from AI searches, ensuring data security and compliance. Knostic offers solutions to prevent data leakage, map knowledge boundaries, recommend permission adjustments, and provide independent verification of security posture readiness for AI adoption.

500 supabaseUrl

500 supabaseUrl is a cloud-based database service that provides a fully managed, scalable, and secure way to store and manage data. It is designed to be easy to use, with a simple, intuitive interface for creating, managing, and querying databases. It is also built to scale to demanding workloads, and because it is fully managed, you don't have to worry about the underlying infrastructure or maintenance tasks.

One Data

One Data is an AI-powered data product builder that offers a comprehensive solution for building, managing, and sharing data products. It bridges the gap between IT and business by providing AI-powered workflows, lifecycle management, data quality assurance, and data governance features. The platform enables users to easily create, access, and share data products with automated processes and quality alerts. One Data is trusted by enterprises and aims to streamline data product management and accessibility through Data Mesh or Data Fabric approaches, enhancing efficiency in logistics and supply chains. The application is designed to accelerate business impact with reliable data products and support cost reduction initiatives with advanced analytics and collaboration for innovative business models.

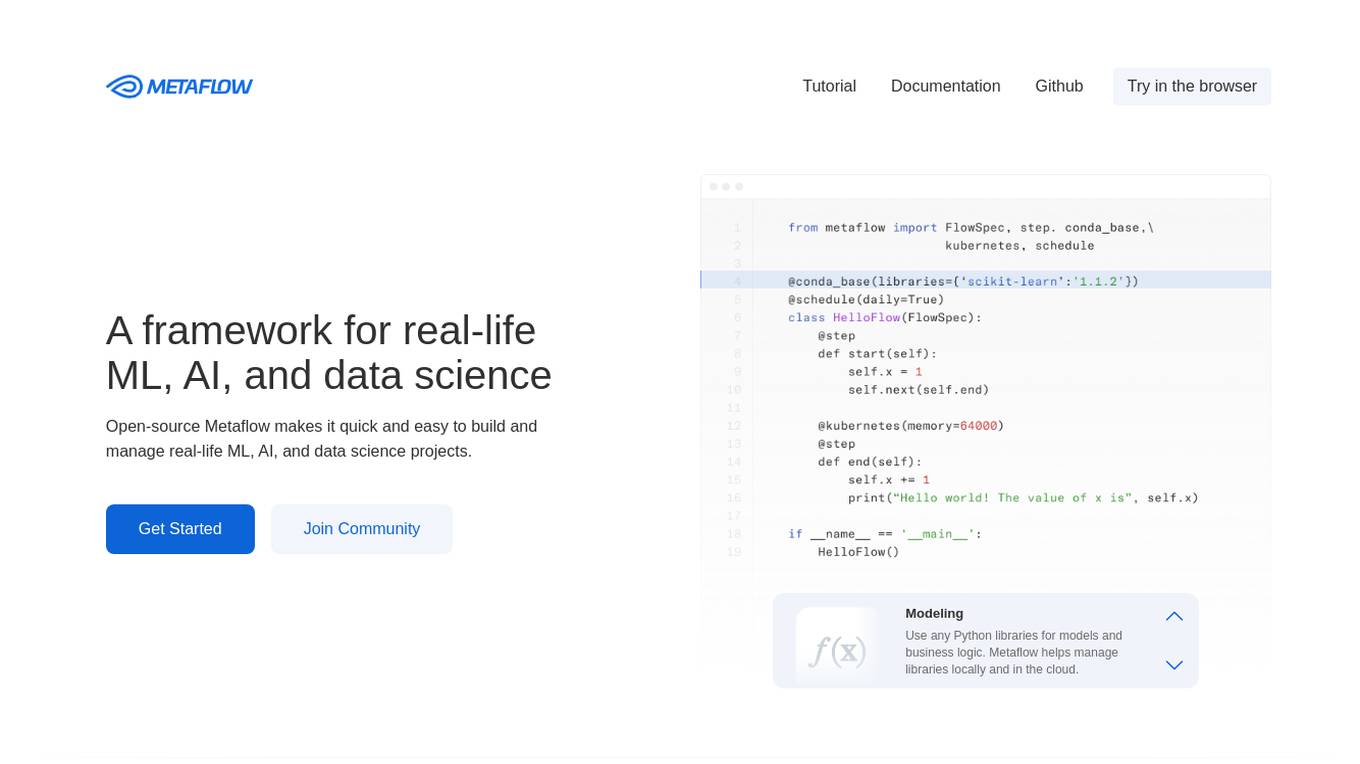

Metaflow

Metaflow is an open-source framework for building and managing real-life ML, AI, and data science projects. It makes it easy to use any Python libraries for models and business logic, deploy workflows to production with a single command, track and store variables inside the flow automatically for easy experiment tracking and debugging, and create robust workflows in plain Python. Metaflow is used by hundreds of companies, including Netflix, 23andMe, and Realtor.com.

Dot Group Data Advisory

Dot Group is an AI-powered data advisory and solutions platform that specializes in effective data management. They offer services to help businesses maximize the potential of their data estate, turning complex challenges into profitable opportunities using AI technologies. With a focus on data strategy, data engineering, and data transport, Dot Group provides innovative solutions to drive better profitability for their clients.

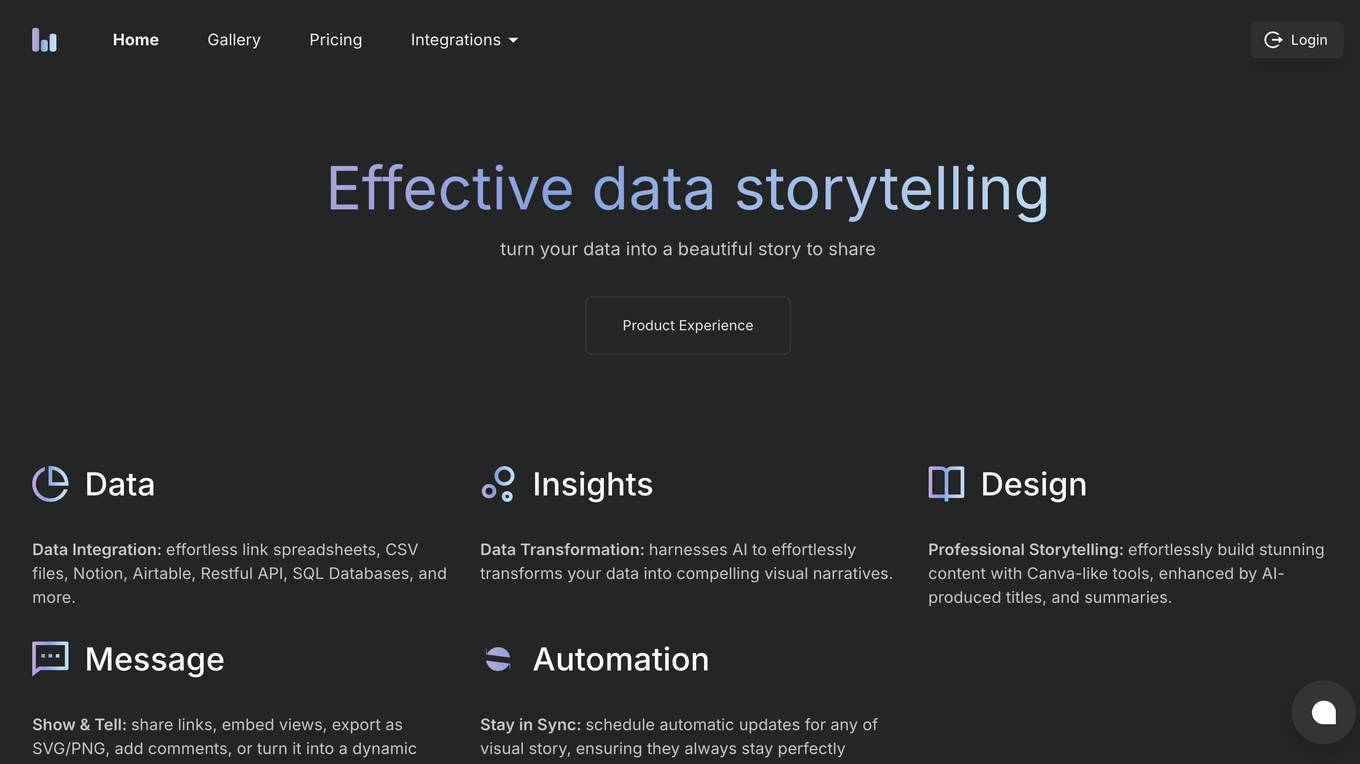

Columns

Columns is an AI tool that automates data storytelling. It enables users to create compelling narratives and visualizations from their data without the need for manual intervention. With Columns, users can easily transform raw data into engaging stories, making data analysis more accessible and impactful. The tool leverages advanced algorithms to analyze data sets, identify patterns, and generate insights that can be presented in a visually appealing format. Columns streamlines the process of data storytelling, saving users time and effort while enhancing the effectiveness of their data-driven communication.

Walter Shields Data Academy

Walter Shields Data Academy is an AI-powered platform offering premium training in SQL, Python, and Excel. With over 200,000 learners, it provides curated courses from bestselling books and LinkedIn Learning. The academy aims to revolutionize data expertise and empower individuals to excel in data analysis and AI technologies.

0 Open Source AI Tools

20 OpenAI GPTs

Auth Guide - Authentication & Authorization Expert

Detailed, step-by-step authentication & authorization guide for programmers, with code examples.

👑 Data Privacy for PI & Security Firms 👑

Private Investigators and Security Firms, given the nature of their work, handle highly sensitive information and must maintain strict confidentiality and data privacy standards.

👑 Data Privacy for Watch & Jewelry Designers 👑

Watchmakers and Jewelry Designers are high-end businesses that deal with valuable items and clients' personal details, making data privacy and security paramount.

👑 Data Privacy for Event Management 👑

Event Management and Ticketing Services handle personal data such as names, contact details, and payment information for event registrations and ticket purchases.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Data Governance Advisor

Helps ensure data accuracy, consistency, and security across an organization.

👑 Data Privacy for Home Inspection & Appraisal 👑

Home Inspection and Appraisal Services have access to personal property and related information, requiring them to be vigilant about data privacy.

👑 Data Privacy for Freelancers & Independents 👑

Freelancers and Independent Consultants often handle client data, project specifics, and personal contact information, so they must be vigilant about data privacy.

👑 Data Privacy for Architecture & Construction 👑

Architecture and Construction Firms handle sensitive project data, client information, and architectural plans, necessitating strict data privacy measures.

👑 Data Privacy for Real Estate Agencies 👑

Real Estate Agencies and Brokers deal with personal data of clients, including financial information and preferences, requiring careful handling and protection of such data.

👑 Data Privacy for Spa & Beauty Salons 👑

Spa and Beauty Salons collect customer information, including personal details and treatment records, necessitating a high level of confidentiality and data protection.

Data Engineer Consultant

Guides in data engineering tasks with a focus on practical solutions.

Data Architect

Database Developer assisting with SQL/NoSQL, architecture, and optimization.

Snowflake Copilot

Your personal Snowflake assistant and copilot with a focus on efficient, secure, and scalable data warehousing. Trained with the latest knowledge and docs.