Best AI tools for Install Custom Kernels

20 - AI tool Sites

FastBots.ai

FastBots.ai is an AI chatbot builder that allows users to create custom chatbots trained on their own data. These chatbots can be integrated into websites to provide customer support, sales assistance, and other services. FastBots.ai is easy to use and requires no coding. It supports a wide range of content types, including text, PDFs, and YouTube videos. FastBots.ai also offers a variety of features, such as customization options, chat history storage, and Zapier integration.

AnythingLLM

AnythingLLM is an all-in-one AI application designed for everyone. It offers a suite of tools for working with LLMs (large language models), documents, and agents in a fully private environment. Users can install AnythingLLM on their desktop on Windows, macOS, or Linux with one click and run it securely and privately without internet connectivity. The application supports custom models, including enterprise models like GPT-4, custom fine-tuned models, and open-source models like Llama and Mistral. AnythingLLM works with various document formats, such as PDFs and Word documents, and its defaults run locally for privacy.

Axiom.ai

Axiom.ai is a no-code browser automation tool that lets users automate actions and repetitive tasks on any website or web app. It is a Chrome extension that is simple to install and free to try. Once it is installed, users can pin Axiom to the Chrome toolbar and click the icon to open and close it. Users can build custom bots or use templates to automate actions like clicking, typing, and scraping data from websites. Axiom.ai can be integrated with Zapier to trigger automations based on external events.

PulsePost AI

PulsePost AI is an AI-powered tool designed to help established service businesses close the enquiry-to-sale gap and maintain a consistent flow of deals without manual follow-up. The tool sets up a self-sustaining deal machine that separates browsers from buyers, responds to new leads quickly, reactivates old leads, and books qualified appointments. PulsePost AI focuses on creating predictable profit and daily appointments for service businesses, providing a steady revenue stream and reducing unpredictability in the sales process.

AnythingYou.AI

AnythingYou.AI is an AI tool that generates beautiful profile pictures using AI avatars. Users can create custom AI avatars by uploading 10-20 selfies, and the tool will train a custom model for them immediately. The generated avatar images are high-quality and realistic, created using innovative technologies like Stable Diffusion and DreamBooth. Users can easily create avatars without the need for subscriptions or app installs, and get their avatar images in just 2 hours. The tool ensures user privacy by using images only for model training and deleting them immediately after avatar generation.

Cmd J – ChatGPT for Chrome

Cmd J – ChatGPT for Chrome is a Chrome extension that lets users use ChatGPT on any tab without copying and pasting. It offers features to help users improve their writing, generate blog posts, boost their social engagement, and fix code bugs faster. The extension is easy to use and can be accessed with a simple keyboard shortcut.

Package

Package is a generative AI rendering tool that helps homeowners envision different renovation styles, receive recommended material packages, and streamline procurement with just one click. It offers a wide range of design packages curated by experts, allowing users to customize items to fit their specific style. Package also provides 3D renderings, material management, and personalized choices, making it easy for homeowners to bring their design ideas to life.

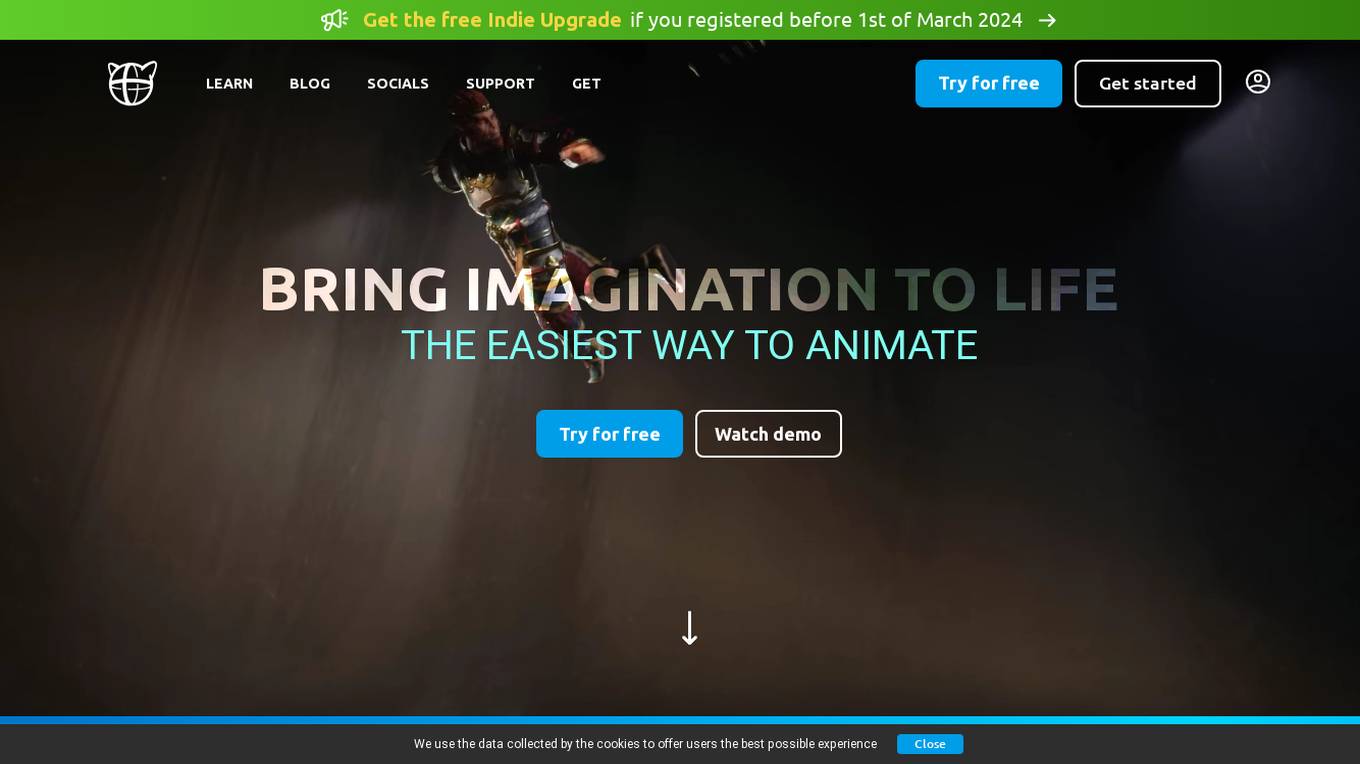

Cascadeur

Cascadeur is standalone 3D software that lets you create keyframe animation, as well as clean up and edit imported animations. Thanks to its AI-assisted and physics tools, you can dramatically speed up the animation process and get high-quality results. It works with .FBX, .DAE and .USD files, making it easy to integrate into any animation workflow.

Hoop.dev

Hoop.dev is an AI-powered application that provides live data masking in Rails console sessions. It offers shielded Rails console access, automated employee onboarding and off-boarding, and AI data masking to protect sensitive information. The application allows for passwordless authentication via Google SSO with MFA, auditability of console operations, and compliance with various security controls and regulations. Hoop.dev aims to streamline Rails console operations, reduce manual workflows, and enhance security measures for user convenience and data protection.

GptPanda

GptPanda is a free AI-assistant application designed for Slack users to enhance teamwork and productivity. It integrates seamlessly into Slack workspaces, offering unlimited requests and support in multiple languages. Users can communicate with GptPanda in personal messages or corporate chats, allowing it to assist with daily tasks, answer questions, and manage workspaces efficiently. The application prioritizes user data security through encryption and provides 24/7 customer support for any inquiries or issues.

Meow Apps

Meow Apps is a collection of powerful WordPress plugins designed to supercharge websites with AI capabilities, optimization features, and more. Created by Jordy Meow, a software engineer and photographer based in Tokyo, the plugins aim to enhance productivity and user experience on WordPress platforms. With a focus on optimization, imagery, and AI integration, Meow Apps offers a range of tools to elevate content, automate social posts, clean databases, manage media files, and add AI features like chatbots and content generation. The plugins are known for their friendly user interface, extensive features, and support for databases of all sizes. Meow Apps strives for perfection by providing high-quality tools that can transform the WordPress experience for users.

InstantAPI.ai

InstantAPI.ai is a web scraping API and Chrome extension that allows users to extract data from any website. The tool uses AI to automate data extraction, adapt to site changes, and deliver customized JSON objects. With features like worldwide geotargeting, proxy management, JavaScript rendering, and CAPTCHA bypass, InstantAPI.ai aims for fast and reliable results. Users can describe the data they need and receive it in real time, tailored to their requirements. The tool offers unlimited concurrency, human support, and a user-friendly interface.

Visual Studio Marketplace

The Visual Studio Marketplace is a platform where developers can find and publish extensions for the Visual Studio family of products. It offers a wide range of extensions to enhance the functionality and features of Visual Studio, Visual Studio Code, Azure DevOps, and more. Developers can customize their development environment with various tools and integrations available on the marketplace.

Tactiq

Tactiq is a live transcription and AI summary tool for Google Meet, Zoom, and MS Teams. It provides real-time transcriptions, speaker identification, and AI-powered insights to help users focus on the meeting and take effective notes. Tactiq also offers one-click AI actions, such as generating meeting summaries, crafting follow-up emails, and formatting project updates, to streamline post-meeting workflows.

Colorcinch

Colorcinch is an online photo editor and AI cartoonizer that allows users to easily edit and transform their photos into artwork. It offers a wide range of features, including background removal, image cropping and resizing, color adjustment, and the ability to add filters and effects. Colorcinch also has a large library of stock photography, graphics, and icons that users can use to enhance their photos. The platform is available online and offline, making it easy for users to access their projects from anywhere.

Machinet

Machinet is an AI Agent designed for full-stack software developers. It serves as an AI-based IDE that assists developers in various tasks, such as code generation, terminal access, front-end debugging, architecture suggestions, refactoring, and mentoring. The tool aims to enhance productivity and streamline the development workflow by providing intelligent assistance and support throughout the coding process. Machinet prioritizes security and privacy, ensuring that user data is encrypted, secure, and never stored for training purposes.

Shakespeare Toolbar

Shakespeare Toolbar is an AI-powered writing tool that helps you write better and faster. It is available as a Chrome extension and can be used on any website. With Shakespeare Toolbar, you can rephrase emails, summarize documents, write social media posts, and more. It supports over 10 languages and is available for a one-time purchase of $49.

GPTConsole

GPTConsole is an AI-powered platform that helps developers build production-ready applications faster and more efficiently. Its AI agents can generate code for a variety of applications, including web applications, AI applications, and landing pages. GPTConsole also offers a range of features to help developers build and maintain their applications, including an AI agent that can learn your entire codebase and answer your questions, and a CLI tool for accessing agents directly from the command line.

Stable Diffusion

Stable Diffusion is an AI art generation tool that allows users to create high-quality images from text descriptions. It offers a user-friendly platform for both beginners and experts to explore AI art creation without deep technical knowledge. The tool excels in producing complex, detailed, and customizable images, making it ideal for artists, designers, and anyone looking to integrate AI into their creative process. Stable Diffusion provides unprecedented creative freedom through features like image generation, inpainting, outpainting, and text-guided image-to-image translation.

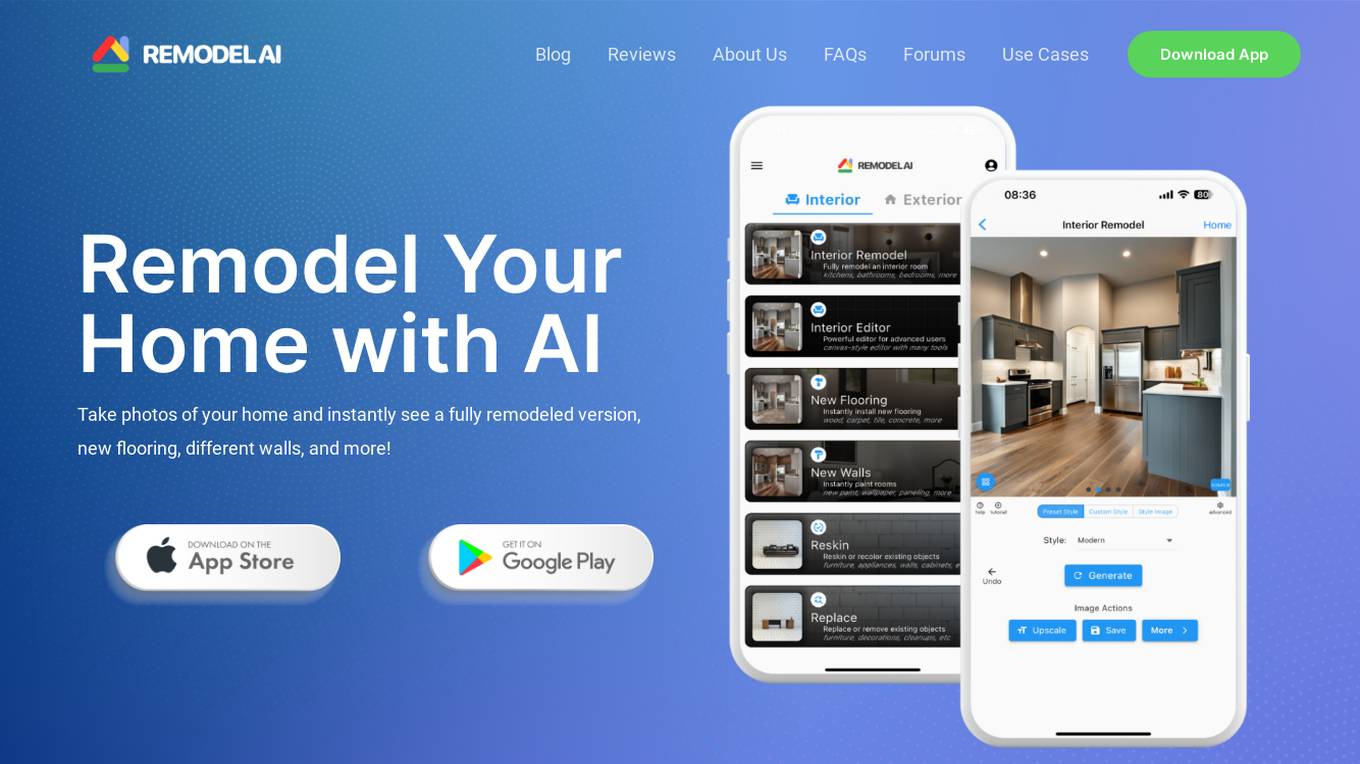

Remodel AI

Remodel AI is an innovative AI application that allows users to renovate their homes with ease. By simply taking photos of their home's interior or exterior, users can instantly visualize fully remodeled versions, new flooring, different walls, and more. The app leverages artificial intelligence to provide various interior design styles and architecture options for users to choose from. With features like interior and exterior remodeling, new flooring installation, wall painting, landscaping visualization, and object reskinning, Remodel AI offers a comprehensive solution for home renovation enthusiasts. The app has received accolades for its user-friendly interface and ability to transform home design ideas into reality.

0 - Open Source AI Tools

20 - OpenAI GPTs

S22 Flip Advisor

Expert on Cat S22 FLIP rooting and custom ROMs, with a broad internet research scope.

Browser Extension Generator

Create browser extensions for web tasks to boost your productivity, or jumpstart a more advanced extension idea. You'll get a full package download ready to install in your Chrome or Edge browser.

FlutterCraft

FlutterCraft is an AI-powered assistant that streamlines Flutter app development. It interprets user-provided descriptions to generate and compile Flutter app code, providing ready-to-install APK and iOS files. Ideal for rapid prototyping, FlutterCraft makes app development accessible and efficient.

BioinformaticsManual

Compile instructions from the web and GitHub for bioinformatics applications. Receive line-by-line instructions and commands to get started.

Ciepły montaż okien

Grupa Magnum specializes in selling accessories for the warm installation of windows and doors. The company offers a wide selection of tools and accessories that are essential for correctly installing window and door joinery.

Throw a Wrench In Your Plans GPT

As "Throw a Wrench in Your Plans GPT", I provide expert guidance on skilled trades and AI adoption, inspired by TWYP Media.