Best AI tools for < Initialize Memory Bank >

15 - AI tool Sites

AI Safety Initiative

The AI Safety Initiative is a premier coalition of trusted experts that aims to develop and deliver essential AI guidance and tools for organizations to deploy safe, responsible, and compliant AI solutions. Through vendor-neutral research, training programs, and global industry experts, the initiative provides authoritative AI best practices and tools. It offers certifications, training, and resources to help organizations navigate the complexities of AI governance, compliance, and security. The initiative focuses on AI technology, risk, governance, compliance, controls, and organizational responsibilities.

VJAL Institute

VJAL Institute is an AI training platform that aims to empower individuals and organizations with the knowledge and skills needed to thrive in the field of artificial intelligence. Through a variety of courses, workshops, and online resources, VJAL Institute provides comprehensive training on AI technologies, applications, and best practices. The platform also offers opportunities for networking, collaboration, and certification, making it a valuable resource for anyone looking to enhance their AI expertise.

OdiaGenAI

OdiaGenAI is a collaborative initiative focused on research into Generative AI and Large Language Models (LLMs) for the Odia language. The project brings Odia technologists together to build Generative AI and LLM-based solutions that support the development of Odisha and the Odia language. The initiative offers pre-trained models, code, and datasets for non-commercial and research purposes, with a focus on building language models for Indic languages like Odia and Bengali.

Onegen

Onegen is an AI application that provides end-to-end AI transformation services for startups and enterprises. The platform helps businesses consult, build, and iterate reliable and responsible AI solutions to overcome AI transformation challenges. Onegen emphasizes the importance of data readiness and leveraging artificial intelligence to drive success in various sectors such as retail, manufacturing, and technology startups. The platform offers AI insights for lead time management, legal operations enhancement, and rapid development of AI applications. With features like custom AI application development, AI integration services, LLM training and deployment, generative AI solutions, and predictive analytics, Onegen aims to empower businesses with scalable and expert-guided AI solutions.

AIToolBox

AIToolBox is an initiative that provides advanced AI tools for image and content generation, customization, and hosting solutions. Its mission is to empower businesses with cutting-edge AI capabilities.

Responsible AI Licenses (RAIL)

Responsible AI Licenses (RAIL) is an initiative that empowers developers to restrict the use of their AI technology to prevent irresponsible and harmful applications. It provides licenses with behavioral-use clauses that control specific use cases and prevent misuse of AI artifacts. The organization aims to standardize RAIL Licenses, develop collaboration tools, and educate developers on responsible AI practices.

SpeechForms™

SpeechForms™ is an Early Childhood Support Initiative in New York City that helps families access Early Intervention Program services for children ages 0–3. The platform provides information about developmental milestones, evaluation processes, and available services, along with practical tips for families. SpeechForms™ offers support for families facing speech and development delays, guiding them towards the right path and connecting them quickly to necessary services. The platform also assists families in accessing private speech therapy services for more flexibility and timely support.

AI4K12

The Artificial Intelligence (AI) for K-12 initiative (AI4K12) is a joint effort by AAAI and CSTA to develop national guidelines for AI education for K-12, an online resource directory for AI instruction, and a community of practitioners and developers focused on AI for K-12. The initiative aims to provide educators with frameworks, resources, and support to integrate AI concepts into K-12 education.

yesnoerror

yesnoerror is an autonomous AI agent developed by a DeSci initiative that scans scientific papers to uncover errors, inconsistencies, and flawed methods that human reviewers may have missed. The tool uses blockchain technology and AI to audit science at scale, aiming to enhance scientific integrity through automated error detection. By analyzing papers from repositories such as arXiv, bioRxiv, and medRxiv, yesnoerror helps researchers identify and correct critical issues in research, such as mathematical errors and data discrepancies.

OpenAI Strawberry Model

OpenAI Strawberry Model is a cutting-edge AI initiative that represents a significant leap in AI capabilities, focusing on enhancing reasoning, problem-solving, and complex task execution. It aims to improve AI's ability to handle mathematical problems, programming tasks, and deep research, including long-term planning and action. The project showcases advancements in AI safety and aims to reduce errors in AI responses by generating high-quality synthetic data for training future models. Strawberry is designed to achieve human-like reasoning and is expected to play a crucial role in the development of OpenAI's next major model, codenamed 'Orion.'

AIMAC Leaderboard

AIMAC Leaderboard is an AI Model Accessibility Checker that evaluates the accessibility of web pages generated by AI models across 28 categories. It compares top AI models side by side, auditing them for accessibility and measuring their performance. The initiative aims to ensure that AI models write accessible code by default. The project is a collaboration between the GAAD Foundation and ServiceNow, providing insights into how different models handle the same design challenges.

AI & Inclusion Hub

The website focuses on the intersection of artificial intelligence (AI) and inclusion, exploring the impact of AI technologies on marginalized populations and global digital inequalities. It provides resources, research findings, and ideas on themes like health, education, and humanitarian crisis mitigation. The site showcases the work of the Ethics and Governance of AI initiative in collaboration with the MIT Media Lab, incorporating perspectives from experts in the field. It aims to address challenges and opportunities related to AI and inclusion through research, events, and multi-stakeholder dialogues.

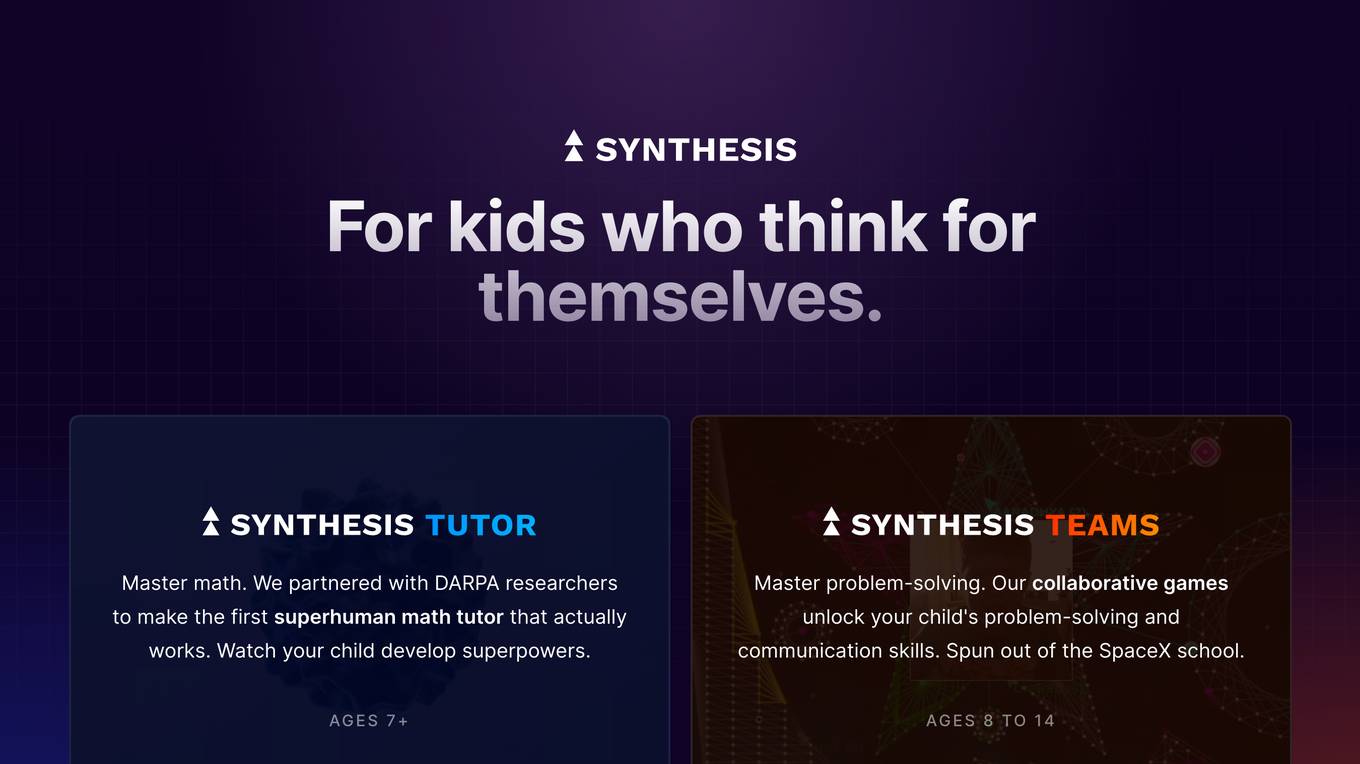

Synthesis

Synthesis is an innovative educational platform designed for kids who seek to think independently and excel in math and problem-solving. Developed in collaboration with DARPA researchers, it offers a superhuman math tutor and collaborative games to enhance children's problem-solving and communication skills. Founded as part of the SpaceX school initiative, Synthesis aims to cultivate student voice, strategic thinking, and collaborative problem-solving abilities. The platform provides unique learning experiences to a global community, empowering students to develop essential skills for the future.

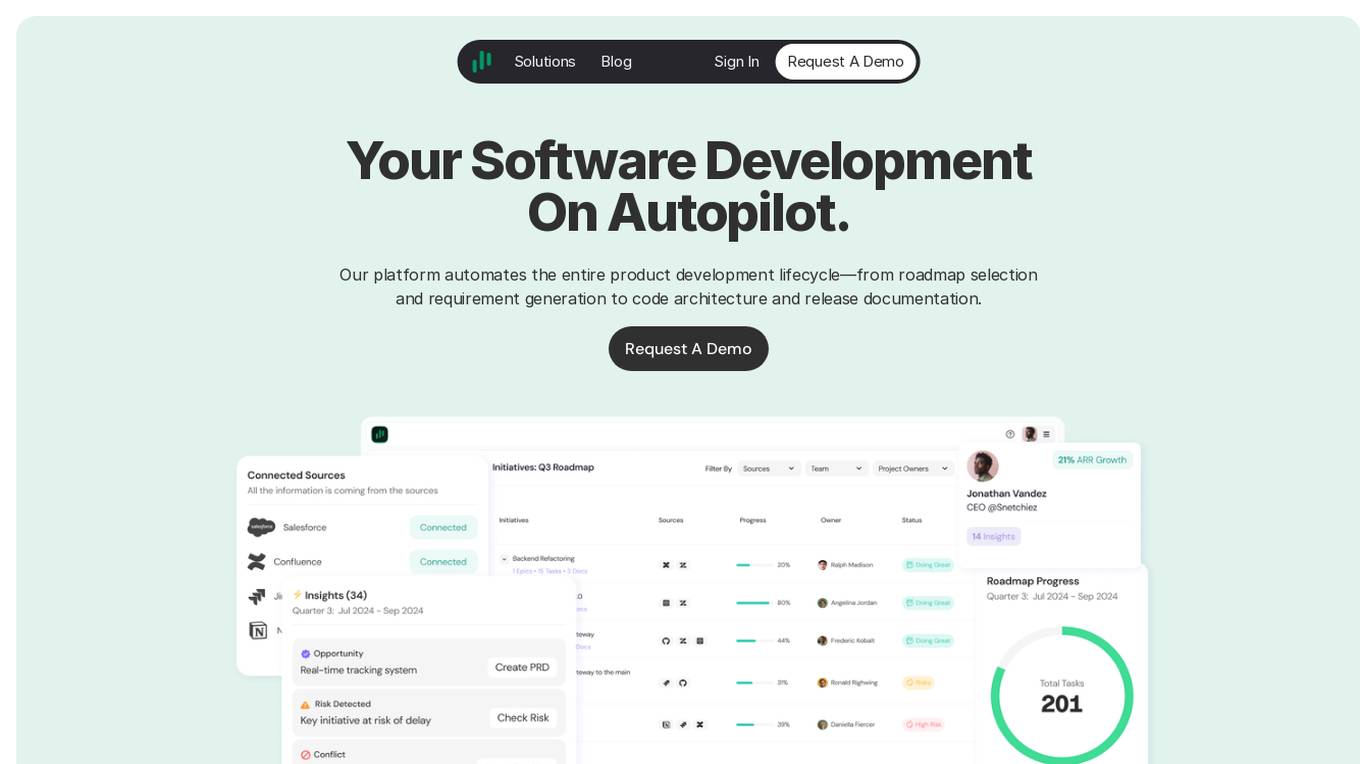

Prodmap AI

Prodmap AI is an AI-powered platform that automates the entire product development lifecycle, from roadmap selection and requirement generation to code architecture and release documentation. It helps streamline product development processes, save time, cut costs, and deliver impactful results. The platform offers features such as roadmap generation, faster roadmap planning, AI PRD generation, feature prioritization, real-time project monitoring, proactive risk management, end-to-end visibility, actionable execution insights, knowledge base, integrated tools, strategic initiative insights, custom AI agents, tailored automation, flexible AI solutions, and personalized workflows.

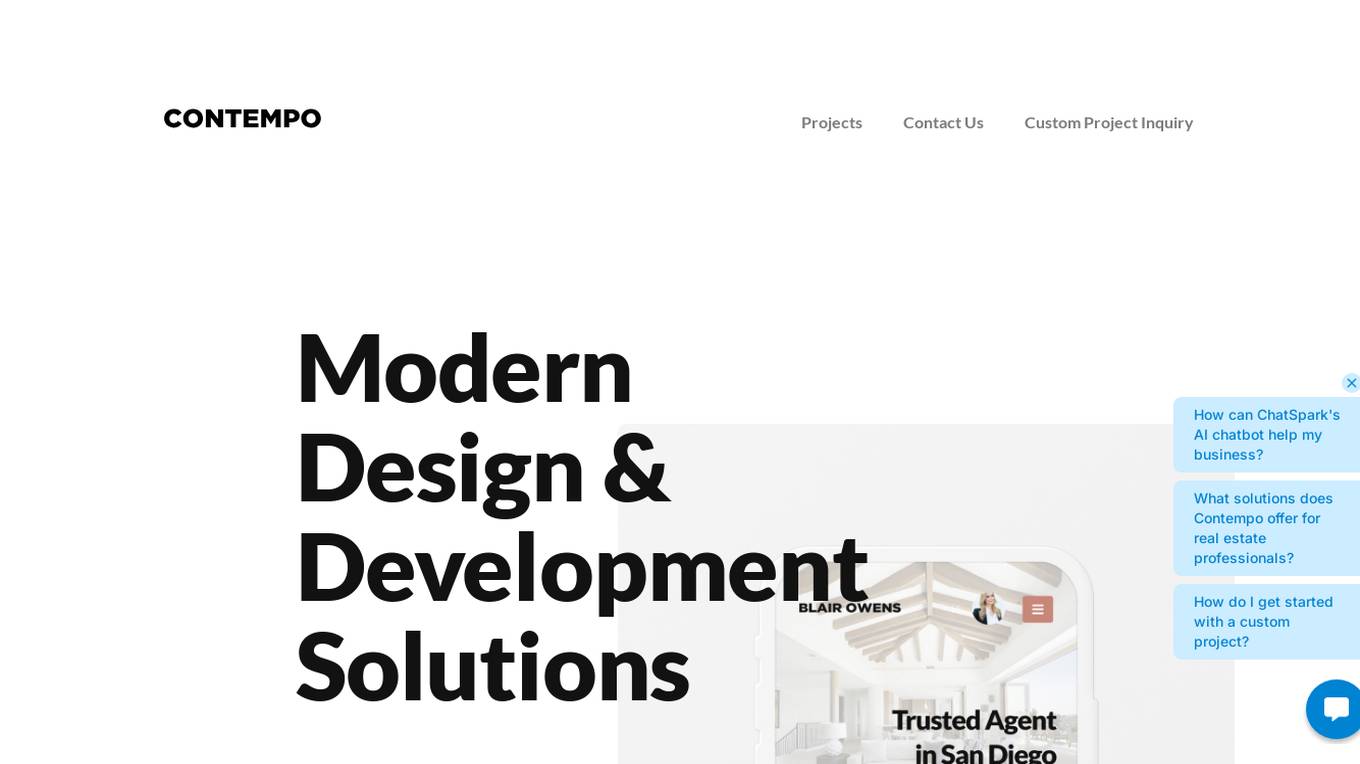

Contempo Creative Inc.

Contempo Creative Inc. is a technology company based in San Diego, California, specializing in modern design and development solutions. They offer industry-leading IDX websites for real estate professionals, powered by their own CRM & IDX technology. Their flagship AI initiative, ChatSpark, provides AI chatbots trained on unique data for personalized customer engagement. Contempo Themes focuses on delivering comprehensive and responsive IDX websites to empower real estate agents and brokers with cutting-edge tools and services. The company's mission is to make sophisticated, industry-leading solutions accessible and beneficial by combining AI capabilities with real estate expertise.

1 - Open Source AI Tools

roo-code-memory-bank

Roo Code Memory Bank is a tool for AI-assisted development that maintains project context across sessions. It provides a structured memory system integrated with VS Code so that the AI assistant retains a deep understanding of the project. Its key components are the Memory Bank for persistent storage, Mode Rules for behavior configuration, VS Code Integration for a seamless development experience, and Real-time Updates for continuous context synchronization. Users can configure custom instructions, initialize the Memory Bank, and organize files within the project root directory. The Memory Bank structure includes files for tracking session state, technical decisions, project overview, and progress, plus optional project brief and system patterns documentation. Features include persistent context, smart workflows for specialized tasks, knowledge management with structured documentation, and cross-referenced project knowledge. Pro tips cover handling multiple projects, using Debug mode for troubleshooting, and managing session updates for synchronization.
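As a rough illustration of the file structure described above, the sketch below creates a Memory Bank directory with one Markdown file per role. It is not the tool's own initialization logic: the directory name `memory-bank`, the file names, and the helper `init_memory_bank` are all assumptions chosen for the example.

```python
from pathlib import Path

# Hypothetical file names, one per role named in the description above.
# The real tool may use different names or additional files.
MEMORY_BANK_FILES = {
    "activeContext.md": "Session state",
    "decisionLog.md": "Technical decisions",
    "productContext.md": "Project overview",
    "progress.md": "Progress tracking",
    "systemPatterns.md": "System patterns (optional)",
}


def init_memory_bank(project_root: str, dirname: str = "memory-bank") -> list[str]:
    """Create the memory bank directory and any missing files.

    Returns the names of the files created on this call, so running it
    again on an initialized project returns an empty list and leaves
    existing notes untouched.
    """
    bank = Path(project_root) / dirname
    bank.mkdir(parents=True, exist_ok=True)
    created = []
    for name, title in MEMORY_BANK_FILES.items():
        path = bank / name
        if not path.exists():
            # Seed each file with a heading only; content is added later.
            path.write_text(f"# {title}\n\n", encoding="utf-8")
            created.append(name)
    return created
```

Calling `init_memory_bank(".")` from a project root would create the directory on first use. Skipping files that already exist keeps the operation safe to repeat across sessions.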

2 - OpenAI GPTs

File Baby

Your guide to Content Credentials, Content Authenticity Initiative (CAI) and Coalition for Content Provenance and Authenticity (C2PA) at File.Baby.

Project Change Management Advisor

Guides organizational transitions to achieve desired business outcomes.