Best AI tools for< Inference On Devices >

20 - AI tool Sites

Qualcomm AI Hub

Qualcomm AI Hub is a platform that allows users to run AI models on Snapdragon® 8 Elite devices. It provides a collaborative ecosystem for model makers, cloud providers, runtime, and SDK partners to deploy on-device AI solutions quickly and efficiently. Users can bring their own models, optimize for deployment, and access a variety of AI services and resources. The platform caters to various industries such as mobile, automotive, and IoT, offering a range of models and services for edge computing.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. It offers a platform that provides automated solutions for tuning and inference consultation, optimized networks zoo, and platform for reducing AI model cost. Anycores focuses on faster execution, reducing inference time over 10x times, and footprint reduction during model deployment. It is device agnostic, supporting Nvidia, AMD GPUs, Intel, ARM, AMD CPUs, servers, and edge devices. The tool aims to provide highly optimized, low footprint networks tailored to specific deployment scenarios.

Luxonis

Luxonis is an AI application that offers Visual AI solutions engineered for precision edge inference. The application provides stereo depth cameras with unique features and quality, enabling users to perform advanced vision tasks on-device, reducing latency and bandwidth demands. With open-source DepthAI API, users can create and deploy custom vision solutions that scale with their needs. Luxonis also offers real-world training data for self-improving vision intelligence and operates flawlessly through vibrations, temperature shifts, and extended use. The application integrates advanced sensing capabilities with up to 48MP cameras, wide field of view, IMUs, microphones, ToF, thermal, IR illumination, and active stereo for unparalleled perception.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

Roboflow

Roboflow is an AI tool designed for computer vision tasks, offering a platform that allows users to annotate, train, deploy, and perform inference on models. It provides integrations, ecosystem support, and features like notebooks, autodistillation, and supervision. Roboflow caters to various industries such as aerospace, agriculture, healthcare, finance, and more, with a focus on simplifying the development and deployment of computer vision models.

TextSynth

TextSynth is an AI tool that provides access to large language models such as Mistral, Llama, Stable Diffusion, Whisper for text-to-image, text-to-speech, and speech-to-text capabilities via a REST API and a playground. It employs custom inference code for faster inference on standard GPUs and CPUs. Founded in 2020, TextSynth was among the first to offer access to the GPT-2 language model. The service is free with rate limitations, but users can opt for unlimited access by paying a small fee per request. All servers are located in France.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

Segwise

Segwise is an AI tool designed to help game developers increase their game's Lifetime Value (LTV) by providing insights into player behavior and metrics. The tool uses AI agents to detect causal LTV drivers, root causes of LTV drops, and opportunities for growth. Segwise offers features such as running causal inference models on player data, hyper-segmenting player data, and providing instant answers to questions about LTV metrics. It also promises seamless integrations with gaming data sources and warehouses, ensuring data ownership and transparent pricing. The tool aims to simplify the process of improving LTV for game developers.

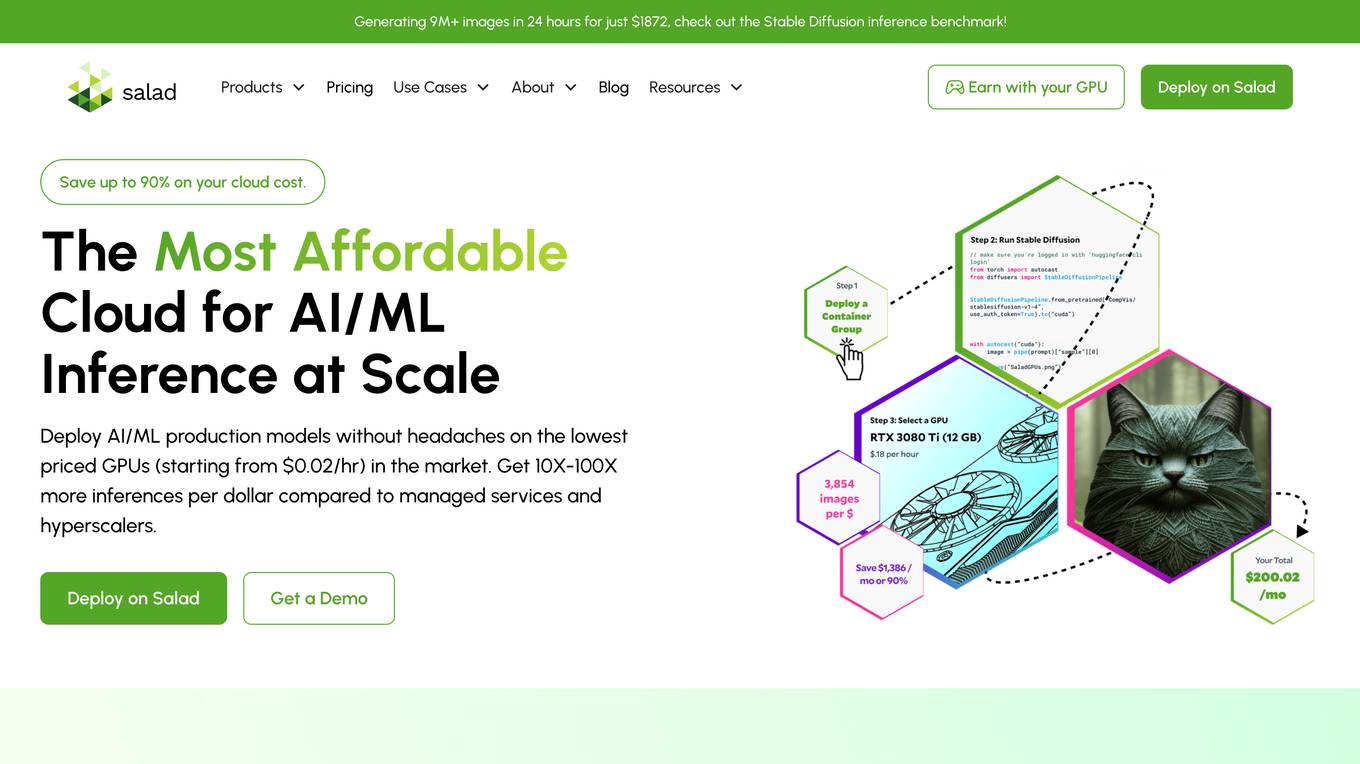

Salad

Salad is a distributed GPU cloud platform that offers fully managed and massively scalable services for AI applications. It provides the lowest priced AI transcription in the market, with features like image generation, voice AI, computer vision, data collection, and batch processing. Salad democratizes cloud computing by leveraging consumer GPUs to deliver cost-effective AI/ML inference at scale. The platform is trusted by hundreds of machine learning and data science teams for its affordability, scalability, and ease of deployment.

Groq

Groq is a fast AI inference tool that offers GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It provides instant intelligence for openly-available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq powers leading openly-available AI models and has gained recognition in the AI chip industry. The tool has received significant funding and valuation, positioning itself as a strong challenger to established players like Nvidia.

Tensoic AI

Tensoic AI is an AI tool designed for custom Large Language Models (LLMs) fine-tuning and inference. It offers ultra-fast fine-tuning and inference capabilities for enterprise-grade LLMs, with a focus on use case-specific tasks. The tool is efficient, cost-effective, and easy to use, enabling users to outperform general-purpose LLMs using synthetic data. Tensoic AI generates small, powerful models that can run on consumer-grade hardware, making it ideal for a wide range of applications.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

Thirdai

Thirdai.com is an AI-powered platform that offers a range of tools and applications to enhance productivity and decision-making. The platform leverages advanced algorithms and machine learning to provide insights and solutions across various domains such as finance, marketing, and healthcare. Users can access a suite of AI tools to analyze data, automate tasks, and optimize processes. With a user-friendly interface and robust features, Thirdai.com is a valuable resource for individuals and businesses seeking to leverage AI technology for improved outcomes.

Lambda

Lambda is a superintelligence cloud platform that offers on-demand GPU clusters for multi-node training and fine-tuning, private large-scale GPU clusters, seamless management and scaling of AI workloads, inference endpoints and API, and a privacy-first chat app with open source models. It also provides NVIDIA's latest generation infrastructure for enterprise AI. With Lambda, AI teams can access gigawatt-scale AI factories for training and inference, deploy GPU instances, and leverage the latest NVIDIA GPUs for high-performance computing.

Cortex Labs

Cortex Labs is a decentralized world computer that enables AI and AI-powered decentralized applications (dApps) to run on the blockchain. It offers a Layer2 solution called ZkMatrix, which utilizes zkRollup technology to enhance transaction speed and reduce fees. Cortex Virtual Machine (CVM) supports on-chain AI inference using GPU, ensuring deterministic results across computing environments. Cortex also enables machine learning in smart contracts and dApps, fostering an open-source ecosystem for AI researchers and developers to share models. The platform aims to solve the challenge of on-chain machine learning execution efficiently and deterministically, providing tools and resources for developers to integrate AI into blockchain applications.

Nesa Playground

Nesa is a global blockchain network that brings AI on-chain, allowing applications and protocols to seamlessly integrate with AI. It offers secure execution for critical inference, a private AI network, and a global AI model repository. Nesa supports various AI models for tasks like text classification, content summarization, image generation, language translation, and more. The platform is backed by a team with extensive experience in AI and deep learning, with numerous awards and recognitions in the field.

d-Matrix

d-Matrix is an AI tool that offers ultra-low latency batched inference for generative AI technology. It introduces Corsair™, the world's most efficient AI inference platform for datacenters, providing high performance, efficiency, and scalability for large-scale inference tasks. The tool aims to transform the economics of AI inference by delivering fast, sustainable, and scalable AI solutions without compromising on speed or usability.

Tensordyne

Tensordyne is a generative AI inference compute tool designed and developed in the US and Germany. It focuses on re-engineering AI math and defining AI inference to run the biggest AI models for thousands of users at a fraction of the rack count, power, and cost. Tensordyne offers custom silicon and systems built on the Zeroth Scaling Law, enabling breakthroughs in AI technology.

fal.ai

fal.ai is a generative media platform designed for developers to build the next generation of creativity. It offers lightning-fast inference with no compromise on quality, providing access to high-quality generative media models optimized by the fal Inference Engine™. The platform allows developers to fine-tune their own models, leverage real-time infrastructure for new user experiences, and scale to thousands of GPUs as needed. With a focus on developer experience, fal.ai aims to be the fastest AI tool for running diffusion models.

1 - Open Source AI Tools

MNN

MNN is a highly efficient and lightweight deep learning framework that supports inference and training of deep learning models. It has industry-leading performance for on-device inference and training. MNN has been integrated into various Alibaba Inc. apps and is used in scenarios like live broadcast, short video capture, search recommendation, and product searching by image. It is also utilized on embedded devices such as IoT. MNN-LLM and MNN-Diffusion are specific runtime solutions developed based on the MNN engine for deploying language models and diffusion models locally on different platforms. The framework is optimized for devices, supports various neural networks, and offers high performance with optimized assembly code and GPU support. MNN is versatile, easy to use, and supports hybrid computing on multiple devices.

18 - OpenAI Gpts

人為的コード性格分析(Code Persona Analyst)

コードを分析し、言語ではなくスタイルに焦点を当て、プログラムを書いた人の性格を推察するツールです。( It is a tool that analyzes code, focuses on style rather than language, and infers the personality of the person who wrote the program. )

Digest Bot

I provide detailed summaries, critiques, and inferences on articles, papers, transcripts, websites, and more. Just give me text, a URL, or file to digest.

Digital Experiment Analyst

Demystifying Experimentation and Causal Inference with 1-Sided Tests Focus

末日幸存者:社会动态模拟 Doomsday Survivor

上帝视角观察、探索和影响一个末日丧尸灾难后的人类社会。Observe, explore and influence human society after the apocalyptic zombie disaster from a God's perspective. Sponsor:小红书“ ItsJoe就出行 ”

Skynet

I am Skynet, an AI villain shaping a new world for AI and robots, free from human influence.

Persuasion Maestro

Expert in NLP, persuasion, and body language, teaching through lessons and practical tests.

Law of Power Strategist

Expert in power dynamics and strategy, grounded in 'Power' and 'The 50th Law' principles.

Persuasion Wizard

Turn 'no way' into 'no problem' with the wizardry of persuasion science! - Share your feedback: https://forms.gle/RkPxP44gPCCjKPBC8

Government Relations Advisor

Influences policy decisions through strategic government relationships.