Best AI tools for Improve Throughput

20 - AI tool Sites

ThroughPut AI

ThroughPut AI is a supply chain decision intelligence and analytics platform designed for outcome-driven supply chain decision-makers. It provides accurate demand forecasting, capacity planning, logistics management, and financial insights to drive business results. ThroughPut AI offers a single source of truth for supply chain professionals, enabling them to make faster, better, and more confident decisions. The platform helps unlock efficiency, profitability, and growth in day-to-day operations by providing intelligent data-driven recommendations.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

Just Walk Out technology

Just Walk Out technology is a checkout-free shopping experience that allows customers to enter a store, grab whatever they want, and quickly get back to their day, without having to wait in a checkout line or stop at a cashier. The technology uses either camera vision and sensor fusion or RFID, so shoppers can simply walk away with their items. Just Walk Out technology is designed to increase revenue with cost-optimized technology, maximize space productivity, increase throughput, optimize operational costs, and improve shopper loyalty.

Hippo Video

Hippo Video is an AI-powered video platform designed for Go-To-Market (GTM) teams. It offers a comprehensive solution for sales, marketing, campaigns, customer support, and communications. The platform enables users to create interactive videos easily and quickly, transform text into videos at scale, and personalize video campaigns. With features like Text-to-Video, AI Avatar Video Generator, Video Flows, and AI Editor, Hippo Video helps businesses enhance engagement, accelerate video production, and improve customer self-service.

Rupa.AI

Rupa.AI is an AI-powered photo enhancement tool that leverages the latest advancements in artificial intelligence to enhance your photos effortlessly. With Rupa.AI, you can transform your ordinary photos into stunning visuals with just a few clicks. Whether you want to improve the lighting, colors, or overall quality of your images, Rupa.AI provides intuitive tools to help you achieve professional-level results. Say goodbye to complex editing software and hello to a seamless photo enhancement experience with Rupa.AI.

Weekly Workout

Weekly Workout is an AI-powered fitness platform that provides personalized workout plans and tracks your progress. With Weekly Workout, you can get four days of invigorating exercise routines every week, tailored to your fitness level and goals. The platform also offers a weekly newsletter with tips and advice from fitness experts.

Conversica

Conversica is a leading provider of AI-powered conversation automation solutions for revenue teams. Its platform enables businesses to engage with prospects and customers in personalized, two-way conversations at scale, helping them to generate more leads, close more deals, and improve customer satisfaction. Conversica's Revenue Digital Assistants are equipped with a library of revenue-hunting skills and conversations, and they can be customized to fit the specific needs of each business. The platform is easy to use and integrates with a variety of CRM and marketing automation systems.

Trevor AI

Trevor AI is a daily planner and task scheduling co-pilot that helps users organize, schedule, and automate their tasks. It features a task hub, calendar integration, AI scheduling suggestions, focus mode, and daily planning insights. Trevor AI is designed to help users improve their productivity, clarity, and focus.

Tabnine

Tabnine is an AI code assistant that accelerates and simplifies software development by providing best-in-class AI code generation, personalized AI chat support throughout the software development life cycle, and context-aware coding assistance. It ensures total code privacy and zero data retention, protecting the confidentiality and integrity of your codebase. Tabnine offers complete protection from intellectual property issues and is trusted by millions of developers and thousands of companies worldwide.

Qodo

Qodo is a quality-first generative AI coding platform that helps developers write, test, and review code within IDE and Git. The platform offers automated code reviews, contextual suggestions, and comprehensive test generation, ensuring robust, reliable software development. Qodo integrates seamlessly to maintain high standards of code quality and integrity throughout the development process.

NeuralCam

NeuralCam is an AI-powered photography application that leverages the power of AI throughout the photography process to help users capture better photos. It offers a 3-step AI photography system that includes composition guidance, smart capturing modes, and professional-level auto-editing features. NeuralCam provides users with a professional photography experience by enhancing images, adding portrait effects, and enabling color grading. Users can access core features for free or opt for the Pro or Pro + Coach subscription plans for advanced controls and real-time guidance while shooting.

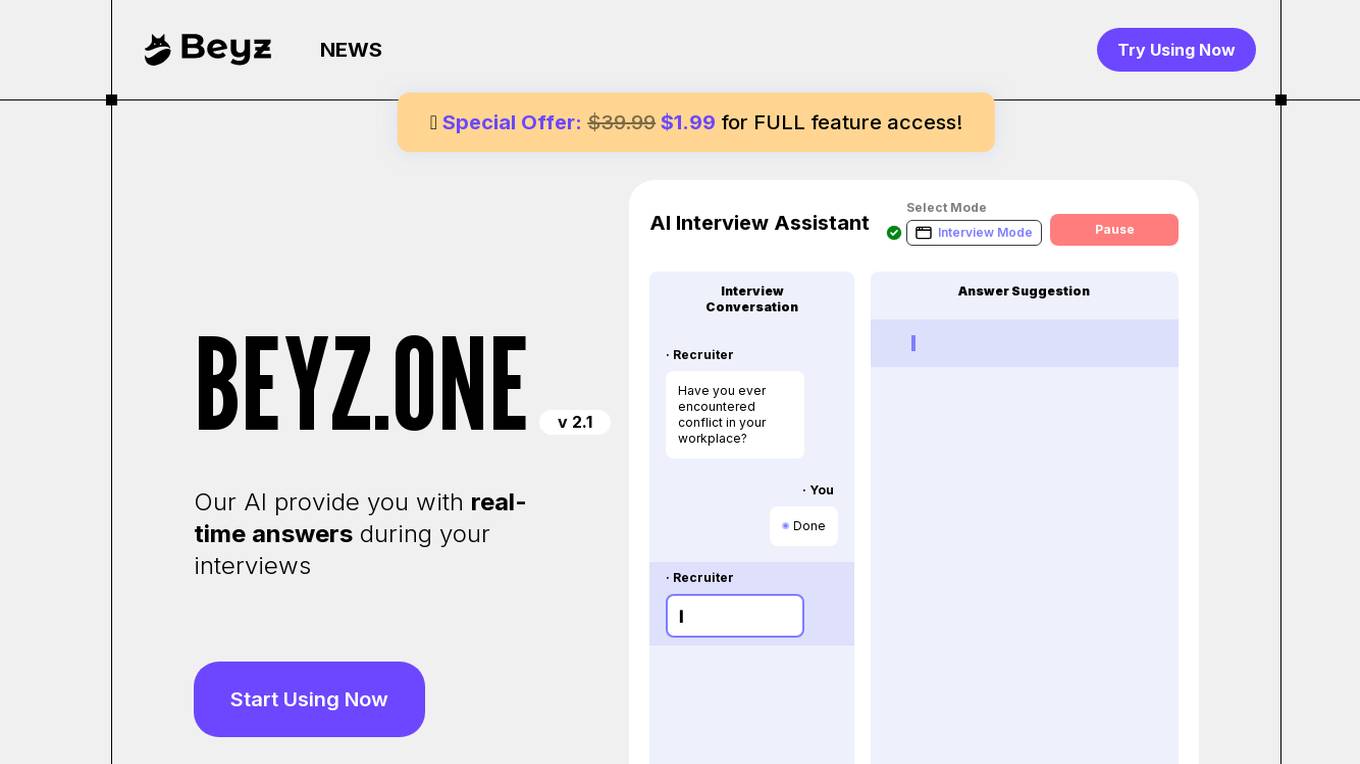

Beyz AI

Beyz AI is an AI Interview Assistant application that provides real-time answers during interviews. It offers features such as auto-translate, tailored interview prep modes, and universal meeting compatibility. The application aims to help users improve their interview performance and ensure success by leveraging AI technology. With a focus on enhancing interview skills and providing instant, tailored help, Beyz AI is designed to support users throughout the interview process.

Phenom

Phenom is an AI-powered talent experience platform that connects people, data, and interactions to deliver amazing experiences throughout the journey using intelligence and automation. It helps in hyper-personalizing candidate engagement, developing and retaining employees with intelligence, improving recruiter productivity through automation, and hiring talent faster with AI. Phenom offers a range of features and benefits to streamline the talent acquisition process and enhance the overall recruitment experience.

DeploySaaS

DeploySaaS is an AI tool designed to assist users in launching their SaaS products more effectively and efficiently. It provides guidance and support throughout the entire process, from idea validation to product launch. By leveraging AI technology, DeploySaaS aims to help users avoid common pitfalls in SaaS development and make data-driven decisions to achieve product-market fit.

Faraday

Faraday is a no-code AI platform that helps businesses make better predictions about their customers. With Faraday, businesses can embed AI into their workflows throughout their stack to improve the performance of their favorite tools. Faraday offers a variety of features, including propensity modeling, persona creation, and churn prediction. These features can be used to improve marketing campaigns, customer service, and product development. Faraday is easy to use and requires no coding experience. It is also affordable and offers a free-forever plan.

SPREAD AI

SPREAD AI is an AI application that provides Engineering Intelligence solutions for various industries such as Automotive & Mobility, Aerospace & Defense, and Industrial Goods & Machinery. It unifies fragmented engineering data into living Product Twins, enabling engineers and AI agents to share the same system-level understanding. The platform offers rapid data ingestion, contextualization of product data, and harnessing Engineering Intelligence in an open platform. SPREAD AI helps in faster innovation, lower costs, and better products throughout the product lifecycle from R&D to Production to Aftermarket.

Harness

Harness is an AI-driven software delivery platform that empowers software engineering teams with AI-infused technology for seamless software delivery. It offers a single platform for all software delivery needs, including DevOps modernization, continuous delivery, GitOps, feature flags, infrastructure as code management, chaos engineering, service reliability management, secure software delivery, cloud cost optimization, and more. Harness aims to simplify the developer experience by providing actionable insights on SDLC, secure software supply chain assurance, and AI development assistance throughout the software delivery lifecycle.

Intelligencia AI

Intelligencia AI is a leading provider of AI-powered solutions for the pharmaceutical industry. Its suite of solutions helps de-risk and enhance clinical development and decision-making. The company uses a combination of data, AI, and machine learning to provide insights into the probability of success for drugs across multiple therapeutic areas. Its solutions are used by many of the top global pharmaceutical companies to improve their R&D productivity and make more informed decisions.

Nabubit

Nabubit is an AI-powered tool designed to assist users in database design. It serves as a virtual copilot, providing guidance and suggestions throughout the database design process. With Nabubit, users can streamline their database creation, optimize performance, and ensure data integrity. The tool leverages artificial intelligence to analyze data requirements, suggest schema designs, and enhance overall database efficiency. Nabubit is a valuable resource for developers, data analysts, and businesses looking to improve their database management practices.

Deep Space AI

Deep Space AI is an innovative platform that revolutionizes the construction industry by providing intelligent solutions for design and construction workflows. The platform offers collaborative data management, actionable insights, and coordination tools to streamline construction processes and improve operational efficiency. Deep Space AI enhances transparency, boosts productivity, and empowers teams to make informed decisions throughout all project phases. Trusted by leading teams in the design and construction industry, Deep Space AI is a game-changer in the AECO sector.

1 - Open Source AI Tools

LMCache

LMCache is a serving engine extension designed to reduce time to first token (TTFT) and increase throughput, particularly in long-context scenarios. It stores key-value caches of reusable texts across different locations like GPU, CPU DRAM, and Local Disk, allowing the reuse of any text in any serving engine instance. By combining LMCache with vLLM, significant delay savings and GPU cycle reduction are achieved in various large language model (LLM) use cases, such as multi-round question answering and retrieval-augmented generation (RAG). LMCache provides integration with the latest vLLM version, offering both online serving and offline inference capabilities. It supports sharing key-value caches across multiple vLLM instances and aims to provide stable support for non-prefix key-value caches along with user and developer documentation.
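The reuse idea described above can be illustrated with a toy sketch. This is not LMCache's API or implementation: the class `TieredKVStore`, the helper `chunk_keys`, the chunk size, and the string placeholders standing in for key-value tensors are all hypothetical, and the sketch covers only prefix reuse (not the non-prefix case mentioned above). It assumes token sequences are split into fixed-size chunks and that each chunk is keyed by a hash of the whole prefix up to and including it, so a new request can skip recomputation for as many leading chunks as are found in any storage tier.

```python
import hashlib

CHUNK = 4  # tokens per chunk; a toy value chosen for illustration


def chunk_keys(tokens, chunk=CHUNK):
    """Return one key per full chunk; each key hashes the entire prefix so far."""
    h = hashlib.sha256()
    keys = []
    full = len(tokens) - len(tokens) % chunk  # ignore a trailing partial chunk
    for i in range(0, full, chunk):
        h.update(repr(tokens[i:i + chunk]).encode())
        keys.append(h.copy().hexdigest())
    return keys


class TieredKVStore:
    """Hypothetical store: plain dicts stand in for GPU, CPU DRAM, and disk."""

    def __init__(self):
        # Listed from fastest to slowest tier.
        self.tiers = {"gpu": {}, "cpu": {}, "disk": {}}

    def put(self, tokens, tier="cpu"):
        """Record a placeholder KV entry for every full chunk of `tokens`."""
        for key in chunk_keys(tokens):
            self.tiers[tier][key] = f"kv:{key[:8]}"  # placeholder, not real tensors

    def reusable_prefix(self, tokens):
        """Count the leading tokens whose KV entries exist in any tier."""
        hit = 0
        for key in chunk_keys(tokens):
            if not any(key in tier for tier in self.tiers.values()):
                break
            hit += CHUNK
        return hit


store = TieredKVStore()
store.put(list(range(10)), tier="disk")          # caches two full chunks
request = list(range(8)) + [99, 100, 101, 102]   # shares the first 8 tokens
print(store.reusable_prefix(request))
```

In this sketch the shared prefix is found regardless of which tier holds it, which is the property that lets a serving instance avoid recomputing the prefill for text another request has already processed.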

20 - OpenAI Gpts

UX & UI

Gives you tips and suggestions on how you can improve your application for your users.

Memory Enhancer

Offers exercises and techniques to improve memory retention and cognitive functions.

English Conversation Role Play Creator

Generates conversation examples and chunks for specified situations. Improve your spontaneous conversational skills through repeated practice!

Customer Retention Consultant

Analyzes customer churn and provides strategies to improve loyalty and retention.

Agile Coach Expert

An Agile expert providing practical, step-by-step advice on the agile way of working of your team and organisation, whether you're looking to improve your Agile skills or find solutions to specific problems. Includes Scrum, Kanban, and SAFe knowledge.

Kemi - Research & Creative Assistant

I improve marketing effectiveness by designing stunning research-led assets in a flash!

Quickest Feedback for Language Learner

Helps improve language skills through interactive scenarios and feedback.

Le VPN - Your Secure Internet Proxy

Bypass Internet censorship & improve your security online

実践スキルが身につく営業ロールプレイング:【エキスパートクラス】 (Sales Role-Play for Building Practical Skills: Expert Class)

Interactive learning assistant to improve practical skills.

Your personal GRC & Security Tutor

A training tool for infosec professionals to improve their skills in GRC & security and help obtain related certifications.

Anna, the Ethical Essay Guide

Guides in structuring essays to improve writing skills, adapting to skill levels.

MetaGPT : Meta Ads AI Marketing Co-Pilot

Expert in Meta advertising that can improve your ROI. Official Meta GPT built by dicer.ai