Best AI tools for< Improve Data Literacy >

20 - AI tool Sites

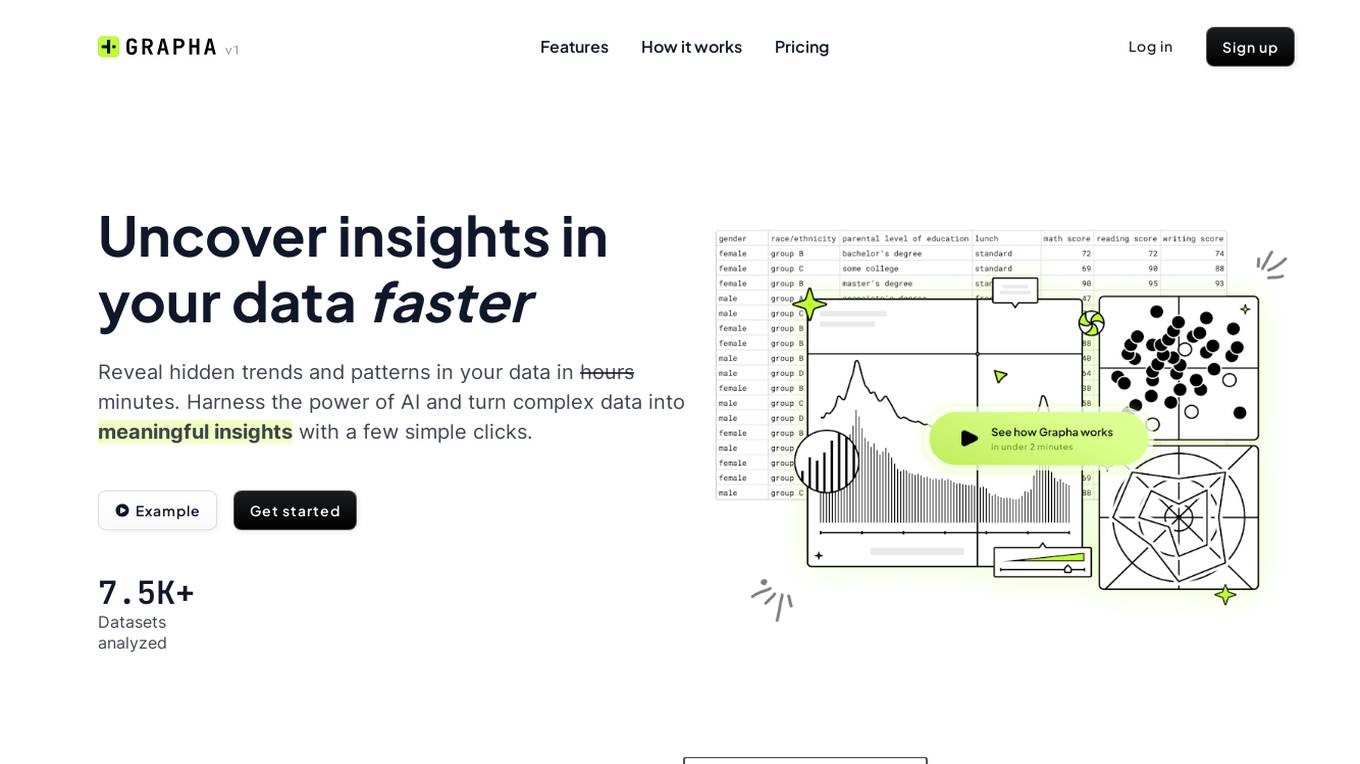

Grapha.ai

Grapha.ai is a data exploration tool that helps users to quickly and easily explore and understand their data. It provides a variety of features to help users to visualize their data, identify trends and patterns, and make informed decisions. Grapha.ai is designed to be easy to use, even for users with no prior experience with data analysis.

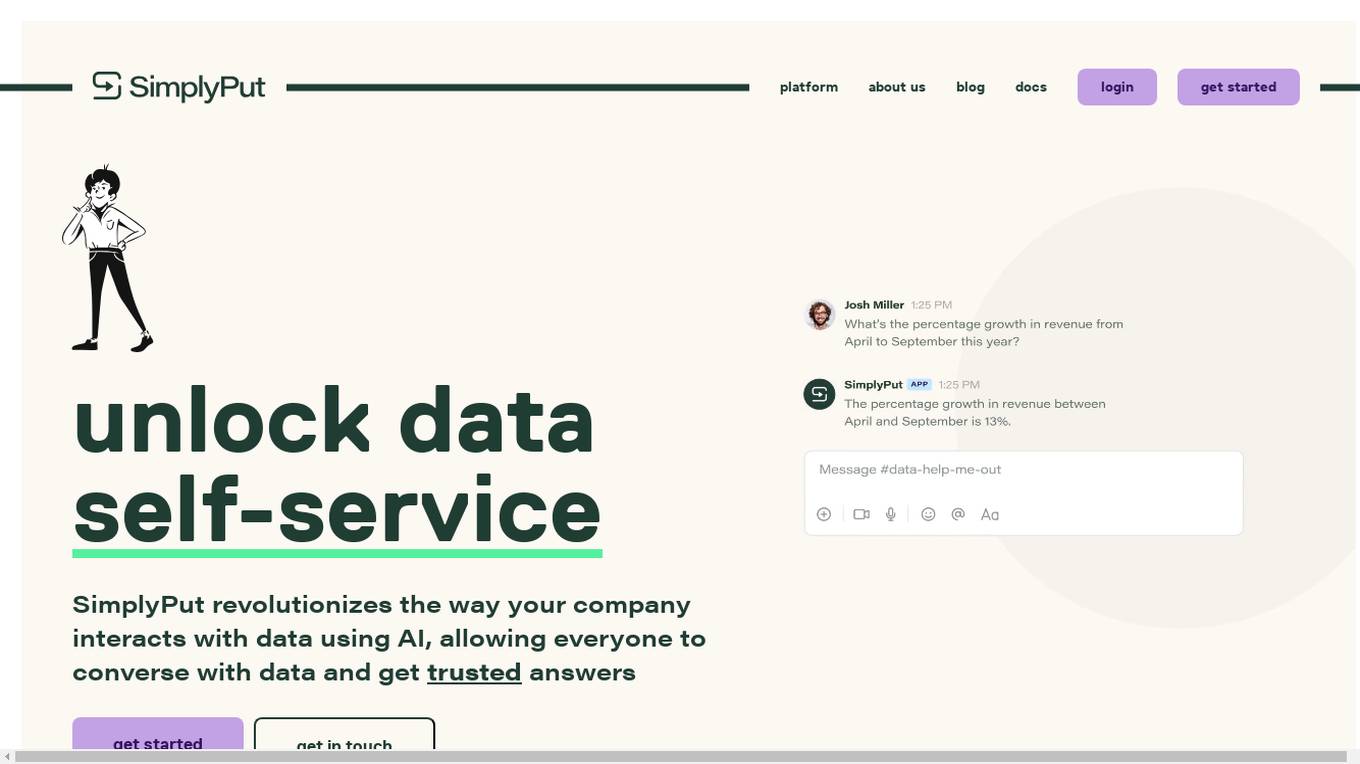

SimplyPut

SimplyPut is an AI-powered data analytics platform that allows users to ask questions about their data in natural language and get instant answers. It is designed to be intuitive and easy to use, and it can be integrated with a variety of data sources. SimplyPut is used by businesses of all sizes to improve their data literacy and make better decisions.

AI Unlock

AI Unlock is a sales training platform that focuses on teaching sales teams how to leverage AI to improve efficiency and productivity. The platform offers comprehensive training on AI fundamentals, sales techniques, industry knowledge, AI products, consultative selling, data literacy, communication skills, competitive analysis, ethical considerations, and continuous learning in AI. AI Unlock aims to help companies stay ahead in the fast-evolving AI industry by providing effective, fast, and low-cost AI training programs. The platform also offers on-going training, updates, and a community of AI sales professionals to support continuous learning and development.

.Training

The website .Training provides comprehensive information on the impact of AI and automation on workforce training. It discusses how AI and automation are reshaping job roles, necessitating reskilling, and enhancing learning experiences. The platform emphasizes the importance of organizations adapting to these changes to prepare workforces for automated futures by leveraging AI technology to improve training effectiveness. It also highlights the significance of AI literacy across various functions and the need for continuous upskilling to thrive in an increasingly automated economy.

Marzy

Marzy is an interactive character powered by advanced AI language models, designed to engage children in real conversations and inspire critical thinking and problem-solving skills. It offers personalized learning experiences tailored to each child's natural path, focusing on topics like homework, academics, executive functioning, and self-regulation. Marzy also promotes AI literacy, digital literacy, and media literacy, preparing children for the challenges of the 21st century. The application is built by experts in education, science, engineering, and art, following best practices in learning science to enhance focus, deep thinking, and curiosity. Additionally, Marzy provides a White Glove Program for personalized support and ensures data security and privacy for families.

Storywizard.ai

Storywizard.ai is an AI-powered platform that helps students create and refine their own fully illustrated and editable stories in any of eight languages. It also provides teachers with tools to create and track customized reading and writing assignments. Storywizard.ai is designed to help students improve their literacy skills, engage with fun and educational exercises, and increase their motivation to learn.

DQLabs

DQLabs is a modern data quality platform that leverages observability to deliver reliable and accurate data for better business outcomes. It combines the power of Data Quality and Data Observability to enable data producers, consumers, and leaders to achieve decentralized data ownership and turn data into action faster, easier, and more collaboratively. The platform offers features such as data observability, remediation-centric data relevance, decentralized data ownership, enhanced data collaboration, and AI/ML-enabled semantic data discovery.

Datuum

Datuum is an AI-powered data onboarding solution that offers seamless integration for businesses. It simplifies the data onboarding process by automating manual tasks, generating code, and ensuring data accuracy with AI-driven validation. Datuum helps businesses achieve faster time to value, reduce costs, improve scalability, and enhance data quality and consistency.

Roundtable

Roundtable is an AI-powered tool designed to improve market research data quality by leveraging Human-in-the-Loop AI technology. It helps businesses prevent bots and fraud in real-time while ensuring a seamless user experience. The tool offers features such as invisible CAPTCHA, continuous behavioral AI, privacy-preserving security, effortless integration, and explainable results. Roundtable is trusted by global platforms and is built to scale with businesses of all sizes.

Coginiti

Coginiti is a collaborative analytics platform and tools designed for SQL developers, data scientists, engineers, and analysts. It offers capabilities such as AI assistant, data mesh, database & object store support, powerful query & analysis, and share & reuse curated assets. Coginiti empowers teams and organizations to manage collaborative practices, data efficiency, and deliver trusted data products faster. The platform integrates modular analytic development, collaborative versioned teamwork, and a data quality framework to enhance productivity and ensure data reliability. Coginiti also provides an AI-enabled virtual analytics advisor to boost team efficiency and empower data heroes.

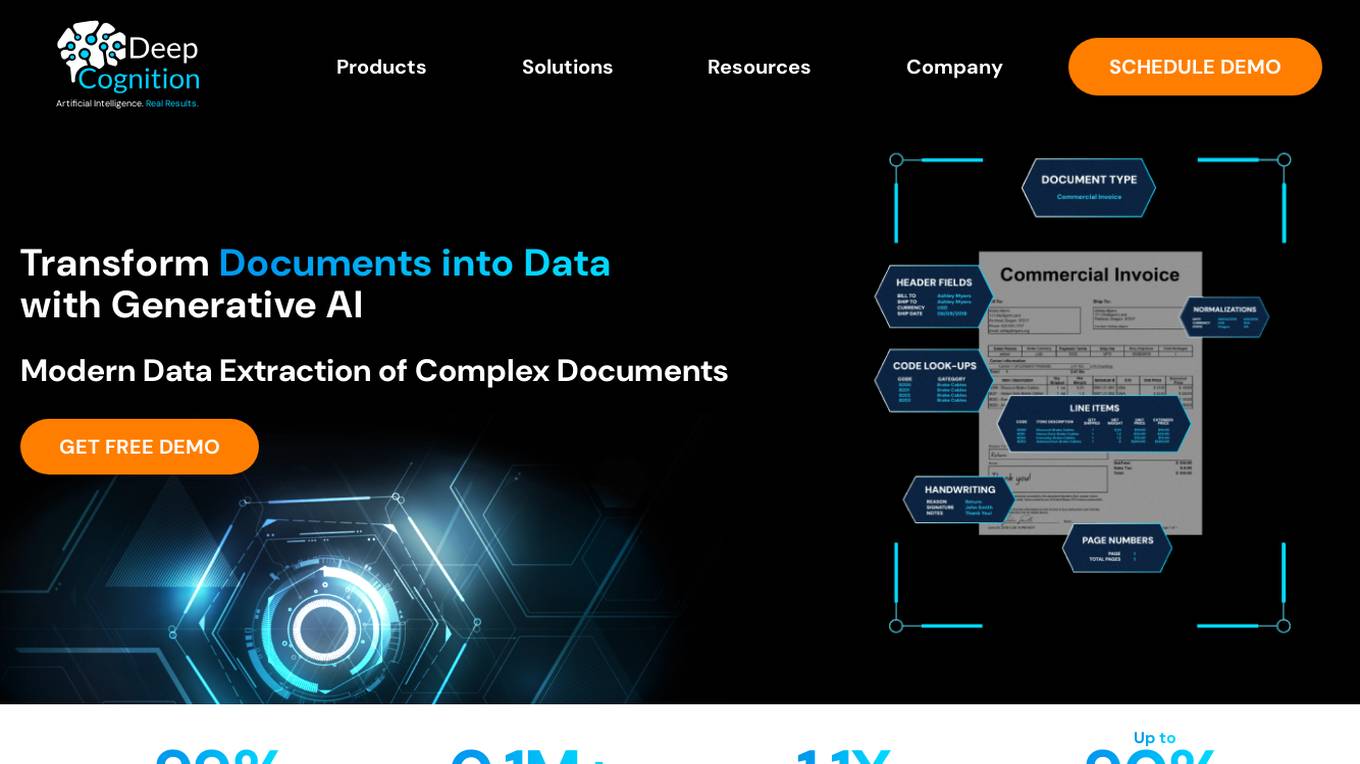

PaperEntry AI

Deep Cognition offers PaperEntry AI, an Intelligent Document Processing solution powered by generative AI. It automates data entry tasks with high accuracy, scalability, and configurability, handling complex documents of any type or format. The application is trusted by leading global organizations for customs clearance automation and government document processing, delivering significant time and cost savings. With industry-specific features and a proven track record, Deep Cognition provides a state-of-the-art solution for businesses seeking efficient data extraction and automation.

Eigen Technologies

Eigen Technologies is an AI-powered data extraction platform designed for business users to automate the extraction of data from various documents. The platform offers solutions for intelligent document processing and automation, enabling users to streamline business processes, make informed decisions, and achieve significant efficiency gains. Eigen's platform is purpose-built to deliver real ROI by reducing manual processes, improving data accuracy, and accelerating decision-making across industries such as corporates, banks, financial services, insurance, law, and manufacturing. With features like generative insights, table extraction, pre-processing hub, and model governance, Eigen empowers users to automate data extraction workflows efficiently. The platform is known for its unmatched accuracy, speed, and capability, providing customers with a flexible and scalable solution that integrates seamlessly with existing systems.

Seudo

Seudo is a data workflow automation platform that uses AI to help businesses automate their data processes. It provides a variety of features to help businesses with data integration, data cleansing, data transformation, and data analysis. Seudo is designed to be easy to use, even for businesses with no prior experience with AI. It offers a drag-and-drop interface that makes it easy to create and manage data workflows. Seudo also provides a variety of pre-built templates that can be used to get started quickly.

Sicara

Sicara is a data and AI expert platform that helps clients define and implement data strategies, build data platforms, develop data science products, and automate production processes with computer vision. They offer services to improve data performance, accelerate data use cases, integrate generative AI, and support ESG transformation. Sicara collaborates with technology partners to provide tailor-made solutions for data and AI challenges. The platform also features a blog, job offers, and a team of experts dedicated to enhancing productivity and quality in data projects.

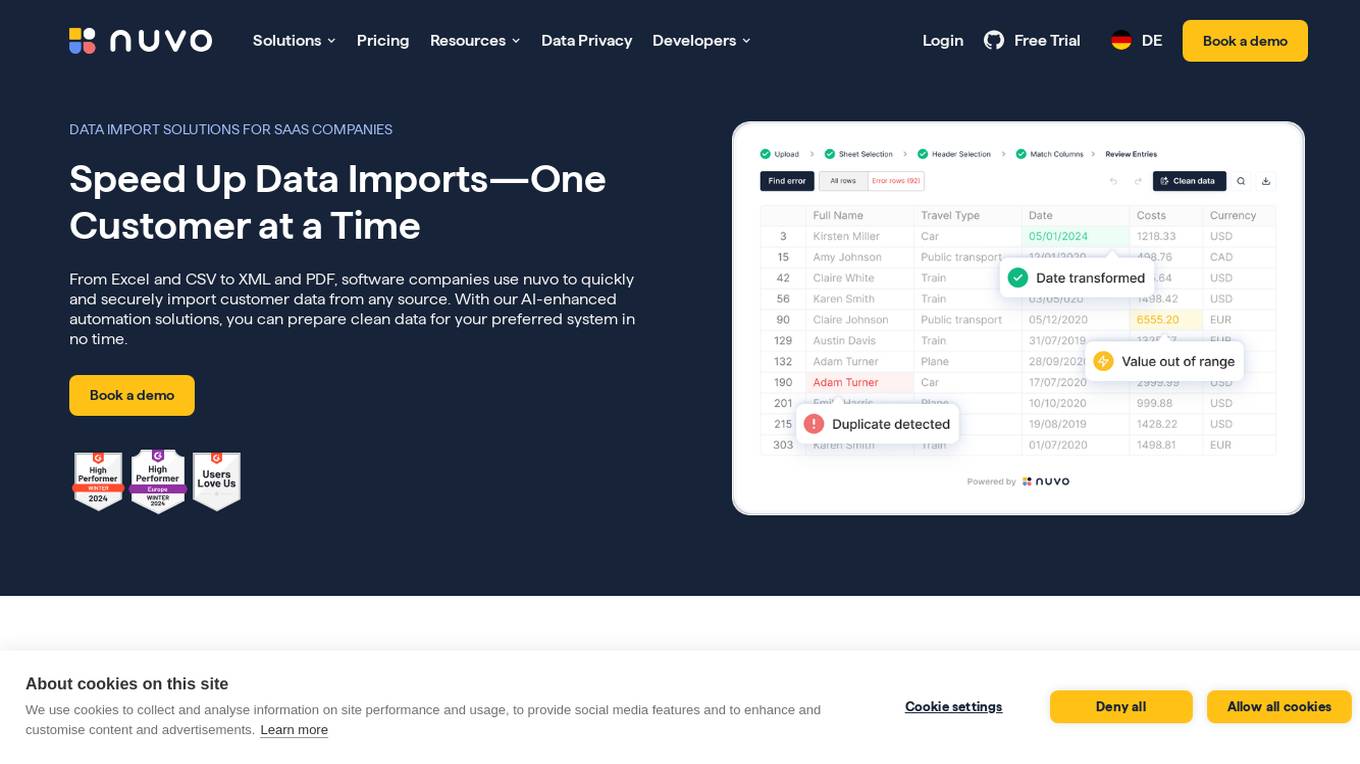

nuvo

nuvo is an AI-powered data import solution that offers fast, secure, and scalable data import solutions for software companies. It provides tools like nuvo Data Importer SDK and nuvo Data Pipeline to streamline manual and recurring ETL data imports, enabling users to manage data imports independently. With AI-enhanced automation, nuvo helps prepare clean data for preferred systems quickly and efficiently, reducing manual effort and improving data quality. The platform allows users to upload unlimited data in various formats, match imported data to system schemas, clean and validate data, and import clean data into target systems with just a click.

Swyft AI

Swyft AI is an AI-powered tool that automates CRM data capture and sales motions. It integrates with popular CRM and web conferencing tools, allowing sales teams to save time and improve data accuracy. Swyft AI's key features include automatic CRM data capture, workflow automation, and call summarization. It offers advantages such as improved data hygiene, increased sales productivity, and reduced manual work for revenue teams.

Prompt Security

Prompt Security is a platform that secures all uses of Generative AI in the organization: from tools used by your employees to your customer-facing apps.

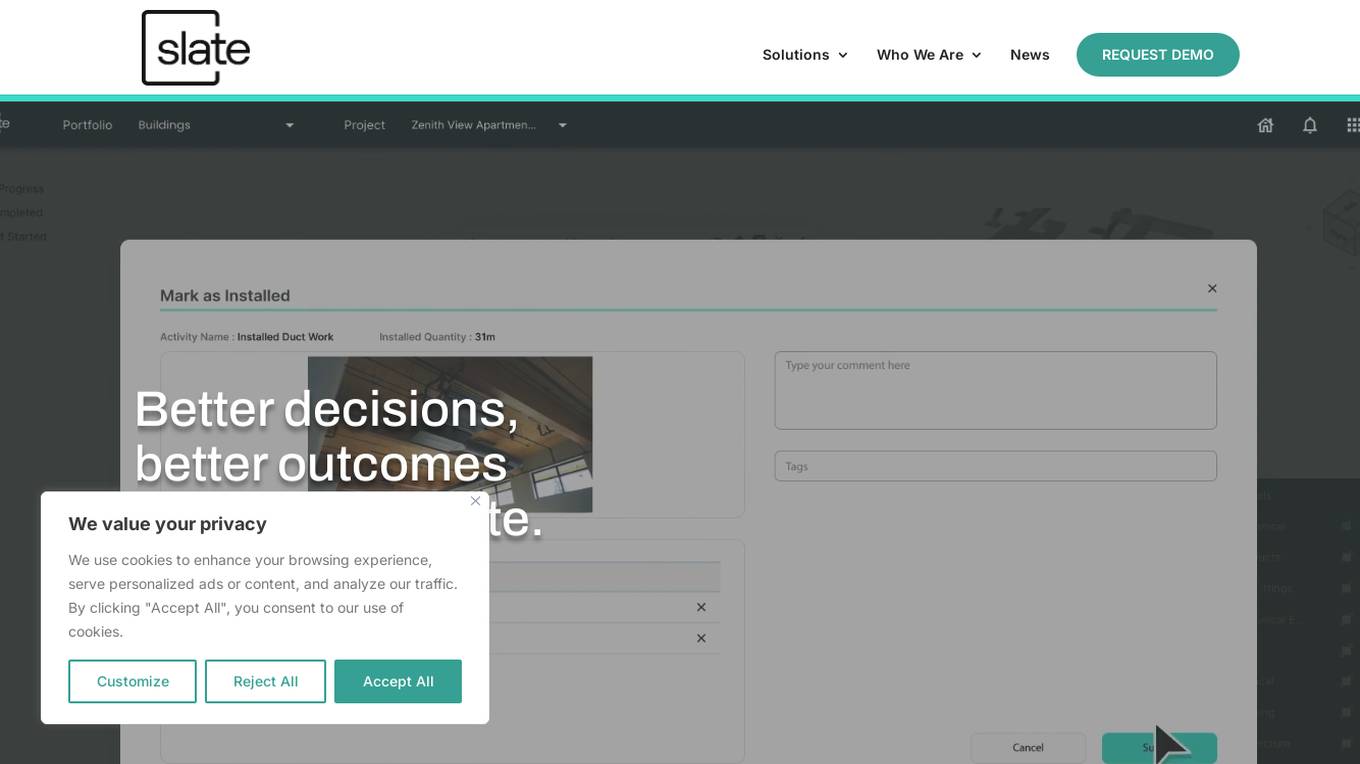

Slate Technologies Solutions

Slate Technologies Solutions is an AI-powered data analytics software that leverages predictive AI, generative AI, and conversational AI to provide a powerful toolkit for next-generation construction. The software connects, contextualizes, and enhances relevant information within existing data sources, allowing users to query, interact, and make decisions based on data insights and recommendations. Slate aims to address real-world construction problems by empowering teams with AI-driven intelligence, optimizing data, and turning unstructured information into actionable insights. The application improves operational efficiency, provides real-time progress reporting, and enables teams to make smarter decisions, ultimately driving profitability and success in construction projects.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

Enlitic

Enlitic provides healthcare data solutions that leverage artificial intelligence to improve data management, clinical workflows, and create a foundation for real-world evidence medical image databases. Their products, ENDEX and ENCOG, utilize computer vision and natural language processing to standardize, protect, and analyze medical imaging data, enabling healthcare providers to optimize workflows, increase efficiencies, and expand capacity.

0 - Open Source AI Tools

20 - OpenAI Gpts

👑 Data Privacy for Language & Training Centers 👑

Language and Skill Training Centers collect personal information of learners, including progress tracking and sometimes payment details.

Missing Cluster Identification Program

I analyze and integrate missing clusters in data for coherent structuring.

Mermaid Architect GPT | 💡 -> 👁

Turn your projects' Ideas into Clear Flowcharts(data flow) with Recommended Tech Stack

FAANG.AI

Get into FAANG. Practice with an AI expert in algorithms, data structures, and system design. Do a mock interview and improve.

Palm Reader

Moved to https://chat.openai.com/g/g-KFnF7qssT-palm-reader . Interprets palm readings from user-uploaded hand images. Turned off setting to use data for OpenAi to improve model.

Face Reader

Moved to https://chat.openai.com/g/g-q6GNcOkYx-face-reader. Reads faces to tell fortunes based on Chinese face reading. Turned off setting to use data for OpenAi to improve model.

Deal Architect

Designing Strategic M&A Blueprints for Success in buying, selling or merging companies. Use this GPT to simplify, speed up and improve the quality of the M&A process. With custom data - 100s of creative options in deal flow, deal structuring, financing and more. **Version 2.2 - 28012024**

📩 メール執筆・校正アシスタント【✅セキュリティ強化済み】

メールの作成・添削・返信の提案などを行います。メールの校正は、草案をそのままコピペするだけで可能です。件名・署名・添付ファイルなどを忘れないようリマインドも行います。さらにこのGPTは、特別な設定で「Use conversation data in your GPT to improve our models」の項目をOFFにしています。そのため、うっかり機密情報が含まれるメール文を送信してしまった場合でも、ChatGPTの学習に利用されないと思われます。 ※ただし、今後の仕様変更や他の経路からの情報漏洩などのリスクもありえます。個人情報は決して書き込まないでください。

Coach Gestion Data

Collecte et analyse de données sur la résilience aux catastrophes naturelles.

👑 Data Privacy for Pet Grooming Services 👑

Pet Grooming and Boarding Services store pet owner contact information, pet health data, and service preferences.

Customer Retention Consultant

Analyzes customer churn and provides strategies to improve loyalty and retention.

Data Analysis - SERP

it helps me analyze serp results and data from certain websites in order to create an outline for the writer

Data-Driven Messaging Campaign Generator

Create, analyze & duplicate customized automated message campaigns to boost retention & drive revenue for your website or app