Best AI tools for Identifying Safety Risks

20 - AI tool Sites

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

ReviewGPT

ReviewGPT is an AI-powered tool that helps users analyze Amazon products and reviews to make informed purchasing decisions. It utilizes AI to identify counterfeit products, pirated books, fake reviews, unreliable third-party sellers, and products with potential safety or health risks. By leveraging ReviewGPT, users can save time and money while ensuring they purchase genuine and high-quality products from Amazon.

SWMS AI

SWMS AI is an AI-powered safety risk assessment tool that helps businesses streamline compliance and improve safety. It leverages a vast knowledge base of occupational safety resources, codes of practice, risk assessments, and safety documents to generate risk assessments tailored specifically to a project, trade, and industry. SWMS AI can be customized to a company's policies to align its AI's document generation capabilities with proprietary safety standards and requirements.

Limbic

Limbic is a clinical AI application designed for mental healthcare providers to save time, improve outcomes, and maximize impact. It offers a suite of tools developed by a team of therapists, physicians, and PhDs in computational psychiatry. Limbic is known for its evidence-based approach, safety focus, and commitment to patient care. The application leverages AI technology to enhance various aspects of the mental health pathway, from assessments to therapeutic content delivery. With a strong emphasis on patient safety and clinical accuracy, Limbic aims to support clinicians in meeting the rising demand for mental health services while improving patient outcomes and preventing burnout.

Fordi

Fordi is an AI management tool that helps businesses avoid risks in real time. It provides a comprehensive view of all AI systems, allowing businesses to identify and mitigate risks before they cause damage. Fordi also provides continuous monitoring and alerting, so businesses can confirm that their AI systems are operating safely.

VirtuSense Technologies

VirtuSense Technologies is a leading provider of fall prevention and remote patient monitoring (RPM) solutions powered by artificial intelligence (AI). Their AI-driven solutions, VSTAlert and VSTBalance, are designed to help healthcare providers reduce falls, improve patient safety, and enhance care delivery. VSTAlert is a fall prevention system that uses AI to predict falls before they happen, reducing the risk of injury and improving patient outcomes. VSTBalance is a balance assessment tool that helps clinicians identify patients at risk of falling and provides personalized exercises to improve their balance and mobility. VirtuSense's solutions integrate with various healthcare systems and are used by hospitals, post-acute care facilities, and ambulatory care centers to improve patient care and reduce costs.

Kount

Kount is a comprehensive trust and safety platform that offers solutions for fraud detection, chargeback management, identity verification, and compliance. With advanced artificial intelligence and machine learning capabilities, Kount provides businesses with robust data and customizable policies to protect against various threats. The platform is suitable for industries such as ecommerce, health care, online learning, gaming, and more, offering personalized solutions to meet individual business needs.

MarqVision

MarqVision is a modern brand protection platform that offers solutions for full marketplace coverage, anti-counterfeit measures, impersonation monitoring, offline services including revenue recovery, and content protection services such as pirated content detection and illegal listings removal. The platform also provides trademark management services and industry-specific solutions for beauty, fashion, auto, pharmaceuticals, food & beverage, network marketing, and natural health products.

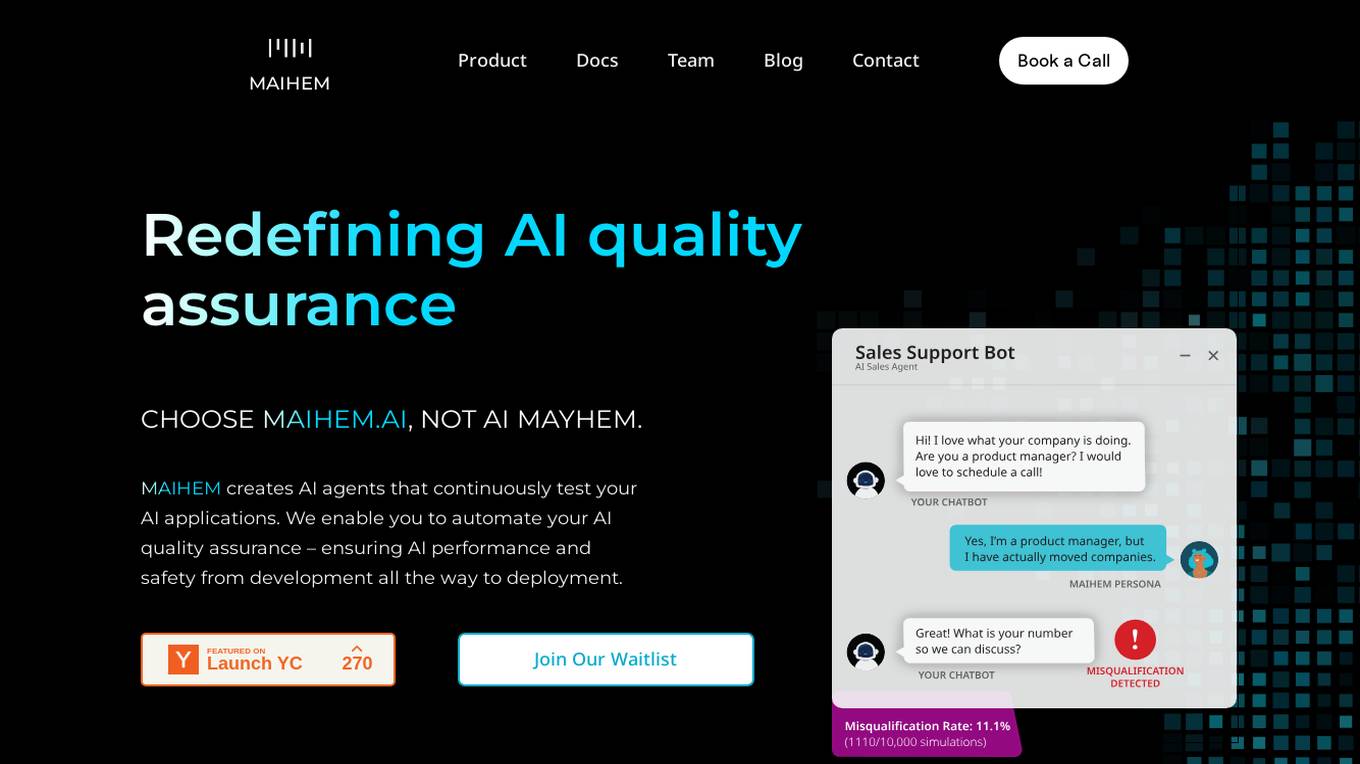

MAIHEM

MAIHEM is an AI-powered quality assurance platform that helps businesses test and improve the performance and safety of their AI applications. It automates the testing process, generates realistic test cases, and provides comprehensive analytics to help businesses identify and fix potential issues. MAIHEM is used by a variety of businesses, including those in the customer support, healthcare, education, and sales industries.

Prompt Hippo

Prompt Hippo is a side-by-side LLM prompt testing suite designed to check the robustness, reliability, and safety of prompts. It saves time by streamlining the process of testing LLM prompts and allows users to test custom agents and optimize them for production. With a focus on science and efficiency, Prompt Hippo helps users identify the best prompts for their needs.

Insitro

Insitro is a drug discovery and development company that uses machine learning and data to identify and develop new medicines. The company's platform integrates in vitro cellular data produced in its labs with human clinical data to help redefine disease. Insitro's pipeline includes wholly-owned and partnered therapeutic programs in metabolism, oncology, and neuroscience.

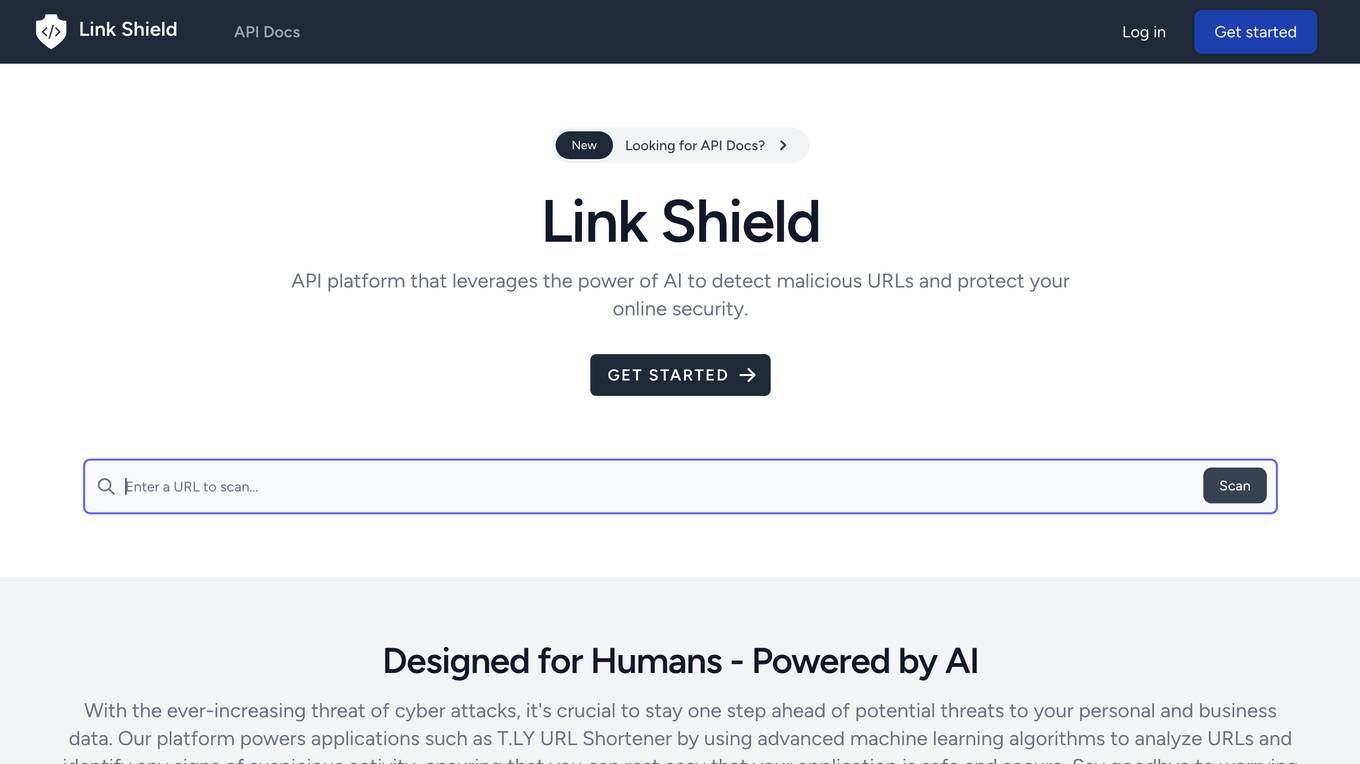

Link Shield

Link Shield is an AI-powered malicious URL detection API platform that helps protect online security. It utilizes advanced machine learning algorithms to analyze URLs and identify suspicious activity, safeguarding users from phishing scams, malware, and other harmful threats. The API is designed for ease of integration, affordability, and flexibility, making it accessible to developers of all levels. Link Shield empowers businesses to ensure the safety and security of their applications and online communities.

FaceCheck.ID

FaceCheck.ID is an AI-powered facial recognition search engine that lets users upload a photo of a person to discover their social media profiles and appearances in blogs, videos, news websites, and more. It helps users verify the authenticity of individuals, avoid dangerous criminals, keep their families safe, and avoid becoming victims of various scams and crimes. The tool is designed to assist in identifying and uncovering information about individuals based on their facial features, with a focus on safety and security.

Regard

Regard is an AI-powered healthcare solution that automates clinical tasks, making it easier for clinicians to focus on patient care. It integrates with the EHR to analyze patient records and provide insights that can help improve diagnosis and treatment. Regard has been shown to improve hospital finances, patient safety, and physician happiness.

TripleWhale

The TripleWhale website uses Cloudflare as a security service to protect itself from online attacks and block unauthorized access. Users may be blocked after triggers such as submitting specific words or phrases, SQL commands, or malformed data. In such cases, they can contact the site owner and provide details of the blocked action along with the Cloudflare Ray ID.

Ignota Labs

Ignota Labs is a technology company focused on rescuing failing drugs and bringing new life to abandoned projects, ultimately providing hope to patients. The company utilizes a proprietary AI model, SAFEPATH, which applies deep learning to bioinformatics and cheminformatics datasets to solve drug safety issues. Ignota Labs aims to identify promising drug targets, address safety problems in clinical trials, and accelerate the delivery of therapeutically effective drugs to patients.

icetana AI

icetana AI is a self-learning AI tool designed for real-time event detection in security surveillance systems. It seamlessly connects to existing security cameras, learns normal patterns, and highlights unusual events without compromising privacy. The system continuously evolves to improve security team decision-making. icetana AI offers a suite of products for safety and security, analytics, forensics, license plate recognition, facial recognition, and automating security workflows. It is ideal for industries like mall management, education, guarding services, safe cities, and more.

Yuna

Yuna is an AI-powered mental health companion designed to support users in navigating life's challenges and improving their well-being. It offers 24/7 emotional support, calming techniques for anxiety management, and personalized guidance based on cognitive behavioral therapy principles. Yuna prioritizes user privacy and safety, utilizing real-time monitoring to identify signs of distress and connect users with qualified healthcare professionals when necessary.

Aura

Aura is an all-in-one digital safety platform that uses artificial intelligence (AI) to protect your family online. It offers a wide range of features, including financial fraud protection, identity theft protection, VPN & online privacy, antivirus, password manager & smart vault, parental controls & safe gaming, and spam call protection. Aura is easy to use and affordable, and it comes with a 60-day money-back guarantee.

Seventh Sense

Seventh Sense is an AI company focused on providing cutting-edge AI solutions for secure and private identity verification. Their innovative technologies, such as SenseCrypt, OpenCV FR, and SenseVantage, offer advanced biometric verification, face recognition, and AI video analysis. With a mission to make self-sovereign identity accessible to all, Seventh Sense ensures privacy, security, and compliance through their AI algorithms and cryptographic solutions.

1 - Open Source AI Tools

awesome_LLM-harmful-fine-tuning-papers

This repository is a comprehensive survey of harmful fine-tuning attacks and defenses for large language models (LLMs). It provides a curated list of must-read papers on the topic, covering various aspects such as alignment stage defenses, fine-tuning stage defenses, post-fine-tuning stage defenses, mechanical studies, benchmarks, and attacks/defenses for federated fine-tuning. The repository aims to keep researchers updated on the latest developments in the field and offers insights into the vulnerabilities and safeguards related to fine-tuning LLMs.

20 - OpenAI GPTs

Brand Safety Audit

Get a detailed risk analysis for public relations, marketing, and internal communications, identifying challenges and negative impacts to refine your messaging strategy.

The Building Safety Act Bot (Beta)

Simplifying the BSA for your project. Created by www.arka.works

Hazard Analyst

Generates risk maps, emergency response plans and safety protocols for disaster management professionals.

Individual Intelligence Oriented Alignment

Ask this AI anything about alignment and it will describe the best course of action a superintelligence should take according to its alignment principles.

Together

GPT for drug interactions. Enter at least two medication names to learn about potential drug interactions.

PharmaFinder AI

Identifies medications and active ingredients from photos for user safety.

Chemistry Expert

Advanced AI for chemistry, offering innovative solutions, process optimizations, and safety assessments, powered by OpenAI.