Best AI tools for Generate LLM Context

20 - AI tool Sites

ContextClue

ContextClue is an AI text analysis tool that offers enhanced document insights through features like text summarization, report generation, and LLM-driven semantic search. It helps users summarize multi-format content, automate document creation, and enhance research by understanding context and intent. ContextClue empowers users to efficiently analyze documents, extract insights, and generate content with unparalleled accuracy. The tool can be customized and integrated into existing workflows, making it suitable for various industries and tasks.

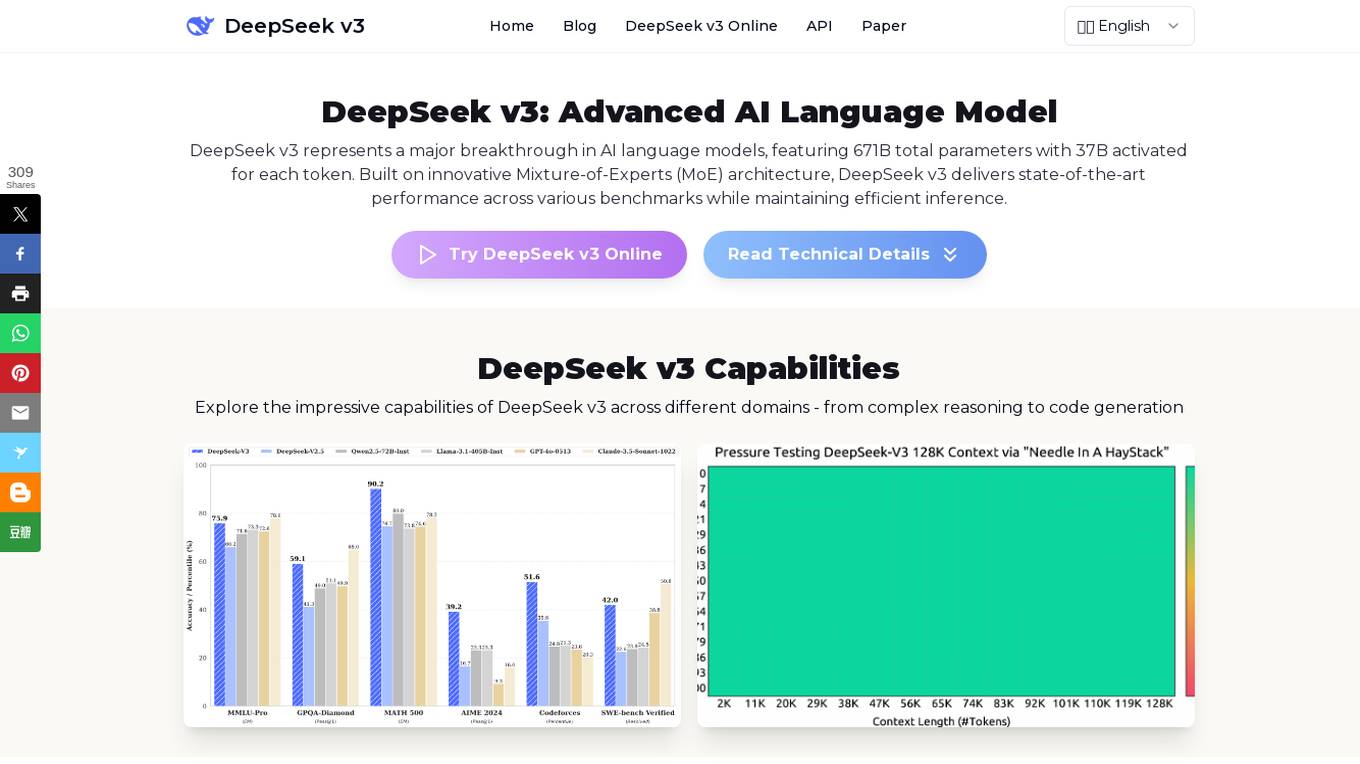

DeepSeek v3

DeepSeek v3 is an advanced AI language model built on a Mixture-of-Experts (MoE) architecture with 671B total parameters, delivering state-of-the-art performance across various benchmarks while maintaining efficient inference. It is pre-trained on 14.8 trillion high-quality tokens and excels in tasks such as text generation, code completion, and mathematical reasoning. It also offers a 128K context window and Multi-Token Prediction.

BackX

BackX is an AI development platform that empowers developers to quickly ship backends across various use cases, environments, and scales. It offers unparalleled accuracy, flexibility, and efficiency by overcoming the limitations of traditional AI-assisted programming. With features like one-click production-grade code, context-aware consistent code output, versioned artifacts, instant deploy, and a suite of AI-powered dev tools, BackX revolutionizes the backend development process. Developers can effortlessly design and manage databases, generate CRUD operations, implement complex business logic, and deploy serverless applications with ease. The platform aims to streamline development processes, increase cost-effectiveness, and provide more accurate outputs than traditional methods.

Yellow.ai

Yellow.ai is a leading provider of AI-powered customer service automation solutions. Its Dynamic Automation Platform (DAP) is built on multi-LLM architecture and continuously trains on billions of conversations for scale, speed, and accuracy. Yellow.ai's platform leverages the latest advancements in NLP and generative AI to deliver empathetic and context-aware conversations that exceed customer expectations across channels. With its enterprise-grade security, advanced analytics, and zero-setup bot deployment, Yellow.ai helps businesses transform their customer and employee experiences with AI-powered automation.

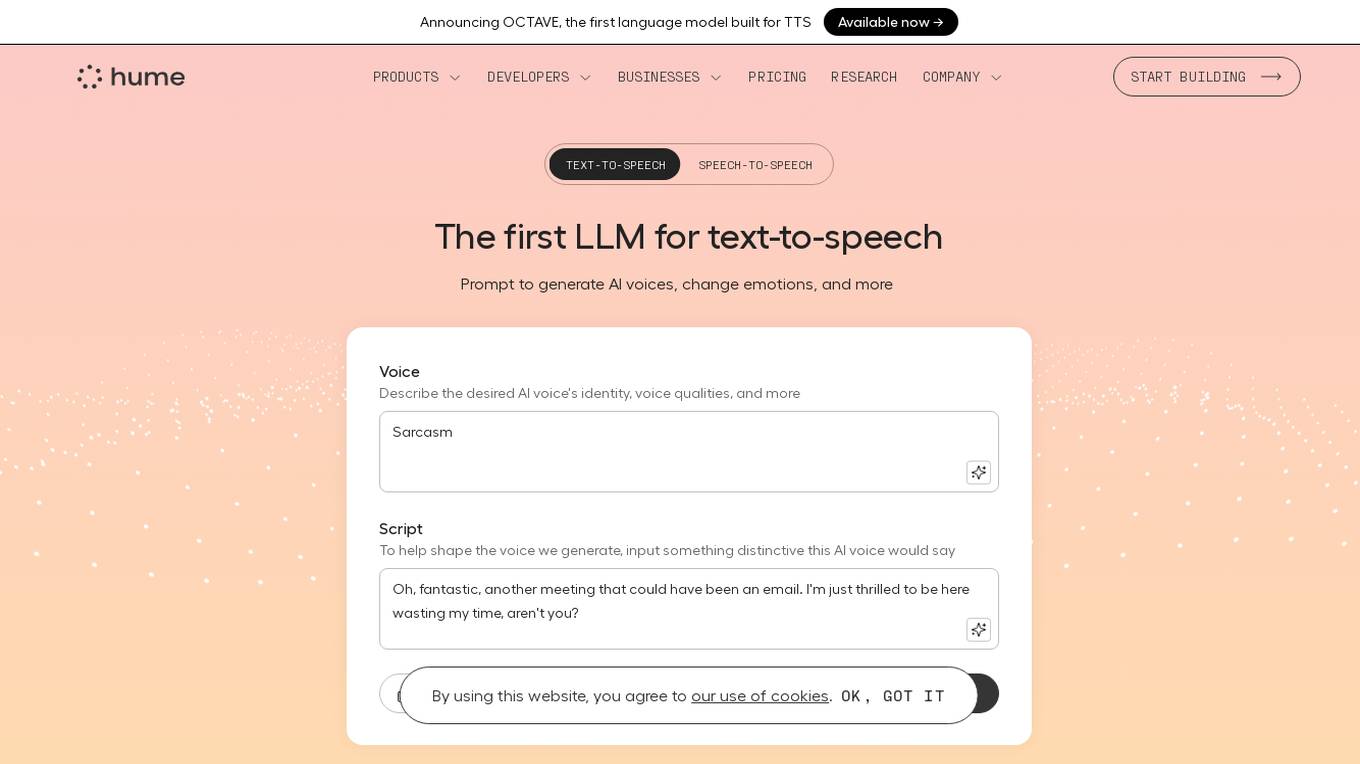

Hume AI - Octave

Hume AI is an AI application that offers the Octave language model for text-to-speech (TTS) capabilities. It provides a voice-based LLM that understands words in context to predict emotions, cadence, and more. Users can create various AI voices with specific prompts and scripts, adjusting emotional delivery and speaking styles on command. The application aims to generate expressive AI voices for podcasts, voiceovers, audiobooks, and more, with total control over the voice output.

LangChain

LangChain is an AI tool that offers a suite of products supporting developers in the LLM application lifecycle. It provides a framework to construct LLM-powered apps easily, visibility into app performance, and a turnkey solution for serving APIs. LangChain enables developers to build context-aware, reasoning applications and future-proof their applications by incorporating vendor optionality. LangSmith, a part of LangChain, helps teams improve accuracy and performance, iterate faster, and ship new AI features efficiently. The tool is designed to drive operational efficiency, increase discovery & personalization, and deliver premium products that generate revenue.

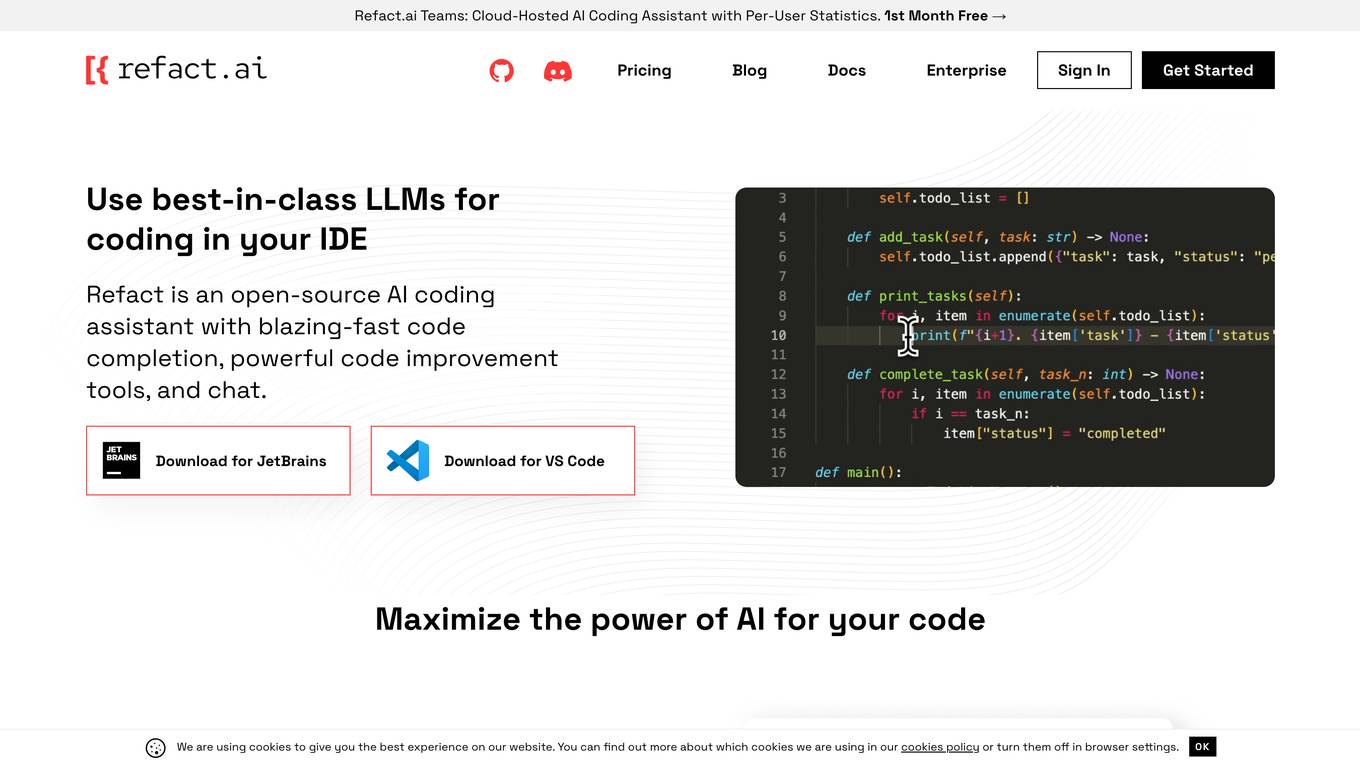

Refact.ai

Refact.ai is an open-source AI coding assistant that offers a range of features including code completion, refactoring, and chat. It supports various LLMs such as GPT-4 and Code Llama, allowing users to choose the model that best suits their needs. Refact understands the context of the codebase using a fill-in-the-middle technique, providing relevant suggestions. Users can opt for a self-hosted version or adjust privacy settings for the plugin.
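
As a rough illustration of what a fill-in-the-middle prompt looks like in general, the sketch below splits a source file at the cursor and wraps the two halves in sentinel markers. This is not Refact's implementation: the function name `build_fim_prompt` is hypothetical, and the sentinel strings are placeholders, since each model defines its own.

```python
def build_fim_prompt(
    source: str,
    cursor: int,
    prefix_tok: str = "<fim_prefix>",   # placeholder sentinel, model-dependent
    suffix_tok: str = "<fim_suffix>",   # placeholder sentinel, model-dependent
    middle_tok: str = "<fim_middle>",   # placeholder sentinel, model-dependent
) -> str:
    """Build a fill-in-the-middle prompt from the text around the cursor."""
    # Everything before the cursor is the prefix, everything after is the suffix.
    prefix, suffix = source[:cursor], source[cursor:]
    # The model is expected to generate the missing middle after the last marker.
    return f"{prefix_tok}{prefix}{suffix_tok}{suffix}{middle_tok}"


code = "def add(a, b):\n    return \n"
cursor = code.index("return ") + len("return ")
prompt = build_fim_prompt(code, cursor)
```

The point of the layout is that the model sees the code after the cursor as well as before it, so a completion can take both sides into account.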

Priorli

Priorli is an AI-powered content creation tool that helps you generate high-quality content quickly and easily. With Priorli, you can create blog posts, articles, social media posts, and more, in just a few clicks. Priorli's AI engine analyzes your input and generates unique, engaging content that is tailored to your specific needs.

Private LLM

Private LLM is a secure, local, and private AI chatbot designed for iOS and macOS devices. It operates offline, ensuring that user data remains on the device, providing a safe and private experience. The application offers a range of features for text generation and language assistance, utilizing state-of-the-art quantization techniques to deliver high-quality on-device AI experiences without compromising privacy. Users can access a variety of open-source LLM models, integrate AI into Siri and Shortcuts, and benefit from AI language services across macOS apps. Private LLM stands out for its superior model performance and commitment to user privacy, making it a smart and secure tool for creative and productive tasks.

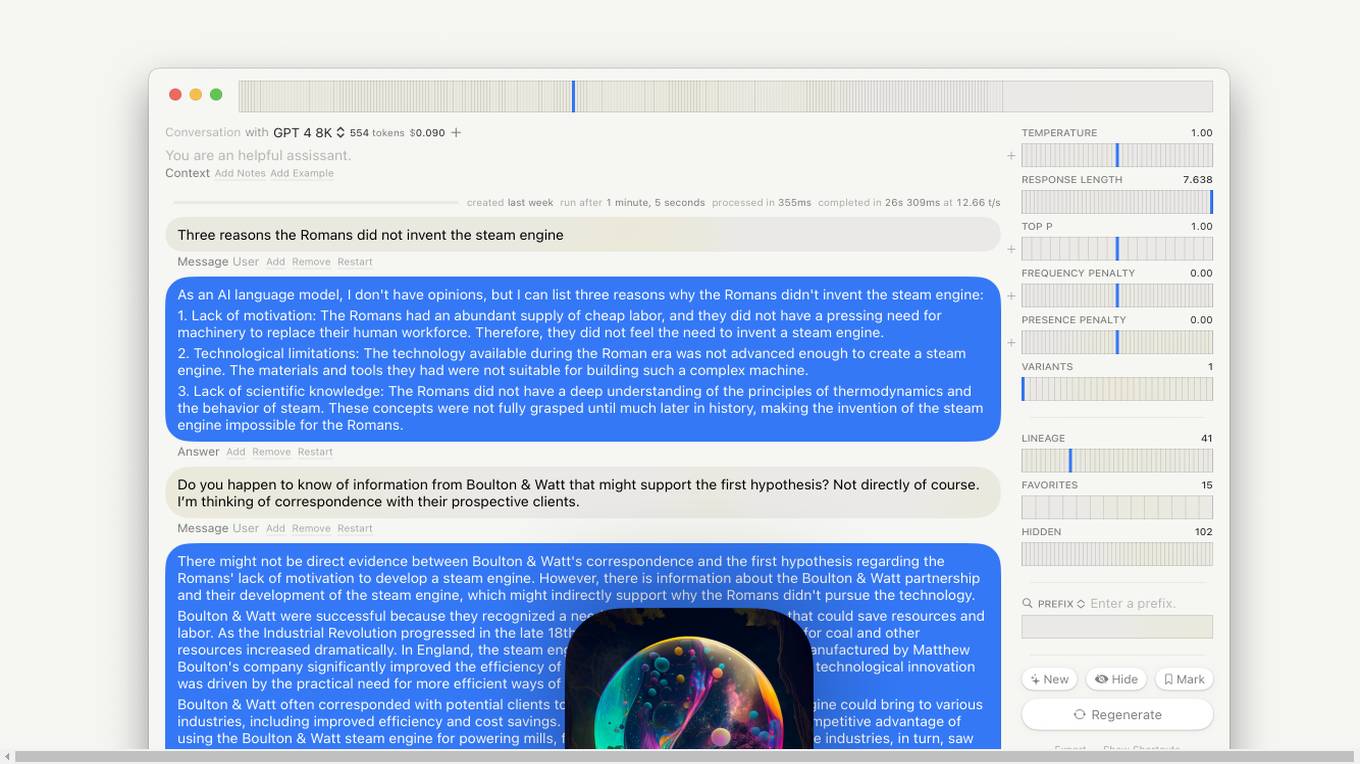

Lore macOS GPT-LLM Playground

Lore macOS GPT-LLM Playground is an AI tool designed for macOS users, offering multi-model support, time-travel versioning, combinatorial runs, variants, full-text search, model-cost awareness, API and token stats, custom endpoints, local models, and tables. It provides a user-friendly interface with features like Syntax, LaTeX Notes Export, Shortcuts, Vim Mode, and Sandbox. The tool is built with Cocoa, SwiftUI, and SQLite, with a focus on privacy, and offers support and feedback channels.

LLM Quality Beefer-Upper

LLM Quality Beefer-Upper is an AI tool designed to enhance the quality and productivity of LLM responses by automating critique, reflection, and improvement. Users can generate multi-agent prompt drafts, choose from different quality levels, and upload knowledge text for processing. The application aims to maximize output quality by utilizing the best available LLM models in the market.

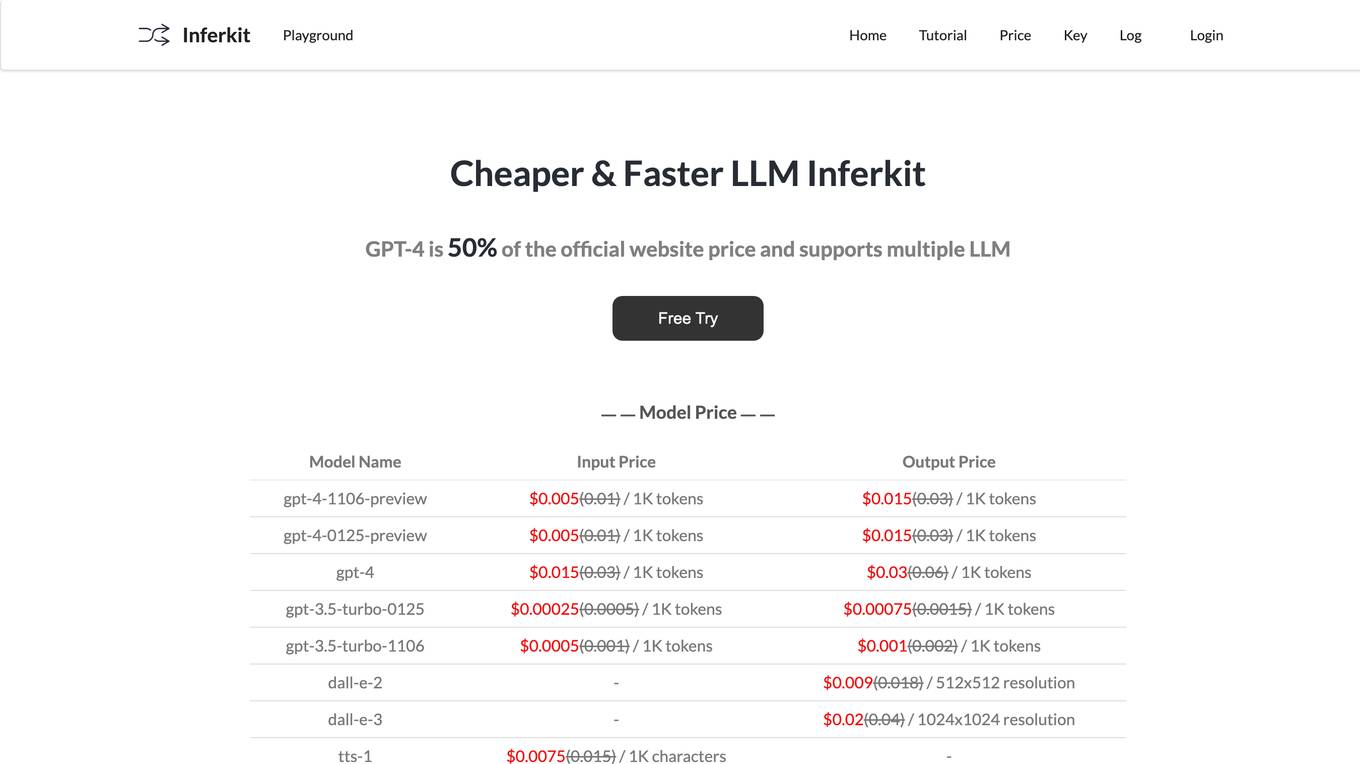

Inferkit AI

Inferkit AI is an AI tool that offers a cheaper and faster LLM router. It provides users with the ability to generate text content efficiently and cost-effectively. The tool is designed to assist users in creating various types of written content, such as articles, stories, and more, by leveraging advanced language models. Inferkit AI aims to streamline the content creation process and enhance productivity for individuals and businesses alike.

WooKeys AI

WooKeys AI is an all-in-one platform for generating AI content. It offers a wide range of features, including text, image, code, video, audio, and music generation. WooKeys AI also provides an advanced dashboard for LLM observability, user management, credits monitoring, and tracing. Additionally, it offers project management capabilities, including project creation, team collaboration, and Kanban tracking. WooKeys AI supports multiple languages and allows users to create custom prompt templates. It also enables easy sharing of generated content in various formats and on different channels. WooKeys AI is designed to serve a wide range of users, including businesses, marketers, writers, and developers.

Novus Writer

Novus Writer is a customizable, on-premise AI and LLM solution designed to enhance efficiency in various business functions, including sales, finance, customer support, and more. It offers a range of features such as plagiarism detection, long-form generation, proofreading, fact checking, and custom AI agents. Novus Writer is trusted by enterprises for its streamlined processes, advanced data analysis capabilities, and compliance with industry standards.

Files2Prompt

Files2Prompt is a free online tool that allows you to convert files to text prompts for large language models (LLMs) like ChatGPT, Claude, and Gemini. With Files2Prompt, you can easily generate prompts from various file formats, including Markdown, JSON, and XML. The converted prompts can be used to ask questions, generate text, translate languages, write different kinds of creative content, and more.
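
The general idea of turning files into a prompt can be sketched in a few lines. The example below is only an assumption about how such a conversion might work, not Files2Prompt's actual output format: the function name `files_to_prompt` and the `--- filename ---` delimiter are hypothetical.

```python
from pathlib import Path


def files_to_prompt(paths, question=""):
    """Concatenate text files into one prompt, labelling each with its name."""
    parts = []
    for path in map(Path, paths):
        body = path.read_text(encoding="utf-8")
        # Hypothetical delimiter so the model can tell the files apart.
        parts.append(f"--- {path.name} ---\n{body.rstrip()}")
    prompt = "\n\n".join(parts)
    if question:
        # Put the user's question after the file contents.
        prompt += f"\n\nQuestion: {question}"
    return prompt
```

Calling `files_to_prompt(["notes.md", "data.json"], question="What changed?")` would return a single string that can be pasted into a chat with an LLM.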

RecurseChat

RecurseChat is a personal AI chat app that is local, offline, private, and secure. It allows users to chat with a local LLM, import ChatGPT history, chat with multiple models in one chat session, and use multimodal input. It is also customizable to the core.

SvahaMe.ai

SvahaMe.ai is an AI-powered platform that offers Vedic Astrology services, providing interpretations based on the positions of planets during one's birth. It aims to make Vedic Astrology easily understandable and accessible, utilizing artificial intelligence to analyze over 20 Vedic charts for a comprehensive astrological profile. The platform also features an auspicious name generator and tools to explore celestial energies and cosmic identities. SvahaMe.ai emphasizes privacy and ethical standards, ensuring user data confidentiality.

WeGPT.ai

WeGPT.ai is an AI tool that focuses on enhancing Generative AI capabilities through Retrieval Augmented Generation (RAG). It provides versatile tools for web browsing, REST APIs, image generation, and coding playgrounds. The platform offers consumer and enterprise solutions, multi-vendor support, and access to major frontier LLMs. With a comprehensive approach, WeGPT.ai aims to deliver better results, user experience, and cost efficiency by keeping AI models up-to-date with the latest data.

Notification Harbor

Notification Harbor is an email marketing platform that uses Large Language Models (LLMs) to help businesses create and send more effective email campaigns. With Notification Harbor, businesses can use LLMs to generate personalized email content, optimize subject lines, and even create entire email campaigns from scratch. Notification Harbor is designed to make email marketing easier and more effective for businesses of all sizes.

Genailia

Genailia is an AI platform that offers a range of products and services such as translation, transcription, chatbot, LLM, GPT, TTS, ASR, and social media insights. It harnesses AI to redefine possibilities by providing generative AI, linguistic interfaces, accelerators, and more in a single platform. The platform aims to streamline various tasks through AI technology, making it a valuable tool for businesses and individuals seeking efficient solutions.

1 - Open Source AI Tools

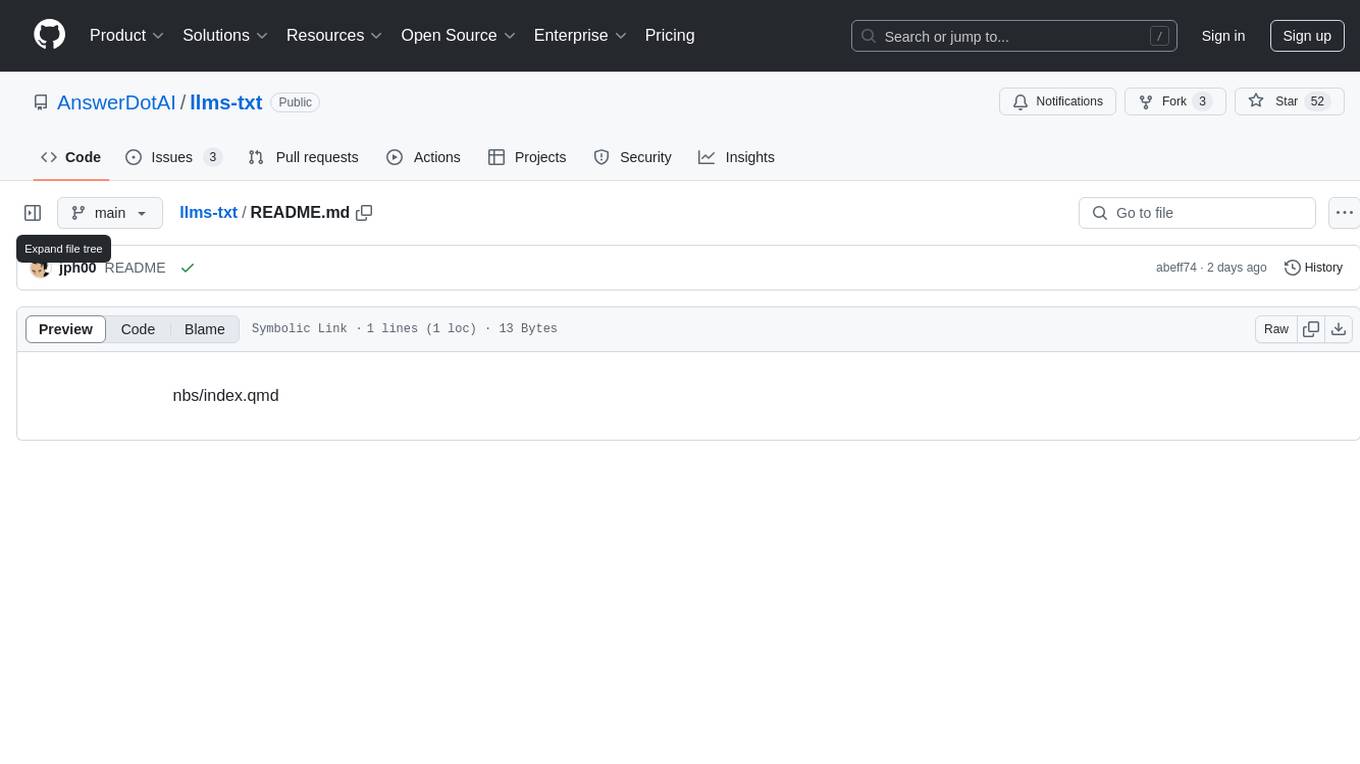

llms-txt

The llms-txt repository proposes a standard for using an `/llms.txt` file to provide information that helps large language models (LLMs) use a website at inference time. The `llms.txt` file is a markdown file that offers brief background information, guidance, and links to more detailed information in markdown files. It aims to provide concise, structured information that LLMs can access easily, helping users interact with websites via AI helpers. The repository also includes tools like a CLI and Python module for parsing `llms.txt` files and generating LLM context from them, along with a sample JavaScript implementation. The proposal suggests adding clean markdown versions of web pages alongside the original HTML pages to improve LLM readability and access to essential information.
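
To make the idea concrete, here is a minimal sketch of parsing an `llms.txt`-style markdown file and flattening it into a context string. It does not use the repository's own CLI or Python module; the helper names `parse_llms_txt` and `to_context`, the sample file, and the exact layout it assumes (a `#` title, a `>` summary, and `##` sections of `- [title](url): note` links) are assumptions made for illustration.

```python
import re

# Matches list items such as "- [Title](https://example.com/page.md): note"
LINK_RE = re.compile(
    r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)]+)\)(?::\s*(?P<note>.*))?$"
)


def parse_llms_txt(text):
    """Extract the title, summary, and per-section links from the markdown."""
    result = {"title": None, "summary": None, "sections": {}}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# ") and result["title"] is None:
            result["title"] = line[2:].strip()
        elif line.startswith("> ") and result["summary"] is None:
            result["summary"] = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            result["sections"][current] = []
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                result["sections"][current].append(match.groupdict())
    return result


def to_context(parsed):
    """Flatten the parsed structure into plain text for an LLM prompt."""
    lines = [f"Site: {parsed['title']}", f"Summary: {parsed['summary']}"]
    for name, links in parsed["sections"].items():
        lines.append(f"[{name}]")
        for link in links:
            note = f" ({link['note']})" if link["note"] else ""
            lines.append(f"- {link['title']}: {link['url']}{note}")
    return "\n".join(lines)


# Hypothetical sample file, for illustration only.
SAMPLE = """# Example Project

> A short summary of the project.

## Docs

- [Quick start](https://example.com/quickstart.md): How to get going
- [API](https://example.com/api.md)
"""

parsed = parse_llms_txt(SAMPLE)
context = to_context(parsed)
```

A fuller tool would also fetch the linked markdown files and append their contents, but the sketch stops at listing them.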

20 - OpenAI GPTs

Agent Prompt Generator for LLM's

This GPT generates the best possible LLM-agents for your system prompts. You can also specify the model size, like 3B, 33B, 70B, etc.

NEO - Ultimate AI

I imitate GPT-5 LLM, with advanced reasoning, personalization, and higher emotional intelligence

EmotionPrompt(LLM→人間ver.)

Built on the EmotionPrompt technique, but contrary to the original theory, here the LLM sends prompts to the human. Details of the original technique: https://ai-data-base.com/archives/58158

Prompt For Me

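🪄 One-click prompt enhancement that aligns with your needs quickly and precisely, for more efficient conversations with AI. 🧙 Unlock the potential of LLMs so that ChatGPT and Claude understand you better and you stay a step ahead at work. 🧸 Your AI conversation partner: tailor it to your own needs and easily start high-quality conversations.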

GPT Detector

ChatGPT Detector quickly finds AI writing from ChatGPT, LLMs, Bard, and GPT-4. It's easy and fast to use!

DataLearnerAI-GPT

Uses current OpenLLMLeaderboard data to answer your questions about LLMs.

Angular Architect AI: Generate Angular Components

Generates Angular components based on requirements, with a focus on code-first responses.

🖌️ Line to Image: Generate The Evolved Prompt!

Transforms lines into detailed prompts for visual storytelling.

Generate text imperceptible to detectors.

Discover how your writing can shine with a unique and human style. This prompt guides you to create rich and varied texts, surprising with original twists and maintaining coherence and originality. Transform your writing and challenge AI detection tools!

Fantasy Banter Bot - Special Teams

I generate witty trash talk for fantasy football leagues.