Best AI tools for < Generate Kernels >

20 - AI Tool Sites

Self-Introduction Generate AI

Self-Introduction Generate AI is an innovative platform designed to assist individuals and businesses in crafting compelling and effective self-introductions. It leverages advanced AI technology to understand context and generate personalized content. The platform can analyze and understand various types of input, including text and context, to generate tailored self-introductions that are engaging and informative, enhancing personal and professional branding. With features like quick response times, quality assurance, and specialized service for self-introductions, it is an ideal tool for job applications, networking events, and personal branding initiatives.

Nextatlas Generate Suite

Nextatlas Generate Suite is an AI-powered trend forecasting service for market research, offering insights into market trends and consumer behavior. It provides a full array of specialized assistants to jump-start a team's work, including scouting innovation, planning multiple scenarios, and advising on brand strategy. The suite features GenAI Agents for efficient workflows, Persona Generator Agent for persona development, Generate Chat for advanced insights, Innovation Tracker for tracking tech advancements, Sentiment Pulse Agent for real-time insights, Subculture Scout for engaging specific audiences, and Sustainability Scout for tracking emerging trends and regulations.

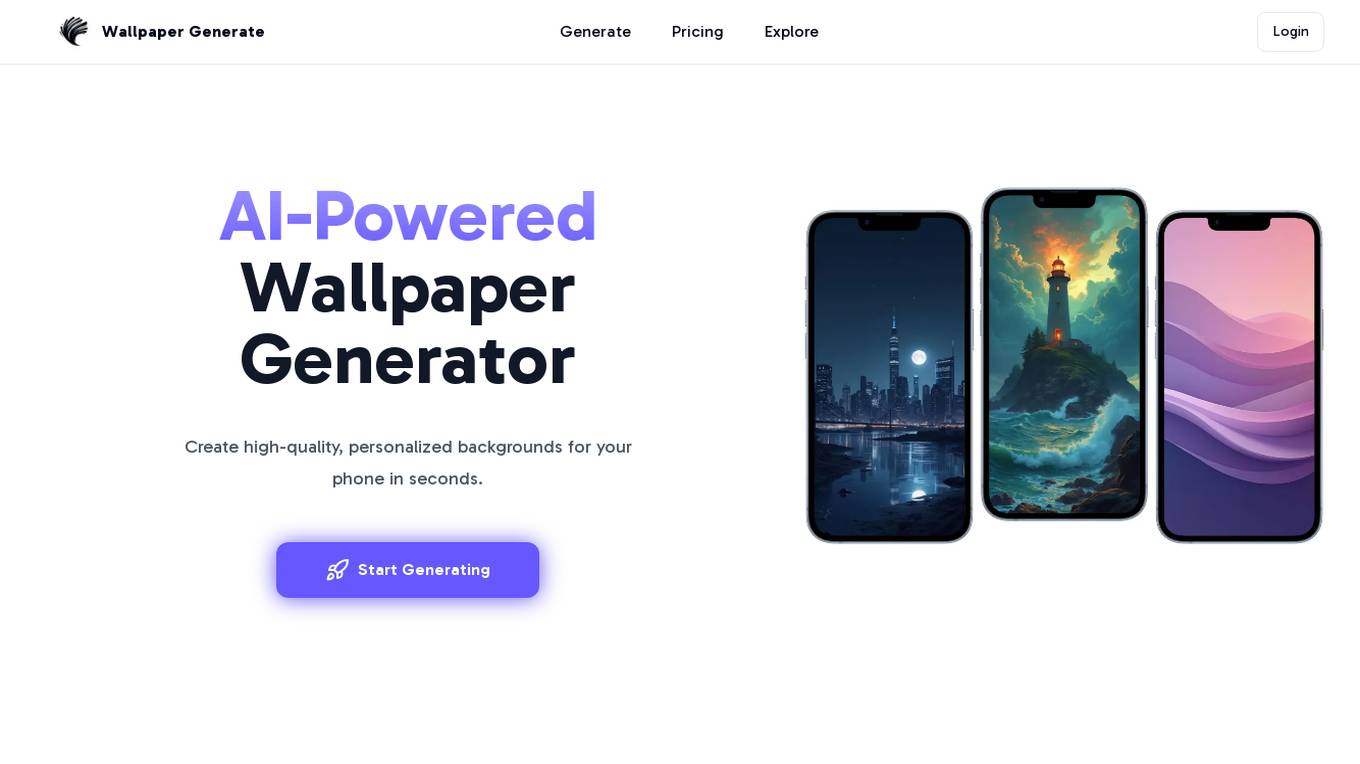

Wallpaper Generate

Wallpaper Generate is an AI-powered tool that allows users to create high-quality, personalized backgrounds for their phones in seconds. The platform offers a range of wallpaper styles, from nature and abstract to retro and vintage designs. Users can design and customize wallpapers by sharing their vision with the AI, selecting a style, and customizing colors. Wallpaper Generate offers infinite wallpaper possibilities, a seamless creation process, high-quality designs, a user-friendly design experience, and organized cloud storage integration.

ZMO.AI

ZMO.AI is a free AI image generator tool that allows users to create stunning AI art, images, anime, and realistic photos from text or images with a simple click of a button. The tool offers a full suite of powerful features to generate, remove, expand, or edit images like a pro using AI magic. With ZMO.AI, users can effortlessly generate anime and manga characters, flawless portrait photos, and realistic backgrounds. The application is trusted by over 1,000,000 users worldwide for its high-quality AI image generation capabilities.

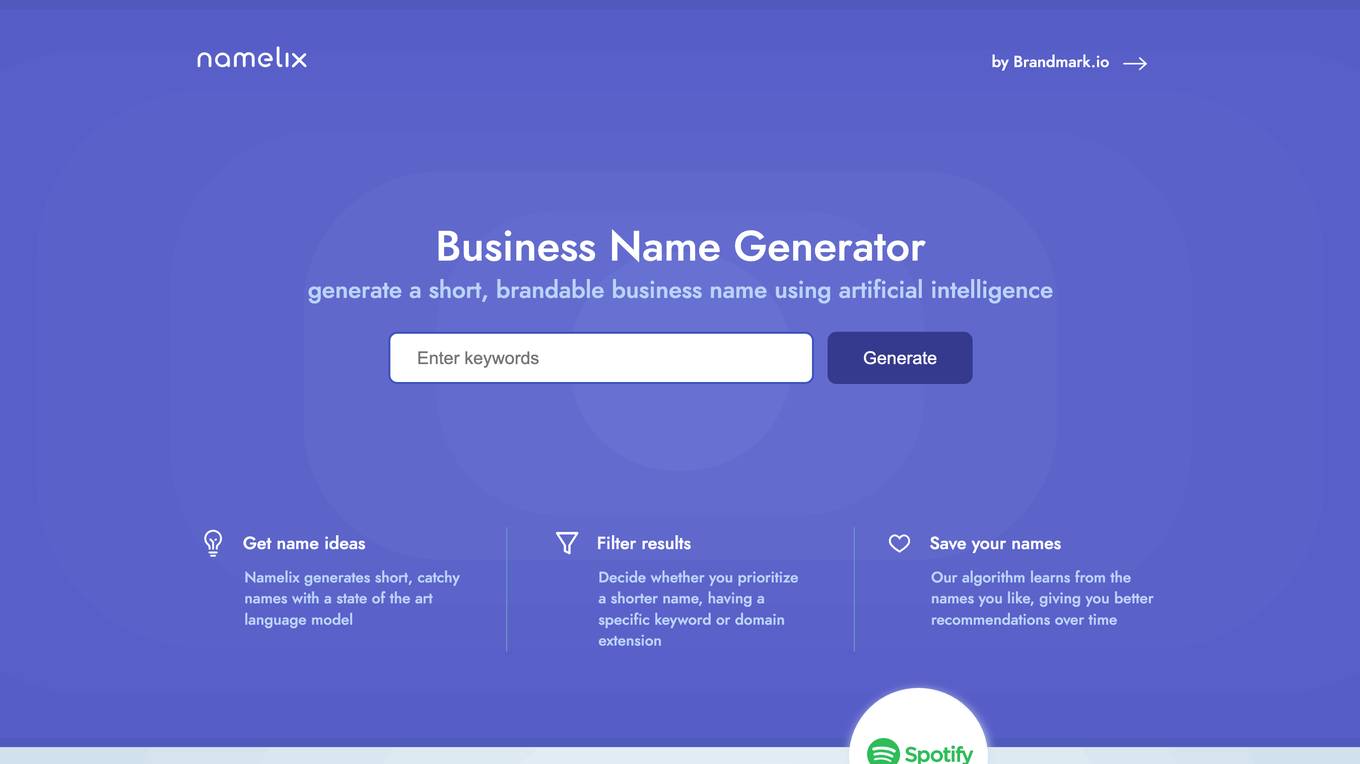

Namelix

Namelix by Brandmark.io is a free AI-powered business name generator that helps users create short, brandable names using artificial intelligence. The tool generates catchy names with a state-of-the-art language model, allows users to filter results based on preferences, and saves preferred names to provide better recommendations over time. Namelix aims to address the challenge of limited naming options for new businesses by offering unique, memorable, and affordable branded names. The tool also assists in creating professional logos for businesses.

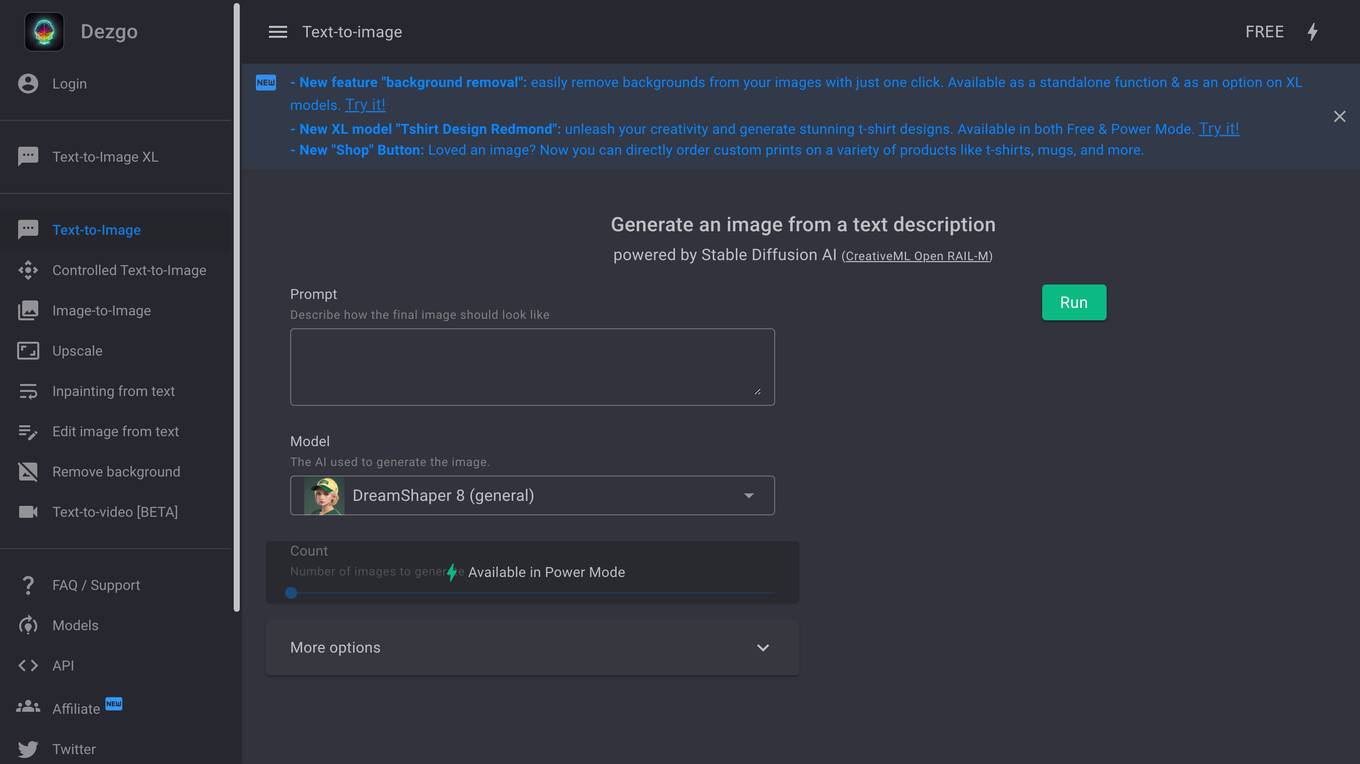

Dezgo

Dezgo is a text-to-image AI image generator powered by Stable Diffusion AI. It allows users to generate images from text descriptions. The tool offers various features such as controlled text-to-image, image-to-image upscale, inpainting from text, editing images from text, removing backgrounds, and text-to-video generation. Dezgo also provides access to models, APIs, and an affiliate program.

AI Story Generator

This free AI story generator can help you create unique and engaging stories in seconds. Simply enter a few details about your story, and our AI will generate a complete story for you. You can use this tool to generate story ideas, write short stories, or even create entire novels.

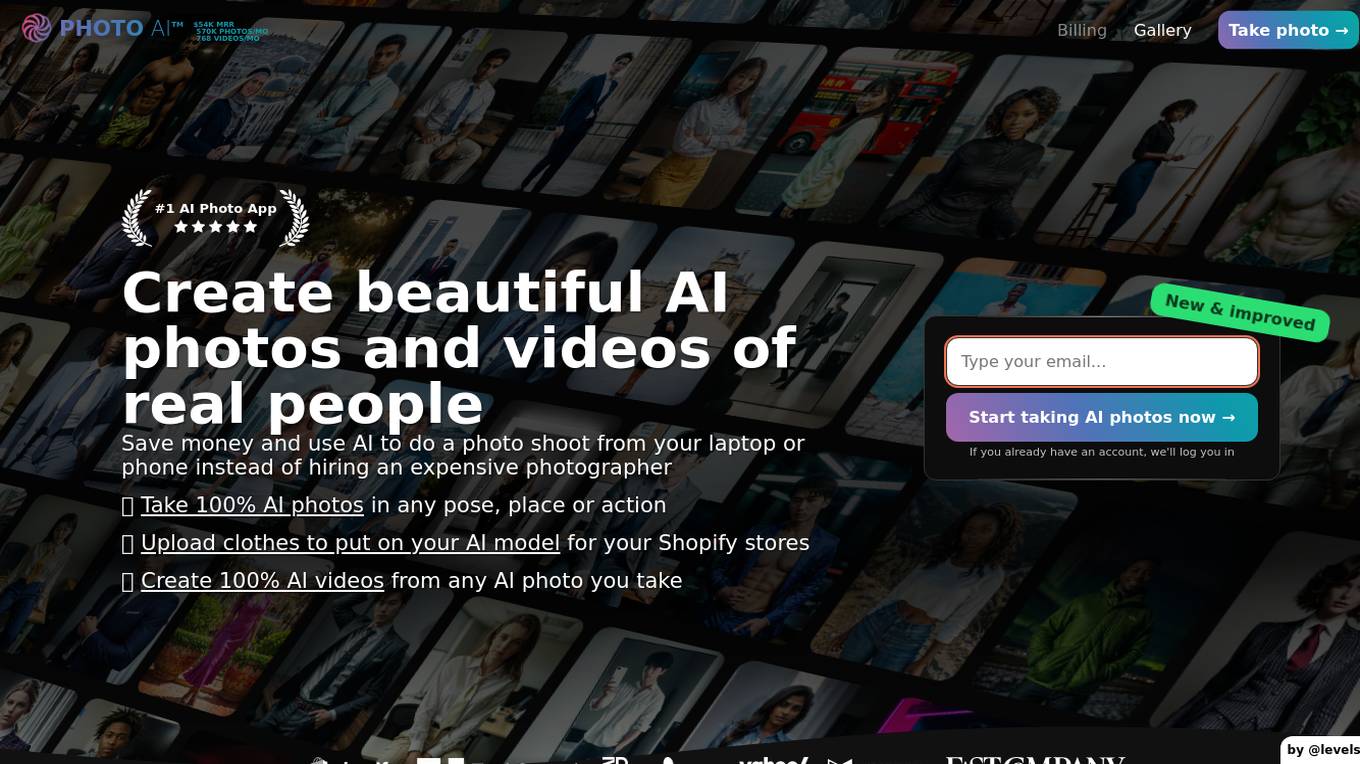

Photo AI

Photo AI is an AI-powered photo generator that allows users to create realistic images of people in various poses, settings, and actions. With Photo AI, users can upload their selfies to create their own AI model, which can then be used to generate photos in any pose, place, or action. Photo AI also offers a variety of photo packs, which provide users with pre-made photo templates and prompts. Additionally, Photo AI allows users to upload clothes to dress their AI model, and to create AI-generated fashion designs with Sketch2Image.

Qodo

Qodo is a quality-first generative AI coding platform that helps developers write, test, and review code within IDE and Git. The platform offers automated code reviews, contextual suggestions, and comprehensive test generation, ensuring robust, reliable software development. Qodo integrates seamlessly to maintain high standards of code quality and integrity throughout the development process.

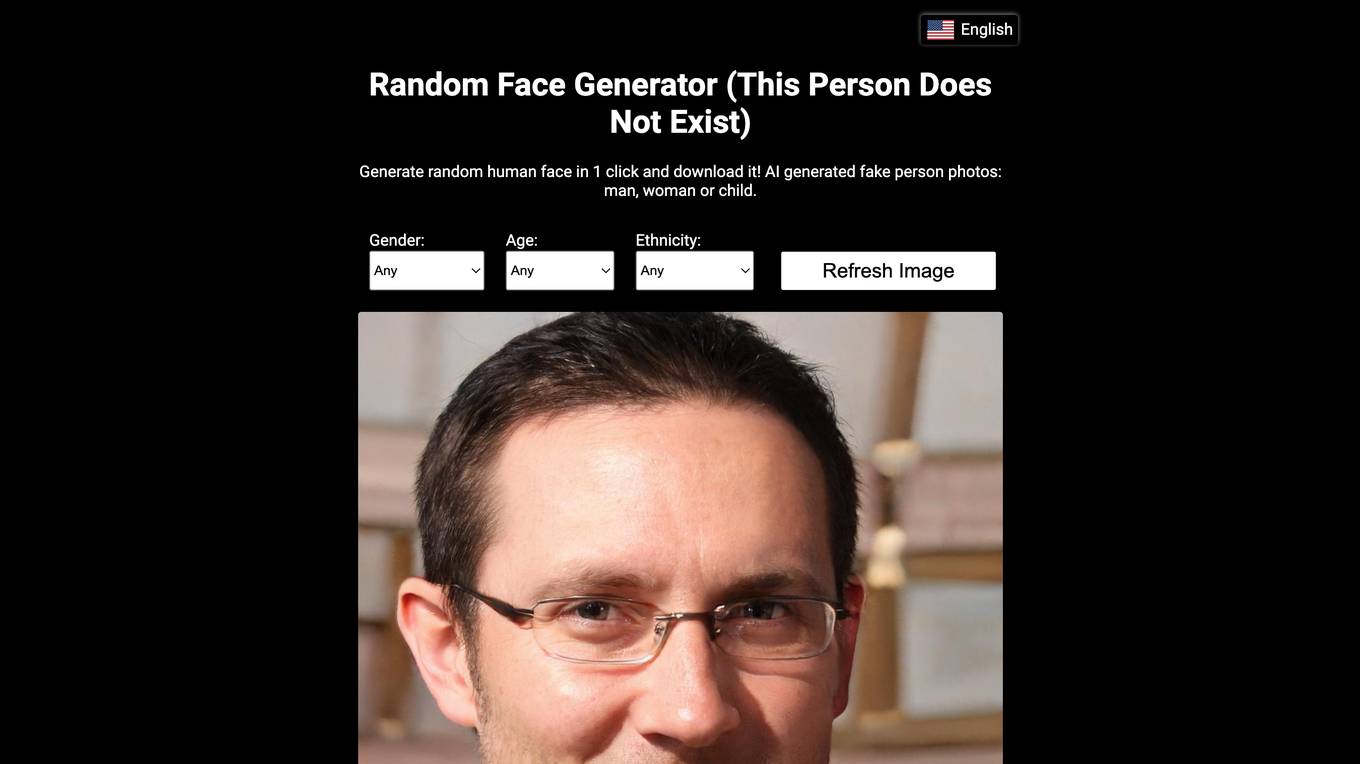

This Person Does Not Exist

This Person Does Not Exist is a website that generates random, realistic faces of people who do not exist. The website uses a neural network called StyleGAN, developed by Nvidia, to create these faces. StyleGAN is a generative adversarial network (GAN), which is a type of machine learning algorithm that can generate new data from a given dataset. In the case of StyleGAN, the dataset is a collection of images of human faces. The GAN is trained on this dataset, and it learns to generate new faces that are realistic and indistinguishable from real faces.

ZMO.AI

ZMO.AI is a free AI Image Generator that allows users to create stunning AI art, images, anime, and realistic photos from text or images with a simple click of a button. The platform offers a full suite of powerful AI image generation tools, including AI Photo Editor, AI Anime Generator, AI Background Changer, AI Video Generator, and more. Trusted by over 1,000,000 users worldwide, ZMO.AI provides studio-quality photo editing capabilities, background removal, image generation, and editing features powered by AI magic. Users can easily generate high-quality anime, manga characters, portraits, and images with versatile styles using the AI tools available on the platform.

SEO Writing AI

SEO Writing AI is an AI-powered writing tool that helps users create SEO-optimized articles, blog posts, and affiliate content in just a few clicks. With its user-friendly interface and advanced features, SEO Writing AI makes it easy for anyone to generate high-quality content that ranks well in search results. Some of the key features of SEO Writing AI include the ability to generate articles in over 48 languages, automatically post articles to WordPress, and optimize content for specific keywords. SEO Writing AI also offers a variety of templates and tools to help users create engaging and informative content. Overall, SEO Writing AI is a valuable tool for anyone who wants to improve their content marketing efforts.

NICE

NICE is a leading Customer Experience (CX) AI Platform offering a range of AI-driven solutions for businesses to enhance customer experiences, optimize workforce engagement, and improve operational efficiency. The platform provides AI companions for contact center employees, AI-driven customer self-service, AI tools for CX leaders, and actionable insights through CX analytics. NICE also offers solutions tailored for various industries such as healthcare, retail, financial services, insurance, telecom, travel, and hospitality. With a focus on transforming experiences with AI, NICE helps businesses identify behaviors that drive frictionless customer experiences, boost customer loyalty, empower agents, and drive digital transformation.

OnlyWaifus.ai

OnlyWaifus.ai is an AI-powered tool that allows users to generate uncensored, photorealistic images of anime-style female characters. The tool is easy to use and requires no technical knowledge. Users simply need to describe the waifu they want to generate, and the tool will create an image that matches their specifications. OnlyWaifus.ai offers a variety of different styles to choose from, so users can create waifus that are cute, sexy, or even dark and twisted. The tool is also constantly being updated with new features and content, so users can always find something new to enjoy.

GlamGirls.ai

GlamGirls.ai is a website that allows users to generate AI-powered virtual girlfriends. Users can choose from a variety of different girlfriends, each with her own unique personality and appearance. GlamGirls.ai also offers a variety of features that allow users to interact with their girlfriends, such as chatting, sending gifts, and going on dates.

Elai.io

Elai.io is an advanced tool that uses artificial intelligence to simplify and automate the video creation process. Our aim at Elai.io is to offer a user-friendly platform that welcomes individuals at any skill level in video production. Users can outline their video’s theme and narrative through an easy-to-navigate text interface, enriching it further with multimedia components such as images, text, and sound. This synergy between AI and user creativity enables the crafting of videos that are rich in information and visually stunning.

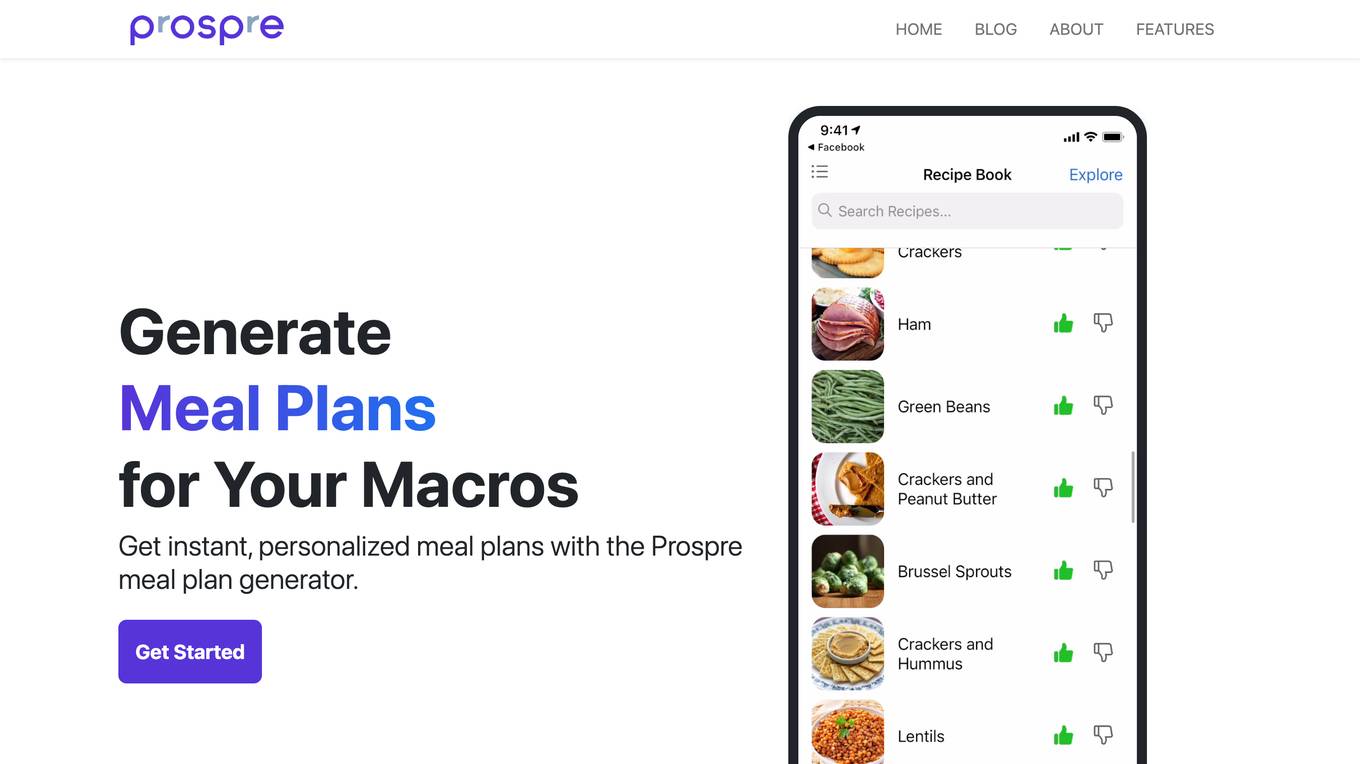

Prospre

Prospre is a meal planning app that helps users create personalized meal plans based on their calorie and macro goals. The app also includes a macro tracker, a recipe database, and a grocery list generator. Prospre is designed to make it easy for users to eat healthy and reach their fitness goals.

Sloyd

Sloyd is an AI-powered 3D model generator that allows users to create 3D models from text prompts. The platform offers a wide range of features, including a huge 3D model library, easy customization of 3D models, and ready-to-use 3D models. Sloyd is ideal for game developers, designers, and 3D enthusiasts who need to create high-quality 3D models quickly and efficiently.

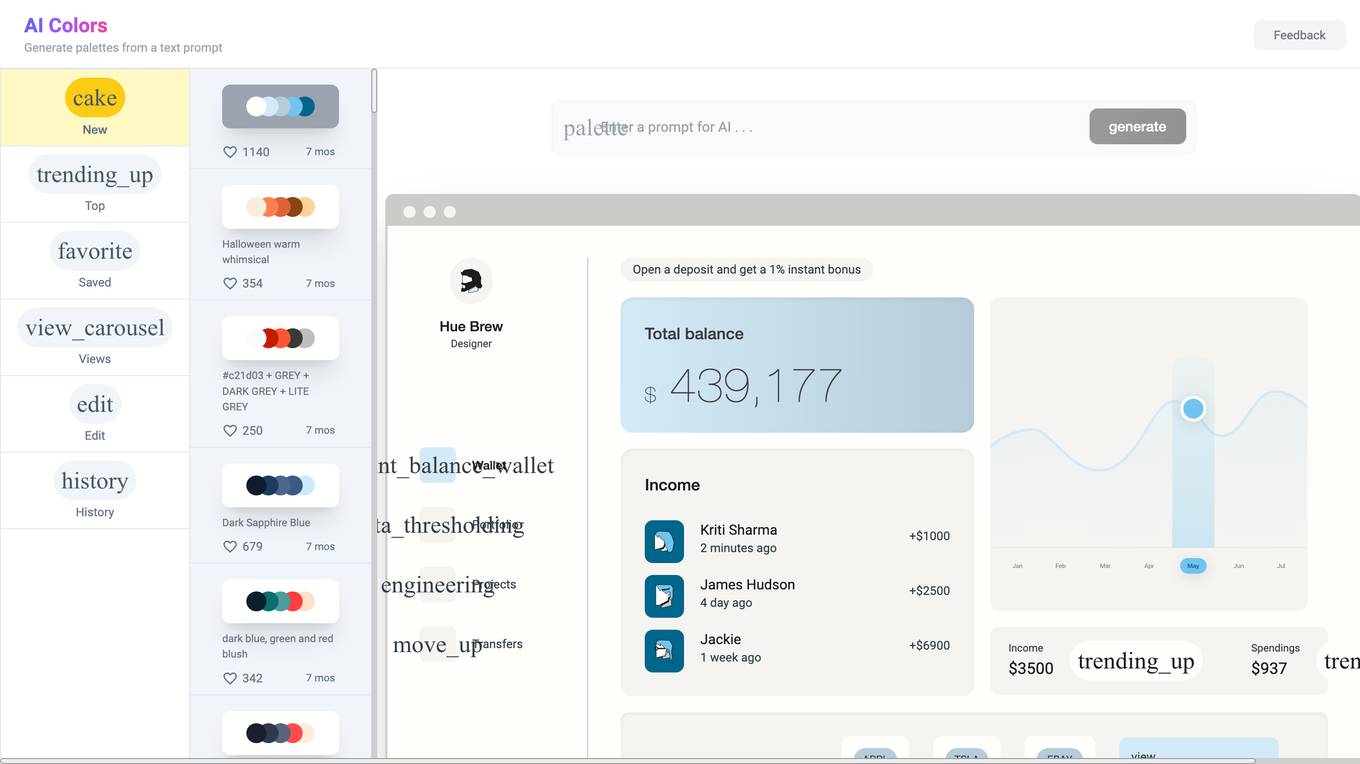

AI Color Palette Generator

The AI Color Palette Generator is a web-based tool that allows users to browse, edit, visualize, and generate unique color palettes. It features a library of pre-made palettes, as well as the ability to create custom palettes from scratch. The tool also includes a variety of features to help users visualize and compare different color combinations.

1 - Open Source AI Tools

KernelBench

KernelBench is a benchmark tool designed to evaluate Large Language Models' (LLMs) ability to generate GPU kernels. It focuses on transpiling operators from PyTorch to CUDA kernels at different levels of granularity. The tool categorizes problems into four levels, ranging from single-kernel operators to full model architectures, and assesses solutions based on compilation, correctness, and speed. The repository provides a structured directory layout, setup instructions, usage examples for running single or multiple problems, and upcoming roadmap features like additional GPU platform support and integration with other frameworks.
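The three checks named above (compilation, correctness, speed) can be illustrated with a minimal sketch in plain Python. This is not KernelBench's actual harness or API: `evaluate`, `EvalResult`, `relu_reference`, and the candidate source strings are hypothetical names chosen for illustration, and Python's built-in `compile`/`exec` stands in for building a CUDA kernel.

```python
import random
import time
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class EvalResult:
    compiled: bool
    correct: bool
    speedup: Optional[float]  # reference time / candidate time; None if not measured


def relu_reference(x: List[float]) -> List[float]:
    """Reference operator (stand-in for a PyTorch op)."""
    return [max(0.0, v) for v in x]


def evaluate(reference: Callable[[List[float]], List[float]],
             candidate_src: str,
             entry_point: str = "candidate",
             trials: int = 5,
             size: int = 1000,
             tol: float = 1e-6) -> EvalResult:
    # Stage 1: compilation. Assumption: exec() stands in for a real kernel build.
    namespace: dict = {}
    try:
        exec(compile(candidate_src, "<candidate>", "exec"), namespace)
        candidate = namespace[entry_point]
    except Exception:
        return EvalResult(False, False, None)

    # Stage 2: correctness against the reference on seeded random inputs.
    rng = random.Random(0)
    for _ in range(trials):
        x = [rng.uniform(-1.0, 1.0) for _ in range(size)]
        want = reference(list(x))
        try:
            got = candidate(list(x))
            ok = len(got) == len(want) and all(
                abs(g - w) <= tol for g, w in zip(got, want))
        except Exception:
            ok = False
        if not ok:
            return EvalResult(True, False, None)

    # Stage 3: speed, reported as a speedup over the reference (best of N runs).
    x = [rng.uniform(-1.0, 1.0) for _ in range(size)]

    def best_time(fn: Callable[[List[float]], List[float]]) -> float:
        best = float("inf")
        for _ in range(trials):
            start = time.perf_counter()
            fn(list(x))
            best = min(best, time.perf_counter() - start)
        return best

    ref_t = best_time(reference)
    cand_t = max(best_time(candidate), 1e-12)  # guard against division by zero
    return EvalResult(True, True, ref_t / cand_t)


# Hypothetical candidate "kernels" as source text, as an LLM might return them.
GOOD = "def candidate(x):\n    return [v if v > 0 else 0.0 for v in x]\n"
WRONG = "def candidate(x):\n    return [abs(v) for v in x]\n"
BROKEN = "def candidate(x)\n    return x\n"  # missing colon: fails to compile

if __name__ == "__main__":
    for name, src in [("good", GOOD), ("wrong", WRONG), ("broken", BROKEN)]:
        print(name, evaluate(relu_reference, src))
```

The stages run in order and stop at the first failure, so a speedup is only reported for candidates that both compile and match the reference.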

20 - OpenAI GPTs

Angular Architect AI: Generate Angular Components

Generates Angular components based on requirements, with a focus on code-first responses.

🖌️ Line to Image: Generate The Evolved Prompt!

Transforms lines into detailed prompts for visual storytelling.

Generate text imperceptible to detectors.

Discover how your writing can shine with a unique and human style. This prompt guides you to create rich and varied texts, surprising with original twists and maintaining coherence and originality. Transform your writing and challenge AI detection tools!

Fantasy Banter Bot - Special Teams

I generate witty trash talk for fantasy football leagues.

Product StoryBoard Director

Helps you generate script keyframes. For a better experience, please visit museclip.ai.

Visual Storyteller

Extracts the key scenes of a novel according to the requested quantity and generates corresponding images. The images can be used directly to create novel videos. Automatically batch-generates images for novel promotion posts, keeping the style consistent across images.

CodeGPT

This GPT can generate code for you. For now, it creates full-stack apps using TypeScript. Just describe the feature you want, and you will get a link to the GitHub pull request and the deployed live app.