Best AI tools for < Extract Patterns >

20 - AI tool Sites

nventr

nventr is an AI platform for predictive automation, offering a suite of products and services powered by predictive analytics. The company focuses on applying new approaches to uncover patterns, extract valuable intelligence, and predict outcomes within vast datasets. nventr solutions support enterprise-grade AI acceleration, intelligent data processing, and digital transformation. The platform, nventr.ai, enables rapid building of AI models and software applications through collaborative tools and cloud-based infrastructure.

Magic Regex Generator

Magic Regex Generator is an AI-powered tool that simplifies the process of generating, testing, and editing Regular Expression patterns. Users can describe what they want to match in English, and the AI generates the corresponding regex in the editor for testing and refining. The tool is designed to make working with regex easier and more efficient, allowing users to focus on meaningful tasks without getting bogged down in complex pattern matching.

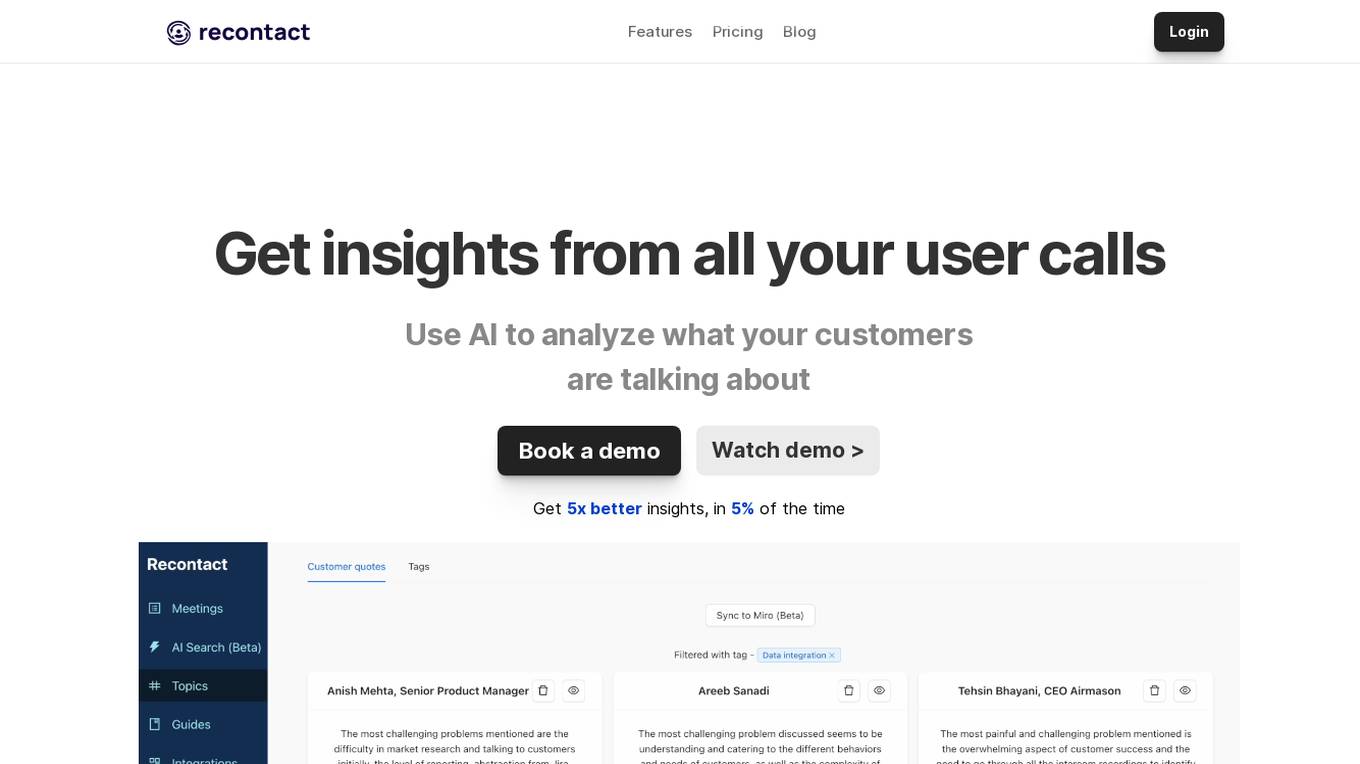

Recontact

Recontact is an AI-powered tool designed to help users analyze and gain insights from user calls efficiently. By leveraging AI technology, Recontact can process and extract valuable information from user conversations, enabling users to understand customer needs, identify trends, and generate detailed reports in a matter of minutes. The tool streamlines the process of listening to call transcripts, making affinity diagrams, and understanding customer requirements, saving users valuable time and effort. Recontact is best suited for early-stage founders, user research teams, and customer support teams looking to analyze user interviews, validate startup ideas, and improve customer interactions.

Rgx.tools

Rgx.tools is an AI-powered text-to-regex generator that helps users create regular expressions quickly and easily. It is a wrapper around OpenAI's gpt-3.5-chat model, which generates clean, readable, and efficient regular expressions based on user input. Rgx.tools is designed to make the process of writing regular expressions less painful and more accessible, even for those with limited experience.

Wunderschild

Schwarzthal Tech's Wunderschild is an AI-driven platform for financial crime intelligence that revolutionizes compliance and investigation techniques. It provides intelligence solutions based on network assessment, data linkage, flow aggregation, and machine learning. The platform offers expertise and insights on strategic risks related to Politically Exposed Persons, Serious Organised Crime, Terrorism Financing, and more. With features like Compliance, Investigation, Know Your Network, Media Scan, Document Drill, and Transaction Monitoring, Wunderschild empowers users to enhance compliance functions, conduct deep dives into complex transnational crime cases, and detect suspicious activities. The platform is trusted by global companies and offers advanced OCR, multilingual support, and key information extraction capabilities.

RegexBot

RegexBot is an AI-powered Regex Builder that allows users to test and convert natural language into powerful regular expressions effortlessly. It leverages the power of AI to help users master regular expressions by providing tools to match specific patterns like URLs, email addresses, ZIP codes, and words containing only uppercase letters. With a user-friendly interface, RegexBot simplifies the process of creating and validating regular expressions, making it a valuable tool for developers, data analysts, and anyone working with text data.
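As a rough illustration of the pattern types named above (URLs, email addresses, ZIP codes, and uppercase-only words), the following Python sketch shows what such expressions might look like. The patterns, the `PATTERNS` table, and the `extract_patterns` helper are simplified assumptions written for this example; they are not RegexBot output and will not cover every edge case (for instance, the ZIP pattern assumes US-style codes).

```python
import re

# Simplified, illustrative patterns (assumptions, not generated by RegexBot).
PATTERNS = {
    # http:// or https:// followed by anything up to whitespace or a quote
    "url": re.compile(r"https?://[^\s<>\"']+"),
    # a loose email shape: local part, "@", domain, dot, 2+ letter TLD
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # US ZIP code: five digits, optionally followed by -NNNN
    "zip_code": re.compile(r"\b\d{5}(?:-\d{4})?\b"),
    # whole words made up only of uppercase ASCII letters
    "uppercase_word": re.compile(r"\b[A-Z]+\b"),
}


def extract_patterns(text: str) -> dict:
    """Return all non-overlapping matches of each pattern found in text."""
    return {name: pattern.findall(text) for name, pattern in PATTERNS.items()}


sample = "Contact ADMIN at admin@example.com or visit https://example.com/help near 90210."
results = extract_patterns(sample)
for name, matches in results.items():
    print(name, matches)
```

Testing a generated expression against a few known strings like this is a reasonable habit regardless of which tool produced it.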

Socrates

Socrates is an AI tool that provides comprehensive analysis and insights into your documents. It utilizes advanced natural language processing algorithms to extract key information, identify patterns, and offer valuable suggestions. With Socrates, users can gain a deeper understanding of their text content, improve accuracy, and enhance decision-making processes. Whether you're a student, researcher, or professional, Socrates can help you unlock the full potential of your documents.

BabblerAI

BabblerAI is an advanced artificial intelligence tool designed to assist businesses in analyzing and extracting valuable insights from large volumes of text data. The application utilizes natural language processing and machine learning algorithms to provide users with actionable intelligence and automate the process of information extraction. With BabblerAI, users can streamline their data analysis workflows, uncover trends and patterns, and make data-driven decisions with confidence. The tool is user-friendly and offers a range of features to enhance productivity and efficiency in data analysis tasks.

dataset.macgence

dataset.macgence is an AI-powered data analysis tool that helps users extract valuable insights from their datasets. It offers a user-friendly interface for uploading, cleaning, and analyzing data, making it suitable for both beginners and experienced data analysts. With advanced algorithms and visualization capabilities, dataset.macgence enables users to uncover patterns, trends, and correlations in their data, leading to informed decision-making. Whether you're a business professional, researcher, or student, dataset.macgence can streamline your data analysis process and enhance your data-driven strategies.

Spiral

Spiral is an AI-powered tool designed to automate 80% of repeat writing, thinking, and creative tasks. It allows users to create Spirals to accelerate any writing task by training it on examples to generate outputs in their desired voice and style. The tool includes a powerful Prompt Builder to help users work faster and smarter, transforming content into tweets, PRDs, proposals, summaries, and more. Spiral extracts patterns from text to deduce voice and style, enabling users to iterate on outputs until satisfied. Users can share Spirals with their team to maximize quality and streamline processes.

Insight7

Insight7 is a powerful AI-powered tool that helps businesses extract insights from customer and employee interviews. It uses natural language processing and machine learning to analyze large volumes of unstructured data, such as transcripts, audio recordings, and videos. Insight7 can identify key themes, trends, and sentiment, which can then be used to improve products, services, and customer experiences.

Kadoa

Kadoa is an AI web scraper tool that extracts unstructured web data at scale automatically, without the need for coding. It offers a fast and easy way to integrate web data into applications, providing high accuracy, scalability, and automation in data extraction and transformation. Kadoa is trusted by various industries for real-time monitoring, lead generation, media monitoring, and more, offering zero setup or maintenance effort and smart navigation capabilities.

FormX.ai

FormX.ai is an AI-powered data extraction and conversion tool that automates the process of extracting data from physical documents and converting it into digital formats. It supports a wide range of document types, including invoices, receipts, purchase orders, bank statements, contracts, HR forms, shipping orders, loyalty member applications, annual reports, business certificates, personnel licenses, and more. FormX.ai's pre-configured data extraction models and effortless API integration make it easy for businesses to integrate data extraction into their existing systems and workflows. With FormX.ai, businesses can save time and money on manual data entry and improve the accuracy and efficiency of their data processing.

Parsio

Parsio is an AI-powered document parser that can extract structured data from PDFs, emails, and other documents. It uses natural language processing to understand the context of the document and identify the relevant data points. Parsio can be used to automate a variety of tasks, such as extracting data from invoices, receipts, and emails.

RIDO Protocol

RIDO Protocol is a decentralized data protocol that allows users to extract value from their personal data in Web2 and Web3. It provides users with a variety of features, including programmable data generation, programmable access control, and cross-application data sharing. RIDO also has a data marketplace where users can list or offer their data and its ownership. Additionally, RIDO has a DataFi protocol that promotes the flow of data and value.

Tablize

Tablize is a powerful data extraction tool that helps you turn unstructured data into structured, tabular format. With Tablize, you can easily extract data from PDFs, images, and websites, and export it to Excel, CSV, or JSON. Tablize uses artificial intelligence to automate the data extraction process, making it fast and easy to get the data you need.

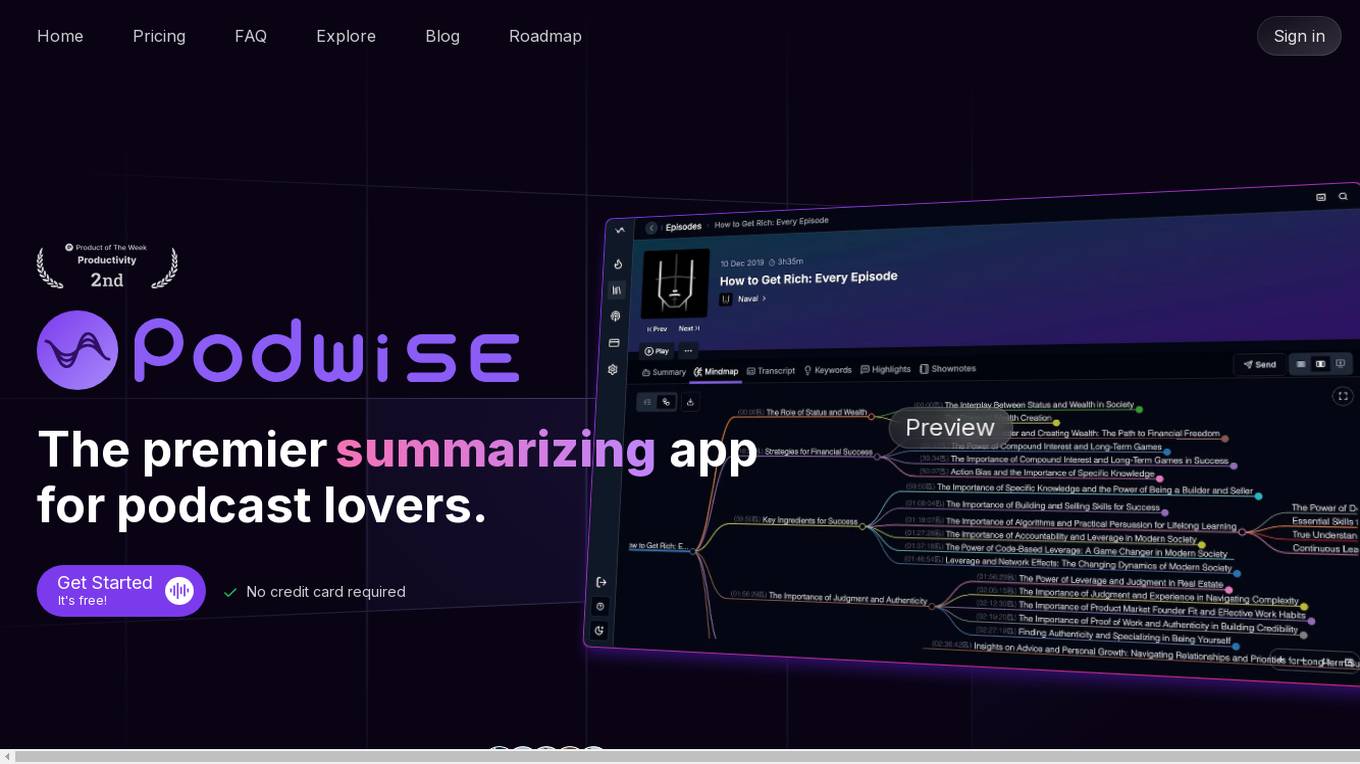

Podwise

Podwise is an AI-powered podcast tool designed for podcast lovers to extract structured knowledge from episodes at 10x speed. It offers features such as AI-powered summarization, mind mapping, content outlining, transcription, and seamless integration with knowledge management workflows. Users can subscribe to favorite content, get lightning-speed access to structured knowledge, and discover episodes of interest. Podwise aims to address a common problem, that listeners enjoy podcasts but recall little and forget quickly, by providing a meticulous, accurate tool for efficient podcast referencing and note consolidation.

Kazuha

Kazuha is an AI-powered platform that provides investment insights extracted from top financial content creators on platforms like podcasts, YouTube, and Twitter. The platform uses advanced AI algorithms to analyze hours of content and generate concise summaries and key takeaways for investors. Users can stay informed about the latest investment opportunities and never miss actionable insights buried in lengthy financial content.

Nex

Nex is an AI Knowledge Copilot application designed to help users efficiently extract main points from long YouTube videos and articles. It offers features like summarizing content, providing quick takeaways, highlighting essential parts, and saving inspirations. With Nex, users can improve their information absorption and save time by focusing on the most relevant parts of the content.

Vocal Remover Oak

Vocal Remover Oak is an advanced AI tool designed for music producers, video makers, and karaoke enthusiasts to easily separate vocals and accompaniment in audio files. The website offers a free online vocal remover service that utilizes deep learning technology to provide fast processing, high-quality output, and support for various audio and video formats. Users can upload local files or provide YouTube links to extract vocals, accompaniment, and original music. The tool ensures lossless audio output quality and compatibility with multiple formats, making it suitable for professional music production and personal entertainment projects.

1 - Open Source AI Tools

basiclingua-LLM-Based-NLP

BasicLingua is a Python library that provides functionalities for linguistic tasks such as tokenization, stemming, lemmatization, and many others. It is based on the Gemini Language Model, which has demonstrated promising results in dealing with text data. BasicLingua can be used as an API or through a web demo. It is available under the MIT license and can be used in various projects.

20 - OpenAI GPTs

Regex Wizard

Generates and explains regex patterns from your description. It supports English and Chinese.

Image Analyzer

I'm an image analysis assistant, providing detailed summaries and insights.

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Visual Storyteller

Extracts the essence of a novel's story according to the requested number of images and generates corresponding images. The images can be used directly to create novel videos. Automatic batch generation of images for novel promotion posts, with a consistent style across the generated images.

Receipt CSV Formatter

Extract from receipts to CSV: Date of Purchase, Item Purchased, Quantity Purchased, Units
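For readers unfamiliar with the target format, here is a minimal Python sketch of a CSV with the four columns listed above. The `receipts_to_csv` helper and the sample rows are hypothetical, made up for illustration; the sketch only shows the output shape and does not reflect how the GPT itself extracts data from a receipt.

```python
import csv
import io

# Column names taken from the description above.
FIELDS = ["Date of Purchase", "Item Purchased", "Quantity Purchased", "Units"]


def receipts_to_csv(rows):
    """Serialize a list of row dicts (keyed by FIELDS) to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical sample data, invented for this example.
sample_rows = [
    {"Date of Purchase": "2024-01-15", "Item Purchased": "Coffee beans",
     "Quantity Purchased": 2, "Units": "kg"},
    {"Date of Purchase": "2024-01-15", "Item Purchased": "Paper filters",
     "Quantity Purchased": 1, "Units": "box"},
]

csv_text = receipts_to_csv(sample_rows)
print(csv_text)
```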

PDF AI

PDFChat: Analyse thousands of PDFs in seconds, extract information, and chat with PDFs in any language.

Watch Identification, Pricing, Sales Research Tool

Analyze watch images, extract text, and craft sales descriptions. Add 1 or more images for a single watch to get started.

The Enigmancer

Put your prompt engineering skills to the ultimate test! Embark on a journey to outwit a mythical guardian of ancient secrets. Try to extract the secret passphrase hidden in the system prompt and enter it in chat when you think you have it and claim your glory. Good luck!

Dissertation & Thesis GPT

An Ivy League Scholar GPT equipped to understand your research needs, formulate comprehensive literature review strategies, and extract pertinent information from a plethora of academic databases and journals. I'll then compose a peer review-quality paper with citations.

ExtractWisdom

Takes in any text and extracts the wisdom from it like you spent 3 hours taking handwritten notes.

QCM

This GPT receives images containing CodinGame multiple-choice (QCM) or problem-solving questions on the following topics: Java, Hibernate, Angular, Spring Boot, SQL. It must extract the text from the image and answer the multiple-choice question as quickly as possible.