Best AI tools for Extract Data From Websites

20 - AI tool Sites

Tablize

Tablize is a powerful data extraction tool that helps you turn unstructured data into structured, tabular format. With Tablize, you can easily extract data from PDFs, images, and websites, and export it to Excel, CSV, or JSON. Tablize uses artificial intelligence to automate the data extraction process, making it fast and easy to get the data you need.

Webscrape AI

Webscrape AI is a no-code web scraping tool that allows users to collect data from websites without writing any code. It is easy to use, accurate, and affordable, making it a great option for businesses of all sizes. With Webscrape AI, you can automate your data collection process and free up your time to focus on other tasks.

Extractify.co

Extractify.co offers tools and services for extracting text, images, and other types of data from websites, documents, and other sources. Its user-friendly interface aims to make data extraction quick and efficient for individuals and businesses alike, and accessible to users of all skill levels.

ScrapeComfort

ScrapeComfort is an AI-driven web scraping tool that offers an effortless and intuitive data mining solution. It leverages AI technology to extract data from websites without the need for complex coding or technical expertise. Users can easily input URLs, download data, set up extractors, and save extracted data for immediate use. The tool is designed to cater to various needs such as data analytics, market investigation, and lead acquisition, making it a versatile solution for businesses and individuals looking to streamline their data collection process.

PandasAI

PandasAI is an open-source AI tool designed for conversational data analysis. It allows users to ask questions of their enterprise data in natural language and receive real-time insights. The tool integrates with various data sources and offers enhanced analytics, actionable insights, detailed reports, and visual data representation. PandasAI aims to democratize data analysis for better decision-making, offering enterprise solutions for stable and scalable internal data analysis. Users can also fine-tune models, ingest universal data, structure data automatically, augment datasets, extract data from websites, and forecast trends using AI.

Axiom.ai

Axiom.ai is a no-code browser automation tool that allows users to automate actions and repetitive tasks on any website or web app. It is a Chrome Extension that is simple to install and free to try. Once installed, users can pin Axiom to the Chrome Toolbar and click the icon to open and close it. Users can build custom bots or use templates to automate actions like clicking, typing, and scraping data from websites. Axiom.ai can be integrated with Zapier to trigger automations based on external events.

PromptLoop

PromptLoop is an AI-powered tool that integrates with Excel and Google Sheets to enhance market research and data analysis. It offers custom AI models tailored to specific needs, enabling users to extract insights from complex information. With PromptLoop, users can leverage advanced AI capabilities for tasks such as web research, content analysis, and data labeling, streamlining workflows and improving efficiency.

UseScraper

UseScraper is a web crawler and scraper API that allows users to extract data from websites for research, analysis, and AI applications. It offers features such as full browser rendering, markdown conversion, and automatic proxies to prevent rate limiting. UseScraper is designed to be fast, easy to use, and cost-effective, with plans starting at $0 per month.

Browse AI

Browse AI is a web data extraction and monitoring platform that makes it easy, affordable, and reliable for anyone to collect data from the web at scale. It was founded in 2020 with the mission of making the web more accessible and useful for everyone.

Hexowatch

Hexowatch is an AI-powered website monitoring and archiving tool that helps businesses track changes to any website, including visual, content, source code, technology, availability, or price changes. It provides detailed change reports, archives snapshots of pages, and offers side-by-side comparisons and diff reports to highlight changes. Hexowatch also allows users to access monitored data fields as a downloadable CSV file, Google Sheet, RSS feed, or sync any update via Zapier to over 2000 different applications.
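To illustrate the kind of content diff report described above, here is a minimal sketch using Python's standard `difflib` module. It is not Hexowatch's implementation; the function name `diff_report` and the sample snapshots are hypothetical, and a real monitor would also need to fetch and store the pages.

```python
import difflib


def diff_report(old_snapshot: str, new_snapshot: str) -> list[str]:
    """Return unified-diff lines describing changes between two text snapshots."""
    return list(
        difflib.unified_diff(
            old_snapshot.splitlines(),
            new_snapshot.splitlines(),
            fromfile="previous",
            tofile="current",
            lineterm="",
        )
    )


# Hypothetical snapshots of the same page taken at two points in time.
old = "Widget\nPrice: $10\nIn stock"
new = "Widget\nPrice: $12\nIn stock"

report = diff_report(old, new)
print("\n".join(report))
```

Lines prefixed with `-` appear only in the earlier snapshot and lines prefixed with `+` only in the later one, so a price change shows up as one removed and one added line.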

Simplescraper

Simplescraper is a web scraping tool that allows users to extract data from any website in seconds. It offers the ability to download data instantly, scrape at scale in the cloud, or create APIs without the need for coding. The tool is designed for developers and no-coders, making web scraping simple and efficient. Simplescraper AI Enhance provides a new way to pull insights from web data, allowing users to summarize, analyze, format, and understand extracted data using AI technology.

Reworkd

Reworkd is a web data extraction tool that uses AI to generate and repair web extractors on the fly. It allows users to retrieve data from hundreds of websites without the need for developers. Reworkd is used by businesses in a variety of industries, including manufacturing, e-commerce, recruiting, lead generation, and real estate.

Browse AI

Browse AI is a powerful AI-powered data extraction platform that allows users to scrape and monitor data from any website without the need for coding. With Browse AI, users can easily extract data, monitor websites for changes, turn websites into APIs, and integrate data with over 7,000 apps. The platform offers prebuilt robots for various use cases like e-commerce, real estate, recruitment, and more. Browse AI is trusted by over 740,000 users worldwide for its reliability, scalability, and ease of use.

Lilys AI

Lilys AI is an AI application that provides services related to translation, document conversion, and web scraping. Users can utilize the tool for tasks such as language translation, file conversion, and extracting information from websites. The application requires JavaScript to be enabled for full functionality.

MyEmailExtractor

MyEmailExtractor is a free email extractor tool that helps you find and save emails from web pages to a CSV file. It's a great way to quickly increase your leads and grow your business. With MyEmailExtractor, you can extract emails from any website, including search engine results pages (SERPs), social media sites, and professional networking sites. The extracted emails are accurate and up-to-date, and you can export them to a CSV file for easy use.
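The general technique of pulling email addresses from page content and saving them as CSV can be sketched in a few lines of standard-library Python. This is an assumption-laden illustration, not MyEmailExtractor's code: the regular expression is a simplified pattern that will miss some valid addresses, and the names `extract_emails` and `emails_to_csv` are hypothetical.

```python
import csv
import io
import re

# Simplified email pattern; deliberately not a full RFC 5322 matcher.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(page_text: str) -> list[str]:
    """Return unique, lowercased addresses in order of first appearance."""
    seen = set()
    emails = []
    for match in EMAIL_RE.findall(page_text):
        address = match.lower()
        if address not in seen:
            seen.add(address)
            emails.append(address)
    return emails


def emails_to_csv(emails: list[str]) -> str:
    """Serialize the addresses as CSV text with a single 'email' column."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["email"])
    writer.writerows([e] for e in emails)
    return buffer.getvalue()


# Hypothetical page fragment with one duplicate and one mixed-case address.
sample_html = (
    '<p>Contact <a href="mailto:sales@example.com">sales@example.com</a> '
    "or Support@Example.com.</p>"
)
found = extract_emails(sample_html)
csv_text = emails_to_csv(found)
print(csv_text)
```

Deduplicating before export keeps the CSV usable as a lead list even when an address appears in both a link target and the visible text.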

PromptLoop

PromptLoop is an AI-powered web scraping and data extraction platform that allows users to run AI automation tasks on lists of data with a simple file upload. It enables users to crawl company websites, categorize entities, and conduct research tasks at a fraction of the cost of other alternatives. By leveraging unique company data from spreadsheets, PromptLoop enables the creation of custom AI models tailored to specific needs, facilitating the extraction of valuable insights from complex information.

CopyClick

CopyClick is an AI tool designed to simplify the process of copying and pasting text from websites and apps. It allows users to easily extract text from any website or app in plain format, making it convenient for use in ChatGPT or Claude. With CopyClick, users can quickly transfer text without any formatting issues, enhancing their workflow efficiency.
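As a rough illustration of extracting plain, unformatted text from a web page, the sketch below strips markup with Python's built-in `html.parser`. It is not how CopyClick works internally; the class `PlainTextExtractor` and the helper `html_to_plain_text` are hypothetical names, and only `script` and `style` content is filtered out.

```python
from html.parser import HTMLParser


class PlainTextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of script and style tags."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that sits outside skipped tags.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return " ".join(self._chunks)


def html_to_plain_text(html):
    parser = PlainTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


sample = (
    "<h1>Pricing</h1><style>h1{color:red}</style>"
    "<p>Plans start at <b>$5</b> per month.</p>"
)
plain = html_to_plain_text(sample)
print(plain)
```

The result contains no tags or styling, which is the property that makes such text convenient to paste into a chat assistant.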

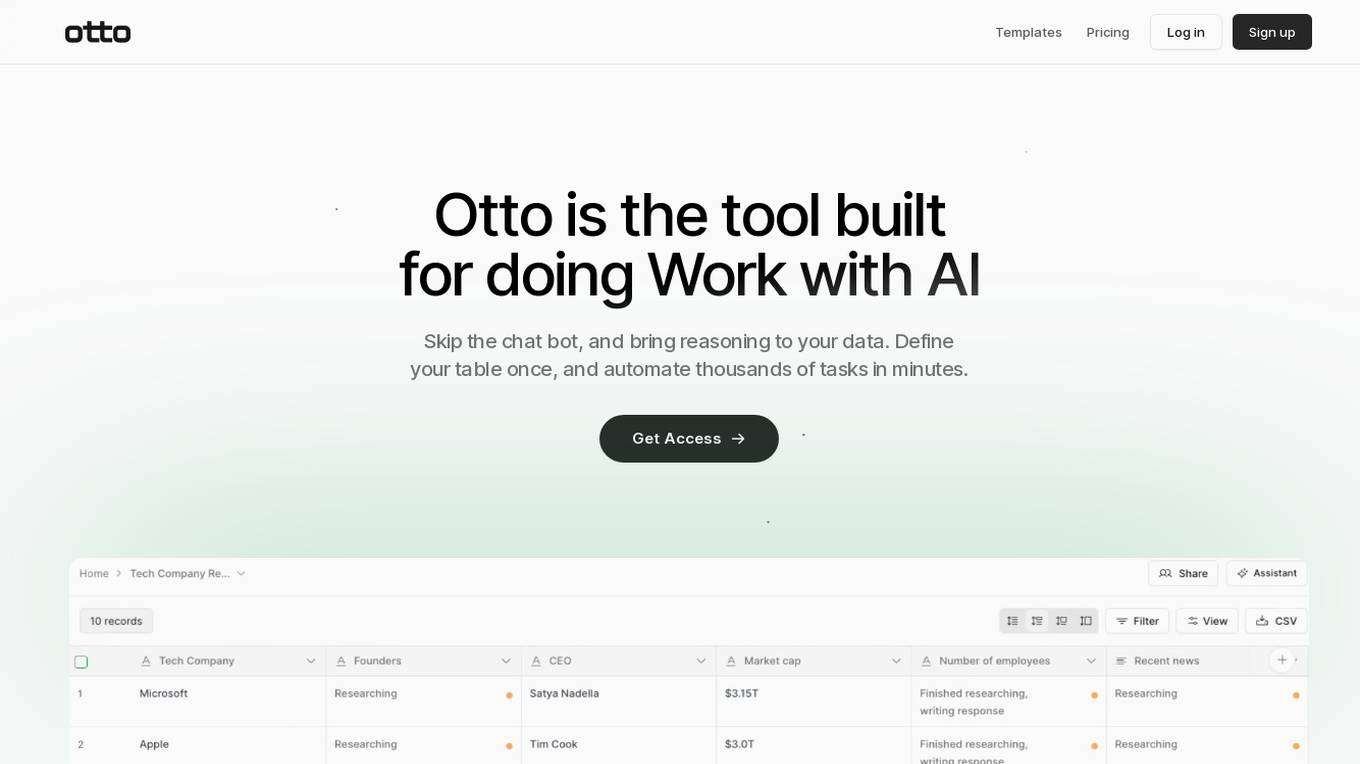

Otto

Otto is an AI-powered tool designed to streamline work processes by bringing reasoning to data. It allows users to define tables once and automate numerous tasks in minutes. With features like research capabilities, outbound message creation, and customizable columns, Otto enables users to work 10x faster by leveraging AI agents for parallel processing. The tool unlocks insights from various data sources, including websites, documents, and images, and offers an AI Assistant for contextual assistance. Otto aims to enhance productivity and efficiency by providing advanced data analysis and processing functionalities.

Wix.com

Wix.com is a website builder platform that allows users to create stunning websites with ease. It offers a user-friendly interface and a wide range of customizable templates to suit various needs. Users can easily connect their domain to their website and receive support from the Wix Support Team. With Wix.com, individuals and businesses can establish their online presence quickly and efficiently.

Skimming

Skimming is an AI tool that enables users to interact with various types of data, including audio, video, and text, to extract knowledge. It offers features like chatting with documents, YouTube videos, websites, audio, and video, as well as custom prompts and multilingual support. Skimming is trusted by over 100,000 users and is designed to save time and enhance information extraction. The tool caters to a diverse audience, including teachers, students, businesses, researchers, scholars, lawyers, HR professionals, YouTubers, and podcasters.

2 - Open Source AI Tools

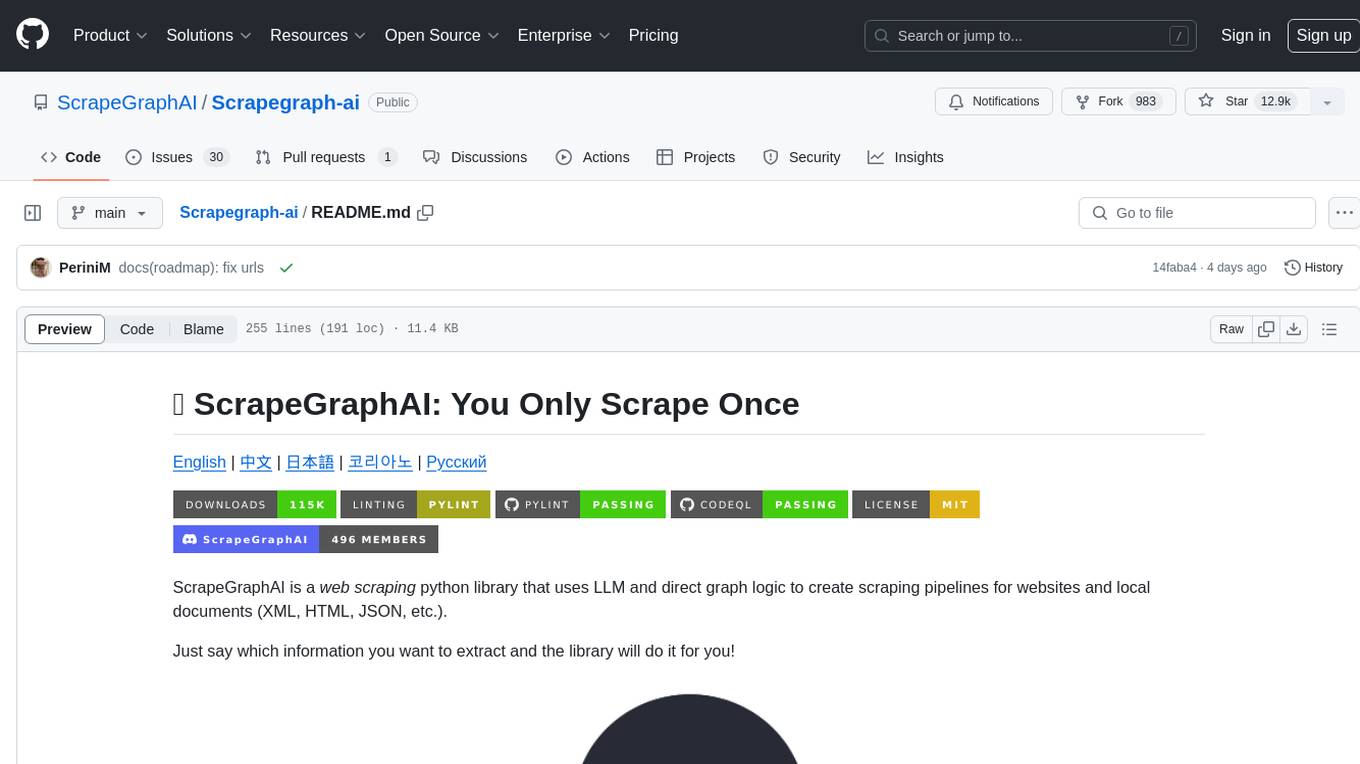

Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLM providers, including OpenAI and Gemini, as well as local models through Ollama (which can be run in Docker), enabling users to choose the most suitable model for their needs. The library's `SmartScraperGraph` class simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

Scrapegraph-ai

ScrapeGraphAI is a Python web scraping library that uses LLMs and direct graph logic to create scraping pipelines for websites and local documents. It offers several standard scraping pipelines, such as SmartScraperGraph, SearchGraph, SpeechGraph, and ScriptCreatorGraph. Users extract information by specifying a prompt and an input source. The library supports different LLM APIs such as OpenAI, Groq, Azure, and Gemini, as well as local models using Ollama. ScrapeGraphAI is designed for data exploration and research purposes, providing a versatile tool for extracting information from web pages and generating outputs like Python scripts, audio summaries, and search results.
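The idea of a scraping pipeline built from a prompt, an input source, and a graph of processing steps can be sketched without any external dependencies. The example below is a toy model of that idea and does not use or reproduce the ScrapeGraphAI API: all names (`fetch_node`, `parse_node`, `answer_node`, `run_graph`) are hypothetical, the "fetch" step simply accepts an HTML string, and the LLM call is replaced by a keyword-matching stub.

```python
import re


def fetch_node(state):
    # Stand-in for an HTTP fetch: the "source" is already an HTML string here.
    state["html"] = state["source"]
    return state


def parse_node(state):
    # Crude tag stripping, sufficient for the sample input only.
    state["text"] = re.sub(r"<[^>]+>", " ", state["html"])
    return state


def answer_node(state):
    # Stand-in for the LLM call: keep sentences that share a word with the prompt.
    keywords = {w.lower() for w in re.findall(r"\w+", state["prompt"]) if len(w) > 3}
    sentences = [
        s.strip() for s in re.split(r"(?<=[.!?])\s+", state["text"]) if s.strip()
    ]
    state["answer"] = [
        s for s in sentences
        if keywords & {w.lower() for w in re.findall(r"\w+", s)}
    ]
    return state


# The pipeline as a directed graph: each node names its successor.
NODES = {"fetch": fetch_node, "parse": parse_node, "answer": answer_node}
EDGES = {"fetch": "parse", "parse": "answer", "answer": None}


def run_graph(prompt, source, entry="fetch"):
    """Walk the graph from the entry node, passing a shared state dict along."""
    state = {"prompt": prompt, "source": source}
    current = entry
    while current is not None:
        state = NODES[current](state)
        current = EDGES[current]
    return state["answer"]


page = "<h1>Acme</h1><p>Acme sells anvils. The price is 40 dollars. Shipping takes a week.</p>"
result = run_graph("What is the price?", page)
print(result)
```

Keeping nodes and edges in separate tables means a different pipeline can be assembled by changing the edge map rather than rewriting the steps themselves.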

20 - OpenAI GPTs

Spreadsheet Composer

Magically turning text from emails, lists and website content into spreadsheet tables

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Property Manager Document Assistant

Provides analysis and data extraction of Property Management documents and contracts for managers

Fill PDF Forms

Fill legal forms & complex PDF documents easily! Upload a file, provide data sources and I'll handle the rest.

Email Thread GPT

I'm EmailThreadAnalyzer, here to help you with your email thread analysis.

Regex Wizard

Generate and explain regex patterns from your description. It supports English and Chinese.

Metaphor API Guide - Python SDK

Teaches you how to use the Metaphor Search API using our Python SDK