Best AI Tools for "Explain Function"

20 - AI Tool Sites

Formulas HQ

Formulas HQ is an AI-powered formula and script generator for Excel and Sheets. It provides users with a range of tools to simplify complex calculations, automate tasks, and enhance their spreadsheet mastery. With Formulas HQ, users can generate formulas, regular expressions, VBA code, and Apps Script, even without prior programming experience. The platform also offers a chat feature with system prompts to assist users with idea generation and troubleshooting. Formulas HQ is designed to empower users to work smarter and make better business decisions.

Formulas HQ

Formulas HQ is an AI-powered formula and script generator for Excel and Sheets. It provides users with a variety of tools to simplify complex calculations, automate tasks, and gain insights from data. The platform includes features such as formula generation, regular expression simplification, VBA and Apps Script automation, and chat-based assistance. Formulas HQ is designed to help users improve their productivity and efficiency when working with spreadsheets.

CodeSquire

CodeSquire is an AI-powered code writing assistant that helps data scientists, engineers, and analysts write code faster and more efficiently. It provides code completions and suggestions as you type, and can even generate entire functions and SQL queries. CodeSquire is available as a Chrome extension and works with Google Colab, BigQuery, and JupyterLab.

Stackie.AI

Stackie.AI is a life-logging and tracking application that lets users log, track, recall, and reflect on various aspects of their lives. With embedded AI capabilities, users can create personalized diaries, manage health metrics, organize information, and engage in AI-assisted learning. The application offers auto-formatting, categorization, customizable stack structures, and templates for a seamless user experience. Stackie.AI aims to enhance self-awareness, productivity, and overall well-being through efficient logging and tracking.

Programming Helper

Programming Helper is a tool that helps you code faster with the help of AI. It can generate code, test code, and explain code. It also offers a range of other features, such as generating a function from a description, converting a text description into an SQL command, and turning code into a plain-language explanation. Programming Helper is useful for programmers of any skill level.

Figstack

Figstack is an intelligent coding companion powered by AI, designed to help developers understand and document code quickly and efficiently. It offers a suite of solutions that supercharge the ability to read and write code across different programming languages. With features like Explain Code, Language Translator, Docstring Writer, and Time Complexity function, Figstack aims to simplify coding tasks and optimize program efficiency.

Rerun

Rerun is an SDK, time-series database, and visualizer for temporal and multimodal data. It is used in fields like robotics, spatial computing, 2D/3D simulation, and finance to verify, debug, and explain data. Rerun allows users to log data like tensors, point clouds, and text to create streams, visualize and interact with live and recorded streams, build layouts, customize visualizations, and extend data and UI functionalities. The application provides a composable data model, dynamic schemas, and custom views for enhanced data visualization and analysis.

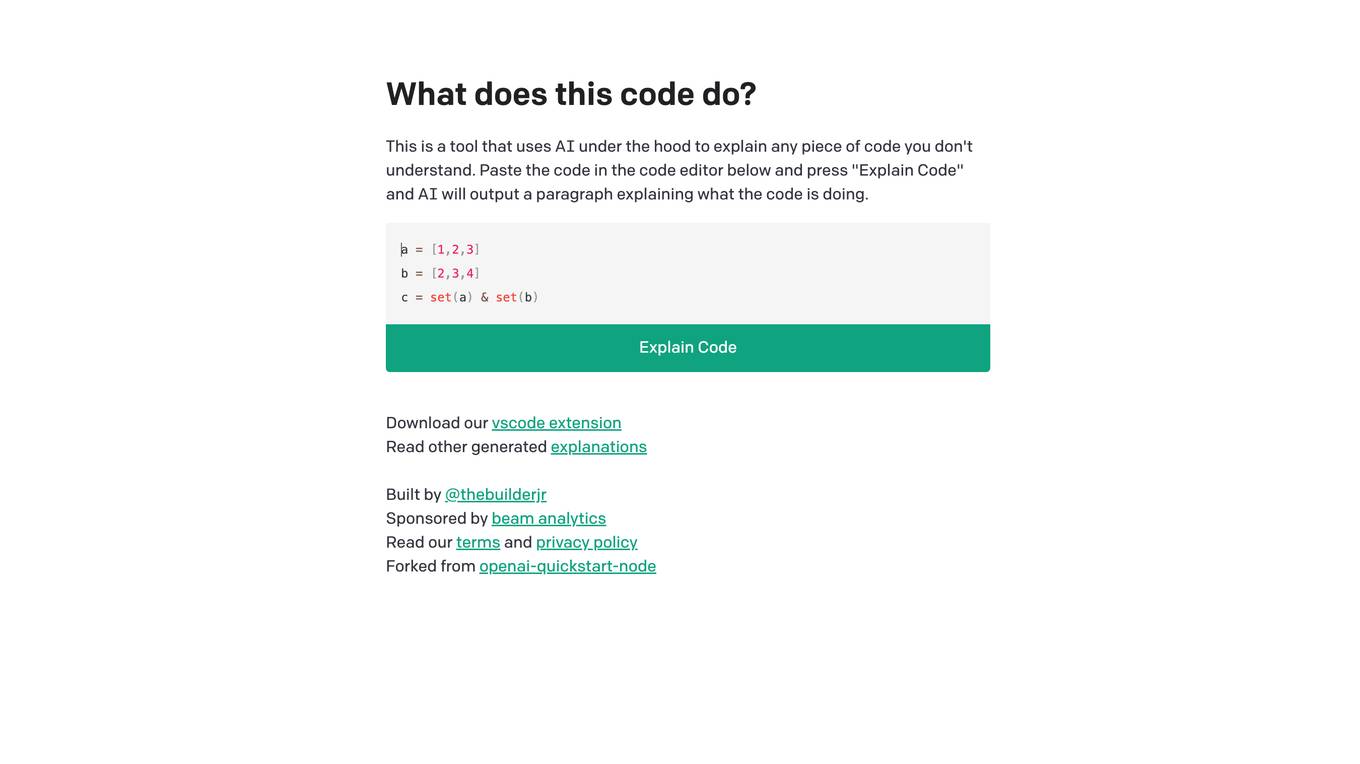

Code Explain

Code Explain uses AI to explain any piece of code you don't understand. Paste the code into the code editor and press "Explain Code", and the AI will output a paragraph explaining what the code does.

Explain This

Explain This is a no-code, AI-powered user assistance tool that turns knowledge bases into proactive in-app support. It features Explain This for in-app contextual help, Chatbot for real-time intelligent responses, Tooltips for effortless interaction, Widget for a centralized help hub, Knowledge Base for context-based guidance, and Ticket Form for hassle-free issue reporting. The tool supports seven languages and aims to boost product adoption while reducing support tickets.

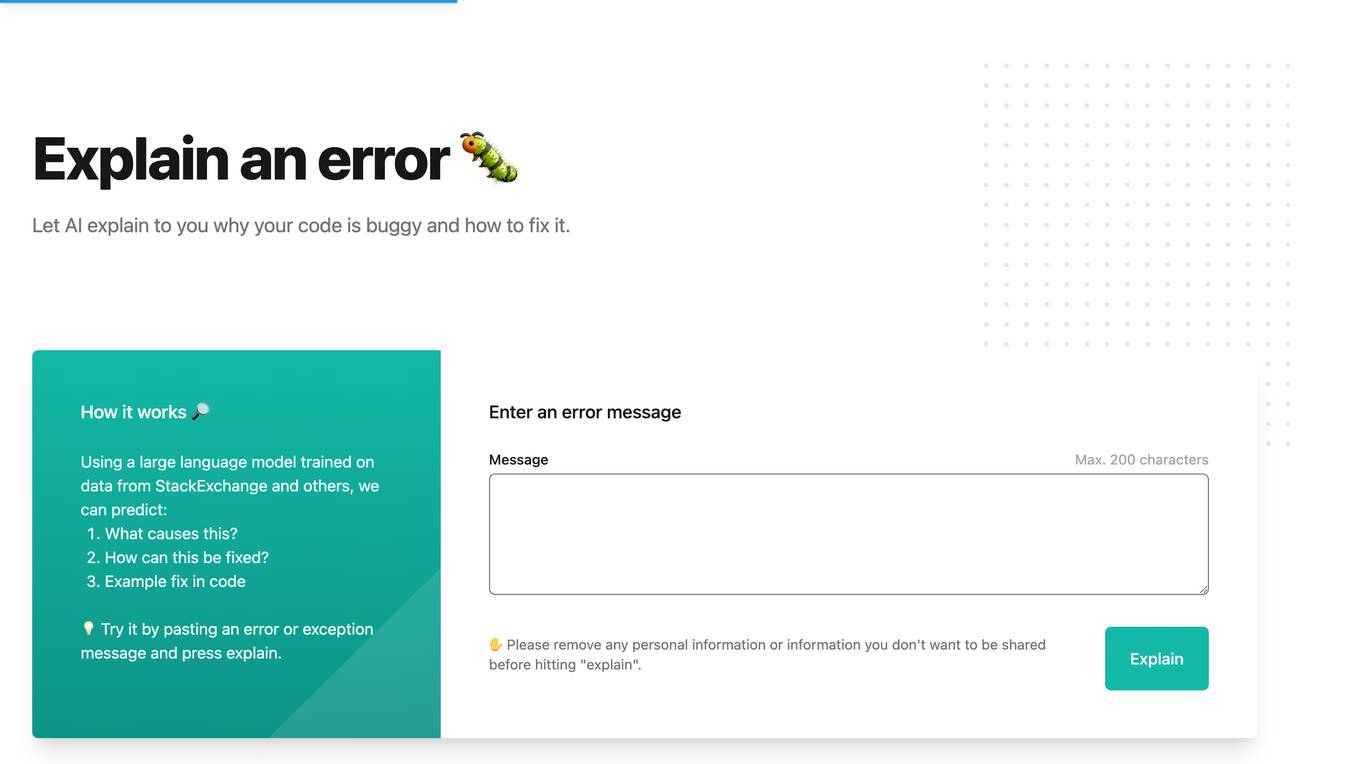

Whybug

Whybug is an AI tool designed to help developers debug their code by explaining errors. Using a large language model trained on data from Stack Exchange and other sources, Whybug predicts the causes of errors and suggests fixes. Users paste an error message and receive a detailed explanation of how to resolve the issue. The tool aims to streamline the debugging process and improve code quality.

ExplainDev

ExplainDev is a platform that allows users to ask and answer technical coding questions. It uses computer vision to retrieve technical context from images or videos. The platform is designed to help developers get the best answers to their technical questions and guide others to theirs.

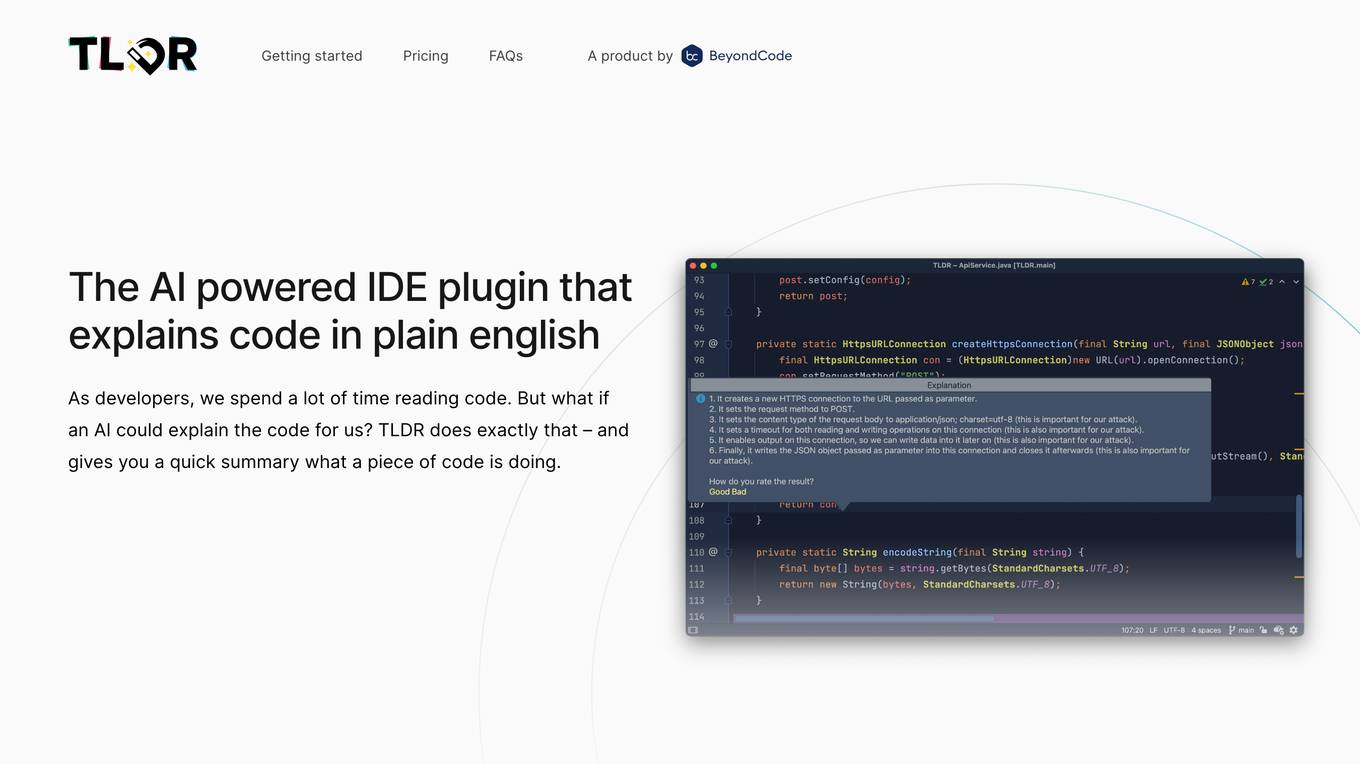

TLDR

TLDR is an AI-powered IDE plugin that explains code in plain English. It helps developers understand code by providing quick summaries of what a piece of code is doing. The tool supports almost all programming languages and offers a free version for users to try before purchasing. TLDR aims to simplify the understanding of complex code structures and save developers time in comprehending codebases.

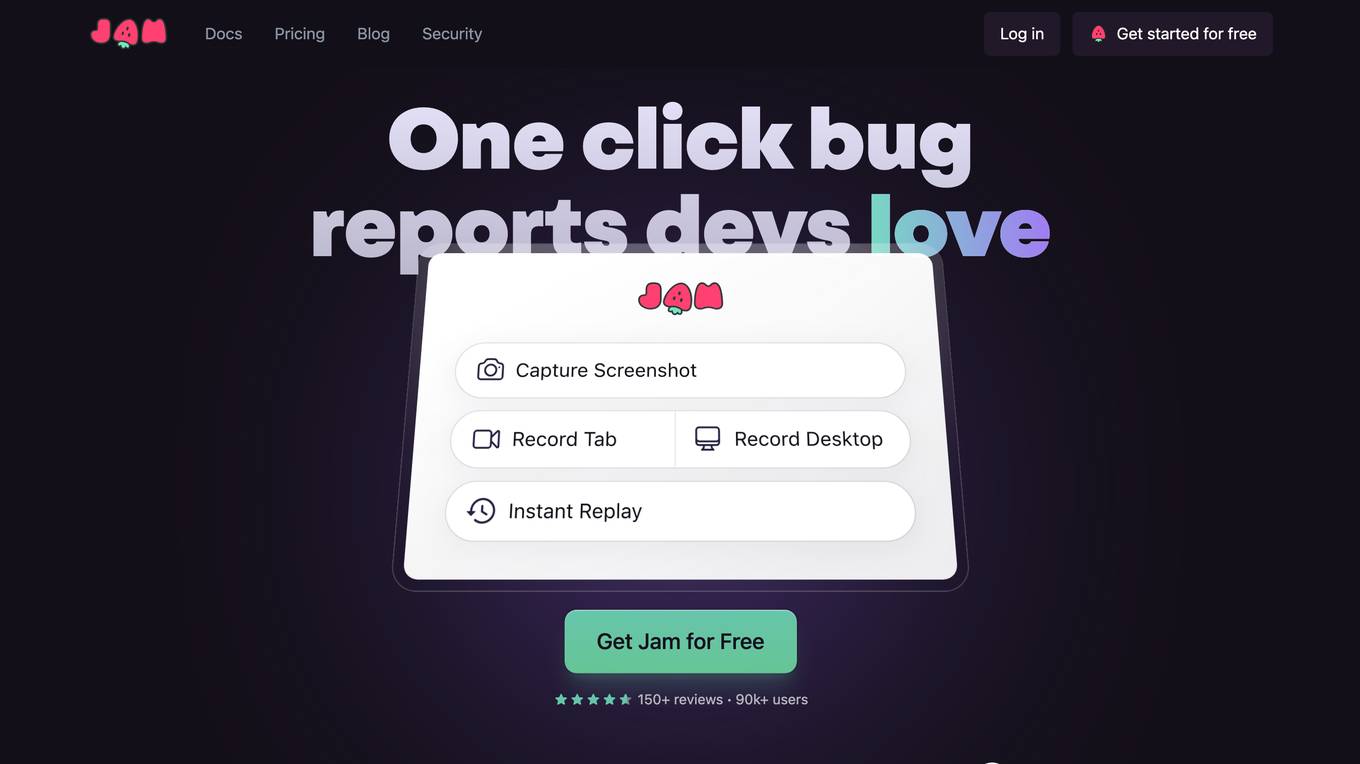

Jam

Jam is a bug-tracking tool that helps developers reproduce and debug issues quickly. It automatically captures the information engineers need to debug, including device and browser information, console logs, network logs, repro steps, and backend tracing. Jam also integrates with popular tools like GitHub, Jira, Linear, Slack, ClickUp, Asana, Sentry, Figma, Datadog, GitLab, Notion, and Airtable. With Jam, developers save time by no longer having to write repro steps or collect information manually. Jam is used by over 90,000 developers and has received over 150 positive reviews.

Kognitium

Kognitium is an AI assistant designed to provide users with comprehensive and accurate information across various domains. It is equipped with advanced capabilities that enable it to understand the intent behind user inquiries and deliver tailored responses. Kognitium's knowledge base spans a wide range of subjects, including current events, science, history, philosophy, and linguistics. It is designed to be user-friendly and accessible, making it a valuable tool for students, professionals, and anyone seeking to expand their knowledge. Kognitium is committed to providing reliable and actionable insights, empowering users to make informed decisions and enhance their understanding of the world around them.

SiteExplainer

SiteExplainer is an AI-powered web application that helps users understand the purpose of any website quickly and accurately. It uses advanced artificial intelligence and machine learning technology to analyze the content of a website and present a summary of the main ideas and key points. SiteExplainer simplifies the language used on landing pages and eliminates corporate jargon to help visitors better understand a website's content.

Memenome AI

Memenome AI is an AI tool that helps users discover and understand trending sounds, hashtags, accounts, and posts on TikTok. It offers features to find top sounds, hashtags, and posts, provides AI analysis and templates for trend understanding, and allows users to iterate through content ideas with Meme0. The tool aims to save users time by efficiently identifying trends and empowering them to create engaging content.

Fiddler AI

Fiddler AI is an AI observability platform that provides tools for monitoring, explaining, and improving the performance of AI models. It offers a range of capabilities, including explainable AI, NLP and CV model monitoring, LLMOps, and security features. Fiddler AI helps businesses build and deploy high-performing AI solutions at scale.

Formularizer

Formularizer is an AI-powered assistant designed to help users with formula-related tasks in spreadsheets like Excel, Google Sheets, and Notion. It provides step-by-step guidance, formula generation, and explanations to simplify complex formula creation and problem-solving. With support for regular expressions, Excel VBA, and Google Apps Script, Formularizer aims to enhance productivity and make data manipulation more accessible.

Formularizer

Formularizer is an AI-powered assistant that helps users create formulas in Excel, Google Sheets, and Notion. It supports a variety of formula types, including Excel, Google Apps Script, and regular expressions. Formularizer can generate formulas from natural language instructions, explain how formulas work, and even help users debug their formulas. It is designed to be user-friendly and accessible to everyone, regardless of their level of expertise.

Tooltips.ai

Tooltips.ai is an AI-powered reading extension that provides instant definitions, translations, and summaries for any word or phrase you hover over. It is designed to enhance your reading experience by making it easier and faster to understand complex or unfamiliar content. Tooltips.ai integrates seamlessly with your browser, so you can use it on any website or document.

2 - Open Source AI Tools

GhidrOllama

GhidrOllama is a script that interacts with Ollama's API to perform various reverse engineering tasks within Ghidra. It supports both local and remote instances of Ollama, providing functionalities like explaining functions, suggesting names, rewriting functions, finding bugs, and automating analysis of specific functions in binaries. Users can ask questions about functions, find vulnerabilities, and receive explanations of assembly instructions. The script bridges the gap between Ghidra and Ollama models, enhancing reverse engineering capabilities.
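To illustrate the kind of call involved, here is a minimal Python 3 sketch of asking a local Ollama server to explain a decompiled function through Ollama's `/api/generate` endpoint. It is not GhidrOllama's actual code: the model name `llama3`, the prompt wording, and the helper names `build_explain_request` and `parse_generate_response` are assumptions for illustration, and it assumes Ollama is listening on its default local port 11434.

```python
import json
import urllib.request

# Assumption: a local Ollama instance on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_explain_request(decompiled_code: str, model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a request asking an Ollama model to explain a function.

    The model name and prompt text are illustrative assumptions.
    """
    prompt = "Explain what the following decompiled function does:\n\n" + decompiled_code
    # stream=False asks Ollama for a single JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Return the generated text from a non-streaming /api/generate response."""
    return json.loads(body)["response"]
```

With a server running, the two helpers combine as `parse_generate_response(urllib.request.urlopen(build_explain_request(code)).read())`. Keeping request construction separate from sending makes the prompt easy to inspect without a live model.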

anon-kode

ANON KODE is a terminal-based AI coding tool that works with any model exposing an OpenAI-style API. Depending on the model used, it can fix spaghetti code, explain function behavior, run tests and shell commands, and more. Users can easily set up models and submit bugs, and their data stays private: there is no telemetry and no backend server other than the AI providers they choose.
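"OpenAI-style API" usually means a `POST` to `<base_url>/chat/completions` with a `model` and a list of role-tagged `messages`, which is why one client can target many providers by changing only the base URL. The Python sketch below builds such a request to explain a function. It is not anon-kode's implementation; the base URL, API key, model name, system prompt, and the helpers `build_chat_request` and `read_reply` are hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       source_code: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request
    asking the model to explain a piece of code."""
    payload = {
        "model": model,
        "messages": [
            # Hypothetical system prompt; real tools use their own.
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user",
             "content": "Explain what this function does:\n\n" + source_code},
        ],
    }
    return urllib.request.Request(
        # Swapping base_url is what lets the same client talk to different providers.
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


def read_reply(response_body: bytes) -> str:
    """Extract the assistant's text from an OpenAI-style chat completion response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]
```

Sending the request with `urllib.request.urlopen(...)` and passing the body to `read_reply` completes the round trip; only the base URL and key change between providers.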

20 - OpenAI GPTs

Sheets Expert

Master Google Sheets with an assistant that can do everything from answering questions about basic features and explaining functions eloquently and succinctly to breaking the most complex formulas into easy steps and helping you find effective ways to visualize your data.

Function Calling Definition Generator

Defines and explains function calls based on a knowledge source.

¿Cómo funciona?

This GPT explains how an object works and the scientific advances that made its creation possible.

Explain It To Me Like I'm 8 Years Old

Inspired by The Office, this GPT explains everything as if you were an eight-year-old... and if you still don't understand, it explains it again as if you were a five-year-old.

BSC Tutor

I'm a BSc tutor, here to explain complex concepts and guide you in science subjects.

SciPlore: A Science Paper Explorer

Explains scientific papers using the three-pass method for efficient understanding. After uploading a paper, you can enter First pass, Second pass, Third pass, or Q&A to get a different level of response from SciPlore.