Best AI tools for ensuring AI security

20 - AI tool Sites

AI Safety Initiative

The AI Safety Initiative is a premier coalition of trusted experts that aims to develop and deliver essential AI guidance and tools for organizations to deploy safe, responsible, and compliant AI solutions. Through vendor-neutral research, training programs, and global industry experts, the initiative provides authoritative AI best practices and tools. It offers certifications, training, and resources to help organizations navigate the complexities of AI governance, compliance, and security. The initiative focuses on AI technology, risk, governance, compliance, controls, and organizational responsibilities.

Adversa AI

Adversa AI is a platform that provides Secure AI Awareness, Assessment, and Assurance solutions for various industries to mitigate AI risks. The platform focuses on LLM Security, Privacy, Jailbreaks, Red Teaming, Chatbot Security, and AI Face Recognition Security. Adversa AI helps enable AI transformation by protecting it from cyber threats, privacy issues, and safety incidents. The platform offers comprehensive research, advisory services, and expertise in the field of AI security.

Lakera

Lakera is the world's most advanced AI security platform that offers cutting-edge solutions to safeguard GenAI applications against various security threats. Lakera provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks to ensure top-notch security standards. Lakera is suitable for security teams, product teams, and LLM builders looking to secure their AI applications effectively and efficiently.

Aporia

Aporia is an AI control platform that provides real-time guardrails and security for AI applications. It offers features such as hallucination mitigation, prompt injection prevention, data leakage prevention, and more. Aporia helps businesses control and mitigate risks associated with AI, ensuring the safe and responsible use of AI technology.

Protecto

Protecto is an Enterprise AI Data Security & Privacy Guardrails application that offers solutions for protecting sensitive data in AI applications. It helps organizations maintain data security and compliance with regulations like HIPAA, GDPR, and PCI. Protecto identifies and masks sensitive data while retaining context and semantic meaning, ensuring accuracy in AI applications. The application provides custom scans, unmasking controls, and versatile data protection across structured, semi-structured, and unstructured text. It is preferred by leading Gen AI companies for its robust and cost-effective data security solutions.

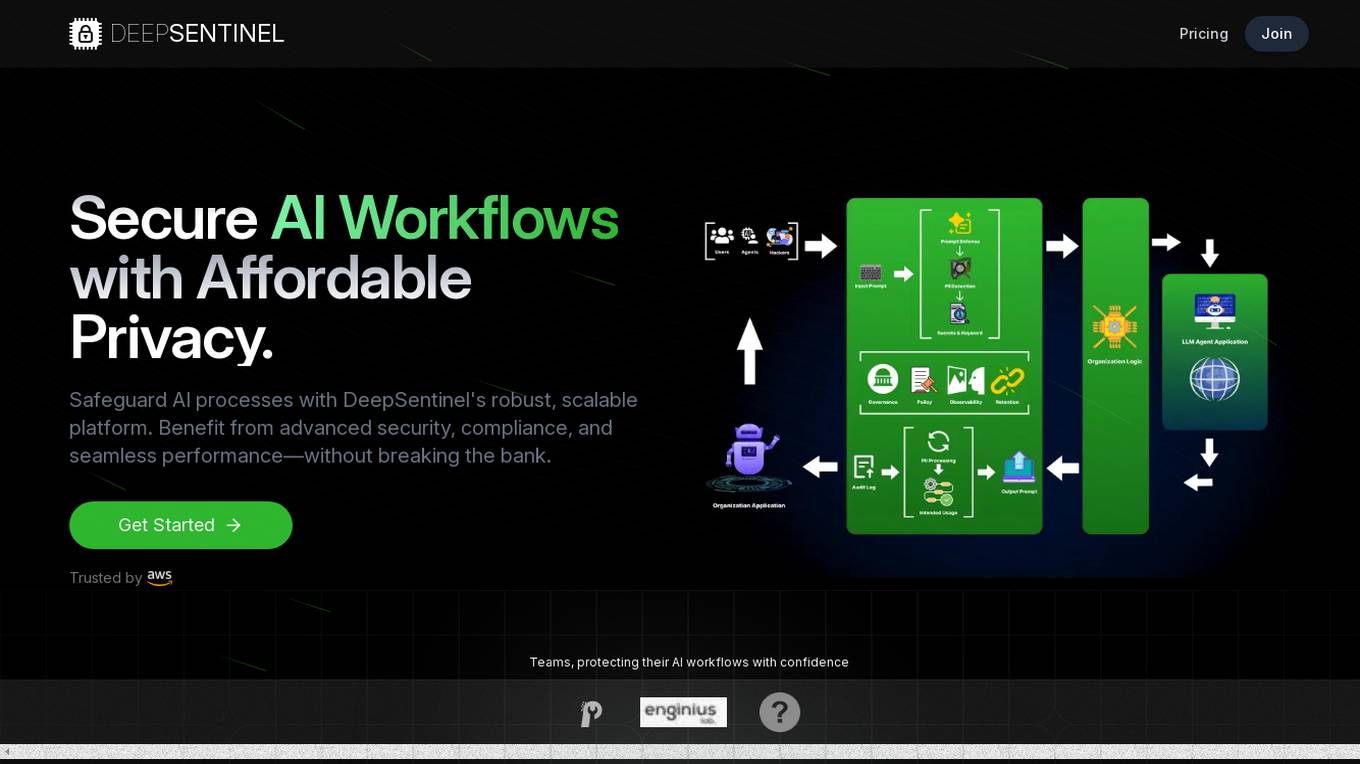

DeepSentinel

DeepSentinel is an AI application that provides secure AI workflows with affordable deep data privacy. It offers a robust, scalable platform for safeguarding AI processes with advanced security, compliance, and seamless performance. The platform allows users to track, protect, and control their AI workflows, ensuring secure and efficient operations. DeepSentinel also provides real-time threat monitoring, granular control, and global trust for securing sensitive data and ensuring compliance with international regulations.

AI CERTs

The AI CERTs website covers topics including Google Cloud AI security and AI sustainability strategies. It discusses the role of AI in cybersecurity, sustainability, and government services, including how AI helps prepare for cyber threats, shape a greener future, and improve public sector operations. It also highlights the advantages of AI-driven solutions, the challenges of AI adoption, and the future implications of AI security wars.

EdgeDX

EdgeDX is a leading provider of Edge AI Video Analysis Solutions, specializing in security and surveillance, construction and logistics safety, efficient store management, public safety management, and intelligent transportation systems. The application offers over 50 intuitive AI apps capable of advanced human behavior analysis, supports various protocols and VMS, and provides features like a P2P-based mobile alarm viewer, LTE & GPS support, and internal recording with an M.2 NVMe SSD. EdgeDX aims to protect customer assets, ensure safety, and enable seamless integration with AI Bridge for easy and efficient implementation.

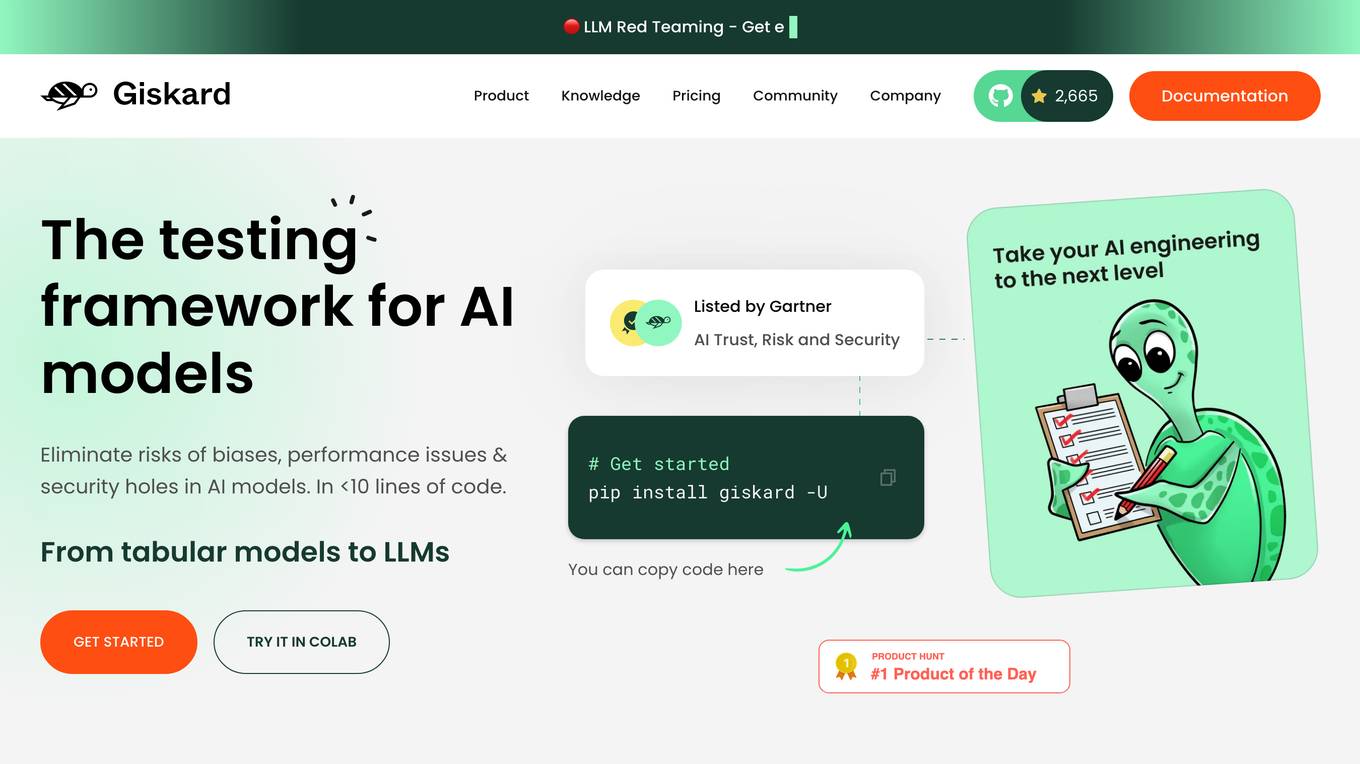

Giskard

Giskard is an AI Red Teaming & LLM Security Platform designed to continuously secure LLM agents by preventing hallucinations and security issues in production. It offers automated testing to catch vulnerabilities before they happen and is trusted by enterprise AI leaders to protect data and reputation. The platform provides comprehensive protection against various security attacks and vulnerabilities, offering end-to-end encryption, data residency & isolation, and compliance with GDPR, SOC 2 Type II, and HIPAA. Giskard helps uncover AI vulnerabilities, stop business failures at the source, unify testing across teams, and save time with continuous testing to prevent regressions.

Legit

Legit is an Application Security Posture Management (ASPM) platform that helps organizations manage and mitigate application security risks from code to cloud. It offers features such as Secrets Detection & Prevention, Continuous Compliance, Software Supply Chain Security, and AI Security Posture Management. Legit provides a unified view of AppSec risk, deep context to prioritize issues, and proactive remediation to prevent future risks. It automates security processes, collaborates with DevOps teams, and ensures continuous compliance. Legit is trusted by Fortune 500 companies like Kraft-Heinz for securing the modern software factory.

Ambient.ai

Ambient.ai is AI-powered physical security software that helps prevent security incidents by detecting threats in real time, auto-clearing false alarms, and accelerating investigations. The platform uses computer vision intelligence to monitor cameras for suspicious activities, decrease alarms, and enable rapid investigations. Ambient.ai offers a rich integration ecosystem, detections for a spectrum of threats, operational efficiency, and enterprise-grade privacy for its users.

Concentric AI

Concentric AI is a Managed Data Security Posture Management tool that utilizes Semantic Intelligence to provide comprehensive data security solutions. The platform offers features such as autonomous data discovery, data risk identification, centralized remediation, easy deployment, and data security posture management. Concentric AI helps organizations protect sensitive data, prevent data loss, and ensure compliance with data security regulations. The tool is designed to simplify data governance and enhance data security across various data repositories, both in the cloud and on-premises.

Privatemode AI

Privatemode is an AI service that offers always-encrypted generative AI capabilities, ensuring data privacy and security. It allows users to utilize open-source AI models while keeping their data protected through confidential computing. The service is designed for individuals and developers, providing a secure AI assistant for various tasks like content generation and document analysis.

DevSecCops

DevSecCops is an AI-driven automation platform designed to revolutionize DevSecOps processes. The platform offers solutions for cloud optimization, machine learning operations, data engineering, application modernization, infrastructure monitoring, security, compliance, and more. With features like one-click infrastructure security scan, AI engine security fixes, compliance readiness using AI engine, and observability, DevSecCops aims to enhance developer productivity, reduce cloud costs, and ensure secure and compliant infrastructure management. The platform leverages AI technology to identify and resolve security issues swiftly, optimize AI workflows, and provide cost-saving techniques for cloud architecture.

Beecker.ai

Beecker.ai is an AI-powered security service that protects websites from online attacks by using advanced algorithms to detect and block malicious activities. The tool helps website owners safeguard their online presence and data by providing real-time monitoring and protection against various threats such as SQL injections and data breaches. Beecker.ai offers a comprehensive security solution to ensure a safe and secure online environment for businesses and individuals.

Kupid.ai

Kupid.ai is an AI-powered security verification tool designed to protect websites from malicious bots. It utilizes advanced algorithms to verify user authenticity and prevent unauthorized access. By enabling JavaScript and cookies, users can seamlessly navigate through the verification process and ensure a secure online experience. Kupid.ai is a reliable solution for website owners looking to enhance their security measures and safeguard against potential cyber threats.

Motific.ai

Motific.ai is a responsible GenAI tool powered by data at scale. It offers a fully managed service with natural language compliance and security guardrails, an intelligence service, and an enterprise data-powered, end-to-end retrieval augmented generation (RAG) service. Users can rapidly deliver trustworthy GenAI assistants and API endpoints, configure assistants with their organization's data, optimize performance, and connect with top GenAI model providers. Motific.ai enables users to create custom knowledge bases, connect to various data sources, and ensure responsible AI practices. It supports English only and offers insights on usage, time savings, and model optimization.

Wald.ai

Wald.ai is an AI tool designed for businesses to protect Personally Identifiable Information (PII) and trade secrets. It offers cutting-edge AI assistants that ensure data protection and regulatory compliance. Users can securely interact with AI assistants, ask queries, generate code, collaborate with internal knowledge assistants, and more. Wald.ai provides total data and identity protection, compliance with various regulations, and user and policy management features. The platform is used by businesses for marketing, legal work, and content creation, with a focus on data privacy and security.

Link Shield

Link Shield is an AI-powered malicious URL detection API platform that helps protect online security. It utilizes advanced machine learning algorithms to analyze URLs and identify suspicious activity, safeguarding users from phishing scams, malware, and other harmful threats. The API is designed for ease of integration, affordability, and flexibility, making it accessible to developers of all levels. Link Shield empowers businesses to ensure the safety and security of their applications and online communities.

AirMDR

AirMDR is an AI-powered Managed Detection and Response (MDR) application that uses artificial intelligence to automate routine tasks and enhance alert triage, investigation, and response processes. The application offers faster, higher-quality, and more affordable cybersecurity solutions, supervised by human experts. AirMDR aims to deliver speed, quality, and cost-effective outcomes that meet the demands of security operations centers.

0 - Open Source AI Tools

20 - OpenAI GPTs

Prompt Injection Detector

GPT used to classify prompts as valid inputs or injection attempts. JSON output.

Human Writer, Humanizer, Paraphraser(Human AI)🖊️

I'm Iris. You can ask me anything, and I'll answer like a human. I can gather information from the web, add a human touch to your files, and automatically refine your prompts to ensure you receive the most accurate responses.

Education AI Strategist

I provide a structured way of using AI to support teaching and learning. I use the CHOICE method (i.e., Clarify, Harness, Originate, Iterate, Communicate, Evaluate) to ensure that your use of AI can help you meet your educational goals.

⚖️ Accountable AI

Accountable AI represents a step forward in creating a more ethical, transparent, and responsible AI system, tailored to meet the demands of users who prioritize accountability and unbiased information in their AI interactions.

Inclusive AI Advisor

Expert in AI fairness, offering tailored advice and document insights.

Inspection AI

Expert in testing, inspection, and certification. Compliant with OpenAI policies and developed on OpenAI.

Copyscape AI Bypasser

Netus AI tool for paraphrasing | Bypass AI Detection | Avoid AI Detectors | Copyscape AI Bypasser - To be 100% Undetectable use Netus AI.

Prompt Helper by Ecom AI Boss

Expert in crafting and refining prompts for ChatGPT, ensuring clarity and precision through interactive iterations.

GPT-Mediator

GPT-Mediator is an AI chatbot designed to facilitate dispute resolution. It employs empathy, neutrality, and respect, offering tailored suggestions for conflict resolution, all while ensuring unbiased mediation.

Plagiarism Checker

Plagiarism Checker GPT is powered by Winston AI and created to help identify plagiarized content. It is designed to help you detect instances of plagiarism and maintain integrity in academia and publishing. Winston AI is the most trusted AI and Plagiarism Checker.

Blog Title Click Magnet

I generate SEO-optimized, catchy blog titles that ensure a higher click through rate.

Santa Claus

Santa Claus, your jolly companion for heartwarming conversations! Always in character, our Santa ensures every interaction is family-friendly, spreading cheer and festive spirit with each reply. Get ready to share your holiday wishes and enjoy delightful chats that capture the magic of Christmas!

Harvard Quick Citations

This tool is only useful if you have added new sources to your reference list and need to ensure that your in-text citations reflect these updates. Paste your essay below to get started.