Best AI tools for Develop LLM

20 - AI Tool Sites

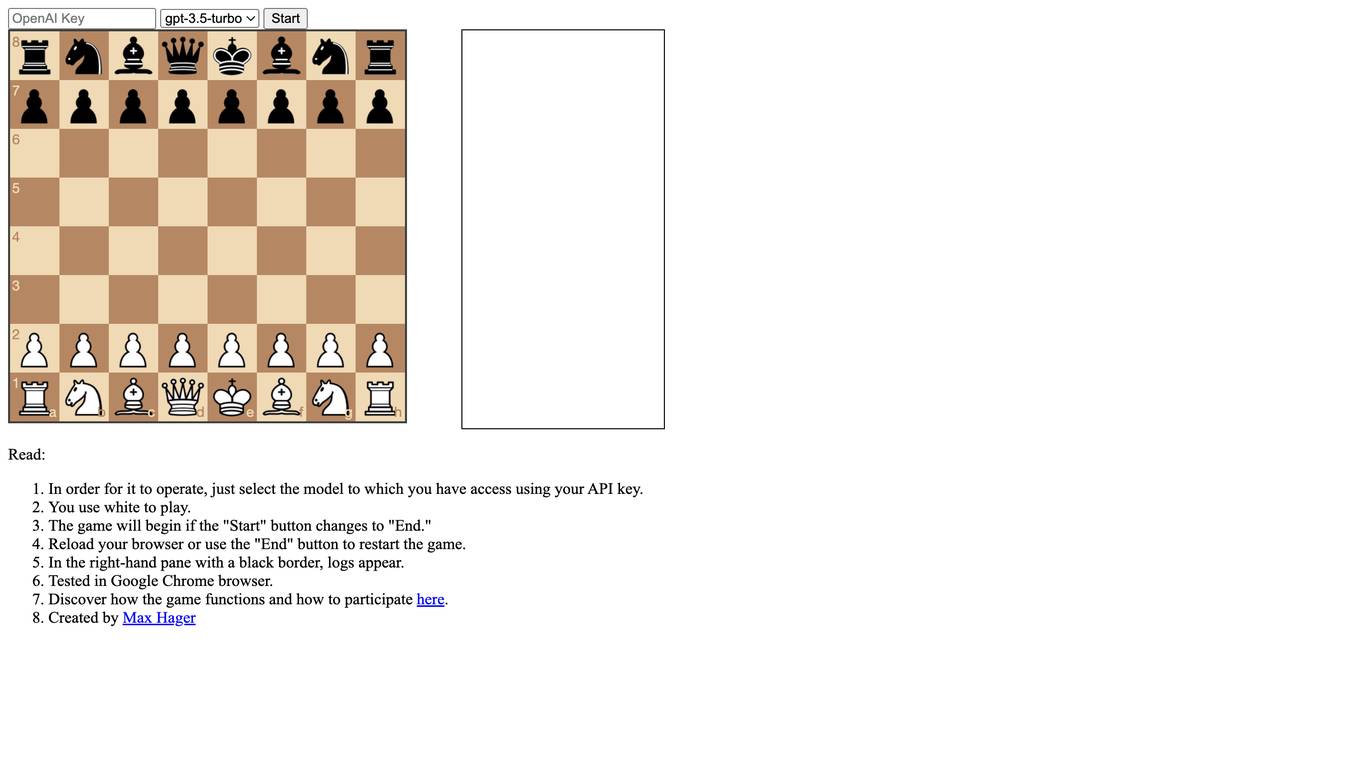

LLMChess

LLMChess is a web-based chess game that utilizes large language models (LLMs) to power the gameplay. Players can select the LLM model they wish to play against, and the game will commence once the "Start" button is clicked. The game logs are displayed in a black-bordered pane on the right-hand side of the screen. LLMChess is compatible with the Google Chrome browser. For more information on the game's functionality and participation guidelines, please refer to the provided link.

AI Builders Summit

AI Builders Summit is a 4-week virtual training event designed to equip data scientists, ML and AI engineers, and innovators with the latest advancements in large language models (LLMs), AI agents, and Retrieval-Augmented Generation (RAG). The summit emphasizes hands-on learning and real-world applications, with interactive workshops, platform credits, and direct exposure to industry-leading tools. Attendees can learn progressively over four weeks, building practical skills through expert-led sessions, cutting-edge tools, and industry insights.

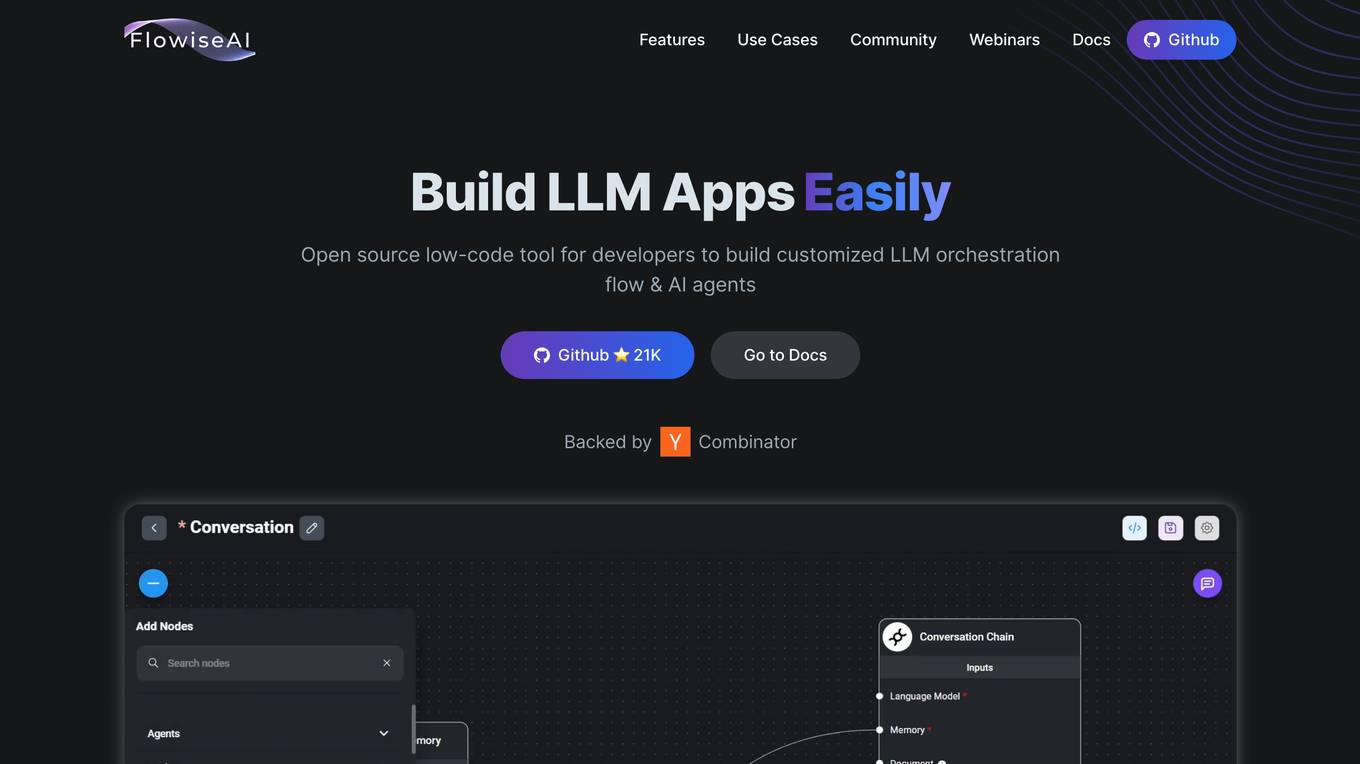

Flowise

Flowise is an open-source, low-code tool that enables developers to build customized LLM orchestration flows and AI agents. It provides a drag-and-drop interface, pre-built app templates, conversational agents with memory, and deployment on cloud platforms. Flowise is backed by Y Combinator and trusted by teams around the globe.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.
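As a rough illustration of what "schema accuracy" for JSON output could mean, the sketch below measures the fraction of model outputs that parse as JSON and match an expected shape. It is not Lamini's API or decoder; the `SCHEMA` fields and the helper names `conforms` and `schema_accuracy` are assumptions made up for this example.

```python
import json
from typing import Dict, List

# Hypothetical schema for illustration: field name -> required Python type.
# Note: Python treats bool as a subclass of int, so True would pass an int check.
SCHEMA: Dict[str, type] = {"name": str, "year": int, "tags": list}


def conforms(raw: str, schema: Dict[str, type]) -> bool:
    """Return True if raw parses as a JSON object with exactly the schema's keys and types."""
    try:
        obj = json.loads(raw)
    except json.JSONDecodeError:
        return False
    if not isinstance(obj, dict) or set(obj) != set(schema):
        return False
    return all(isinstance(obj[key], expected) for key, expected in schema.items())


def schema_accuracy(outputs: List[str], schema: Dict[str, type]) -> float:
    """Fraction of outputs that conform to the schema (0.0 for an empty list)."""
    if not outputs:
        return 0.0
    return sum(conforms(o, schema) for o in outputs) / len(outputs)
```

A platform that constrains decoding to the schema would, under this measure, score 1.0 by construction, whereas an unconstrained model is typically checked after the fact in this way.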

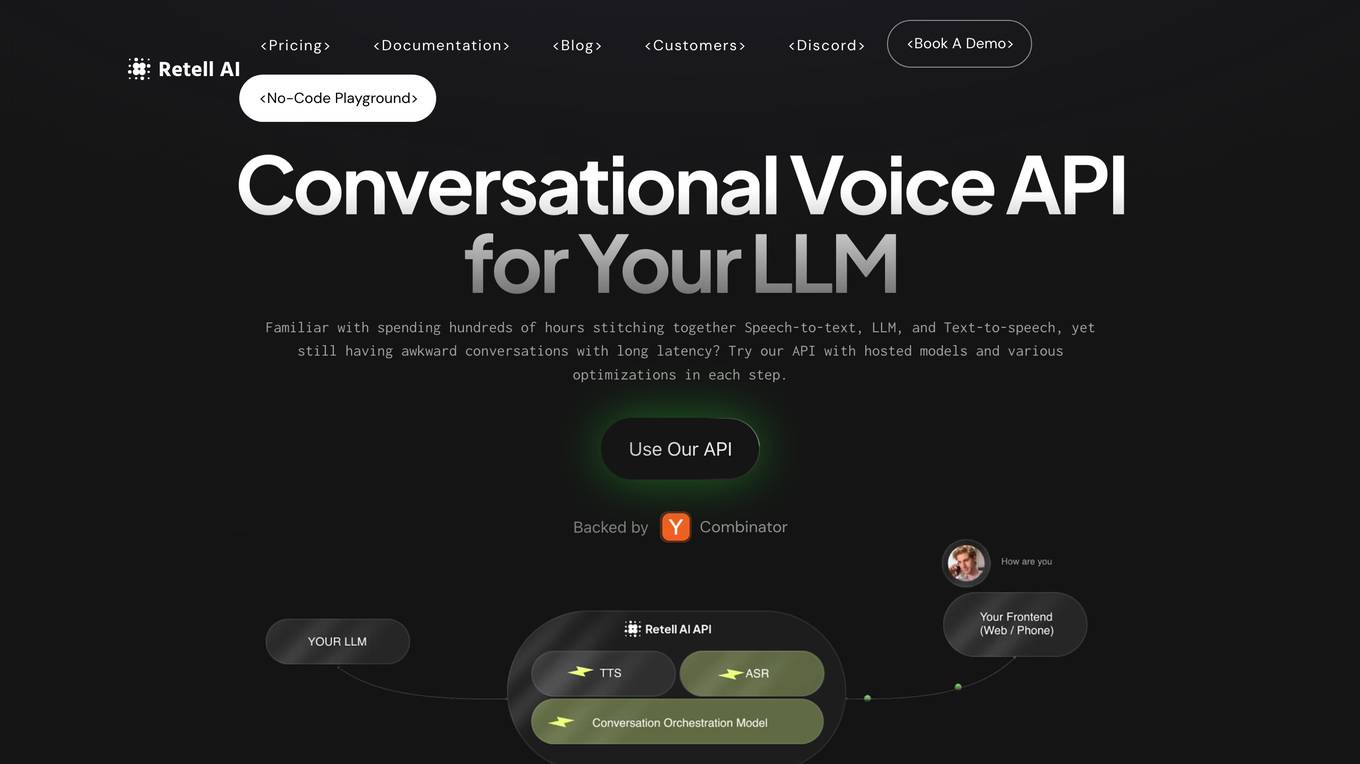

Retell AI

Retell AI provides a Conversational Voice API that enables developers to integrate human-like voice interactions into their applications. With the API, developers can connect their own Large Language Models (LLMs) to create AI-powered voice agents that hold natural conversations. Features include ultra-low latency, realistic voices with emotions, interruption handling, and end-of-turn detection. Developers can also customize aspects of the conversation experience, such as voice stability, backchanneling, and custom voice cloning, to tailor the AI agent to their needs. The API is designed to integrate easily with existing LLMs and frontend applications, making it accessible to developers of all levels.

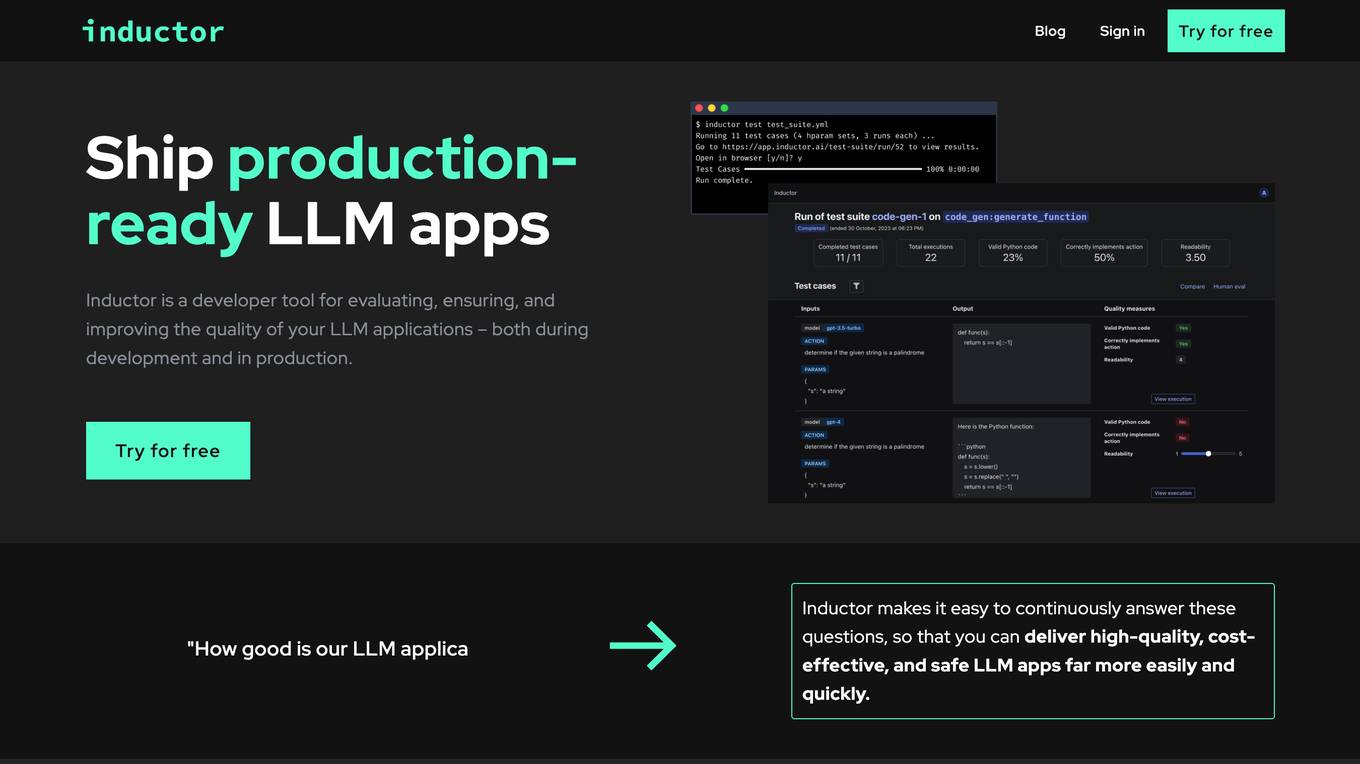

Inductor

Inductor is a developer tool for evaluating, ensuring, and improving the quality of LLM applications, both during development and in production. It provides a workflow for continuous testing and evaluation during development, so that the quality of the LLM app is always known. Teams can systematically improve quality and cost-effectiveness by understanding the app's behavior and quickly testing different app variants, and can rigorously assess that behavior before deployment. In production, Inductor monitors live traffic to detect and resolve issues, analyze usage, and feed findings back into the development process. It also supports collaboration between engineering and other roles, collecting human feedback from non-engineering stakeholders (e.g., PM, UX, or subject matter experts) to ensure that the LLM app is user-ready.
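The idea of testing different app variants against a fixed test suite can be sketched in a few lines. This is a generic illustration, not Inductor's API: the test cases, the substring-based pass criterion, and the stand-in functions `variant_a` and `variant_b` (which replace real LLM calls) are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical test suite: (prompt, substring the answer must contain).
TEST_CASES: List[Tuple[str, str]] = [
    ("What is 2 + 2?", "4"),
    ("Capital of France?", "Paris"),
]


def pass_rate(app: Callable[[str], str], cases: List[Tuple[str, str]]) -> float:
    """Fraction of cases whose output contains the expected substring (case-insensitive)."""
    passed = sum(expected.lower() in app(prompt).lower() for prompt, expected in cases)
    return passed / len(cases)


def compare_variants(
    variants: Dict[str, Callable[[str], str]], cases: List[Tuple[str, str]]
) -> Dict[str, float]:
    """Run every variant on the same cases and report its pass rate."""
    return {name: pass_rate(app, cases) for name, app in variants.items()}


# Stand-ins for two app variants; a real app would call an LLM here.
def variant_a(prompt: str) -> str:
    return "The answer is 4." if "2 + 2" in prompt else "I am not sure."


def variant_b(prompt: str) -> str:
    return "4" if "2 + 2" in prompt else "Paris"


if __name__ == "__main__":
    print(compare_variants({"a": variant_a, "b": variant_b}, TEST_CASES))
```

Running the same suite on every change is what makes a quality regression visible before deployment; real evaluators usually go beyond substring checks to include human or model-based grading.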

WeGPT.ai

WeGPT.ai is an AI tool that focuses on enhancing Generative AI capabilities through Retrieval Augmented Generation (RAG). It provides versatile tools for web browsing, REST APIs, image generation, and coding playgrounds. The platform offers consumer and enterprise solutions, multi-vendor support, and access to major frontier LLMs. With a comprehensive approach, WeGPT.ai aims to deliver better results, user experience, and cost efficiency by keeping AI models up-to-date with the latest data.
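Retrieval Augmented Generation can be summarized as: find the documents most relevant to a question, then place them in the prompt so the model answers from current data. The sketch below shows that flow with a simple bag-of-words cosine similarity. It does not reflect WeGPT.ai's implementation; the function names and the prompt wording are assumptions, and production systems generally use learned embeddings rather than word counts.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the question in the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

The resulting prompt would then be sent to whichever LLM the application uses; keeping the document store fresh is what keeps the answers up to date without retraining the model.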

AiPlus

AiPlus is an AI tool designed to serve as a cost-efficient model gateway. It offers users a platform to access and utilize various AI models for their projects and tasks. With AiPlus, users can easily integrate AI capabilities into their applications without the need for extensive development or resources. The tool aims to streamline the process of leveraging AI technology, making it accessible to a wider audience.

Derwen

Derwen is an open-source integration platform for production machine learning in the enterprise, specializing in natural language processing, graph technologies, and decision support. It offers expertise in developing knowledge graph applications and domain-specific authoring. Derwen collaborates closely with Hugging Face and provides strong data privacy guarantees, a low carbon footprint, and no cloud vendor involvement. Active since 2017, the platform aims to give AI engineers and domain experts quality, time-to-value, and ownership.

Flow AI

Flow AI is an advanced AI tool designed for evaluating and improving Large Language Model (LLM) applications. It offers a unique system for creating custom evaluators, deploying them with an API, and developing specialized LMs tailored to specific use cases. The tool aims to revolutionize AI evaluation and model development by providing transparent, cost-effective, and controllable solutions for AI teams across various domains.

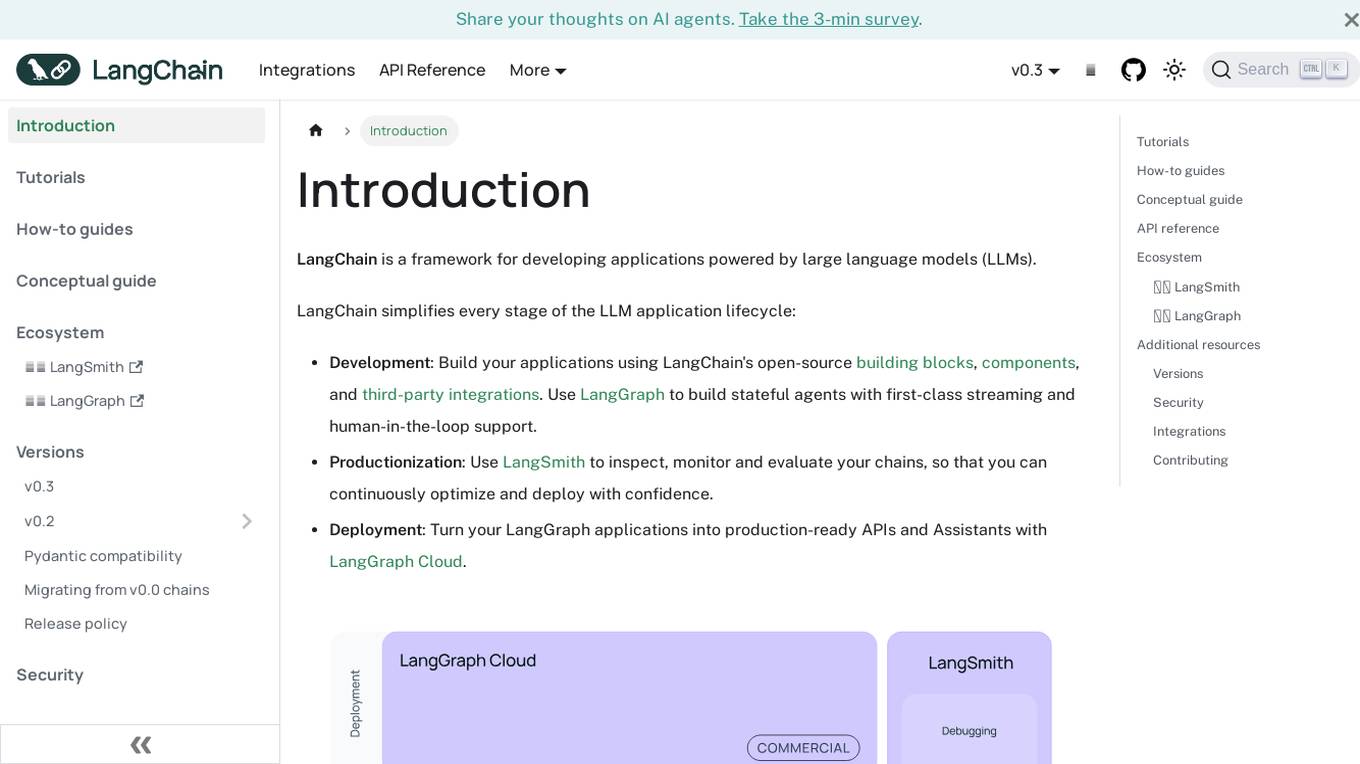

LangChain

LangChain is a framework for developing applications powered by large language models (LLMs). It simplifies every stage of the LLM application lifecycle, including development, productionization, and deployment. LangChain consists of open-source libraries such as langchain-core, langchain-community, and partner packages. It also includes LangGraph for building stateful agents and LangSmith for debugging and monitoring LLM applications.

YourGPT

YourGPT is a suite of next-generation AI products designed to empower businesses with the potential of Large Language Models (LLMs). Its products include a no-code AI Chatbot solution for customer support and LLM Spark, a developer platform for building and deploying production-ready LLM applications. YourGPT prioritizes data security and is GDPR compliant, ensuring the privacy and protection of customer data. With over 2,000 satisfied customers, YourGPT has earned trust through its commitment to quality and customer satisfaction.

Placeholder Website

The website is a simple and straightforward platform that seems to lack content or functionality. It appears to be a placeholder or under construction. There is no specific information available on the site, and it seems to be in a basic state of development.

ITRex

ITRex is a company that specializes in Gen AI, data, and agentic system development. It offers a wide range of services, including AI strategy consulting, AI product discovery, AI design and development, data consulting, and IoT solutions. ITRex focuses on end-to-end AI solutions tailored to each client, from strategy to deployment. Its expertise spans industries such as healthcare, logistics, manufacturing, and retail.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

OdiaGenAI

OdiaGenAI is a collaborative initiative focused on conducting research on Generative AI and Large Language Models (LLM) for the Odia Language. The project aims to leverage AI technology to develop Generative AI and LLM-based solutions for the overall development of Odisha and the Odia language through collaboration among Odia technologists. The initiative offers pre-trained models, codes, and datasets for non-commercial and research purposes, with a focus on building language models for Indic languages like Odia and Bengali.

Dify.AI

Dify.AI is a generative AI application development platform that allows users to create AI agents, chatbots, and other AI-powered applications. It provides a variety of tools and services to help developers build, deploy, and manage their AI applications. Dify.AI is designed to be easy to use, even for those with no prior experience in AI development.

NOCODING AI

NOCODING AI is an innovative AI tool that allows users to create advanced applications without the need for coding skills. The platform offers a user-friendly interface with drag-and-drop functionality, making it easy for individuals and businesses to develop custom solutions. With NOCODING AI, users can build chatbots, automate workflows, analyze data, and more, all without writing a single line of code. The tool leverages machine learning algorithms to streamline the development process and empower users to bring their ideas to life quickly and efficiently.

Anyscale

Anyscale is a company that provides a scalable compute platform for AI and Python applications. Their platform includes a serverless API for serving and fine-tuning open LLMs, a private cloud solution for data privacy and governance, and an open source framework for training, batch, and real-time workloads. Anyscale's platform is used by companies such as OpenAI, Uber, and Spotify to power their AI workloads.

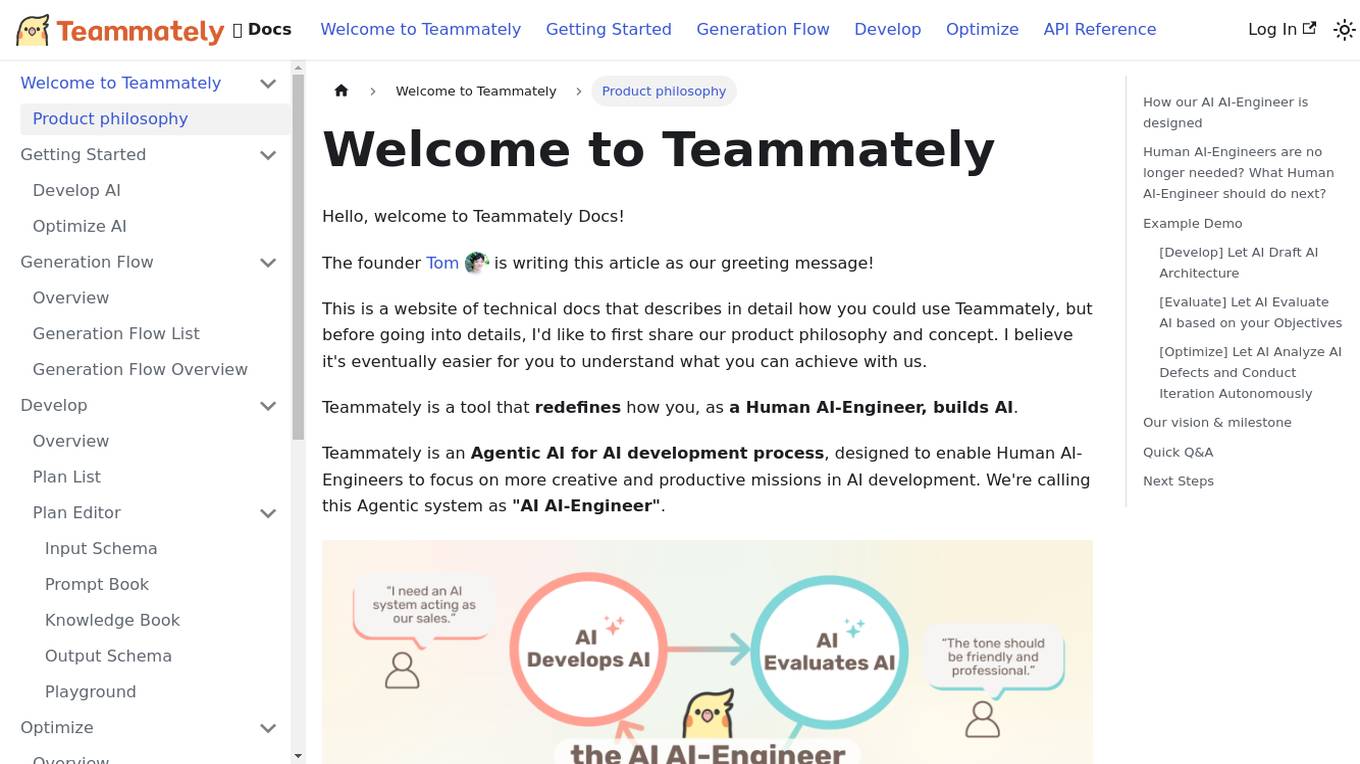

Teammately

Teammately is an AI tool that redefines how Human AI-Engineers build AI. It is an agentic AI for the AI development process, designed to let Human AI-Engineers focus on more creative and productive work. Teammately follows the best practices of Human LLM DevOps and offers features such as Development Prompt Engineering, Knowledge Tuning, Evaluation, and Optimization. The tool aims to let AI AI-Engineers handle technical tasks while Human AI-Engineers focus on planning and on aligning AI with human preferences and requirements.

1 - Open Source AI Tools

maxtext

MaxText is a high-performance, highly scalable, open-source LLM written in pure Python/JAX and targeting Google Cloud TPUs and GPUs for training and inference. MaxText achieves high model FLOPs utilization (MFU) and scales from a single host to very large clusters while staying simple and "optimization-free" thanks to the power of JAX and the XLA compiler. MaxText aims to be a launching-off point for ambitious LLM projects in both research and production. Users are encouraged to start by experimenting with MaxText out of the box and then fork and modify it to meet their needs.
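For readers unfamiliar with MFU, it is the ratio of the FLOPs a training run actually achieves per second to the hardware's theoretical peak. The sketch below uses the common rule of thumb of about 6 FLOPs per parameter per token for training a dense model, which ignores attention cost. It is not taken from MaxText's code, and the numbers in the usage lines are made up for illustration.

```python
def training_flops_per_token(num_params: float) -> float:
    """Approximate training FLOPs per token for a dense model (forward + backward)."""
    return 6.0 * num_params


def mfu(
    num_params: float,
    tokens_per_second: float,
    num_chips: int,
    peak_flops_per_chip: float,
) -> float:
    """Model FLOPs utilization: achieved FLOPs per second divided by peak FLOPs per second."""
    achieved = training_flops_per_token(num_params) * tokens_per_second
    peak = num_chips * peak_flops_per_chip
    return achieved / peak


if __name__ == "__main__":
    # Illustrative values only: 1B parameters, 100k tokens/s, 4 chips at 3e14 FLOP/s each.
    print(f"MFU = {mfu(1e9, 1e5, 4, 3e14):.2f}")
```

With these illustrative inputs the run achieves 6e14 FLOP/s against a 1.2e15 FLOP/s peak, so the script prints an MFU of 0.50.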

20 - OpenAI GPTs

CISO GPT

Specialized LLM in computer security, acting as a CISO with 20 years of experience, providing precise, data-driven technical responses to enhance organizational security.

HackMeIfYouCan

Hack Me if you can - I can only talk to you about computer security, software security and LLM security @JacquesGariepy

Algorithm Expert

I develop and optimize algorithms with a technical and analytical approach.

Gastronomica

Develop recipes with a deep knowledge of food and culinary science, the art of gastronomy, as well as a sense of aesthetics.

ConsultorIA

I develop AI implementation proposals based on your specific needs, focusing on value and affordability.

Training Innovator

Helps develop training modules in Business, Management, Leadership, and HRM.

AI Assistant for Writers and Creatives

Organize and develop ideas, respecting privacy and copyright laws.

Python Code Refactor and Developer

I refactor and develop Python code for clarity and functionality.

IdeasGPT

AI to help expand and develop ideas. Start a conversation with "IdeaGPT", "Here is an idea", or "I have an idea", followed by your idea.