Best AI tools to detect risks

20 - AI Tool Sites

Giskard

Giskard is an automated Red Teaming platform designed to prevent security vulnerabilities and business compliance failures in AI agents. It offers advanced features for detecting AI vulnerabilities, proactive monitoring, and aligning AI testing with real business requirements. The platform integrates with observability stacks, provides enterprise-grade security, and ensures data protection. Giskard is trusted by enterprise AI teams and has been used to detect over 280,000 AI vulnerabilities.

DryRun Security

DryRun Security is an AI-driven application security tool that uses Contextual Security Analysis to detect and prevent logic flaws, authorization gaps, insecure direct object references (IDOR), and other code risks. It offers code insights, natural-language code policies, and customizable notifications and reporting. The tool serves CISOs, security leaders, and developers by strengthening code security, streamlining compliance, increasing developer engagement, and providing real-time feedback. DryRun Security supports a range of languages and frameworks and integrates with GitHub and Slack for collaboration.

ScamMinder

ScamMinder is an AI-powered tool that aims to improve online safety by analyzing and evaluating websites in real time. It uses machine learning to give users a safety score and detailed insights that highlight potential risks and red flags. This helps users make informed decisions about engaging with websites, businesses, and other online entities. With a focus on trustworthiness assessment, the tool aims to protect users from deceptive schemes and safeguard their digital presence.

CopySight

CopySight is an ML-powered legal framework that enables enterprises to copyright AI-generated content. It caters to medium and large companies producing high volumes of visual content, offering a solution for marketing, creative, and legal teams, as well as business executives. With CopySight, users can confidently integrate AI content into their strategic plans while ensuring legal protection and peace of mind. The application helps streamline content creation, safeguard IP rights, unlock higher margins, and detect infringement risks.

Picterra

Picterra is a geospatial AI platform that offers solutions for sustainability, compliance, monitoring, and verification. It provides an all-in-one plot monitoring system, professional services, and interactive product tours. Users can build custom AI models to detect objects, changes, or patterns in various types of geospatial imagery. Picterra positions its AI technology as category-leading and aims to help users solve geospatial analysis challenges quickly, collaborate more effectively, and scale further.

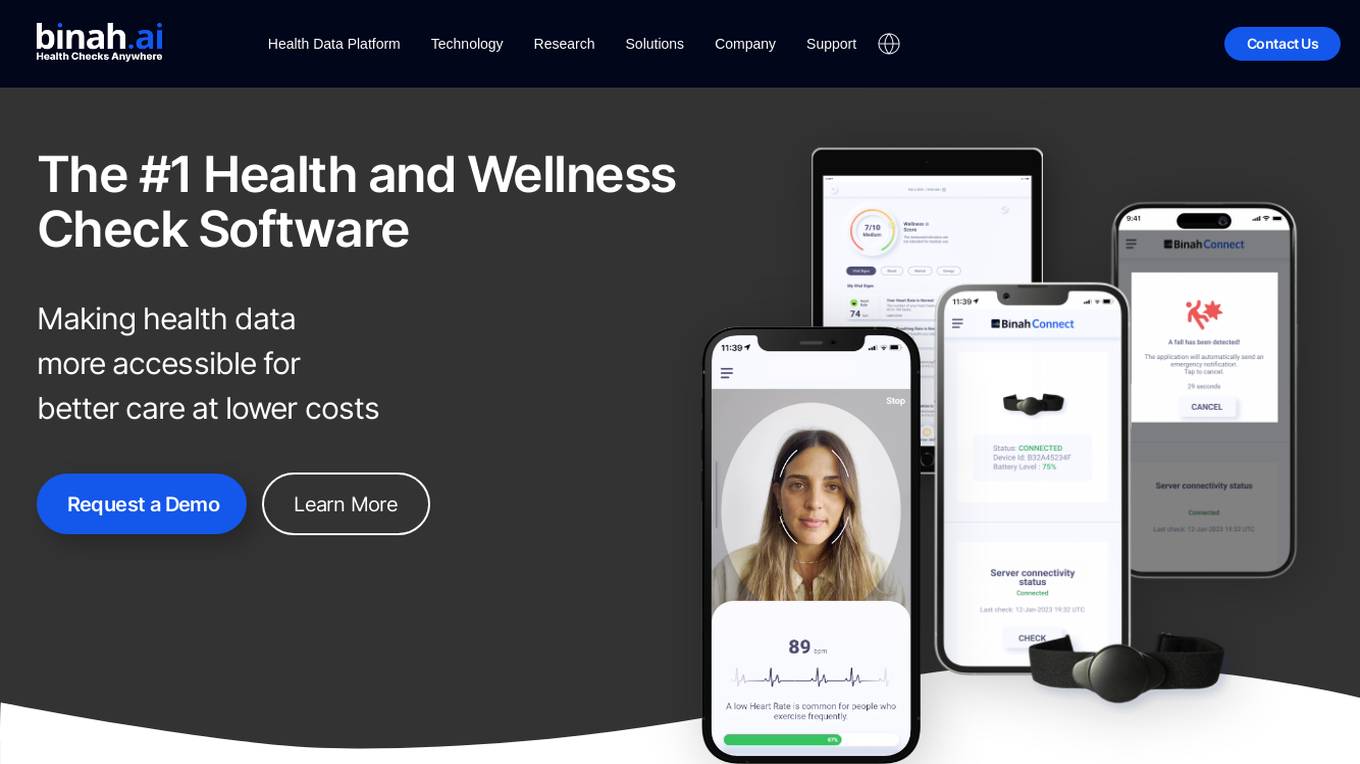

Binah.ai

Binah.ai is an AI-powered health data platform that offers a software solution for video-based vital signs monitoring. The platform lets users measure various health and wellness indicators using a smartphone, tablet, or laptop. It supports continuous monitoring using raw photoplethysmography (PPG) signals from external sensors and offers features such as blood pressure monitoring, heart rate variability, oxygen saturation, and more. Binah.ai aims to make health data more accessible, enabling better care at lower cost, by leveraging AI and deep learning algorithms.

SymphonyAI Financial Crime Prevention AI SaaS Solutions

SymphonyAI offers AI SaaS solutions for financial crime prevention, helping organizations detect fraud, conduct customer due diligence, and prevent payment fraud. Their solutions leverage generative and predictive AI to enhance efficiency and effectiveness in investigating financial crimes. SymphonyAI's products cater to industries like banking, insurance, financial markets, and private banking, providing rapid deployment, scalability, and seamless integration to meet regulatory compliance requirements.

Unit21

Unit21 is a customizable no-code platform designed for risk and compliance operations. It empowers organizations to combat financial crime by providing end-to-end lifecycle risk analysis, fraud prevention, case management, and real-time monitoring solutions. The platform offers features such as AI Copilot for alert prioritization, Ask Your Data for data analysis, Watchlist & Sanctions for ongoing screening, and more. Unit21 focuses on fraud prevention and AML compliance, simplifying operations and accelerating investigations to respond to financial threats effectively and efficiently.

Overwatch Data

Overwatch Data is an AI-powered threat intelligence platform that provides fraud and cyber threat intelligence to businesses. The platform uses AI agents to monitor over 300,000 sources, including deep- and dark-web channels and social media platforms, to deliver real-time threat intelligence. Overwatch helps businesses prevent fraud campaigns, data breaches, and cyberattacks by providing personalized, contextualized intelligence. The platform offers customizable AI agents, tailored intelligence workflows, and context-rich alerts so that fraud and security teams can respond to threats quickly and confidently.

Prompt Security

Prompt Security is a platform that secures all uses of generative AI within an organization, from tools used by employees to customer-facing apps.

VOLT AI

VOLT AI is a cloud-based enterprise security application that uses AI to intercept threats in real time. It offers solutions for sectors such as education, corporate campuses, and cities, focusing on perimeter security, medical emergencies, and weapons detection. VOLT AI provides unified cameras, video intelligence, real-time notifications, automated escalations, and digital twin creation for advanced situational awareness. The application aims to enhance safety and security by detecting security risks and notifying users promptly.

TradeOS AI

TradeOS AI is an advanced AI tool designed to provide professional insights for traders and investors. The platform utilizes cutting-edge artificial intelligence algorithms to analyze market trends, predict price movements, and offer personalized trading recommendations. With TradeOS AI, users can access real-time data, historical analysis, and market sentiment indicators to make informed decisions and optimize their trading strategies. Whether you are a novice trader or an experienced investor, TradeOS AI empowers you with the tools and knowledge needed to succeed in the financial markets.

Lakera

Lakera describes itself as the world's most advanced AI security platform, offering solutions that safeguard GenAI applications against a range of security threats. Lakera provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks. Lakera is aimed at security teams, product teams, and LLM builders who need to secure their AI applications.

Endor Labs

Endor Labs is an AI-powered software supply chain security solution that helps organizations manage their software bills of materials (SBOM), secure their open source dependencies, optimize CI/CD pipeline security, and enhance application security with secret detection. The platform offers advanced features such as AI-assisted OSS selection, compliance management, reachability-based SCA, and repository security posture management. Endor Labs aims to streamline security processes, reduce false positives, and provide actionable insights to improve software supply chain security.

CUBE3.AI

CUBE3.AI is a real-time crypto fraud prevention tool that utilizes AI technology to identify and prevent various types of fraudulent activities in the blockchain ecosystem. It offers features such as risk assessment, real-time transaction security, automated protection, instant alerts, and seamless compliance management. The tool helps users protect their assets, customers, and reputation by proactively detecting and blocking fraud in real-time.

MindBridge

MindBridge presents itself as a global leader in financial risk discovery and anomaly detection. The MindBridge AI Platform generates insights and assesses risks across critical business operations. Its products include General Ledger Analysis, Company Card Risk Analytics, Payroll Risk Analytics, Revenue Risk Analytics, and Vendor Invoice Risk Analytics. Combining over 250 unique machine learning control points, statistical methods, and traditional rules, MindBridge is used by over 27,000 accounting, finance, and audit professionals worldwide.

Qwiet AI

Qwiet AI is a code vulnerability detection platform that accelerates secure coding by uncovering, prioritizing, and generating fixes for top vulnerabilities in a single scan. It offers AI-enhanced SAST, contextual SCA, AI AutoFix, container security, SBOM generation, and secrets detection. Qwiet AI helps corporate InfoSec teams accurately pinpoint and automatically fix risks in their code, reducing false positives and remediation time. The platform provides a unified vulnerability dashboard, prioritizes risks, and offers tailored fix suggestions based on the full context of the code.

Fraud.net

Fraud.net is an AI-powered fraud detection and prevention platform designed for enterprises. It offers a comprehensive and customizable solution to manage and prevent financial fraud and risks. The platform utilizes AI and machine learning technologies to provide real-time monitoring, analytics, and reporting, helping businesses in various industries to combat fraud effectively. Fraud.net's solutions are trusted by CEOs, directors, technology and security officers, fraud managers, and analysts to ensure trust and beat fraud at every step of the customer lifecycle.

Brighterion AI

Brighterion AI, a Mastercard company, offers AI solutions for financial institutions, merchants, and healthcare providers. Drawing on over 20 years of experience, Brighterion provides market-ready models that aim to enhance customer experience, reduce financial fraud, and mitigate risk. Its solutions are enriched with intelligence from Mastercard's global network to support scalability and personalization. Brighterion's AI applications serve acquirers, PSPs, issuers, and healthcare providers, with custom solutions for transaction fraud monitoring, merchant monitoring, AML and compliance, and healthcare fraud detection. The company has received several awards for its work in AI and financial security.

Sixfold

Sixfold is an AI risk assessment solution built exclusively for insurance underwriters. The platform aims to improve underwriting efficiency, accuracy, and transparency for insurers, MGAs, and reinsurers. Sixfold's AI capabilities enable faster case reviews and appetite-aware risk insights, and they cut data gathering from days to minutes. The application ingests underwriting guidelines, extracts risk data, and provides risk insights tailored to each insurer's risk preferences. It offers customized solutions for various insurance lines, with intake prioritization, contextual risk insights, risk signal detection, inconsistency identification, and data summarization.

0 - Open Source AI Tools

20 - OpenAI GPTs

ethicallyHackingspace (eHs)® METEOR™ STORM™

A non-profit AI co-pilot product for Multiple Environment Threat Evaluation of Resources (METEOR)™ and Space Threats and Operational Risks to Mission (STORM)™.

Blue Team Guide

A curated collection of knowledge, insights, and guidelines designed to help organizations build, strengthen, and refine their cybersecurity defenses.

Mónica

A CSIRT lead who heads a team specialized in detecting and responding to security incidents, manages containment and recovery, organizes training and drills, writes reports to optimize security strategies, and coordinates with legal authorities when necessary.

Phoenix Vulnerability Intelligence GPT

An expert in vulnerability analysis with a focus on ransomware, powered by intelligence from Phoenix Security.

Financial Cybersecurity Analyst - Lockley Cash v1

stunspot's advisor for all things financial cybersecurity.

Phish or No Phish Trainer

Hone your phishing detection skills! Analyze emails, texts, and calls to spot deception. Become a security pro!

FallacyGPT

Detects logical fallacies and lapses in critical thinking, in the style of Socrates, to help you avoid misinformation.

AI Detector

AI Detector GPT is powered by Winston AI and helps identify AI-generated content. It is designed to detect the use of AI writing chatbots such as ChatGPT, Claude, and Bard and to help maintain integrity in academia and publishing. Winston AI bills itself as the most trusted AI content detector.