Best AI tools for Deploy To Cloud

20 - AI tool Sites

Lazy AI

Lazy AI is an AI tool that enables users to quickly build and modify web apps with prompts and deploy them to the cloud with one click. Users can create applications such as customer portals, API endpoints for AI text summarization, metrics dashboards, web scrapers, chatbots, and Discord bots. The platform offers a wide range of template categories and tools for automation, data mining, AI agents, dashboards, reporting, and more. Users can also access reusable templates from the Lazy AI community to streamline their development process.

Lazy AI

Lazy AI is a platform that enables users to build full-stack web applications 10 times faster by utilizing AI technology. Users can create and modify web apps with prompts and deploy them to the cloud with one click. The platform offers a variety of features including AI Component Builder, eCommerce store creation, Crypto Arbitrage Scraper, Text to Speech Converter, Lazy Image to Video generation, PDF Chatbot, and more. Lazy AI aims to streamline the app development process and empower users to leverage AI for various tasks.

AIPage.dev

AIPage.dev is an AI-powered landing page generator that simplifies web development by utilizing cutting-edge AI technology. It allows users to create stunning landing pages with just a single prompt, eliminating the need for hours of coding and designing. The platform offers features like AI-driven design, intuitive editing interface, seamless cloud deployment, rapid development, effortless blog post creation, unlimited hosting for blog posts, lead collection, and seamless integration with leading providers. AIPage.dev aims to transform ideas into reality and empower users to showcase their projects and products effectively.

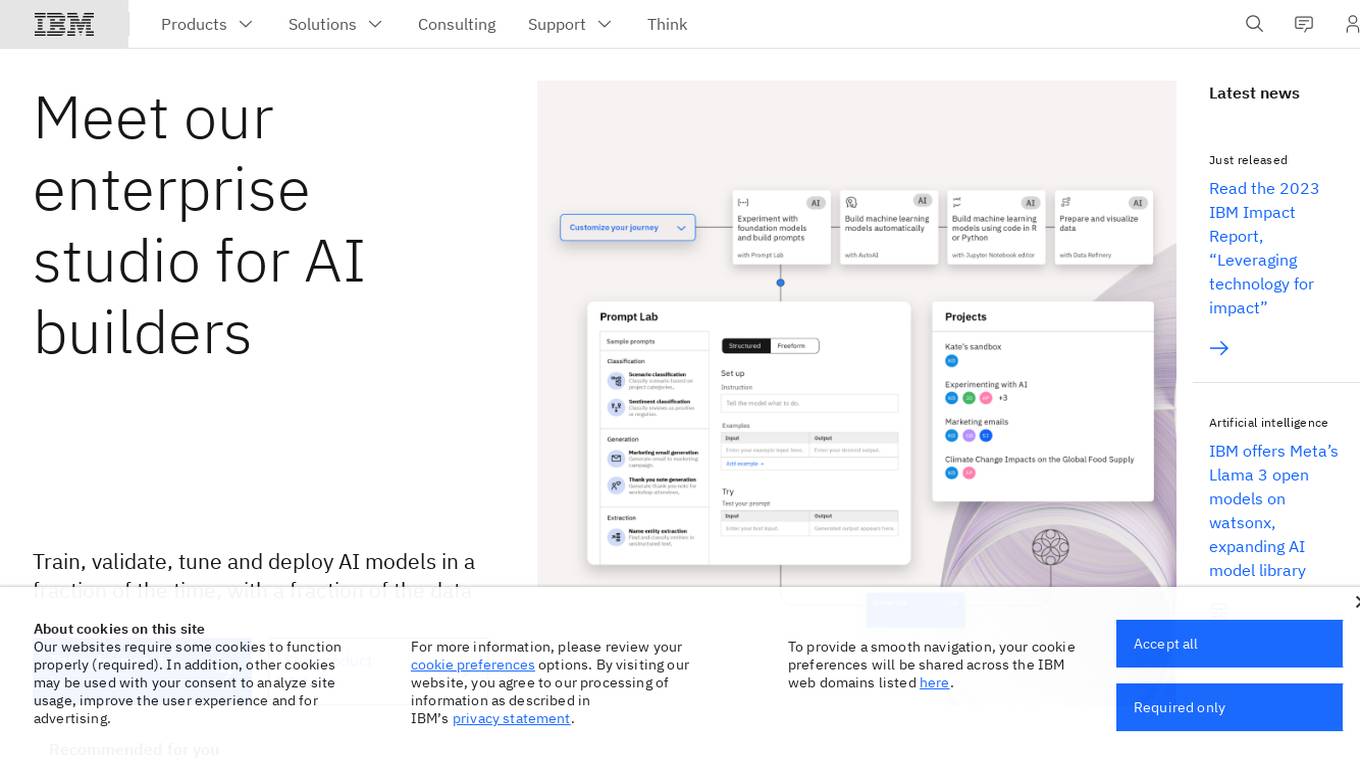

IBM Watsonx

IBM Watsonx is an enterprise studio for AI builders. It provides a platform to train, validate, tune, and deploy AI models quickly and efficiently. With Watsonx, users can access a library of pre-trained AI models, build their own models, and deploy them to the cloud or on-premises. Watsonx also offers a range of tools and services to help users manage and monitor their AI models.

Modal

Modal is a high-performance cloud platform designed for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

Mirage

Mirage is a custom AI platform that builds custom LLMs to accelerate productivity. It is backed by Sequoia and offers a variety of features, including the ability to create custom AI models, train models on your own data, and deploy models to the cloud or on-premises.

SmythOS

SmythOS is an AI-powered platform that allows users to create and deploy AI agents in minutes. With a user-friendly interface and drag-and-drop functionality, SmythOS enables users to build custom agents for various tasks without the need for manual coding. The platform offers pre-built agent templates, universal integration with AI models and APIs, and the flexibility to deploy agents locally or to the cloud. SmythOS is designed to streamline workflow automation, enhance productivity, and provide a seamless experience for developers and businesses looking to leverage AI technology.

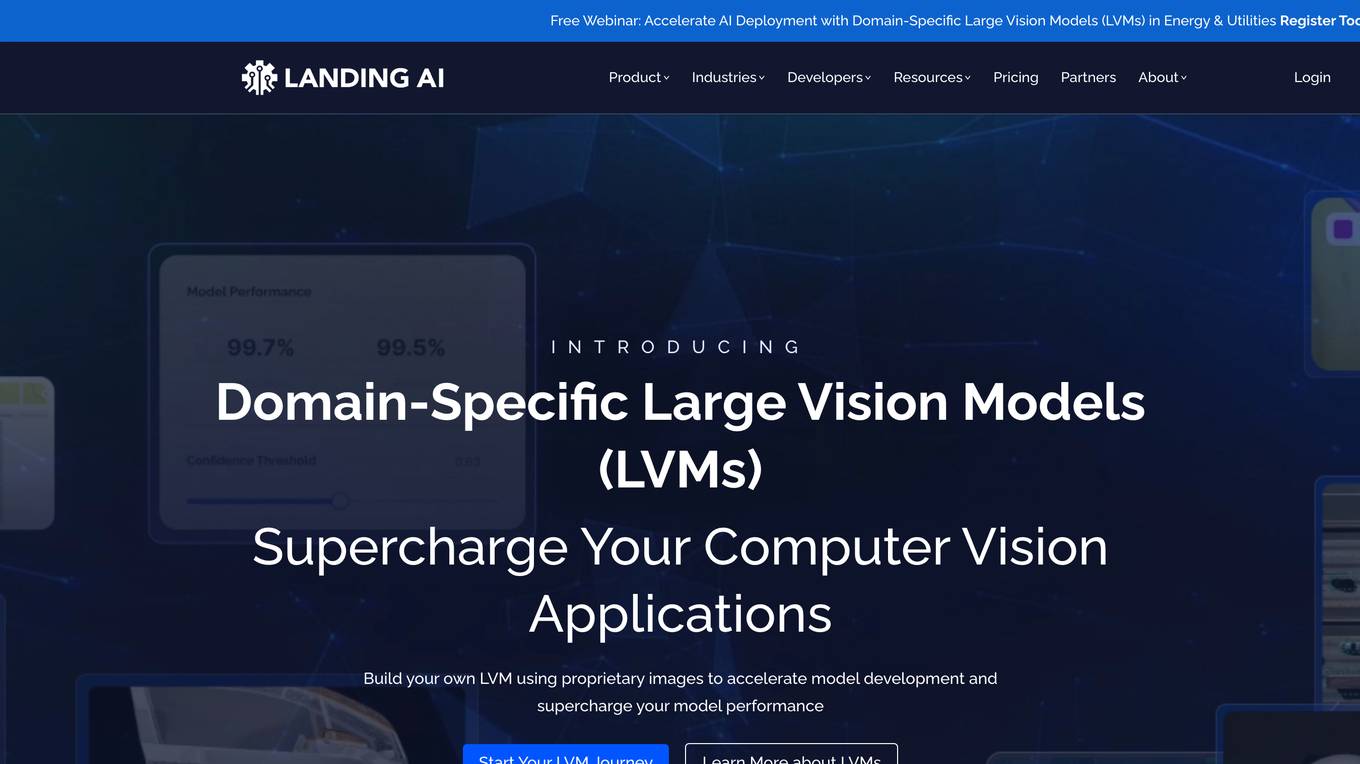

Landing AI

Landing AI is a computer vision platform and AI software company that provides a cloud-based platform for building and deploying computer vision applications. The platform includes a library of pre-trained models, a set of tools for data labeling and model training, and a deployment service that allows users to deploy their models to the cloud or edge devices. Landing AI's platform is used by a variety of industries, including automotive, electronics, food and beverage, medical devices, life sciences, agriculture, manufacturing, infrastructure, and pharma.

PoplarML

PoplarML is a platform that enables the deployment of production-ready, scalable ML systems with minimal engineering effort. It offers one-click deploys, real-time inference, and framework-agnostic support. With PoplarML, users can deploy ML models to a fleet of GPUs using a CLI tool and invoke their models through a REST API endpoint. The platform supports TensorFlow, PyTorch, and JAX models.
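As a rough illustration of what invoking a deployed model through a REST API endpoint could look like, here is a minimal Python sketch using only the standard library. The endpoint URL, the `{"inputs": ...}` payload shape, and the bearer-token header are all assumptions made for illustration; they are not taken from PoplarML's documentation, so check the provider's API reference for the real values.

```python
import json
import urllib.request

# Hypothetical placeholders: not real PoplarML values.
ENDPOINT_URL = "https://example.invalid/v1/models/my-model/infer"
API_KEY = "YOUR_API_KEY"


def build_inference_request(inputs, url=ENDPOINT_URL, api_key=API_KEY):
    """Build (but do not send) a JSON POST request for a deployed model."""
    # Assumed payload shape: a single "inputs" field holding the model inputs.
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed auth scheme: bearer token.
            "Authorization": f"Bearer {api_key}",
        },
    )


def invoke(request, timeout=30):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Only builds and inspects the request; no network call is made here.
    req = build_inference_request([[0.1, 0.2, 0.3]])
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Building the request separately from sending it keeps the payload easy to inspect before pointing `invoke` at a real endpoint.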

Ardor

Ardor is an AI tool that offers an all-in-one agentic platform for automating the software development lifecycle. It helps users build, deploy, and scale AI agents on the cloud efficiently and cost-effectively. With Ardor, users can start with a prompt, design AI agents visually, see their product get built, refine and iterate, and launch in minutes. The platform provides real-time collaboration features, simple pricing plans, and various tools like Ardor Copilot, AI Agent-Builder Canvas, Instant Build Messages, AI Debugger, Proactive Monitoring, Role-Based Access Control, and Single Sign-On.

Pulumi

Pulumi is an AI-powered infrastructure as code tool that allows engineers to manage cloud infrastructure using languages and runtimes such as Node.js (JavaScript and TypeScript), Python, Go, .NET, and Java, as well as YAML. It offers features such as generative AI-powered cloud management, security enforcement through policies, automated deployment workflows, asset management, compliance remediation, and AI insights over the cloud. Pulumi helps teams provision, automate, and evolve cloud infrastructure, centralize and secure secrets management, and gain security, compliance, and cost insights across all cloud assets.

Fifi.ai

Fifi.ai is a managed AI cloud platform that provides users with the infrastructure and tools to deploy and run AI models. The platform is designed to be easy to use, with a focus on plug-and-play functionality. Fifi.ai also offers a range of customization and fine-tuning options, allowing users to tailor the platform to their specific needs. The platform is supported by a team of experts who can provide assistance with onboarding, API integration, and troubleshooting.

Arya.ai

Arya.ai is an AI tool designed for banks, insurers, and financial services firms to deploy safe, responsible, and auditable AI applications. It offers a range of AI Apps, ML Observability Tools, and a Decisioning Platform. Arya.ai provides curated APIs, ML explainability, monitoring, and audit capabilities. The platform includes task-specific AI models for autonomous underwriting, claims processing, fraud monitoring, and more. Arya.ai aims to facilitate the rapid deployment and scaling of AI applications while ensuring institution-wide adoption of responsible AI practices.

Nomi.cloud

Nomi.cloud is a modern AI-powered CloudOps and HPC assistant designed for next-gen businesses. It offers developer resources, a marketplace, enterprise solutions, and a pricing console. With features like a single-pane-of-glass view, instant deployment, continuous monitoring, AI-powered insights, and built-in budgets and alerts, Nomi.cloud aims to revolutionize cloud management. It provides a user-friendly interface to manage infrastructure efficiently, optimize costs, and deploy resources across multiple regions with ease. Nomi.cloud is built for scale, trusted by enterprises, and offers a range of GPUs and cloud providers to suit various needs.

LambdaTest

LambdaTest is a next-generation cloud platform for mobile app and cross-browser testing that offers a wide range of testing services. It allows users to perform manual, live-interactive cross-browser testing, run Selenium, Cypress, and Playwright scripts on cloud-based infrastructure, and execute AI-powered automation testing. The platform also provides accessibility testing, a real-device cloud, a visual regression cloud, and AI-powered test analytics. LambdaTest is trusted by over 2 million users globally and offers a unified digital experience testing cloud to accelerate go-to-market strategies.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

AI Safety Initiative

The AI Safety Initiative is a premier coalition of trusted experts that aims to develop and deliver essential AI guidance and tools for organizations to deploy safe, responsible, and compliant AI solutions. Through vendor-neutral research, training programs, and global industry experts, the initiative provides authoritative AI best practices and tools. It offers certifications, training, and resources to help organizations navigate the complexities of AI governance, compliance, and security. The initiative focuses on AI technology, risk, governance, compliance, controls, and organizational responsibilities.

ClawOneClick

ClawOneClick is an AI tool that allows users to deploy their own AI assistant in seconds without the need for technical setup. It offers a one-click deployment of an always-on AI chatbot powered by the latest AI models. Users can choose from various AI models and messaging channels to customize their AI assistant. ClawOneClick handles all the cloud infrastructure provisioning and management, ensuring secure connections and end-to-end encryption. The tool is designed to adapt to various tasks and can assist with email summarization, quick replies, translation, proofreading, customer queries, report condensation, meeting reminders, voice memo transcription, deadline tracking, schedule organization, meeting action item capture, time zone coordination, task automation, expense logging, priority planning, content generation, idea brainstorming, fast topic research, book and article summarization, concept learning, creative suggestions, code explanation, document analysis, professional document drafting, project goal definition, team updates preparation, data trend interpretation, job posting writing, product and price comparison, meal plan suggestion, and more.

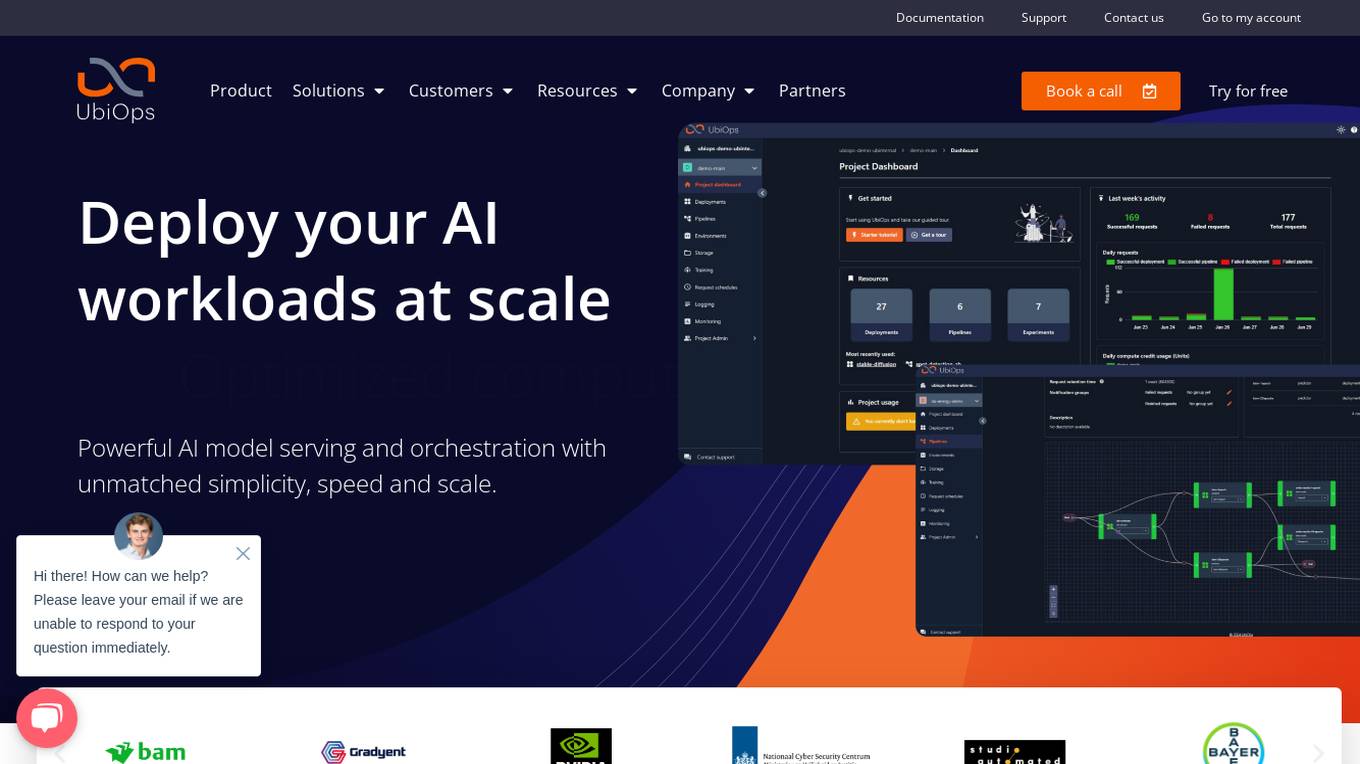

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI and ML workloads as reliable and secure microservices. It offers AI model serving and orchestration with an emphasis on simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications with a unique workflow management system.

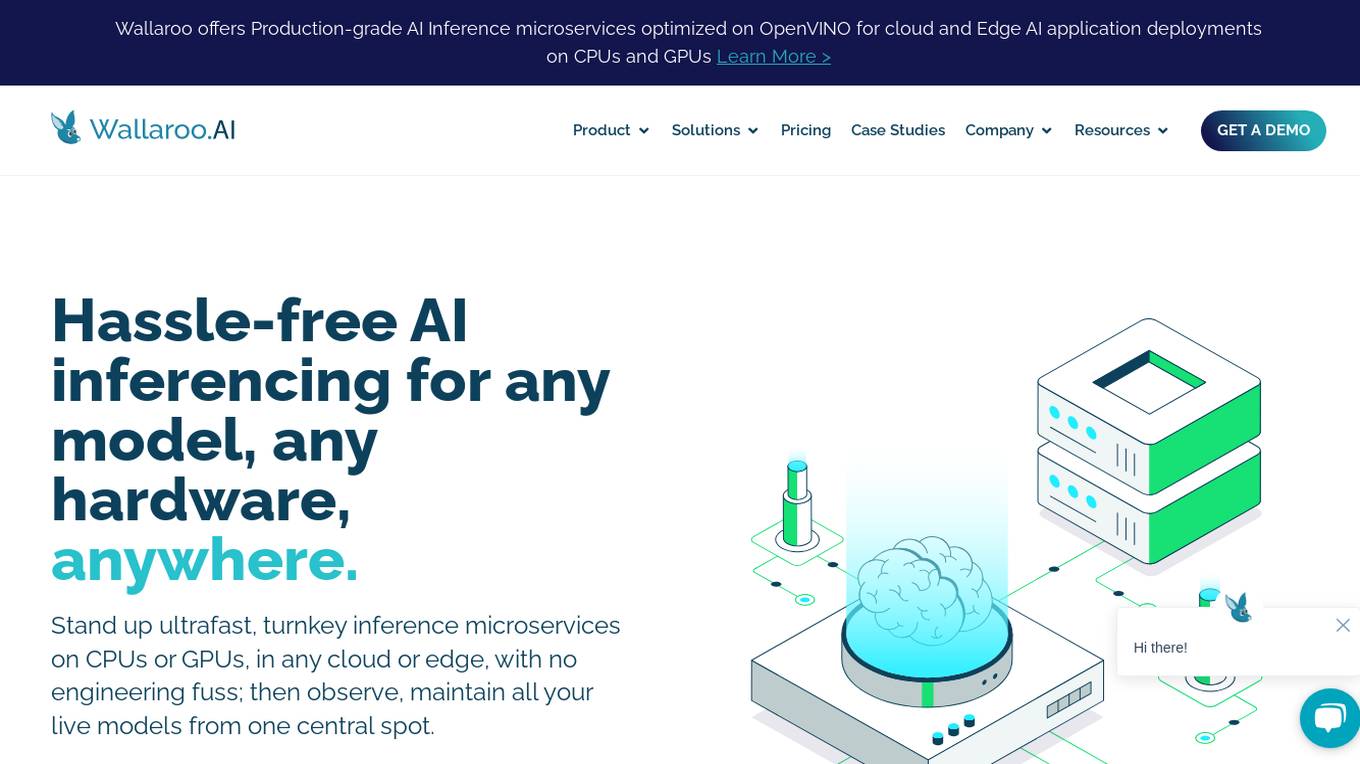

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

1 - Open Source AI Tools

serve

Jina-Serve is a framework for building and deploying AI services that communicate via gRPC, HTTP and WebSockets. It provides native support for major ML frameworks and data types, high-performance service design with scaling and dynamic batching, LLM serving with streaming output, built-in Docker integration and Executor Hub, one-click deployment to Jina AI Cloud, and enterprise-ready features with Kubernetes and Docker Compose support. Users can create gRPC-based AI services, build pipelines, scale services locally with replicas, shards, and dynamic batching, deploy to the cloud using Kubernetes, Docker Compose, or JCloud, and enable token-by-token streaming for responsive LLM applications.

20 - OpenAI GPTs

React on Rails Pro

Expert in Rails & React, focusing on high-standard software development.

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

T3 Stack Development Assistant (T3Stack開発アシスタント)

Supports development with the T3 Stack: Next.js, TypeScript, Prisma, tRPC, Tailwind CSS, NextAuth.js, and more.

![[latest] FastAPI GPT Screenshot](/screenshots_gpts/g-BhYCAfVXk.jpg)

[latest] FastAPI GPT

Up-to-date FastAPI coding assistant with knowledge of the latest version. Part of the [latest] GPTs family.

Solidity Master

I'll help you master Solidity to become a better smart contract developer.

API Architect

Create APIs from idea to deployment with beginner-friendly instructions, a structured layout, recommendations, and more.

Flask Expert Assistant

This GPT is a specialized assistant for Flask, the popular web framework in Python. It is designed to help both beginners and experienced developers with Flask-related queries, ranging from basic setup and routing to advanced features like database integration and application scaling.