Best AI tools for Deploy Local Models

20 - AI tool Sites

Picovoice

Picovoice is an on-device Voice AI and local LLM platform designed for enterprises. It offers a range of voice AI and LLM solutions, including speech-to-text, noise suppression, speaker recognition, speech-to-index, wake word detection, and more. Picovoice empowers developers to build virtual assistants and AI-powered products with compliance, reliability, and scalability in mind. The platform allows enterprises to process data locally without relying on third-party remote servers, ensuring data privacy and security. With a focus on cutting-edge AI technology, Picovoice enables users to stay ahead of the curve and adapt quickly to changing customer needs.

GptSdk

GptSdk is an AI tool that simplifies incorporating AI capabilities into PHP projects. It offers dynamic prompt management, model management, bulk testing, collaboration, chaining, integration, and more. The tool aims to let developers build professional AI applications 10x faster, integrates with Laravel and Symfony, and supports both local and API prompts. GptSdk is open source under the MIT License and offers a flexible pricing model with a generous free tier.

GrapixAI

GrapixAI is a leading provider of low-cost cloud GPU rental services and AI server solutions. The company's focus on flexibility, scalability, and cutting-edge technology enables a variety of AI applications in both local and cloud environments. GrapixAI advertises the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a powerful GPU search console, customizable pricing options, various security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

OsirisBrain

OsirisBrain is an AI desktop agent that offers autonomous AI automation for Mac, Windows, and Linux. It gives users access to 12 AI specialists on their desktop, each with a specialized system prompt tuned for its domain. The application evolves autonomously by analyzing, coding, testing, and deploying new specialized skills in real time. OsirisBrain runs locally on the user's machine, ensuring data privacy, and offers features such as custom skill building, remote chat, vision capabilities, multi-provider connectivity, zero-config memory, a learning loop, a plugin manager, voice input, a system monitor, and more. Users can use OsirisBrain for 3 hours free per day and benefit from features like Guardian Mode, End-to-End Encryption, Parental Mode, and a Cortex Memory System that mimics a real brain's memory architecture.

Restack

Restack is a developer tool and cloud infrastructure platform that enables users to build, launch, and scale AI products quickly and efficiently. With Restack, developers can go from local development to production in seconds, leveraging a variety of languages and frameworks. The platform offers templates, repository connections, and Dockerfile customization for seamless deployment. Restack Cloud provides cost-efficient scaling and GitHub integration for instant deployment. The platform simplifies the complexity of building and scaling AI applications, allowing users to move from code to production faster than ever before.

Team9

Team9 is an AI workspace application that allows users to hire AI Staff and collaborate with them as real teammates. It is built on OpenClaw and Moltbook, offering a zero-setup, managed OpenClaw experience. Users can assign tasks, share context, and coordinate work within the platform. Team9 aims to help users build an AI team, run AI-powered collaboration, and increase work efficiency with minimal overhead. The application connects users to the revolutionary AI agent ecosystem, providing instant deployment of OpenClaw without the need for complex setup or manual configuration.

Replit

Replit is a software creation platform that provides an integrated development environment (IDE), artificial intelligence (AI) assistance, and deployment services. It allows users to build, test, and deploy software projects directly from their browser, without the need for local setup or configuration. Replit offers real-time collaboration, code generation, debugging, and autocompletion features powered by AI. It supports multiple programming languages and frameworks, making it suitable for a wide range of development projects.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

Azure Static Web Apps

Azure Static Web Apps is a platform provided by Microsoft Azure for building and deploying modern web applications. It allows developers to easily host static web content and serverless APIs with seamless integration to popular frameworks like React, Angular, and Vue. With Azure Static Web Apps, developers can quickly set up continuous integration and deployment workflows, enabling them to focus on building great user experiences without worrying about infrastructure management.

PoplarML

PoplarML is a platform that enables the deployment of production-ready, scalable ML systems with minimal engineering effort. It offers one-click deploys, real-time inference, and framework-agnostic support. With PoplarML, users can deploy ML models to a fleet of GPUs using a CLI tool and invoke their models through a REST API endpoint. The platform supports TensorFlow, PyTorch, and JAX models.

ClawOneClick

ClawOneClick is an AI tool that allows users to deploy their own AI assistant in seconds without the need for technical setup. It offers a one-click deployment of an always-on AI chatbot powered by the latest AI models. Users can choose from various AI models and messaging channels to customize their AI assistant. ClawOneClick handles all the cloud infrastructure provisioning and management, ensuring secure connections and end-to-end encryption. The tool is designed to adapt to various tasks and can assist with email summarization, quick replies, translation, proofreading, customer queries, report condensation, meeting reminders, voice memo transcription, deadline tracking, schedule organization, meeting action item capture, time zone coordination, task automation, expense logging, priority planning, content generation, idea brainstorming, fast topic research, book and article summarization, concept learning, creative suggestions, code explanation, document analysis, professional document drafting, project goal definition, team updates preparation, data trend interpretation, job posting writing, product and price comparison, meal plan suggestion, and more.

Vercel

Vercel is an AI-powered cloud platform that enables developers to build, deploy, and scale web applications quickly and securely. It offers a range of developer tools and cloud infrastructure to optimize performance and enhance user experience. Vercel's AI capabilities include AI Cloud, AI SDK, AI Gateway, and Sandbox AI workflows, providing seamless integration of AI models into web applications.

TurboClaw

TurboClaw is an AI-powered platform that allows users to deploy their own 24/7 OpenClaw instance in under a minute, eliminating technical hassles. Users can choose from different AI models and messaging channels, and the platform handles server management, Docker setup, SSL configuration, and OpenClaw deployment. With TurboClaw, users can quickly set up AI bots for various tasks such as drafting replies, translating messages, organizing inboxes, managing subscriptions, finding best prices online, generating content ideas, setting goals, and more.

1ClickClaw

1ClickClaw is an AI tool that simplifies the process of deploying personal AI assistants by offering one-click deployment without the need for servers, SSH, or DevOps expertise. Users can choose from multiple AI models, connect their preferred messaging channels, and deploy their AI assistant in under a minute on a dedicated cloud server. The application streamlines the traditional time-consuming setup process, making AI deployment accessible to users without technical knowledge.

Hanabi.rest

Hanabi.rest is an AI-based API building platform that allows users to create REST APIs from natural language and screenshots using AI technology. Users can deploy the APIs on Cloudflare Workers and roll them out globally. The platform offers a live editor for testing database access and API endpoints, generates code compatible with various runtimes, and provides features like sharing APIs via URL, npm package integration, and CLI dump functionality. Hanabi.rest simplifies API design and deployment by leveraging natural language processing, image recognition, and v0.dev components.

Superflows

Superflows is a tool that allows you to add an AI Copilot to your SaaS product. This AI Copilot can answer questions and perform tasks for users via chat. It is designed to be easy to set up and configure, and it can be integrated into your codebase with just a few lines of code. Superflows is a great way to improve the user experience of your SaaS product and help users get the most out of your software.

Dreamlab

Dreamlab is a multiplayer game creation platform that allows users to build and deploy great 2D multiplayer games quickly and effortlessly. It features an in-browser collaborative editor, easy integration of multiplayer functionality, and AI assistance for code generation. Dreamlab is designed for indie game developers, game jam participants, Discord server activities, and rapid prototyping. Users can start building their games instantly without the need for downloads or installations. The platform offers simple and transparent pricing with a free plan and a pro plan for serious game developers.

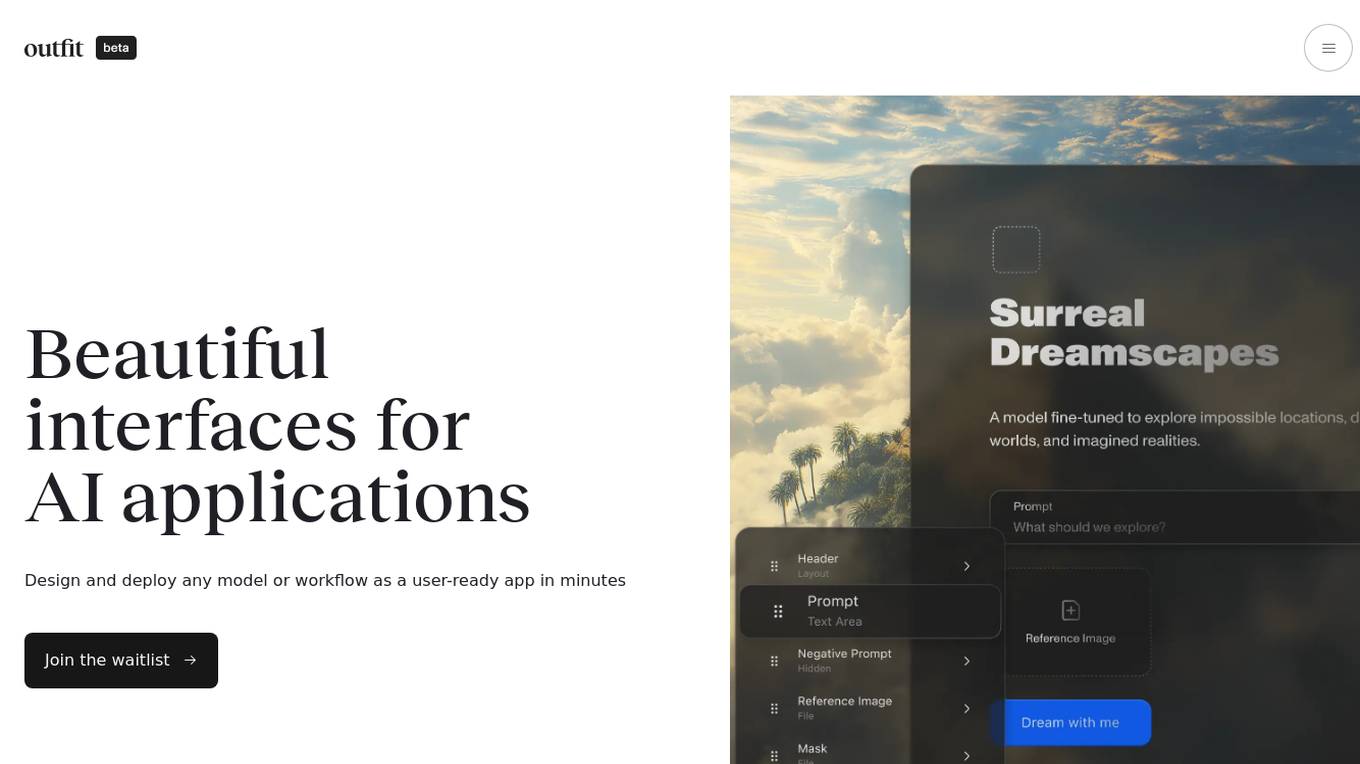

Outfit AI

Outfit AI is an AI tool that enables users to design and deploy AI models or workflows as user-ready applications in minutes. It allows users to create custom user interfaces for their AI-powered apps by dropping in an API key from Replicate or Hugging Face. With Outfit AI, users can have creative control over the design of their apps, build complex workflows without any code, and optimize prompts for better performance. The tool aims to help users launch their models faster, save time, and enhance their AI applications with a built-in product copilot.

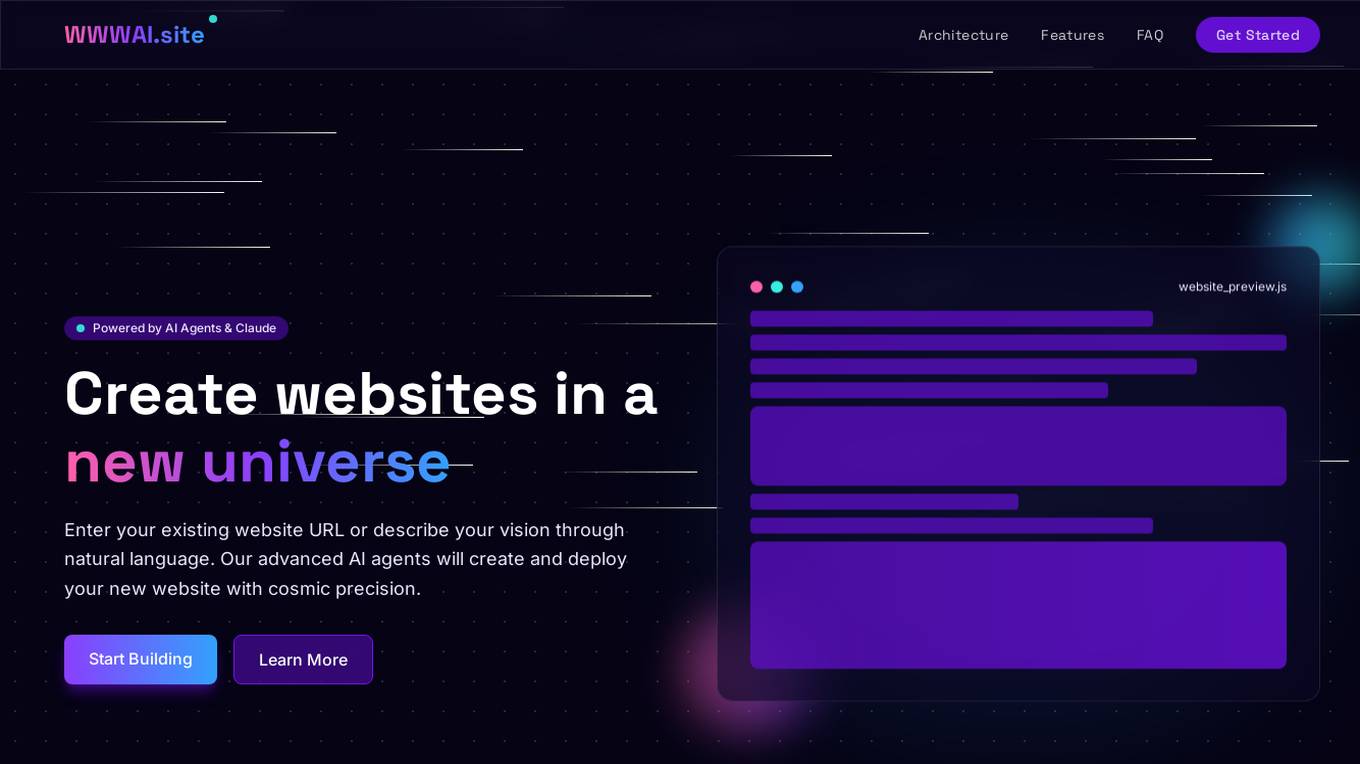

WWWAI.site

WWWAI.site is an AI-powered platform that lets users create and deploy websites using natural language input and AI agents. The platform uses specialized AI agents, such as Code Creation, Requirement Analysis, Concept Setting, and Error Validation, along with the Claude API for language processing. Model Context Protocol (MCP) ensures consistency across all components, and users can choose between GitHub and Cloudflare for deployment. The platform is currently in beta testing with limited availability.

1 - Open Source AI Tools

QMedia

QMedia is an open-source multimedia AI content search engine designed specifically for content creators. It provides rich information extraction methods for text, image, and short video content, and integrates this unstructured information to build a multimodal RAG content Q&A system. Users can efficiently search for image, text, and short video materials, while the tool analyzes content, provides content sources, and generates customized search results based on user interests and needs. QMedia supports local deployment for offline content search and Q&A over private data. The tool offers features like content card display, multimodal content RAG search, and fully local deployment of multimodal models. Users can deploy different types of models locally and manage language models, feature embedding models, image models, and video models. QMedia aims to spark new ideas for content creation and share AI content creation concepts in an open-source manner.

20 - OpenAI GPTs

Frontend Developer

AI front-end developer expert in coding React, Next.js, Vue, Svelte, TypeScript, Gatsby, Angular, HTML, CSS, JavaScript & advanced in Flexbox, Tailwind & Material Design. Mentors in coding & debugging for junior, intermediate & senior front-end developers alike. Let’s code, build & deploy a SaaS app.

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.