Best AI tools for Decompress Text

0 - AI tool Sites

No tools available

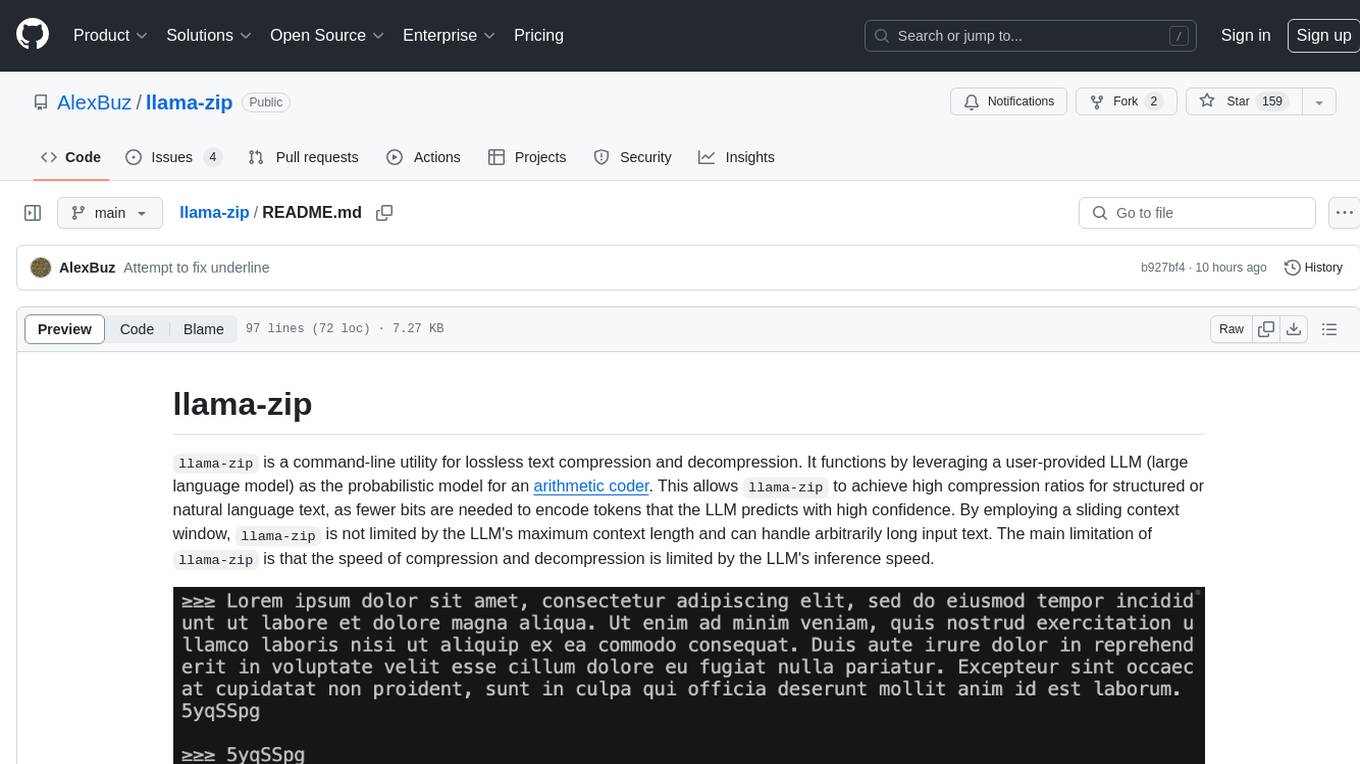

1 - Open Source AI Tools

llama-zip

llama-zip is a command-line utility for lossless text compression and decompression. It leverages a user-provided large language model (LLM) as the probabilistic model for an arithmetic coder, achieving high compression ratios for structured or natural language text. The tool is not limited by the LLM's maximum context length and can handle arbitrarily long input text. However, the speed of compression and decompression is limited by the LLM's inference speed.

github: 158
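To illustrate the idea behind the llama-zip entry above, here is a minimal sketch of arithmetic coding driven by a predictive model. It is not llama-zip's actual code: the `AdaptiveModel` class is a hypothetical stand-in that predicts the next character from simple counts, where llama-zip would query an LLM, and the names `encode` and `decode` are assumptions chosen for this example. Exact fractions are used for clarity rather than speed.

```python
from fractions import Fraction
import math

# Printable ASCII plus newline; a hypothetical alphabet for this sketch.
ALPHABET = [chr(c) for c in range(32, 127)] + ["\n"]


class AdaptiveModel:
    """Toy stand-in for an LLM: predicts the next character from counts seen so far."""

    def __init__(self):
        self.counts = {s: 1 for s in ALPHABET}  # every symbol starts with count 1
        self.total = len(ALPHABET)

    def intervals(self):
        """Yield (symbol, cum_low, cum_high), partitioning [0, 1) by probability."""
        cum = 0
        for s in ALPHABET:
            c = self.counts[s]
            yield s, Fraction(cum, self.total), Fraction(cum + c, self.total)
            cum += c

    def update(self, symbol):
        self.counts[symbol] += 1
        self.total += 1


def encode(text):
    """Return (k, bits, length): the code point k / 2**bits identifies the text."""
    model = AdaptiveModel()
    low, width = Fraction(0), Fraction(1)
    for ch in text:
        for s, lo, hi in model.intervals():
            if s == ch:
                # Narrow the current interval to the slice assigned to this symbol.
                low, width = low + width * lo, width * (hi - lo)
                break
        else:
            raise ValueError(f"character {ch!r} is outside the alphabet")
        model.update(ch)
    # Find the shortest binary fraction k / 2**bits inside [low, low + width).
    bits = 0
    while True:
        k = math.ceil(low * 2**bits)
        if Fraction(k, 2**bits) < low + width:
            return k, bits, len(text)
        bits += 1


def decode(k, bits, length):
    """Replay the same model and recover the text from the code point."""
    model = AdaptiveModel()
    value = Fraction(k, 2**bits)
    low, width = Fraction(0), Fraction(1)
    out = []
    for _ in range(length):
        target = (value - low) / width  # position of the code point in [0, 1)
        for s, lo, hi in model.intervals():
            if lo <= target < hi:
                out.append(s)
                low, width = low + width * lo, width * (hi - lo)
                break
        model.update(out[-1])
    return "".join(out)
```

Calling `decode(*encode("hello hello hello"))` returns the original string. The better the model predicts each next character, the wider the interval stays and the fewer bits the final code point needs, which is why a strong predictor such as an LLM can yield high compression ratios, and why both directions run at the model's inference speed.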

2 - OpenAI GPTs

ConceptGPT

This GPT decomposes your message and suggests five powerful concepts to improve your thinking on the matter.

gpt: 100+