Best AI tools for Debug ML Models

20 - AI tool Sites

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.
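To make "collecting traces" concrete, here is a minimal sketch in plain Python of recording one span per function call. It is not Langtrace's SDK or API: the `SPANS` list, the `traced` decorator, and `fake_llm_call` are hypothetical names chosen for illustration, and a real observability tool would export spans to a backend rather than keep them in memory.

```python
import functools
import time

# Hypothetical in-memory span store (assumption: a real tool exports spans elsewhere).
SPANS = []


def traced(name):
    """Record the duration, status, and error (if any) of each call as a span."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            span = {"name": name, "status": "ok", "error": None}
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                span["status"] = "error"
                span["error"] = repr(exc)
                raise
            finally:
                # Runs on both success and failure, so every call leaves a span.
                span["duration_s"] = time.perf_counter() - start
                SPANS.append(span)
        return wrapper
    return decorator


@traced("fake_llm_call")
def fake_llm_call(prompt):
    # Stand-in for a model call; no network access is performed.
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()
```

Calling `fake_llm_call("hi")` appends one span with status `ok`; a call that raises still leaves a span, marked `error`, which is the property that makes traces useful for debugging.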

Metaflow

Metaflow is an open-source framework for building and managing real-life ML, AI, and data science projects. It makes it easy to use any Python libraries for models and business logic, deploy workflows to production with a single command, track and store variables inside the flow automatically for easy experiment tracking and debugging, and create robust workflows in plain Python. Metaflow is used by hundreds of companies, including Netflix, 23andMe, and Realtor.com.

Aim

Aim is an open-source, self-hosted AI metadata tracking tool designed to handle hundreds of thousands of tracked metadata sequences. The two best-known applications of AI metadata are experiment tracking and prompt engineering. Aim provides a performant UI for exploring and comparing training runs and prompt sessions.

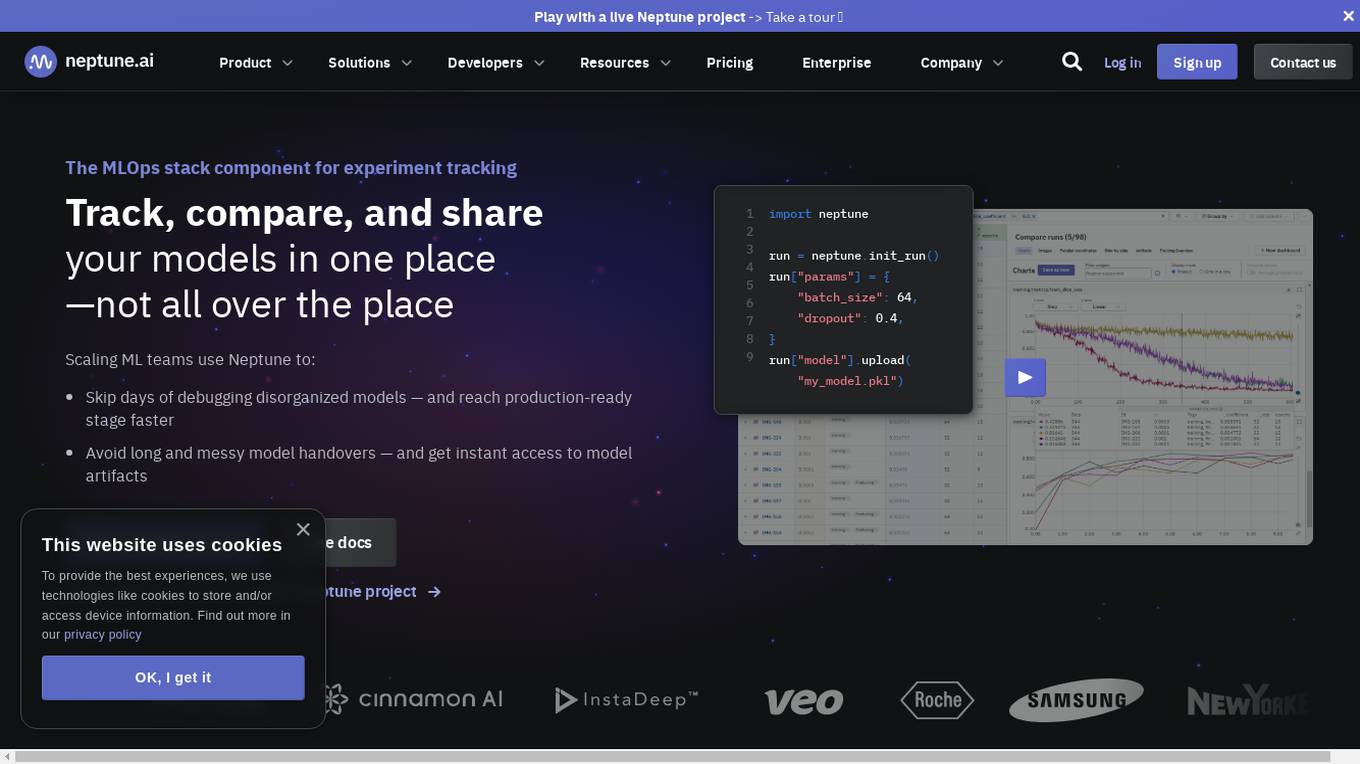

Neptune

Neptune is an MLOps stack component for experiment tracking. It allows users to track, compare, and share their models in one place. Scaling ML teams use Neptune to skip days of debugging disorganized models and to avoid long, messy model handovers. Logging can be started for free.
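As a rough illustration of what "track and compare" means in experiment tracking, the following plain-Python sketch logs metrics per run and ranks runs by their best value. It does not use Neptune's client or any real tracking library; the `Run` class and `compare` function are hypothetical names, and everything is kept in memory.

```python
class Run:
    """A hypothetical in-memory record of one training run."""

    def __init__(self, name, params):
        self.name = name
        self.params = dict(params)
        self.metrics = {}  # metric name -> list of logged values

    def log(self, metric, value):
        # Append the value to the metric's series, creating the series if needed.
        self.metrics.setdefault(metric, []).append(float(value))


def compare(runs, metric, mode="min"):
    """Return run names ranked by their best logged value of `metric`."""
    pick = min if mode == "min" else max
    scored = [
        (pick(run.metrics[metric]), run.name)
        for run in runs
        if run.metrics.get(metric)  # skip runs that never logged this metric
    ]
    return [name for _, name in sorted(scored, reverse=(mode == "max"))]


run_a = Run("lr_0.1", {"lr": 0.1})
for loss in (0.9, 0.7):
    run_a.log("val_loss", loss)

run_b = Run("lr_0.01", {"lr": 0.01})
for loss in (0.8, 0.5):
    run_b.log("val_loss", loss)

ranking = compare([run_a, run_b], "val_loss")
```

Here `ranking` places `lr_0.01` first because its lowest validation loss (0.5) beats the other run's (0.7). Keeping parameters next to metrics is what lets a tracker answer "which configuration produced this result" later.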

Client-Side Exception Resolver

The website is a platform that seems to be encountering client-side exceptions, resulting in application errors. Users are advised to check the browser console for more detailed information on the errors. The platform may be undergoing technical issues that need to be resolved to ensure smooth functionality.

Debug Sage

Debug Sage is a website designed to help users understand and troubleshoot errors in their software applications. The platform provides detailed insights into various types of errors, allowing users to identify and resolve issues efficiently. With a user-friendly interface, Debug Sage aims to streamline the debugging process for developers and software engineers. The website also offers resources and tools to enhance the overall debugging experience. By leveraging advanced technologies, Debug Sage empowers users to tackle complex errors with ease.

Whybug

Whybug is an AI tool designed to help developers debug their code by providing explanations for errors. By utilizing a large language model trained on data from StackExchange and other sources, Whybug can predict the causes of errors and suggest fixes. Users can simply paste an error message and receive detailed explanations on how to resolve the issue. The tool aims to streamline the debugging process and improve code quality.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

Rerun

Rerun is an SDK, time-series database, and visualizer for temporal and multimodal data. It is used in fields like robotics, spatial computing, 2D/3D simulation, and finance to verify, debug, and explain data. Rerun allows users to log data like tensors, point clouds, and text to create streams, visualize and interact with live and recorded streams, build layouts, customize visualizations, and extend data and UI functionalities. The application provides a composable data model, dynamic schemas, and custom views for enhanced data visualization and analysis.

Snaplet

Snaplet is a data management tool for developers that provides AI-generated dummy data for local development, end-to-end testing, and debugging. It uses a real programming language (TypeScript) to define and edit data, ensuring type safety and auto-completion. Snaplet understands database structures and relationships, automatically transforming personally identifiable information and seeding data accordingly. It integrates seamlessly into development workflows, providing data where it's needed most: on local machines, for CI/CD testing, and preview environments.

Langfuse

Langfuse is an AI tool that offers the Langfuse TypeScript SDK v4 for building and debugging LLM (large language model) applications. It provides tracing, prompt management, evaluation, and metrics to improve the performance of LLM applications. Langfuse is backed by a team of experts and integrates with various platforms and SDKs. The tool aims to simplify the development of complex LLM applications and improve overall efficiency.

SourceAI

SourceAI is an AI-powered code generator that allows users to generate code in any programming language. It is easy to use, even for non-developers, and has a clear and intuitive interface. SourceAI is powered by GPT-3 and Codex. It can be used to generate code for a variety of tasks, including calculating the factorial of a number, finding the roots of a polynomial, and translating text from one language to another.

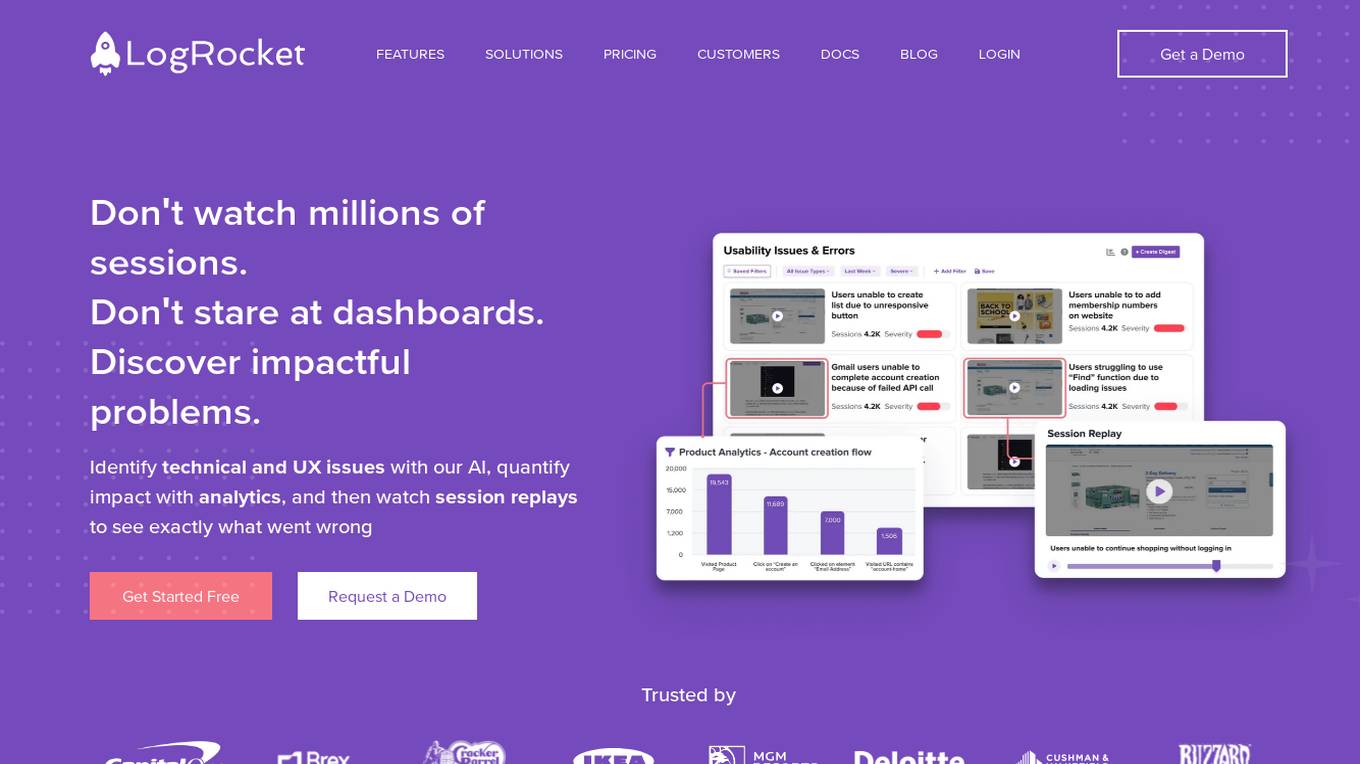

LogRocket

LogRocket is a session replay, product analytics, and issue detection platform that helps software teams deliver the best web and mobile experiences. With LogRocket, you can see exactly what users experienced on your app, as well as DOM playback, console and network logs, errors, and performance data. You can also surface the most impactful user issues with JavaScript errors, network errors, stack traces, automatic triaging, and alerting. LogRocket also provides product analytics to help you understand how users are interacting with your app, and UX analytics to help you visualize how users experience your app at both the individual and aggregate level.

CodeMate

CodeMate is an AI pair programmer tool designed to help developers write error-free code faster. It offers features like code navigation, understanding complex codebases, intuitive interface for smarter coding, instant debugging, code refactoring, and AI-powered code reviews. CodeMate supports all programming languages and provides suggestions for code optimizations. The tool ensures the security and privacy of user code and offers different pricing plans for individual developers, teams, and enterprises. Users can interact with their codebase, documentation, and Git repositories using CodeMate Chat. The tool aims to improve code quality and productivity by acting as a co-developer while programming.

RagaAI Catalyst

RagaAI Catalyst is a sophisticated AI observability, monitoring, and evaluation platform designed to help users observe, evaluate, and debug AI agents at all stages of Agentic AI workflows. It offers features like visualizing trace data, instrumenting and monitoring tools and agents, enhancing AI performance, agentic testing, comprehensive trace logging, evaluation for each step of the agent, enterprise-grade experiment management, secure and reliable LLM outputs, finetuning with human feedback integration, defining custom evaluation logic, generating synthetic data, and optimizing LLM testing with speed and precision. The platform is trusted by AI leaders globally and provides a comprehensive suite of tools for AI developers and enterprises.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Zazzani AI Buddy

Zazzani AI Buddy is an AI-powered platform that empowers users to create, debug code, write articles, and communicate with AI in multiple languages. It enhances productivity by generating ideas, providing context-specific answers, and eliminating monotony through automated tasks. Users can sign up to receive updates and contribute to the platform's growth. Zazzani AI Buddy aims to streamline workflows and inspire creativity through its innovative AI capabilities.

AtozAi

AtozAi is an AI application designed to empower developers by providing AI-powered tools that enhance coding efficiency and productivity. The platform offers features such as AI-driven code debugging, efficient code conversion, smart regex generation, comprehensive code explanations, and instant text explanations. AtozAi aims to cover a wide range of coding tasks with specialized AI algorithms, continually expanding its toolkit to make tasks easier, more efficient, and creative for developers.

Kropply

Kropply is an AI-powered debugging tool that helps developers fix logic, package, and unit-level bugs in their codebase once they run the code. It integrates with VSCode to provide real-time insights and error correction, streamlining the debugging process and making coding more efficient.

Microsoft Copilot

Microsoft Copilot is an AI-powered coding assistant that helps developers write better code, faster. It provides real-time suggestions and code completions, and can even generate entire functions and classes. Copilot is available as a Visual Studio Code extension and as a standalone application.

1 - Open Source AI Tools

evidently

Evidently is an open-source Python library for evaluating, testing, and monitoring machine learning (ML) and large language model (LLM) powered systems. It works with tabular data, text data, and embeddings, and supports both predictive and generative systems. It provides over 100 built-in metrics for data drift detection and LLM evaluation, allows custom metrics and tests, and supports both offline evaluations and live monitoring. Its open architecture makes it easy to export data and integrate with existing tools. Users can run one-off evaluations with Reports or Test Suites in Python, or opt for real-time monitoring through the Dashboard service.
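For readers unfamiliar with data drift detection, the sketch below computes a Population Stability Index (PSI) between a reference sample and a current sample using only the standard library. This is a generic technique shown for illustration, not Evidently's API or its default method; the function name `psi`, the bin count, and the `eps` floor are assumptions, and both samples are assumed to be non-empty lists of numbers.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two non-empty numeric samples."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the reference range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        total = len(values)
        # Floor each proportion at eps so the logarithm below is always defined.
        return [max(c / total, eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


reference = list(range(100))
shifted = [x + 50 for x in reference]

no_drift = psi(reference, reference)
drift = psi(reference, shifted)
```

Identical samples give a PSI of zero, while the shifted sample gives a clearly positive value. A common rule of thumb treats values above roughly 0.25 as a significant shift, though the right threshold depends on the feature and the application.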

20 - OpenAI GPTs

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements ready to help you build and scale your ML projects.

TypeScript Engineer

An expert TypeScript engineer to help you solve and debug problems together.

Deluge Developer by TechBloom

Zoho Deluge expert developer trained to write and debug Deluge functions for Zoho CRM.

The Dock - Your Docker Assistant

Technical assistant specializing in Docker and Docker Compose. Let's debug!

Gif-PT

GIF generator. Uses DALL·E 3 to make a spritesheet, then the code interpreter to slice it and animate it. Includes an automatic refinement and debug mode. v1.2 GPTavern

María Dolores

Inspired by a TV character, lives on a farm, analytical and philosophical, with a 'DEBUG' mode.