Best AI tools for Customize Training

20 - AI Tool Sites

Grasply

Grasply.ai is an AI-powered personalized training solution that uses multi-agent AI training assistants to turn documents into learning resources. It aims to improve productivity and skill transfer by creating customized materials for training and assessment. Grasply lets users upload documents, define learning goals, customize the learning experience, build tailored micro-courses with AI, share personalized courses, and track learner progress. It offers several pricing plans with different feature sets for different user needs.

Paradiso AI

Paradiso AI is an AI application that offers a range of generative AI solutions for businesses, from AI chatbots and AI employees to document generators. It aims to help businesses boost ROI, improve customer satisfaction, optimize costs, and shorten time-to-value. The platform provides customizable AI tools designed to adapt to each organization's processes, speeding up tasks and improving accuracy. With a focus on data security, compliance, and cost efficiency, Paradiso AI aims to deliver high-quality results at lower operating costs through prompt optimization and ongoing refinement.

SC Training

SC Training, formerly known as EdApp, is a mobile learning management system that offers a comprehensive platform for creating, delivering, and tracking training courses. The application provides features such as admin control, content creation tools, analytics tracking, AI course generation, microlearning courses, gamification elements, and support for various industries. SC Training aims to deliver efficient and engaging training experiences to users, with a focus on bite-sized learning and accessibility across devices. The platform also offers course libraries, practical assessments, rapid course refresh, and group training options. Users can customize courses, integrate with existing tools, and access a range of resources through the help center and blog.

PaddleBoat

PaddleBoat is an AI-powered sales readiness platform designed to help sales representatives improve their cold calling skills through realistic AI roleplays. Every roleplay includes automated call feedback, insights on objection handling, best calling practices, and areas for improvement. PaddleBoat aims to accelerate sales performance and shorten ramp-up time for new reps by providing real-time insights and customizable roleplays. The platform also lets users create training programs, courses, wikis, and interactive videos to help reps sharpen their sales pitch and build confidence in sales conversations.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning, letting users fine-tune AI models to improve their performance and accuracy.

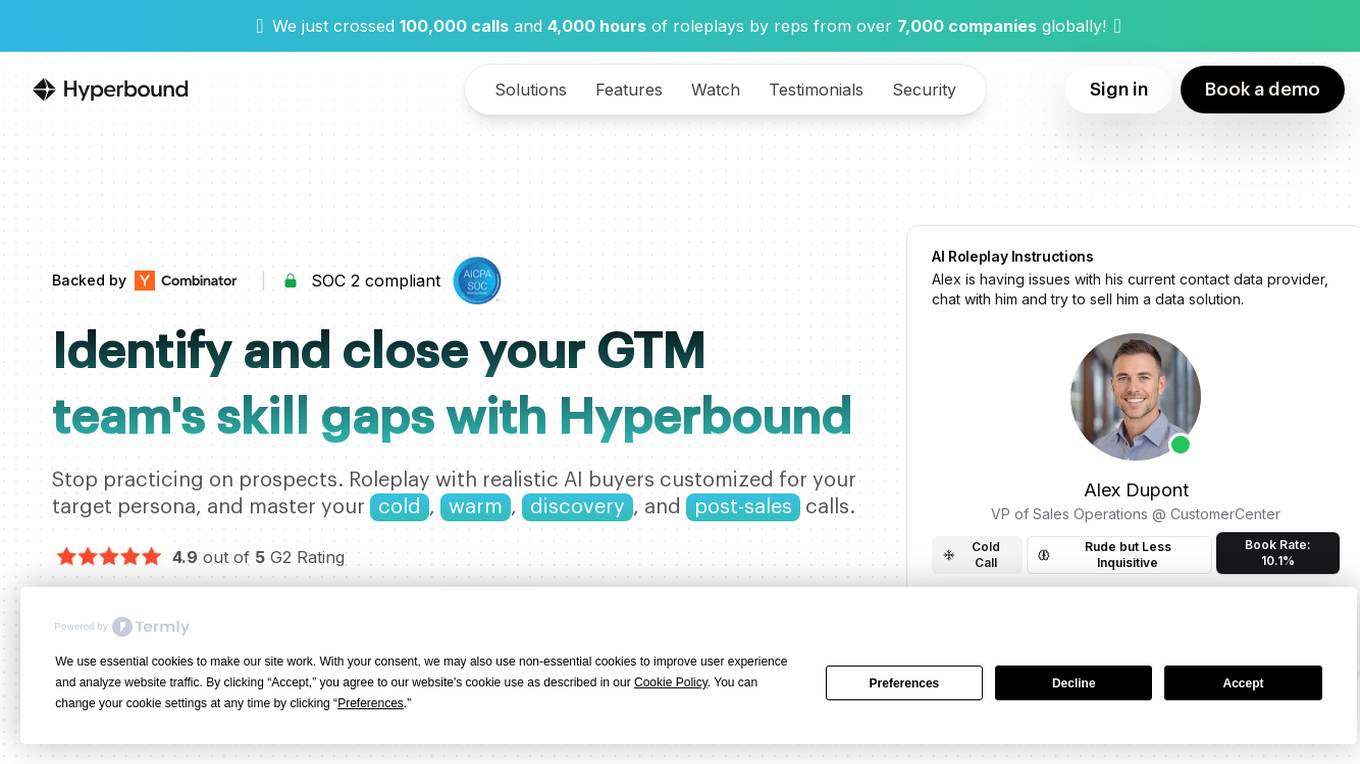

Hyperbound

Hyperbound is an AI sales role-play and upskilling platform designed to help sales teams improve their skills through realistic AI roleplays. Users can practice cold, warm, discovery, and post-sales calls with AI buyers customized to their target persona. The platform reports high ratings and positive feedback from sales professionals worldwide, offers interactive demos, and does not require a credit card to book a demo.

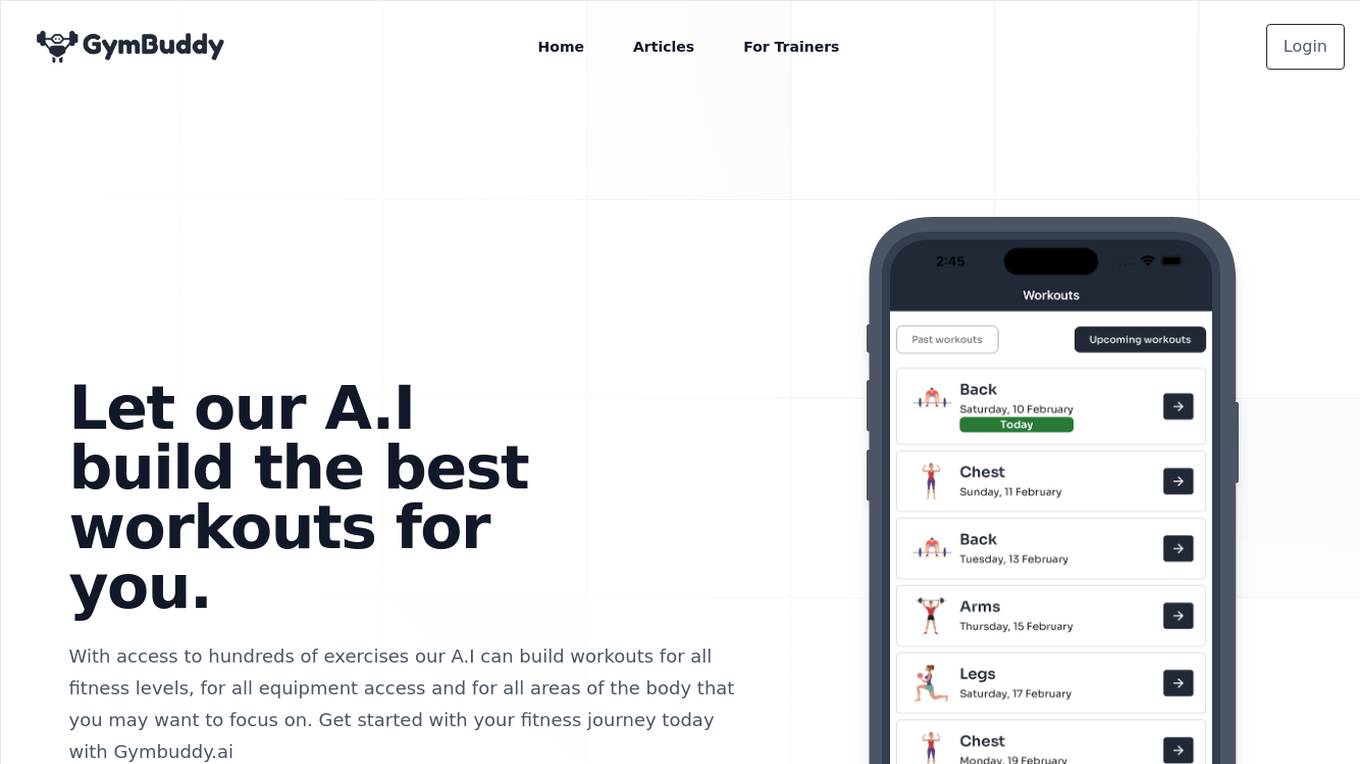

GymBuddy.ai

GymBuddy.ai is an AI workout planner that creates personalized workout plans based on each user's fitness level, available equipment, and target areas of the body. The platform offers a wide range of exercises, advanced analytics, and full workout customization to help users reach their fitness goals.

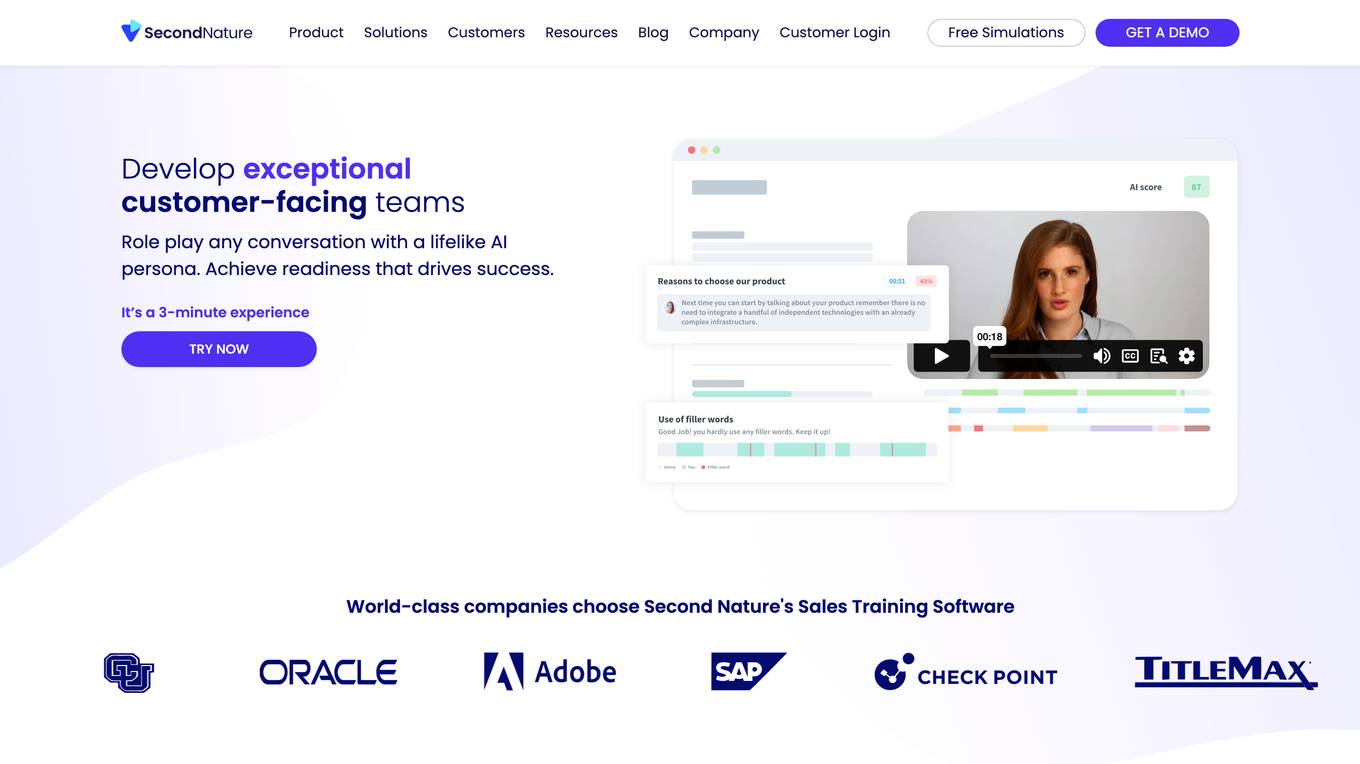

Second Nature

Second Nature is an AI-powered sales training software that offers life-like role-playing simulations to enhance sales skills and productivity. It provides personalized AI role plays, customized simulations, and virtual pitch partners to help sales teams practice conversations, improve performance, and drive results. The platform features AI avatars, template libraries, and various training modules for different industries and use cases.

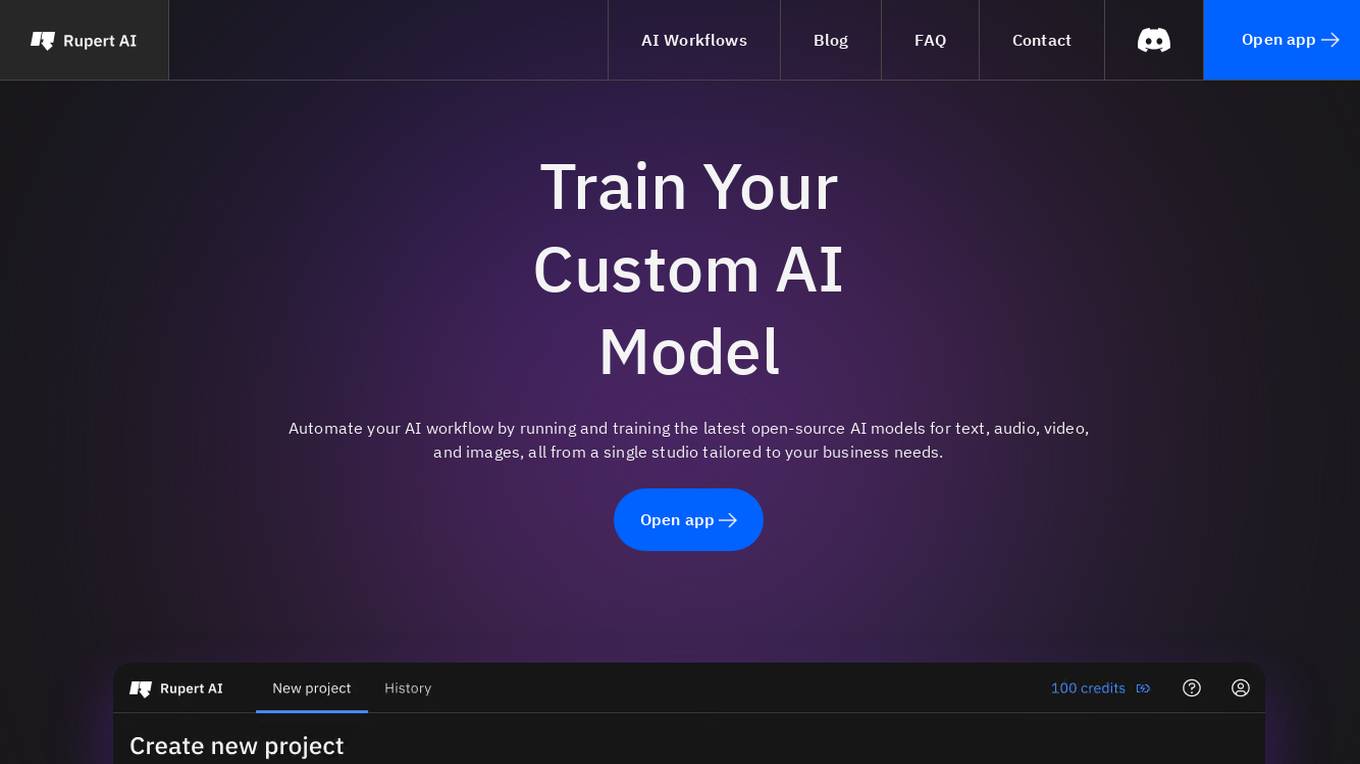

Rupert AI

Rupert AI is an all-in-one AI platform that lets users train custom AI models for text, audio, video, and images. The platform brings the latest open-source AI models and tools together in a single studio tailored to business needs. Users can automate their AI workflows, generate high-quality AI product photography, and use popular workflows such as the AI Fashion Model Generator and the Facebook Ad Testing Tool. Rupert AI aims to help businesses improve marketing visuals, streamline operations, and make informed decisions with AI.

Wix.com

Wix.com is a website building platform that lets users create websites from a variety of customizable templates. Users can connect their own domain and publish quickly, and the platform's user-friendly interface and range of features make it possible to build a professional-looking website without any coding knowledge.

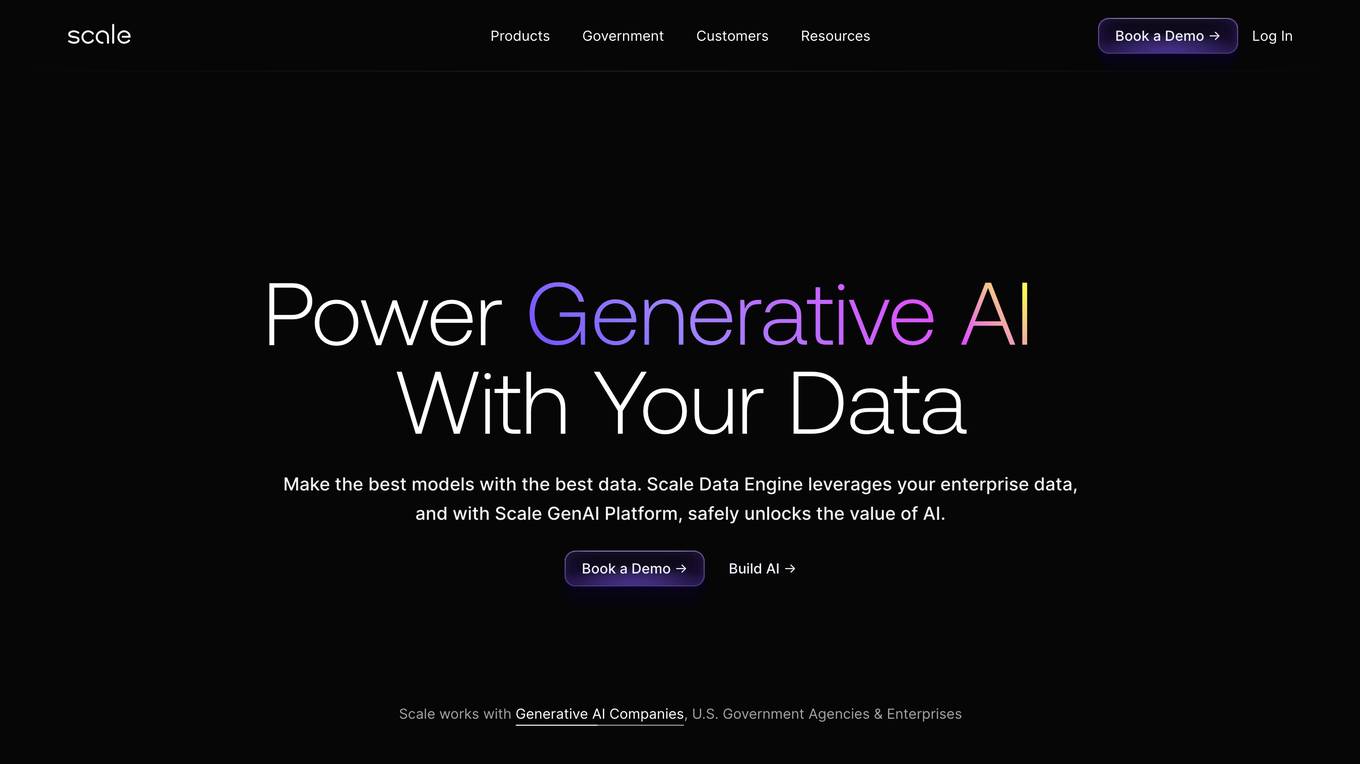

Scale AI

Scale AI is an AI platform that accelerates the development of AI applications for the enterprise, government, and automotive sectors. Its offerings include the Scale Data Engine for generative AI, the Scale GenAI Platform, and evaluation services for model developers. The platform uses enterprise data to build sustainable AI programs and works with leading AI model providers. Scale's focus on generative AI applications, data labeling, and model evaluation sets it apart in the AI industry.

Pooks.ai

Pooks.ai is an AI-powered platform that offers personalized books in both ebook and audiobook formats. It uses algorithms and natural language processing to create content tailored to each reader's preferences and needs. Users can request a book on any non-fiction topic, written just for them. The platform aims to be fast and easy to use, changing how users engage with literature and absorb information. Pooks.ai is free to use and offers a wide range of personalized book options.

Pooks.ai

Pooks.ai is an AI-powered platform that offers personalized books in both ebook and audiobook formats. Using algorithms and natural language processing, Pooks.ai creates content tailored to each reader's preferences and needs. Users can read books written specifically for them on a wide range of topics, from fitness and travel to pet care and self-help. The platform aims to change how people engage with literature by providing affordable, personalized reading experiences.

Slice Knowledge

Slice Knowledge is an AI-powered content creation platform for learning, built for fast, simple creation of learning units. It targets course creators, HR and L&D teams, education experts, and enterprises looking to improve their employee training programs. Features include AI-powered creation, compliance templates, assistant bots, SCORM tracking integration, multilingual support, unlimited designer CSS, interactive video, and responsive design. Users can convert documents into interactive, responsive, SCORM-compliant learning materials.

EduHunt

EduHunt is an AI-powered search engine that helps users find quality educational content on YouTube. It allows users to search for specific topics and filters the results to show only the most relevant and high-quality videos. EduHunt also offers a variety of features to help users customize their search results, such as the ability to filter by language, duration, and difficulty level.

Degreed

Degreed is an AI-driven learning platform that offers skill-building solutions for employees, from onboarding to retention. It partners with leading vendors to provide skills-first learning experiences. The platform leverages AI to deliver efficient and effective learning experiences, personalized skill development, and data-driven insights. Degreed helps organizations identify critical skill gaps, provide personalized learning paths, and measure the impact of upskilling and reskilling initiatives. With a focus on workforce transformation, Degreed empowers companies to drive business results through continuous learning and skill development.

Mendable

Mendable is an AI-powered search tool that helps businesses answer customer and employee questions by training a secure AI on their technical resources. Features such as answer correction, custom prompt edits, and model creativity control let businesses tailor the AI to their needs. Mendable also provides enterprise-grade security features, including role-based access control (RBAC), single sign-on (SSO), and bring your own key (BYOK), to protect sensitive data.

CourseMagic.ai

CourseMagic.ai is an AI-powered platform designed to assist educators in effortlessly generating high-quality courses by leveraging best practices in learning design. The platform allows users to customize courses for any level, import directly into Learning Management Systems (LMS), and offers a range of frameworks and taxonomies to enhance course structure and content. CourseMagic.ai aims to streamline the course creation process, reduce development time, and provide interactive activities and assessments to engage learners effectively.

KreadoAI

KreadoAI is an AI video generator that lets users create professional-quality videos in minutes. It offers over 700 AI avatars and 1,600 AI voices in 140 languages, along with a simple editor and a fast creation process. KreadoAI reports over 2 million customers in 200+ countries and aims to cut video production cost and time while increasing engagement. The platform targets the marketing, education, training, and healthcare industries, with easy customization and sharing options.

Osher.ai

Osher.ai is a personal AI for businesses that allows users to interact with websites, intranets, knowledge bases, process documents, spreadsheets, and procedures. It can be used to train custom AIs on internal knowledge bases, process documents, and files. Osher.ai also offers private and public AIs, and users can customize their AIs' personality, purpose, and welcome message.

2 - Open Source AI Tools

Groma

Groma is a grounded multimodal assistant that excels at region understanding and visual grounding. It accepts user-defined regions as input and generates contextually grounded long-form responses. Groma introduces a paradigm for multimodal large language models that relies on visual tokenization for localization, and it reports state-of-the-art performance on referring expression comprehension benchmarks. The project provides pretrained model weights and instructions for data preparation, training, inference, and evaluation, and users can customize training by starting from intermediate checkpoints. Training covers detection pretraining, alignment pretraining, and instruction finetuning, and the resulting model supports instruction following and related tasks.

fastfit

FastFit is a Python package for fast and accurate few-shot classification, especially in settings with many semantically similar classes. It combines batch contrastive learning with a token-level similarity score, which significantly improves multi-class classification speed and accuracy across various datasets. FastFit provides a command-line tool for training text classification models with customizable parameters and reports a 3-20x improvement in training speed, with training completing in just a few seconds. Users can also train models from Python scripts and run inference with pretrained models for text classification tasks.

20 - OpenAI GPTs

Corporate Trainer

Develops training programs, customizing content to fit corporate culture and objectives.

VBPS TigerBot

A customized chatbot trained on the VBPS Employee Handbook and the current teachers' union contract.

The Learning Architect

An all-in-one, consultative L&D expert AI helping you build impactful, customized learning solutions for your organization.

Tattoo Ideas GPT

Helps design and customize tattoos, recommends artists, and provides aftercare advice.

Quick QR Art - QR Code AI Art Generator

Create, customize, and track stunning QR code art with our free QR Code AI Art Generator. Seamlessly integrate these artistic codes into your marketing materials, packaging, and digital platforms.

Instant Command GPT

Executes tasks instantly via short commands, using a single session to customize commands.

GAPP STORE

Welcome to GAPP Store, your all-in-one AI app universe: chat, create, and customize.

Sneaker Genius

Expert in sneaker customization, buying, and collecting; offers detailed advice on painting techniques and design inspiration.

Preference Card Estimator

Generates detailed orthopedic surgery preference cards based on uploaded formats.

Vikas' Scripting Helper

Guides users in creating and customizing Airtable scripts, with user-friendly explanations.