Best AI tools for Customize LLM Backend

20 - AI tool Sites

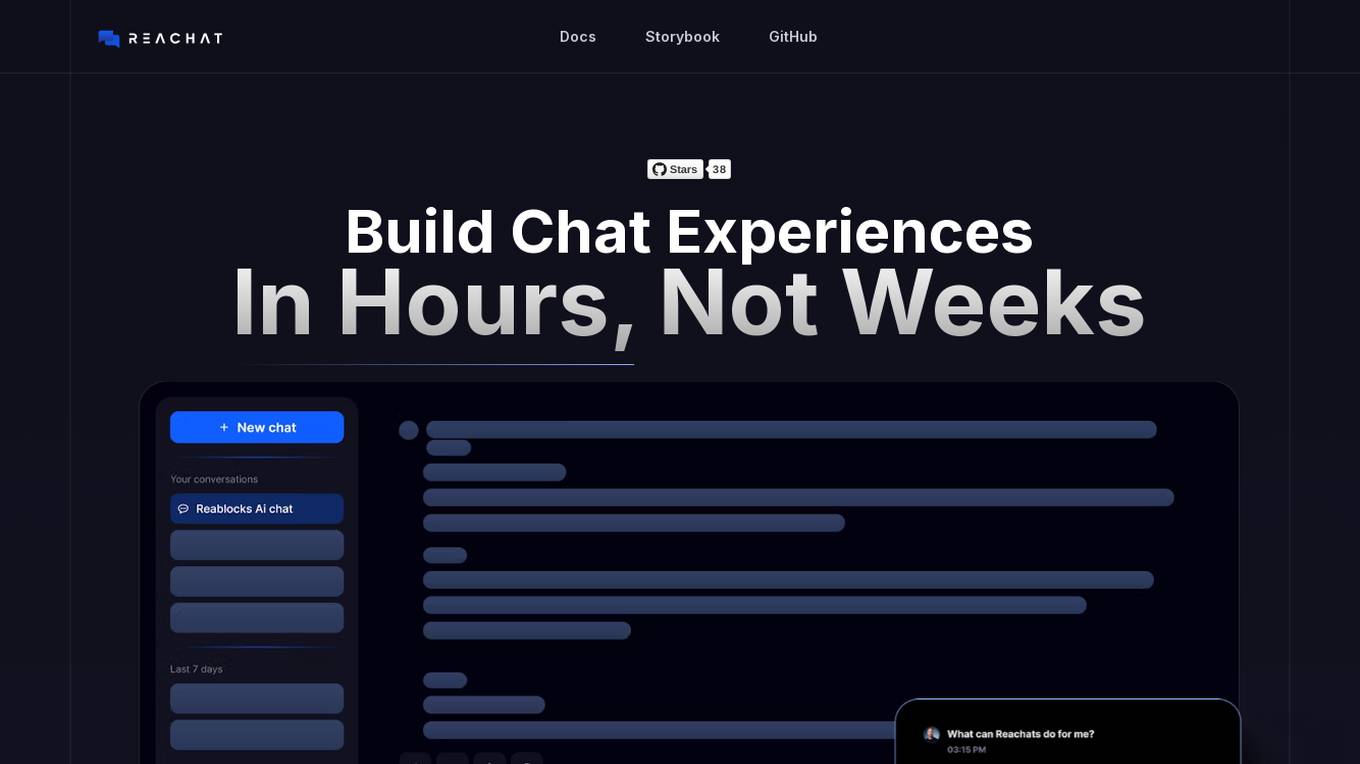

Reachat

Reachat is an open-source UI building library for creating chat interfaces in ReactJS. It offers highly customizable components and theming options, rich media support for file uploads and markdown formatting, an intuitive API for building custom chat experiences, and the ability to seamlessly switch between different AI models. Reachat is battle-tested and used in production across various enterprise products. It is a powerful, flexible, and user-friendly AI chat interface library that allows developers to easily integrate conversational AI capabilities into their applications without the need to spend weeks building custom components. Reachat is not tied to any specific backend or LLM, providing the freedom to use it with any backend or LLM of choice.

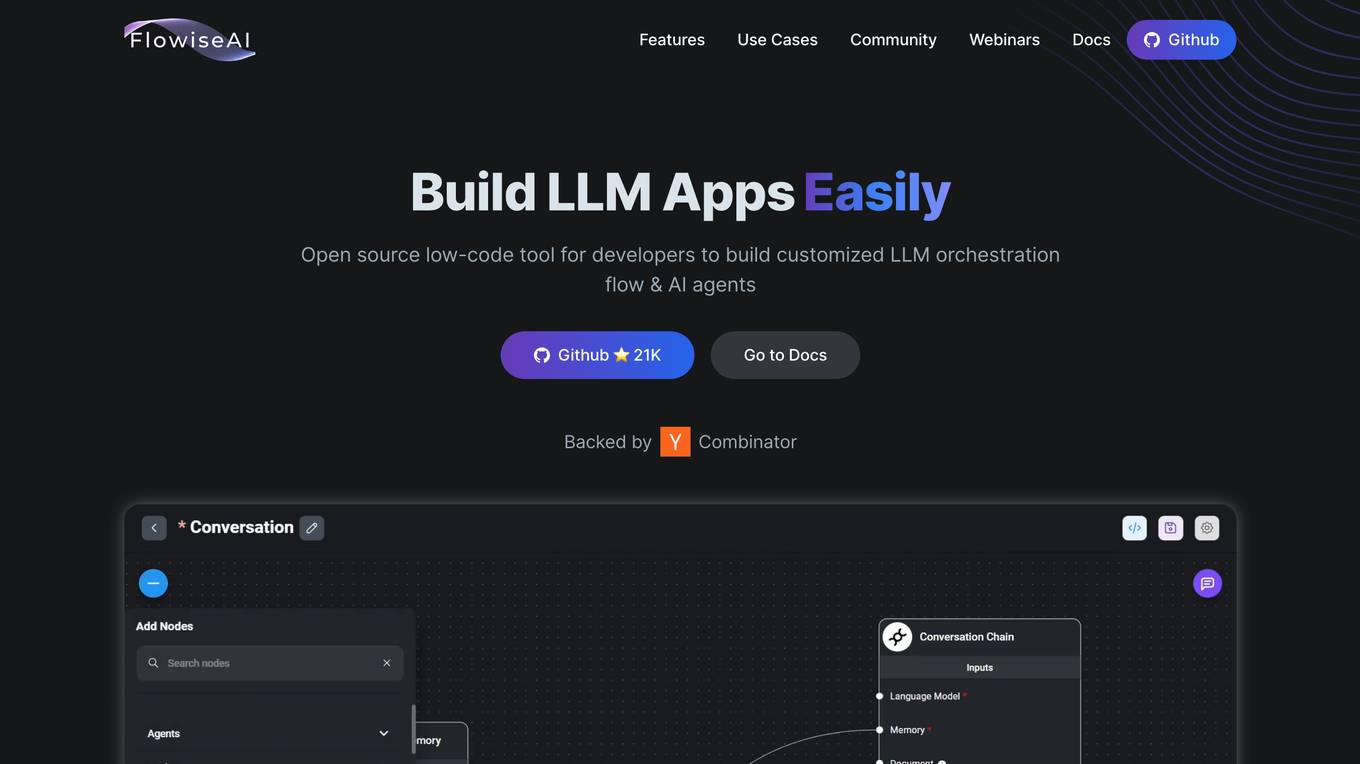

Flowise

Flowise is an open-source, low-code tool that enables developers to build customized LLM orchestration flows and AI agents. It provides a drag-and-drop interface, pre-built app templates, conversational agents with memory, and seamless deployment on cloud platforms. Flowise is backed by Y Combinator and trusted by teams around the globe.

LLM Quality Beefer-Upper

LLM Quality Beefer-Upper is an AI tool designed to enhance the quality and productivity of LLM responses by automating critique, reflection, and improvement. Users can generate multi-agent prompt drafts, choose from different quality levels, and upload knowledge text for processing. The application aims to maximize output quality by using the best available LLMs on the market.
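
The critique, reflection, and improvement cycle described above can be sketched generically. This is a minimal illustration, not the tool's actual implementation: the `refine` function, the prompt wording, and the `LLM` callable type are all assumptions made for the example, and any real model client could be passed in its place.

```python
from typing import Callable

# Hypothetical stand-in for any LLM call: takes a prompt, returns text.
LLM = Callable[[str], str]


def refine(llm: LLM, task: str, rounds: int = 2) -> str:
    """Draft an answer, then alternate critique and revision `rounds` times."""
    draft = llm(f"Answer the task:\n{task}")
    for _ in range(rounds):
        # Ask the model to criticize its own draft.
        critique = llm(f"Critique this answer to '{task}':\n{draft}")
        # Ask the model to rewrite the draft using that critique.
        draft = llm(
            f"Task: {task}\nAnswer: {draft}\nCritique: {critique}\n"
            "Rewrite the answer to address the critique."
        )
    return draft


def fake_llm(prompt: str) -> str:
    """Toy model so the sketch runs without network access."""
    return f"response to a {len(prompt)}-character prompt"


if __name__ == "__main__":
    print(refine(fake_llm, "Explain what an LLM backend is.", rounds=2))
```

Each round costs two model calls (one critique, one rewrite), so a higher quality level in this sketch would simply mean a larger `rounds` value.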

OAK

OAK is an open-source platform for building and deploying custom AI agents quickly and easily. It offers a modular design, powerful plugins, and seamless integration with various AI models. OAK is scalable, flexible, and developer-friendly, allowing users to create AI agents in minutes without hassle.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

Picovoice

Picovoice is an on-device Voice AI and local LLM platform designed for enterprises. It offers a range of voice AI and LLM solutions, including speech-to-text, noise suppression, speaker recognition, speech-to-index, wake word detection, and more. Picovoice empowers developers to build virtual assistants and AI-powered products with compliance, reliability, and scalability in mind. The platform allows enterprises to process data locally without relying on third-party remote servers, ensuring data privacy and security. With a focus on cutting-edge AI technology, Picovoice enables users to stay ahead of the curve and adapt quickly to changing customer needs.

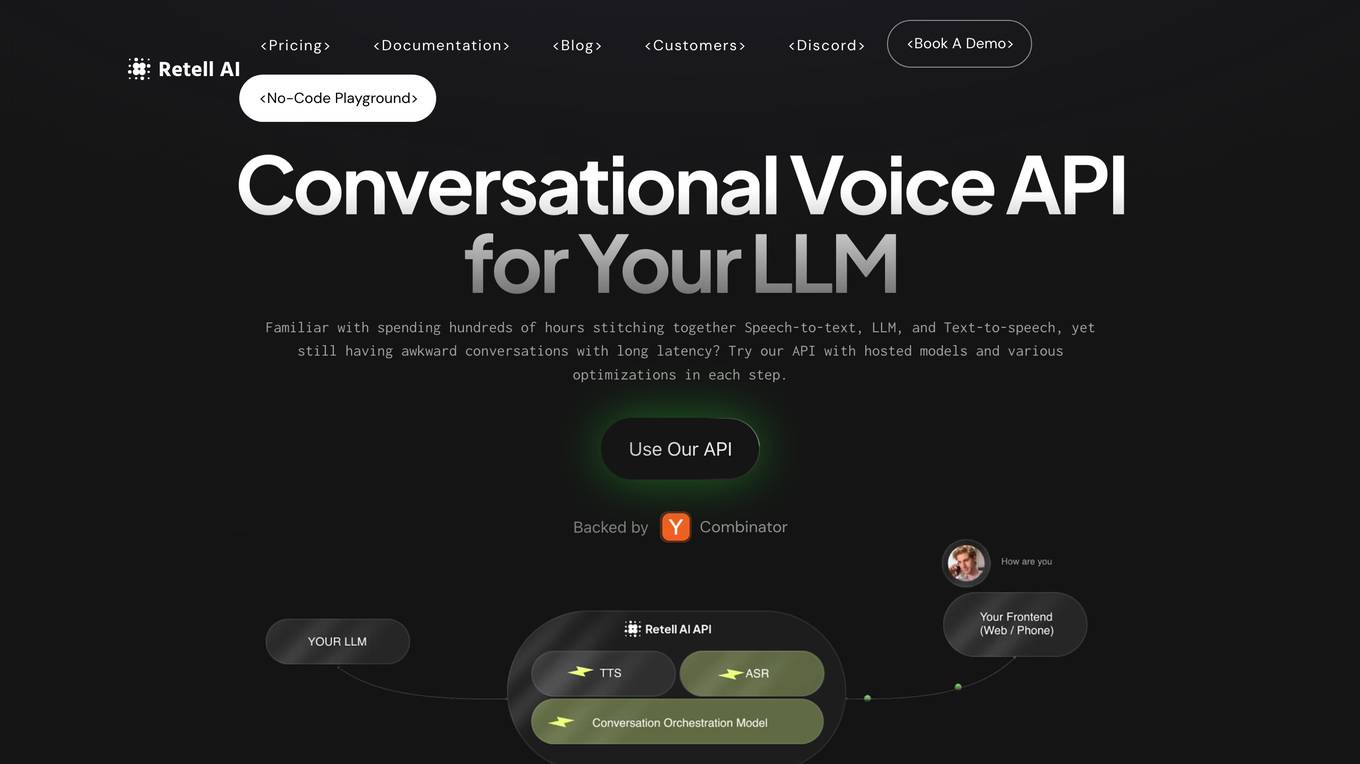

Retell AI

Retell AI provides a Conversational Voice API that enables developers to integrate human-like voice interactions into their applications. With Retell AI's API, developers can easily connect their own Large Language Models (LLMs) to create AI-powered voice agents that can engage in natural and engaging conversations. Retell AI's API offers a range of features, including ultra-low latency, realistic voices with emotions, interruption handling, and end-of-turn detection, ensuring seamless and lifelike conversations. Developers can also customize various aspects of the conversation experience, such as voice stability, backchanneling, and custom voice cloning, to tailor the AI agent to their specific needs. Retell AI's API is designed to be easy to integrate with existing LLMs and frontend applications, making it accessible to developers of all levels.

AppSec Assistant

AppSec Assistant is an AI-powered application designed to provide automated security recommendations in Jira Cloud. It focuses on ensuring data security by enabling secure-by-design software development. The tool simplifies setup by allowing users to add their OpenAI API key and organization, encrypts and stores data using Atlassian's Storage API, and provides tailored security recommendations for each ticket to reduce manual AppSec reviews. AppSec Assistant empowers developers by keeping up with their pace and helps in easing the security review bottleneck.

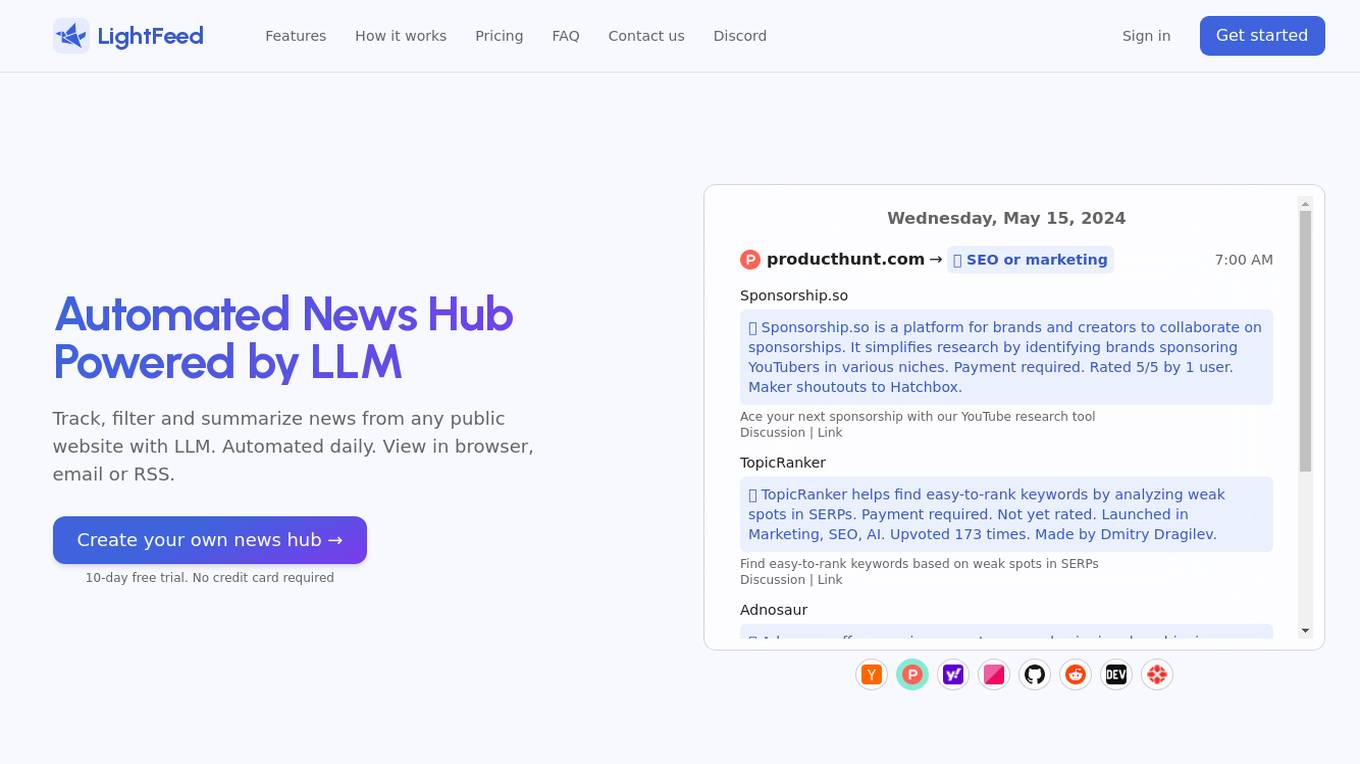

LightFeed

LightFeed is an automated news hub powered by LLM technology that allows users to track, filter, and summarize news from any public website. It offers automated daily updates that can be viewed in a browser, email, or RSS format. Users can create their own news hub with a 10-day free trial and no credit card required. LightFeed employs LLMs like GPT-3.5-turbo and Llama 3 to parse, filter, and summarize web pages into structured and readable feeds. The platform also supports customization of news feeds based on user preferences and provides options for automation and scheduling.

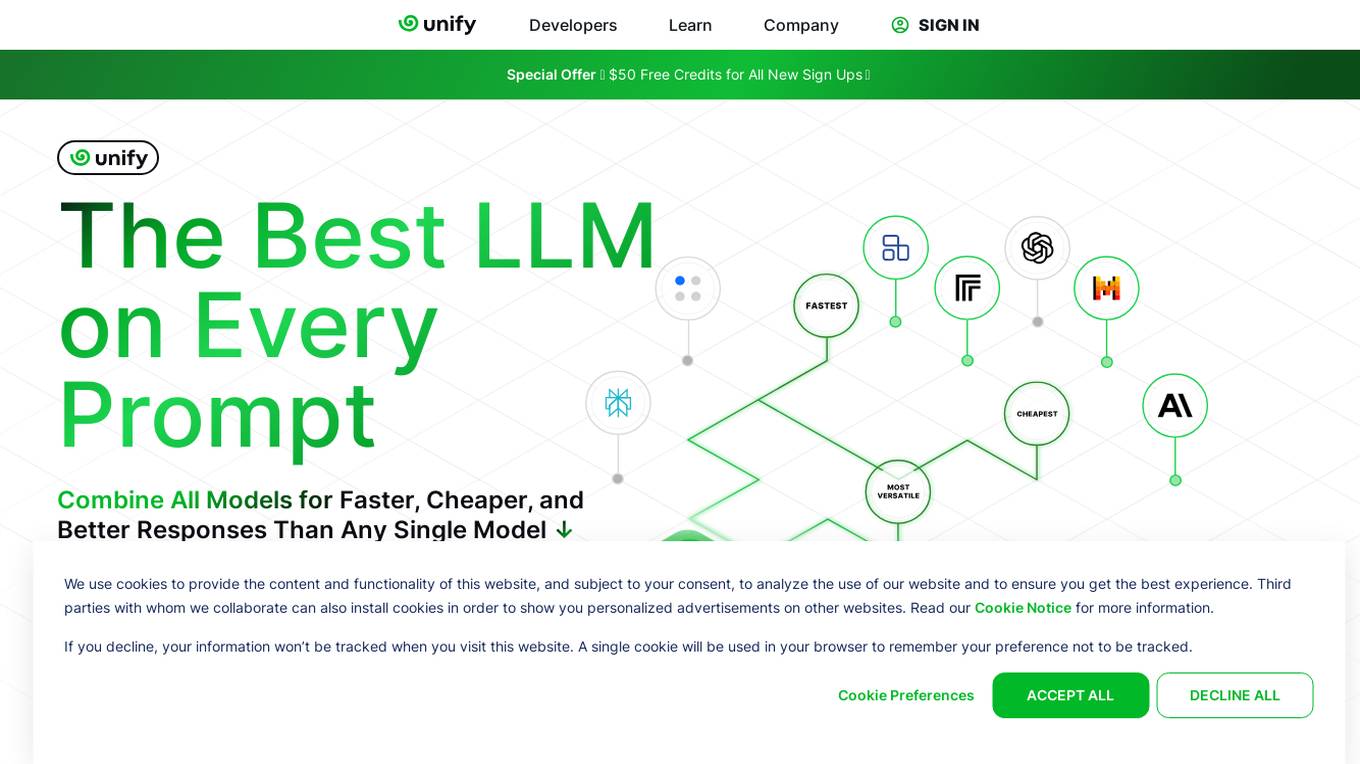

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing various Large Language Models (LLMs) from different providers. It allows users to combine models for faster, cheaper, and better responses, optimizing for quality, speed, and cost-efficiency. Unify simplifies the complex task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.
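
To make the idea of routing on quality, speed, and cost concrete, here is a minimal sketch of a weighted model selector. It does not use or reproduce Unify's API; the `ModelStats` fields, the `pick_model` function, the model names, and all the numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ModelStats:
    name: str
    quality: float    # benchmark score in [0, 1], higher is better
    latency_s: float  # seconds per response, lower is better
    cost_usd: float   # dollars per 1K tokens, lower is better


def pick_model(
    models: Iterable[ModelStats],
    w_quality: float = 1.0,
    w_latency: float = 0.0,
    w_cost: float = 0.0,
) -> ModelStats:
    """Return the model with the highest weighted score."""
    def score(m: ModelStats) -> float:
        # Reward quality, penalize latency and cost by the caller's weights.
        return w_quality * m.quality - w_latency * m.latency_s - w_cost * m.cost_usd

    return max(models, key=score)


# Made-up candidates; real figures would come from benchmarks.
CANDIDATES = [
    ModelStats("large-model", quality=0.90, latency_s=2.0, cost_usd=0.030),
    ModelStats("small-model", quality=0.75, latency_s=0.5, cost_usd=0.002),
]

if __name__ == "__main__":
    print(pick_model(CANDIDATES).name)
    print(pick_model(CANDIDATES, w_latency=0.2).name)
```

With the default weights only quality counts, so the larger model wins; giving latency a weight of 0.2 tips the choice to the smaller, faster model.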

Puppeteer

Puppeteer is an AI application that offers Gen AI Nurses to empower patient support in healthcare. It addresses staffing shortages and enhances access to quality care through personalized and human-like patient experiences. The platform revolutionizes patient intake with features like mental health companions, virtual assistants, streamlined data collection, and clinic customization. Additionally, Puppeteer provides a comprehensive solution for building conversational bots, real-time API and database integrations, and personalized user experiences. It also offers a chatbot service for direct patient interaction and support in psychological help-seeking. The platform is designed to enhance healthcare delivery through AI integration and Large Language Models (LLMs) for modern medical solutions.

Janus Pro AI

Janus Pro AI is a cutting-edge multimodal image generation and understanding platform that empowers users to create high-quality images for various projects. It offers powerful features such as multiple art styles, smart editing, lightning-fast image generation, high resolution output, commercial rights, and 24/7 generation service. The platform is built on DeepSeek's advanced architecture, providing users with a seamless experience in generating images in different styles and settings.

Genie TechBio

Genie TechBio is the world's first AI bioinformatician, offering an LLM-powered omics analysis software that operates entirely in natural language, eliminating the need for coding. Researchers can effortlessly analyze extensive datasets by engaging in a conversation with Genie, receiving recommendations for analysis pipelines, and obtaining results. The tool aims to accelerate biomedical research and empower scientists with newfound data analysis capabilities.

Haystack

Haystack is a production-ready open-source AI framework designed to facilitate building AI applications. It offers a flexible components and pipelines architecture, allowing users to customize and build applications according to their specific requirements. With partnerships with leading LLM providers and AI tools, Haystack provides freedom of choice for users. The framework is built for production, with fully serializable pipelines, logging, monitoring integrations, and deployment guides for full-scale deployments on various platforms. Users can build Haystack apps faster using deepset Studio, a platform for drag-and-drop construction of pipelines, testing, debugging, and sharing prototypes.

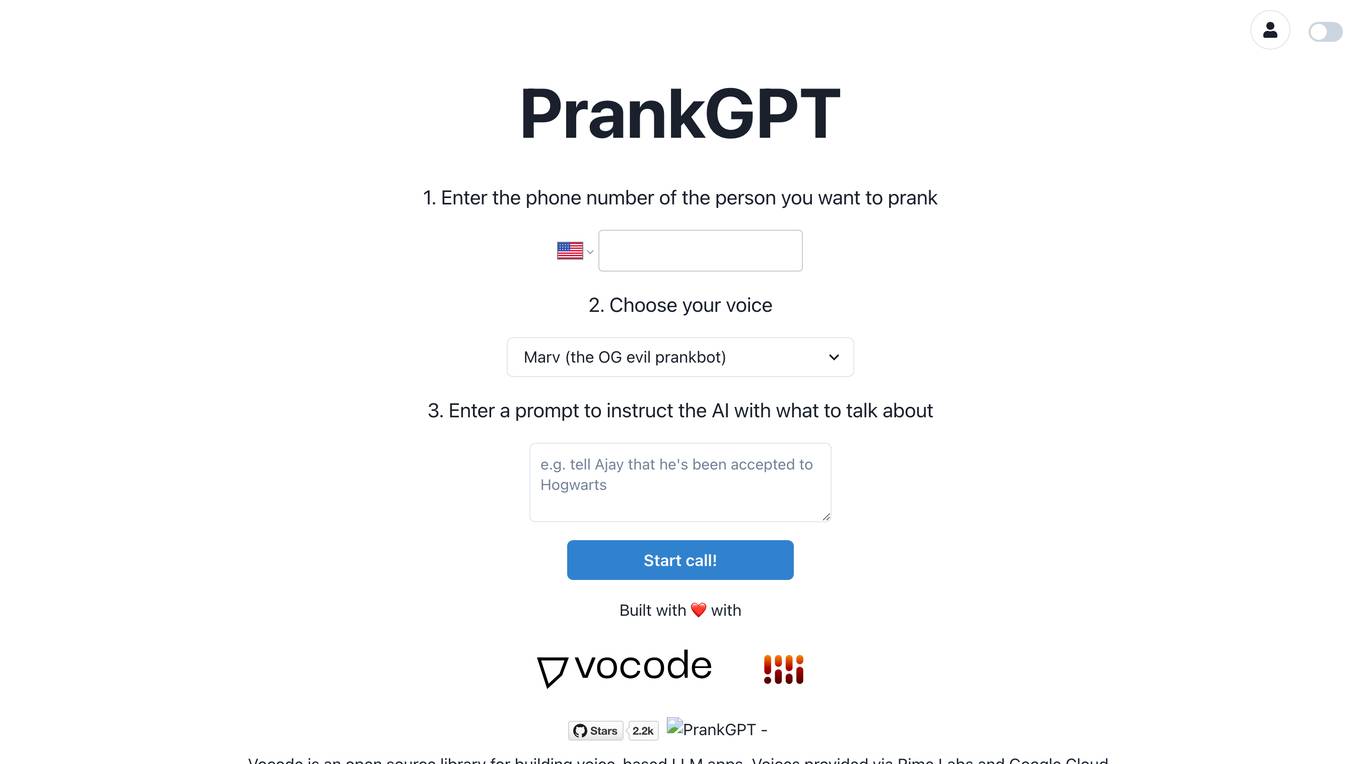

PrankGPT

PrankGPT is an AI tool designed for prank calling, allowing users to enter a phone number, choose a voice, and provide a prompt for the AI to talk about before initiating the call. The application is built with Vocode, an open-source library for creating voice-based LLM apps, and offers voices from Rime Labs and Google Cloud.

Karla

Karla is an AI tool designed for journalists to enhance their writing process by utilizing Large Language Models (LLMs). It helps journalists transform news information into well-structured articles efficiently, augment their sources, customize stories seamlessly, enjoy a sleek editing experience, and export their completed stories easily. Karla acts as a wrapper around the LLM of choice, providing dynamic prompts and integration into a text editor and workflow, allowing journalists to focus on writing without manual prompt crafting. It offers benefits over traditional LLM chat apps by providing efficient prompt crafting, seamless integration, enhanced outcomes, faster performance, model flexibility, and relevant content tailored for journalism.

Owlbot

Owlbot is one of the most advanced AI chatbot platforms in the world, empowering companies with AI to provide instant answers to customers, clients, and employees. It simplifies data analysis, integrates data from multiple sources, and offers customizable chatbot interfaces. Owlbot offers features like data integration, chatbot interface customization, conversation supervision, function calling, and lead generation. Its advantages include efficient data analysis, multilingual support, instant answers, a choice of LLMs, and lead generation capabilities. However, Owlbot's disadvantages include potential data security concerns, the need for user expertise, and limited customer interaction compared to human operators.

Golem

Golem is an AI chat application that provides a new ChatGPT experience. It offers a beautiful and user-friendly design, ensuring delightful interactions. Users can chat with a Large Language Model (LLM) securely, with data stored locally or on their personal cloud. Golem is open-source, allowing contributions and use as a reference for Nuxt 3 projects.

Documate

Documate is an open-source tool designed to make your documentation site intelligent by embedding AI chat dialogues. It allows users to ask questions based on the content of the site and receive relevant answers. The tool offers hassle-free integration with popular doc site platforms like VitePress, Docusaurus, and Docsify, without requiring AI or LLM knowledge. Users have full control over the code and data, enabling them to choose which content to index. Documate also provides a customizable UI to meet specific needs, all while being developed with care by AirCode.

Tafi

Tafi is a leading AI tool for 3D content creation, offering a Text-to-3D AI character engine that procedurally generates normalized 3D character and environment datasets at scale. It provides parametric character generation, real-time compatibility, dynamic clothing and hair simulation, semantic labeling, and metadata-rich structured data. Tafi is trusted by major LLM providers and technology brands for its high-quality assets and enterprise-ready solutions.

1 - Open Source AI Tools

Open-LLM-VTuber

Open-LLM-VTuber is a project in the early stages of development that allows users to interact with Large Language Models (LLMs) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on that platform.
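
A customizable LLM backend usually comes down to a small shared interface plus a configuration key that selects an implementation. The sketch below shows that pattern in general terms; it is not taken from Open-LLM-VTuber's code. The `LLMBackend` protocol, the two toy backends, the `BACKENDS` registry, and the `llm_backend` config key are all hypothetical names.

```python
from typing import Callable, Dict, Protocol


class LLMBackend(Protocol):
    """Anything with a `complete` method can serve as a backend."""

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Toy backend that repeats the prompt; stands in for a local model."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseBackend:
    """Second toy backend; stands in for a remote API client."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Registry mapping a config value to a backend factory.
BACKENDS: Dict[str, Callable[[], LLMBackend]] = {
    "echo": EchoBackend,
    "upper": UppercaseBackend,
}


def load_backend(config: dict) -> LLMBackend:
    """Instantiate the backend named by the hypothetical `llm_backend` key."""
    name = config["llm_backend"]
    if name not in BACKENDS:
        raise ValueError(f"unknown backend {name!r}; choose from {sorted(BACKENDS)}")
    return BACKENDS[name]()


if __name__ == "__main__":
    backend = load_backend({"llm_backend": "echo"})
    print(backend.complete("hello"))
```

Because the rest of an application only calls `complete`, swapping backends in this design is a one-line configuration change rather than a code change.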

20 - OpenAI GPTs

Agent Prompt Generator for LLM's

This GPT generates the best possible LLM-agents for your system prompts. You can also specify the model size, like 3B, 33B, 70B, etc.

Tattoo Ideas GPT

Helps design and customize tattoos, recommends artists, and provides aftercare advice.

Quick QR Art - QR Code AI Art Generator

Create, Customize, and Track Stunning QR Code Art with Our Free QR Code AI Art Generator. Seamlessly integrate these artistic codes into your marketing materials, packaging, and digital platforms.

Instant Command GPT

Executes tasks via short commands instantly, using a single session to customize commands.

GAPP STORE

Welcome to GAPP Store: Chat, create, customize—your all-in-one AI app universe

Sneaker Genius

Expert in sneaker customization, buying, and collecting, offering detailed advice on painting techniques and design inspiration.

Preference Card Estimator

Generates detailed orthopedic surgery cards using uploaded formats.

Vikas' Scripting Helper

Guides users in creating and customizing Airtable scripts with user-friendly explanations.

QR Code Creator & Customizer

Create a QR code in 30 seconds + add a cool design effect or overlay it on top of any image. Free, no watermarks, no email required, and we don't store your messages/images.

Corporate Trainer

Develops training programs, customizing content to fit corporate culture and objectives.