Best AI tools for Create Transformers

20 - AI tool Sites

FutureSmart AI

FutureSmart AI is a platform that provides custom Natural Language Processing (NLP) solutions. The platform focuses on integrating Mem0 with LangChain to enhance AI Assistants with Intelligent Memory. It offers tutorials, guides, and practical tips for building applications with large language models (LLMs) to create sophisticated and interactive systems. FutureSmart AI also features internship journeys and practical guides for mastering RAG with LangChain, catering to developers and enthusiasts in the realm of NLP and AI.

PPTs using GPTs

This website provides a tool that allows users to create PowerPoint presentations using GPTs (Generative Pre-trained Transformers). GPTs are large language models that can be used to generate text, translate languages, and answer questions. The tool is easy to use and can be used to create presentations on any topic. Users simply need to enter a few keywords and the tool will generate a presentation that is tailored to their needs.

GPTfy

GPTfy is a website that helps users find the best GPTs (Generative Pre-trained Transformers) for their needs. GPTs are AI-powered language models that can be used for a variety of tasks, such as writing, translating, and coding. GPTfy provides a directory of GPTs, as well as reviews and comparisons to help users choose the right GPT for their project.

NeuralBlender

NeuralBlender is a web-based application that allows users to create unique and realistic images using artificial intelligence. The application uses a generative adversarial network (GAN) to generate images from scratch, or to modify existing images. NeuralBlender is easy to use, and does not require any prior experience with artificial intelligence or image editing. Users simply need to upload an image or select a style, and the application will generate a new image based on the input. NeuralBlender can be used to create a wide variety of images, including landscapes, portraits, and abstract art. The application is also capable of generating images that are realistic, stylized, or even surreal.

SongR

SongR is an AI-powered application that allows users to create fully customized songs with just a few clicks, without the need for any musical experience. It enables everyone to generate unique, personalized songs that can be easily shared with others. SongR's all-in-one AI Text-to-Song Transformer feature generates custom lyrics based on keywords, adds vocals and accompaniments from a chosen genre, and creates a unique song for social media sharing. The platform aims to democratize the creation of songs and music for all users.

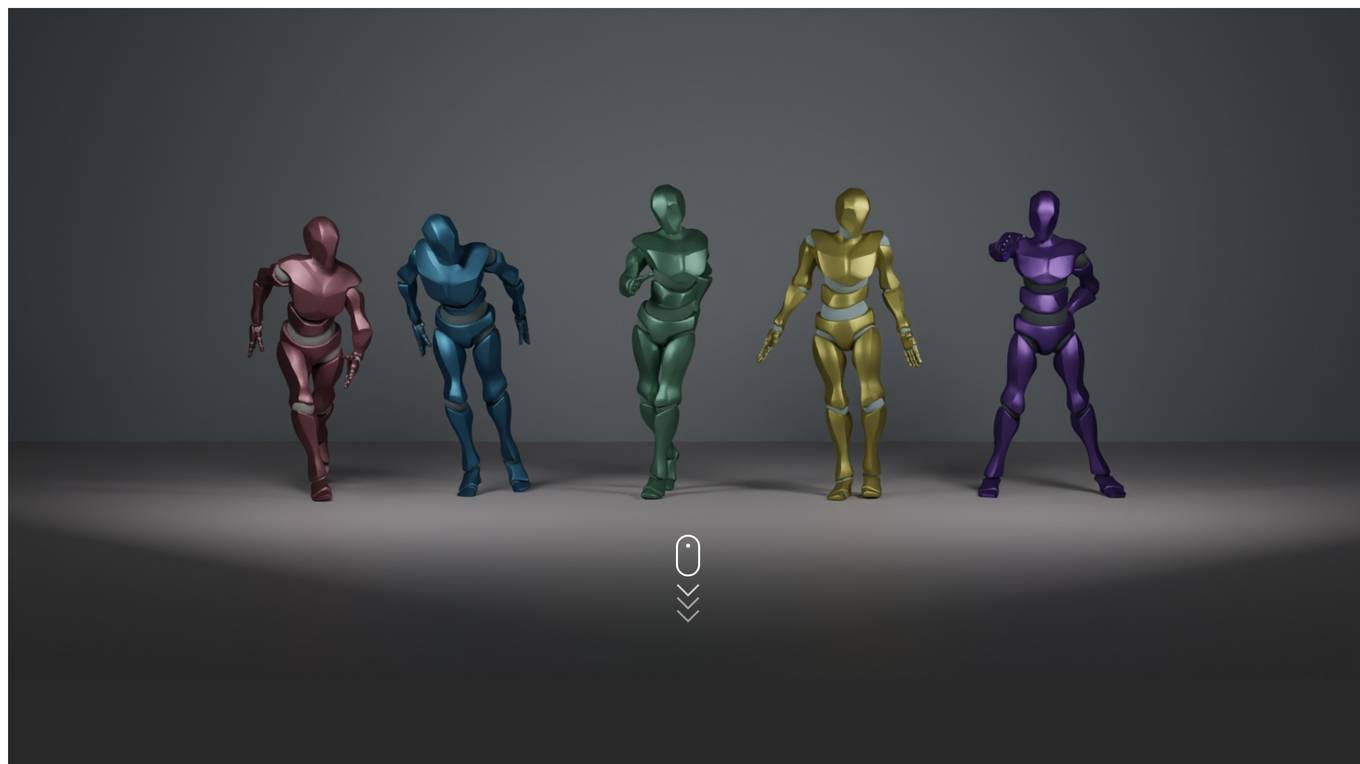

EDGE

EDGE is a powerful method for editable dance generation that creates realistic dances faithful to input music. It uses a transformer-based diffusion model with Jukebox for music feature extraction. EDGE offers editing capabilities like joint-wise conditioning, motion in-betweening, and dance continuation. Human raters prefer dances generated by EDGE over other methods due to its physical realism and powerful editing features.

Luma AI Video Generator

The Luma AI Video Generator is an advanced AI tool developed by Luma Labs that allows users to create realistic videos quickly from text prompts. It offers high-quality video generation capabilities using advanced neural networks and transformer models. The tool stands out in the market for its accessible, high-quality video creation features, making it ideal for both personal and professional use. Users can easily start creating videos for free online, leveraging the innovative technology developed by Luma Labs.

LITIC.AI

LITIC.AI is a leading innovative technology and intelligence company specializing in artificial intelligence applications, consultancy, and education. Established in Antwerp in 2009, the company has a strong foundation in IT services and advanced software for internet platforms. LITIC.AI focuses on developing functional AI applications for sectors such as law, real estate, mortgage, and e-commerce. In addition to AI solutions, the company offers consultancy services and educational workshops to empower clients with AI knowledge and tools. LITIC.AI also provides a range of free AI tools designed for practical applications, making AI accessible to everyone, regardless of their experience level.

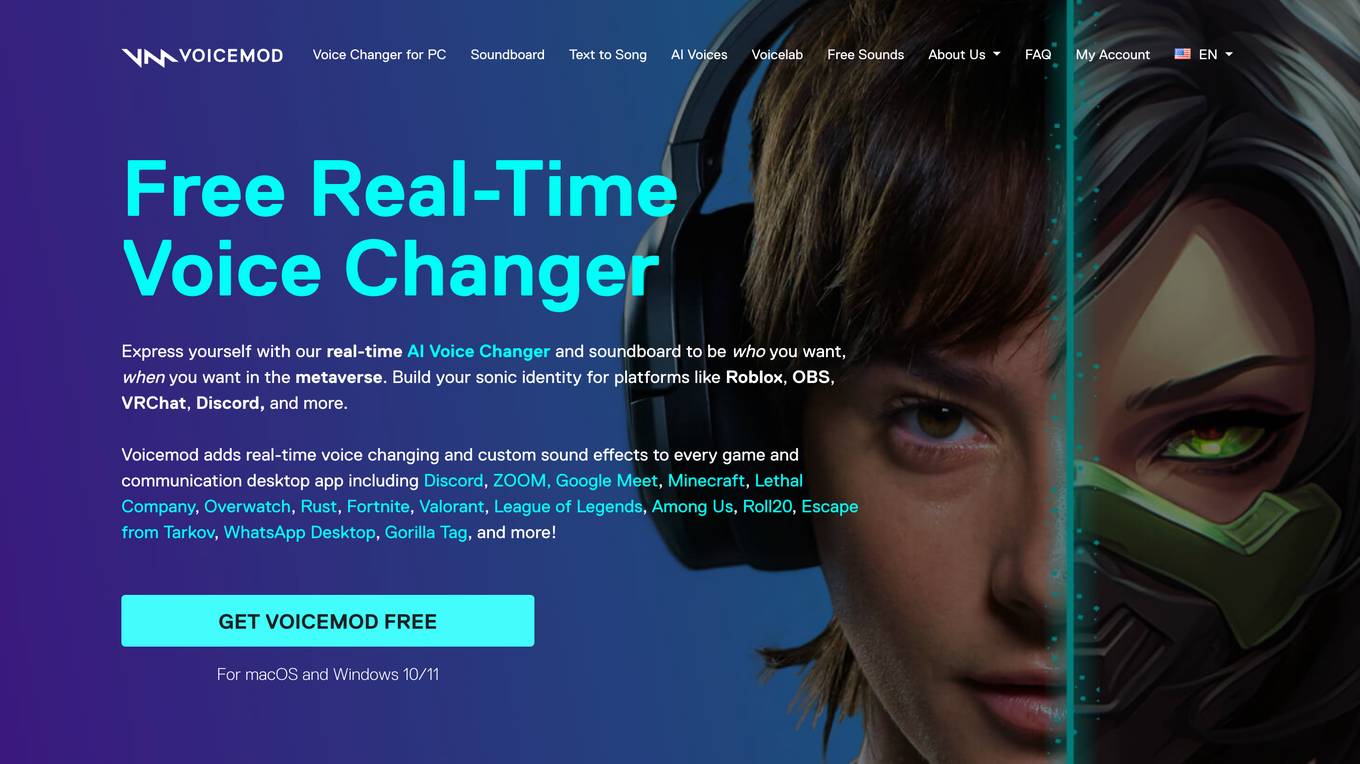

Voicemod

Voicemod is a free real-time voice changer and soundboard software that allows users to modify their voices in real-time. It is compatible with both Windows and macOS and can be used with a variety of applications, including games, chat apps, and video streaming platforms. Voicemod offers a wide range of voice effects, including robot, demon, chipmunk, woman, man, and many others. It also includes a soundboard feature that allows users to play sound effects at the touch of a button. Voicemod is a popular choice for gamers, content creators, and anyone who wants to add some fun and creativity to their voice communications.

Voicemod

Voicemod is a free real-time voice changer and soundboard available on both Windows and macOS. It allows users to change their voice in real-time, add sound effects, and create custom voices. Voicemod integrates with popular games, streaming software, and chat applications, making it a versatile tool for gamers, content creators, and anyone who wants to add some fun to their voice communication.

Dream Machine AI

Dream Machine AI by Luma Labs is an advanced artificial intelligence model designed to generate high-quality, realistic videos quickly from text and images. This highly scalable and efficient transformer model is trained directly on videos, enabling it to produce physically accurate, consistent, and eventful shots. The AI can generate 5-second video clips with smooth motion, cinematic quality, and dramatic elements, transforming static snapshots into dynamic stories. It understands interactions between people, animals, and objects, allowing for videos with great character consistency and accurate physics. Dream Machine AI supports a wide range of fluid, cinematic, and naturalistic camera motions that match the emotion and content of the scene.

Flux AI

Flux AI is a cutting-edge AI image generator that utilizes transformer-based flow models to produce high-quality images. It offers three models - FLUX.1[pro], FLUX.1[dev], and FLUX.1[schnell], each catering to different user needs. From advertising to game development, Flux AI empowers users to create diverse visual content effortlessly. With its user-friendly interface and advanced capabilities, Flux AI is revolutionizing the field of AI art generation.

Imagen

Imagen is an AI application that leverages text-to-image diffusion models to create photorealistic images based on input text. The application utilizes large transformer language models for text understanding and diffusion models for high-fidelity image generation. Imagen has achieved state-of-the-art results in terms of image fidelity and alignment with text. The application is part of Google Research's text-to-image work and focuses on encoding text for image synthesis effectively.

Dream Machine AI

Dream Machine AI is a free, instant-access video generation model that transforms text and images into high-quality videos using advanced transformer models. It leverages Luma AI to create stunning videos effortlessly, with features like incredibly fast generation, realistic and consistent motion, high character consistency, and natural camera movements. Users can access the platform for free and enjoy the benefits of quick video generation with physically accurate and emotionally resonant content.

Flux AI

Flux AI is a cutting-edge text-to-image AI model developed by Black Forest Labs. It uses advanced transformer-powered flow models to generate high-quality images from text descriptions. Flux AI offers multiple model variants catering to different use cases and performance levels, with the fastest model, FLUX.1 [schnell], available for free under an Apache 2.0 license. Users can create various styles of images with prompt adherence, size/aspect variability, and output diversity. The application is committed to making advanced AI technology accessible to all users, fostering innovation and collaboration within the AI community.

Vidu Studio

Vidu Studio is an AI video generation platform that utilizes a text-to-video artificial intelligence model developed by ShengShu-AI in collaboration with Tsinghua University. It can create high-quality video content from text prompts, offering a 16-second 1080P video clip with a single click. The platform is built on the Universal Vision Transformer (U-ViT) architecture, combining Diffusion and Transformer models to produce realistic and detailed video content. Vidu Studio stands out for its ability to generate culturally specific content, particularly focusing on Chinese cultural elements like pandas and loongs. It is a pioneering platform in the field of text-to-video technology, with a strong potential to influence the future of digital media and content creation.

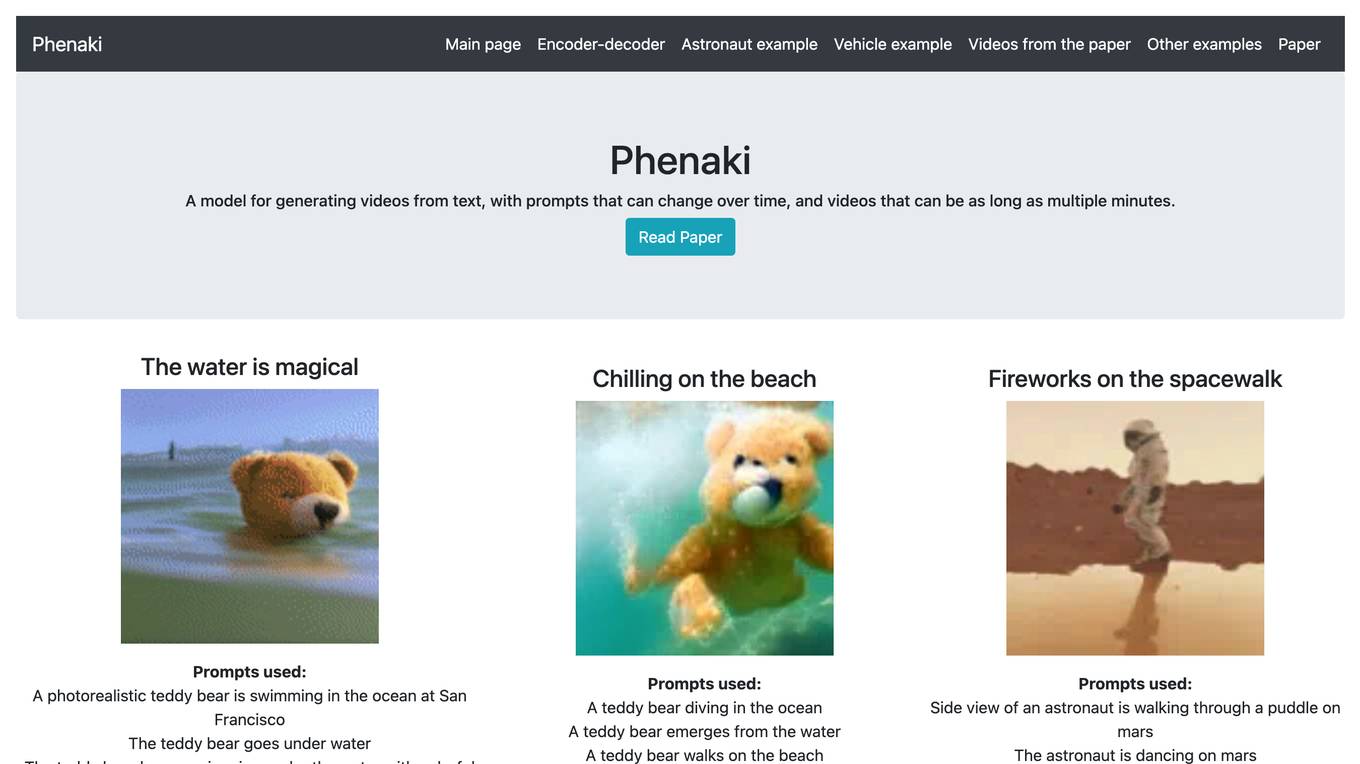

Phenaki

Phenaki is a model that generates realistic videos from a sequence of text prompts. Text-to-video generation is challenging because of its computational cost, the limited quantity of high-quality text-video data, and the variable length of videos. To address these issues, Phenaki introduces a causal model for learning video representations that compresses a video into a small set of discrete tokens. The tokenizer uses causal attention in time, which allows it to handle variable-length videos. To generate video tokens from text, Phenaki uses a bidirectional masked transformer conditioned on pre-computed text tokens; the generated tokens are then de-tokenized to produce the actual video. To work around the data shortage, Phenaki is trained jointly on a large corpus of image-text pairs and a smaller number of video-text examples, which yields generalization beyond what is available in the video datasets alone. Compared to previous video generation methods, Phenaki can generate arbitrarily long videos conditioned on a sequence of prompts (i.e., time-variable text or a story) in an open domain. According to its authors, it is the first work to study generating videos from time-variable prompts, and its video encoder-decoder outperforms the per-frame baselines used in the literature at the time in terms of spatio-temporal quality and the number of tokens per video.
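The link between causal attention in time and variable-length videos can be shown with a toy sketch. This is not Phenaki's implementation: the function names are hypothetical, each frame is reduced to a single number, and learned attention weights are replaced by a plain average over the frames a position is allowed to see. The point is only that, under a causal mask, the representation of an early frame does not change when more frames are appended.

```python
def causal_time_mask(num_frames):
    """mask[t][s] is True when frame t may attend to frame s (only s <= t)."""
    return [[s <= t for s in range(num_frames)] for t in range(num_frames)]


def masked_average(frame_features, mask):
    """Toy stand-in for attention: each frame averages the frames it may see."""
    outputs = []
    for row in mask:
        visible = [f for f, allowed in zip(frame_features, row) if allowed]
        outputs.append(sum(visible) / len(visible))
    return outputs


# Hypothetical per-frame features for a 5-frame clip and its 3-frame prefix.
long_clip = [1.0, 3.0, 5.0, 7.0, 9.0]
short_clip = long_clip[:3]

long_out = masked_average(long_clip, causal_time_mask(len(long_clip)))
short_out = masked_average(short_clip, causal_time_mask(len(short_clip)))

# The first three outputs match, so a prefix of any length encodes consistently.
print(long_out[:3] == short_out)
```

Because no frame looks at later frames, the same encoder can process clips of different lengths, or keep extending a clip, without recomputing what it produced for earlier frames.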

AIPetAvatar

AIPetAvatar.com is an AI-powered pet transformer that allows you to transform your pet into anything you can imagine. With just a few clicks, you can upload a photo of your pet and choose from a variety of templates to transform them into a superhero, a princess, a pirate, or even a work of art. The results are hilarious, heartwarming, and perfect for sharing on social media.

AI Music Generator

The AI Music Generator is an advanced platform powered by AI technology that allows users to create original music in any genre, style, or mood. It offers a range of features such as Text To Song, Lyrics To Song, AI Song Cover Generator, Voice Remover, Music Extension, Lyrics Generator, and more. The platform leverages deep learning models, transformer architecture, and neural networks to produce professional-quality music with voice synthesis and audio processing capabilities. Users can customize music styles, genres, and arrangements, and the tool is suitable for musicians, content creators, game developers, filmmakers, podcasters, businesses, and creative professionals.

Stable Diffusion 3

Stable Diffusion 3 is an advanced text-to-image model developed by Stability AI, offering significant improvements in image fidelity, multi-subject handling, and text adherence. Leveraging the Multimodal Diffusion Transformer (MMDiT) architecture, it features separate weights for image and language representations. Users can access the model through the Stable Diffusion 3 API, download options, and online platforms to experience its capabilities and benefits.

1 - Open Source AI Tools

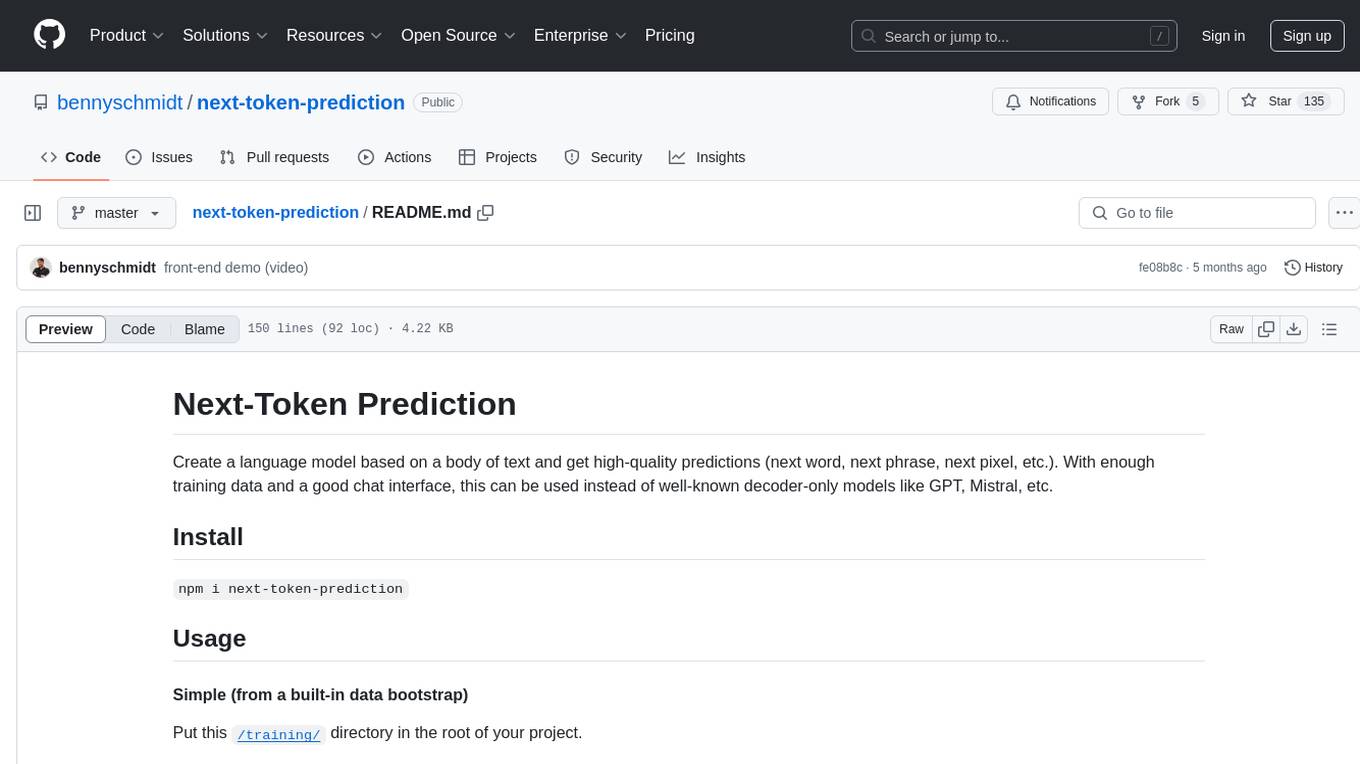

next-token-prediction

Next-Token Prediction is a language model tool that allows users to create high-quality predictions for the next word, phrase, or pixel based on a body of text. It can be used as an alternative to well-known decoder-only models like GPT and Mistral. The tool provides options for simple usage with built-in data bootstrap or advanced customization by providing training data or creating it from .txt files. It aims to simplify methodologies, provide autocomplete, autocorrect, spell checking, search/lookup functionalities, and create pixel and audio transformers for various prediction formats.
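As a rough illustration of the idea behind next-token prediction from a body of text, here is a minimal bigram sketch. It does not use or reproduce the next-token-prediction project's API; the function names `train_bigram` and `predict_next` and the tiny corpus are assumptions made for this example only.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each token, which tokens follow it in the training text."""
    tokens = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, token, k=1):
    """Return up to k of the most frequent followers of token ([] if unseen)."""
    counts = follows.get(token.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(k)]


# Hypothetical training text; a real tool would read this from .txt files.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)

print(predict_next(model, "the"))      # most frequent follower of "the"
print(predict_next(model, "unicorn"))  # unseen token, so no suggestion
```

The same lookup table supports autocomplete-style features: given the last token typed, suggest the most frequent followers. Longer contexts (trigrams and beyond) trade more training data for better predictions.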

20 - OpenAI GPTs

Cartoon Transformer

I transform photos into cartoons, maintaining their original essence.

Chibify It (Chibi Art Transformer)

Expert in transforming photos into chibi-style illustrations using DALL-E.

Rockstar Art Transformer

Recreates images in the style of the GTA and Red Dead Redemption games.

PieGPT

Whimsical title transformer and pie-inclusive recipe creator. Type something like "make me a daft Pie Nation recipe for the film 'Friday the Thirteenth'" and watch as "Pieday the Thirteenth" teases you with meat and pastry horror...

Your JoJo Stand

Transforms photos into JoJo-style Stands. After uploading a photo, type: Create JoJo Stand

Confident Communicator

Generates, elevates, and transforms all types of communications, empowering you to effortlessly create messages in your style, invent new voices, or tap into its collection of learned tones.