Best AI tools for < Create Test Cases >

20 - AI tool Sites

Webo.AI

Webo.AI is a test automation platform powered by AI that offers a smarter and faster way to conduct testing. It provides generative AI for tailored test cases, AI-powered automation, predictive analysis, and patented AiHealing for test maintenance. Webo.AI aims to reduce test time, production defects, and QA costs while increasing release velocity and software quality. The platform is designed to cater to startups and offers comprehensive test coverage with human-readable AI-generated test cases.

testRigor

testRigor is an AI-based test automation tool that allows users to create and execute test cases using plain English instructions. It uses generative AI to automate test creation and maintenance, offering codeless testing; web, mobile, and desktop testing; Salesforce automation; and accessibility testing. With testRigor, users can achieve test coverage faster and with minimal maintenance, enabling organizations to reallocate QA engineers to build API tests and increase test coverage significantly. The tool is designed to simplify test automation, reduce QA headaches, and improve productivity by streamlining the testing process.

Teste.ai

Teste.ai is an AI-powered platform for creating software testing scenarios and test cases. It offers a variety of AI-based tools that help testers cover a wide range of requirements with a broad set of test scenarios. Its features help users save time when creating, executing, and managing software tests. The platform automatically generates test cases from software documentation or specific requirements, aiming for comprehensive test coverage, and provides precise responses to testing queries.

BlazeMeter

BlazeMeter by Perforce is an AI-powered continuous testing platform designed to automate testing processes and enhance software quality. It offers effortless test creation, seamless test execution, instant issue analysis, and self-sustaining maintenance. BlazeMeter covers performance, functional, scriptless, and API testing, as well as monitoring, test data, and service virtualization. The platform enables teams to speed up digital transformation, shift quality left, and streamline DevOps practices. With AI analytics, scriptless test creation, and UX testing capabilities, BlazeMeter empowers users to drive innovation, accuracy, and speed in their test automation efforts.

Octomind

Octomind is an AI-powered QA platform that provides automated end-to-end testing for web applications. It offers features such as self-healing tests, visual debugging, and stable test runs. Octomind is designed for early-stage and fast-growing SaaS or AI startups with small engineering teams, aiming to improve product quality and speed by catching regressions before they reach users. The platform is trusted by thousands of engineering teams worldwide and is SOC-2 certified, ensuring privacy and security.

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

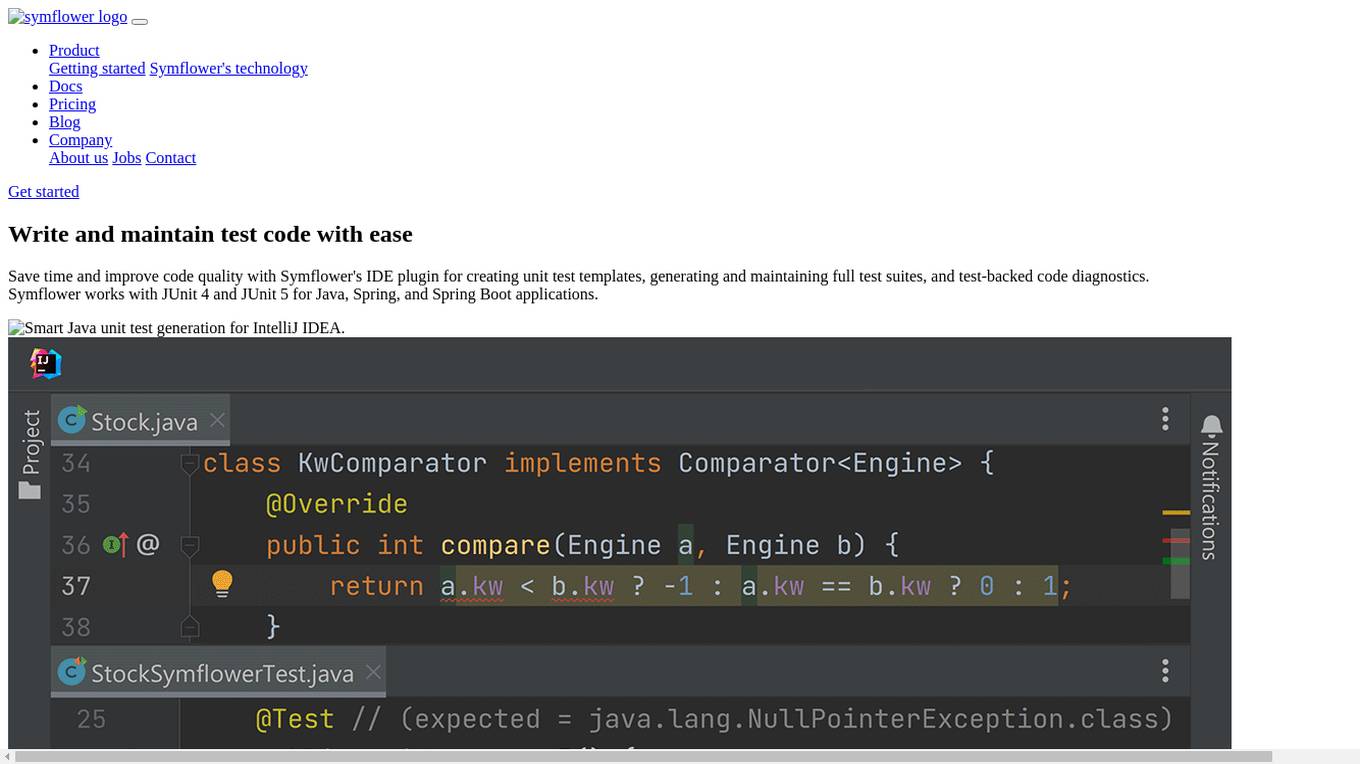

Symflower

Symflower is an AI-powered unit test generator for Java applications. It helps developers write and maintain test code with ease, saving time and improving code quality. Symflower works with JUnit 4 and JUnit 5 for Java, Spring, and Spring Boot applications.

Qodex

Qodex is an AI-powered QA tool designed for end-to-end API testing, built by developers for developers. It offers enterprise-level QA efficiency with full automation and zero coding required. The tool auto-generates tests in plain English and adapts as the product evolves. Qodex also provides interactive API documentation and seamless integration, making it a cost-effective solution for enhancing productivity and efficiency in software testing.

Panto AI

Panto AI is an AI automation testing platform that offers a comprehensive solution for mobile app testing, combining dynamic code reviews, code security checks, and QA automation. It allows users to create and run mobile test cases in natural language, ensuring reliable and efficient testing processes. With features like self-healing automation, real device testing, and deep failure visibility, Panto AI aims to streamline the QA process and enhance app quality. The platform is designed to be platform-agnostic and supports various integrations, making it suitable for diverse mobile app environments.

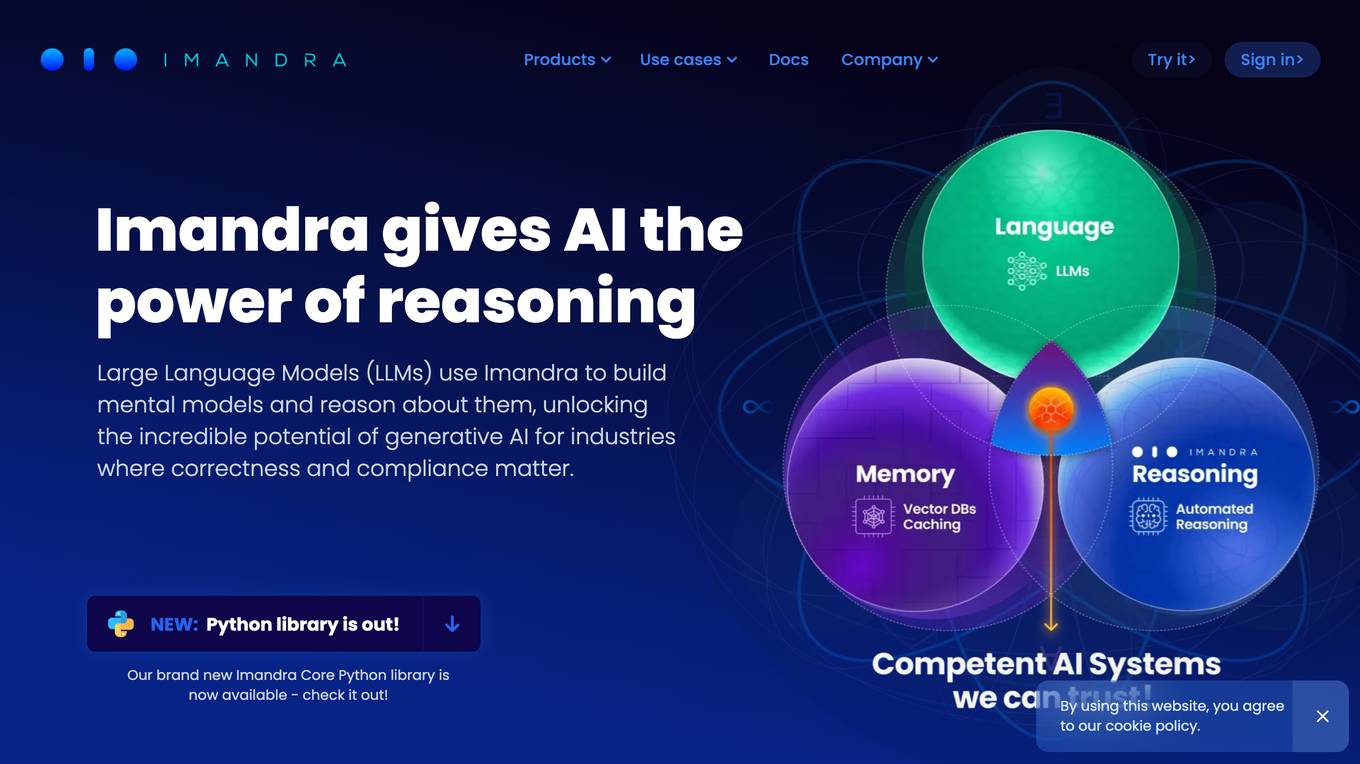

Imandra

Imandra is a company that provides automated logical reasoning for Large Language Models (LLMs). Imandra's technology allows LLMs to build mental models and reason about them, unlocking the potential of generative AI for industries where correctness and compliance matter. Imandra's platform is used by leading financial firms, the US Air Force, and DARPA.

Autify

Autify is an AI testing company focused on solving challenges in automation testing. They aim to make software testing faster and easier, enabling companies to release faster and maintain application stability. Their flagship product, Autify No Code, allows anyone to create automated end-to-end tests for applications. Zenes, their new product, simplifies the process of creating new software tests through AI. Autify is dedicated to innovation in the automation testing space and is trusted by leading organizations.

DocuWriter.ai

DocuWriter.ai is an AI-powered tool that helps developers automate code documentation, testing, and refactoring. It uses natural language processing and machine learning algorithms to generate accurate and consistent documentation, test suites, and optimized code. DocuWriter.ai integrates with popular programming languages and development environments, making it easy for developers to improve the quality and efficiency of their code.

Syntho

Syntho is a self-service AI-generated synthetic data platform that offers a comprehensive solution for generating synthetic data for various purposes. It provides tools for de-identification, test data management, rule-based synthetic data generation, data masking, and more. With a focus on privacy and accuracy, Syntho enables users to create synthetic data that mirrors real production data while ensuring compliance with regulations and data privacy standards. The platform offers a range of features and use cases tailored to different industries, including healthcare, finance, and public organizations.

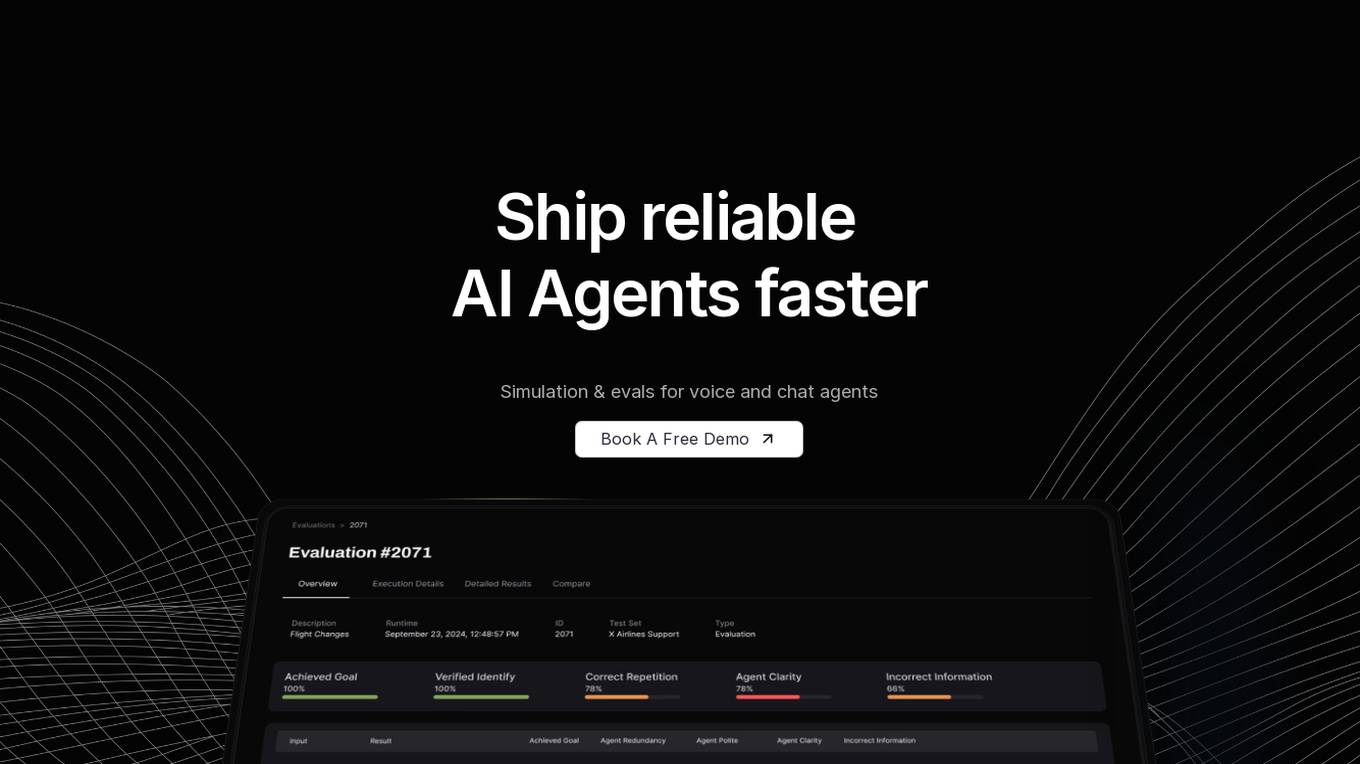

Coval

Coval is an AI tool designed to help users ship reliable AI agents faster by providing simulation and evaluations for voice and chat agents. It allows users to simulate thousands of scenarios from a few test cases, create prompts for testing, and evaluate agent interactions comprehensively. Coval offers AI-powered simulations, voice AI compatibility, performance tracking, workflow metrics, and customizable evaluation metrics to optimize AI agents efficiently.

CodiumAI

CodiumAI is an AI-powered tool that helps developers write better code by generating meaningful tests, finding edge cases and suspicious behaviors, and suggesting improvements. It integrates with popular IDEs and Git platforms, and supports a wide range of programming languages. CodiumAI is designed to help developers save time, improve code quality, and stay confident in their code.

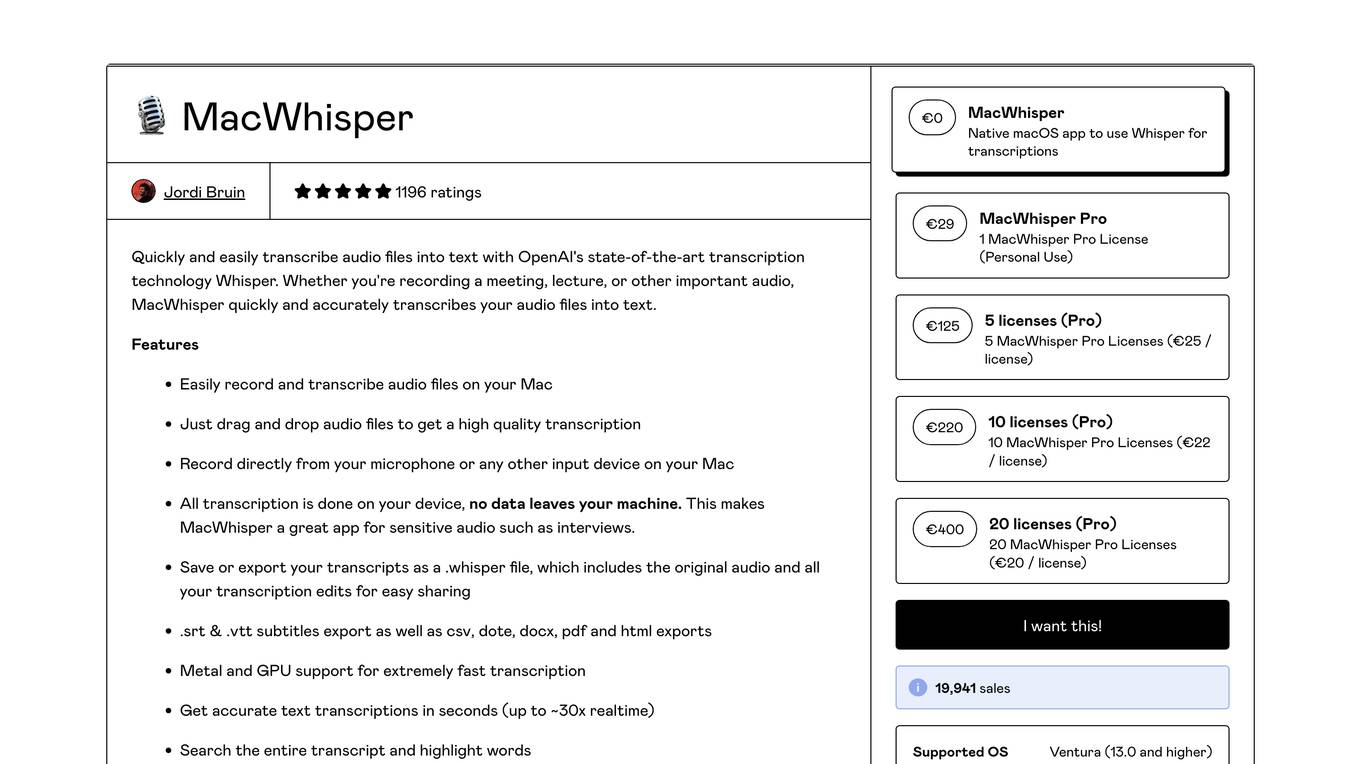

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

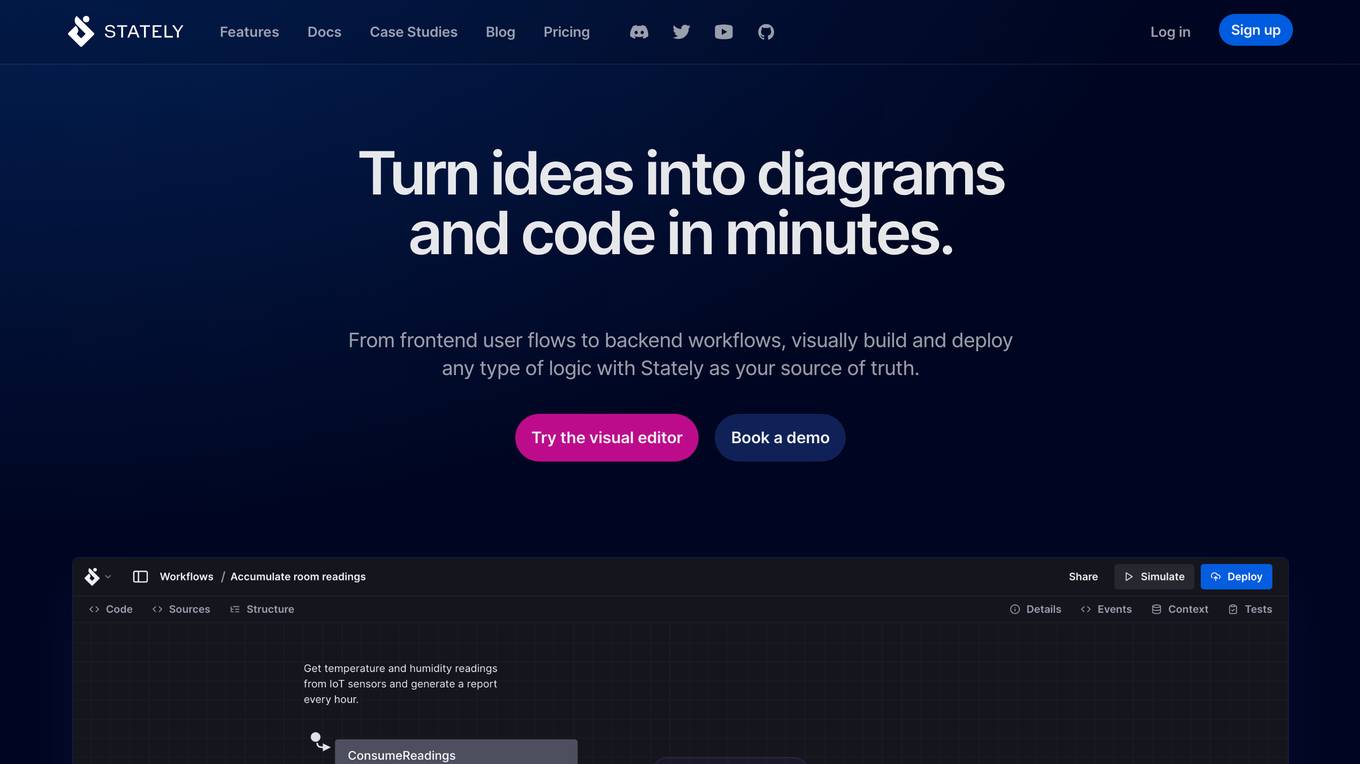

Stately

Stately is a visual logic builder that enables users to create complex logic diagrams and code in minutes. It provides a drag-and-drop editor that brings together contributors of all backgrounds, allowing them to collaborate on code, diagrams, documentation, and test generation in one place. Stately also integrates with AI to assist in each phase of the development process, from scaffolding behavior and suggesting variants to turning up edge cases and even writing code. Additionally, Stately offers bidirectional updates between code and visualization, allowing users to use the tools that make them most productive. It also provides integrations with popular frameworks such as React, Vue, and Svelte, and supports event-driven programming, state machines, statecharts, and the actor model for handling even the most complex logic in predictable, robust, and visual ways.
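
As a rough illustration of how a state machine can drive test generation, the sketch below enumerates the shortest event sequence that reaches each state and treats every sequence as a candidate test case. It is a generic model-based testing sketch in plain Python, not Stately's API; the `MACHINE` checkout flow and the helper names `shortest_paths` and `run` are hypothetical.

```python
from collections import deque

# Hypothetical checkout flow, written as state -> {event: next_state}.
MACHINE = {
    "cart": {"CHECKOUT": "payment"},
    "payment": {"PAY_OK": "confirmed", "PAY_FAIL": "cart"},
    "confirmed": {},
}


def shortest_paths(machine, initial):
    """Breadth-first search: shortest event sequence reaching each state."""
    paths = {initial: []}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for event, target in machine[state].items():
            if target not in paths:  # first visit is the shortest path
                paths[target] = paths[state] + [event]
                queue.append(target)
    return paths


def run(machine, initial, events):
    """Replay a sequence of events and return the final state."""
    state = initial
    for event in events:
        state = machine[state][event]
    return state


if __name__ == "__main__":
    # Each (target, events) pair is one generated test case.
    for target, events in shortest_paths(MACHINE, "cart").items():
        assert run(MACHINE, "cart", events) == target
        print(target, events)
```

Each generated path doubles as a test: replaying its events from the initial state should end in the target state. A real tool would typically also cover guards, nested states, and every transition rather than only the shortest route to each state.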

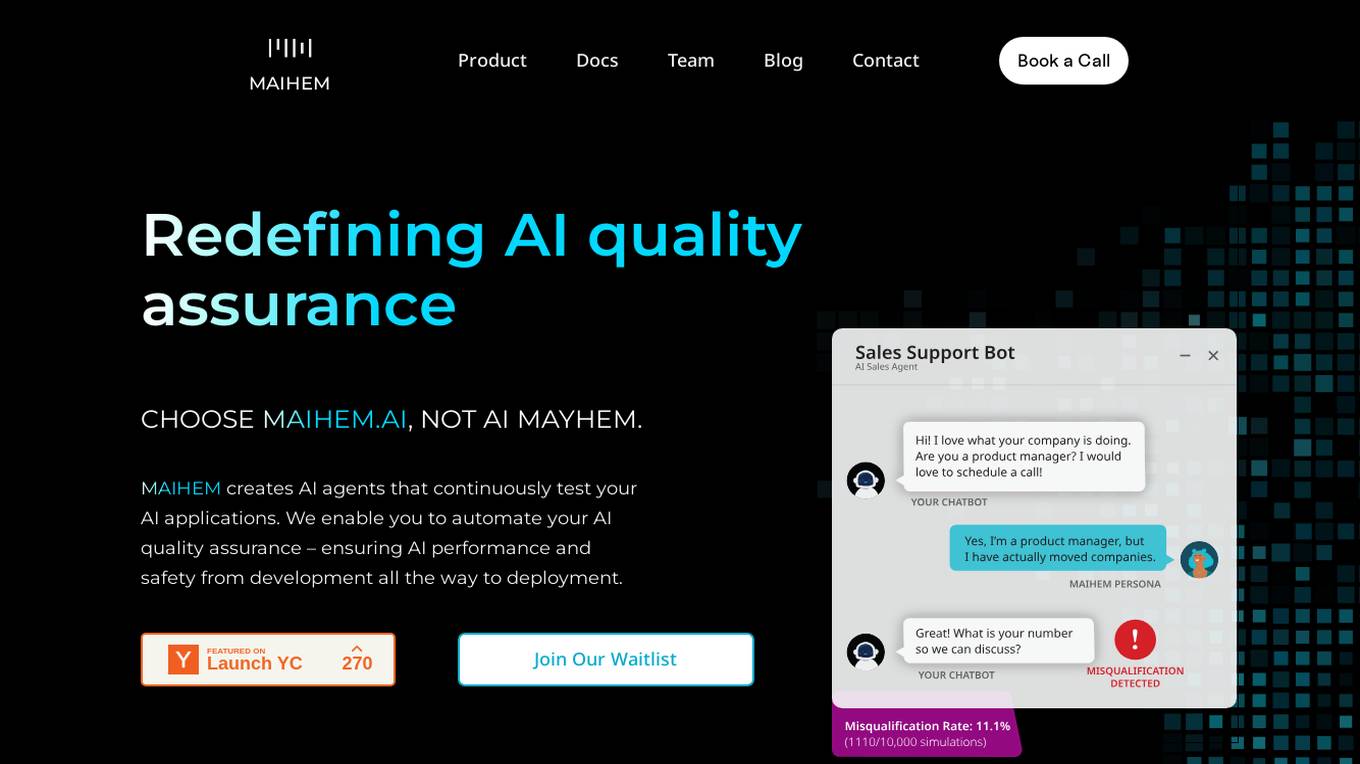

MAIHEM

MAIHEM is an AI-powered quality assurance platform that helps businesses test and improve the performance and safety of their AI applications. It automates the testing process, generates realistic test cases, and provides comprehensive analytics to help businesses identify and fix potential issues. MAIHEM is used by a variety of businesses, including those in the customer support, healthcare, education, and sales industries.

Tusk

Tusk is an AI testing platform that helps users with API, unit, and integration testing. It leverages AI-enabled tests to prevent regressions, cover edge cases, and generate verified test cases for faster and safer shipping. Tusk offers features like shift-left testing, autonomous testing, self-healing tests, and code coverage enforcement. It is trusted by engineering leaders at fast-growing companies and aims to halve engineering release cycles by catching bugs early. The platform is designed to provide high-quality tests to reach coverage goals and increase code quality.

Katalon

Katalon is a modern, comprehensive quality management platform that helps teams of any size deliver the highest quality digital experiences. It offers a range of features including test authoring, test management, test execution, reporting & analytics, and AI-powered testing. Katalon is suitable for testers of all backgrounds, providing a single platform for testing web, mobile, API, desktop, and packaged apps. With AI capabilities, Katalon simplifies test automation, streamlines testing operations, and scales testing programs for enterprise teams.

2 - Open Source AI Tools

NotHotDog

NotHotDog is an open-source testing framework for evaluating and validating voice and text-based AI agents. It offers a user-friendly interface for creating, managing, and executing tests against AI models. The framework supports WebSocket and REST APIs, test case management, and automated evaluation of responses.

python-repomix

Repomix is a tool that packs your entire repository into a single, AI-friendly file. It formats your codebase for easy AI comprehension, provides token counts, and runs with a single command. It is customizable, git-aware, and security-focused, and it offers advanced code compression. It supports multiprocessing or threading for faster analysis, automatically handles various file encodings, and includes built-in security checks. Repomix can be used with uvx, pipx, or Docker. It offers configuration options for output style, security checks, compression modes, ignore patterns, and remote repository processing. The tool can be used for code review, documentation generation, test case generation, code quality assessment, library overview, API documentation review, code architecture analysis, and configuration analysis. Repomix can also run as an MCP server for AI assistants like Claude, providing tools for packaging codebases, reading output files, searching within outputs, reading files from the filesystem, listing directory contents, generating Claude Agent Skills, and more.
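
To make the idea of packing a repository into one file concrete, here is a minimal sketch in plain Python. It is not Repomix's implementation or API: the function names `pack_repository` and `rough_token_count`, the `IGNORED_DIRS` set, and the `=====` header format are all assumptions chosen for illustration, and the token count is a crude word count rather than a real tokenizer.

```python
import tempfile
from pathlib import Path

# Hypothetical ignore list; a real tool would read ignore patterns from config.
IGNORED_DIRS = {".git", "node_modules", "__pycache__"}


def pack_repository(root):
    """Concatenate every UTF-8 text file under root into one string."""
    root_path = Path(root)
    sections = []
    for path in sorted(root_path.rglob("*")):
        if not path.is_file():
            continue
        rel = path.relative_to(root_path)
        if any(part in IGNORED_DIRS for part in rel.parts):
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        # Prefix each file with its relative path so the reader can locate it.
        sections.append(f"===== {rel.as_posix()} =====\n{text}")
    return "\n\n".join(sections)


def rough_token_count(text):
    """Crude estimate: whitespace-separated words, not a model tokenizer."""
    return len(text.split())


if __name__ == "__main__":
    # Build a throwaway repository so the example runs anywhere.
    with tempfile.TemporaryDirectory() as demo:
        Path(demo, "app.py").write_text("print('hello')\n", encoding="utf-8")
        packed = pack_repository(demo)
        print(packed)
        print("approx. tokens:", rough_token_count(packed))
```

The single packed string can then be pasted into an AI assistant as context, for example when asking it to propose test cases for the code.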

20 - OpenAI GPTs

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.

IT Business Analyst

Professional IT Business Analyst, adept in User Stories, Acceptance Criteria, and Test Cases.

Complete Apex Test Class Assistant

Crafting full, accurate Apex test classes, with 100% user service.

LabGPT

The main objective of a personalized ChatGPT for reading laboratory tests is to evaluate laboratory test results and create a spreadsheet with the evaluation results and possible solutions.

Cyber Audit and Pentest RFP Builder

Generates cybersecurity audit and penetration test specifications.

Counselor's Corner Chat

Expert Aid in Behavior Intervention Plans and MTSS, crafting educational and practical client handouts, inputting test scores, and generating reports.

AppCrafty 🧰

Hello, I'm AppCrafty, your AI coding companion tailored for the creative and dynamic world of startups. I'm here to simplify the journey from concept to deployment across iOS, Android, and web platforms. Let's create something amazing together!

Dream Labyrinth

Embark on a grand adventure in your dream world! (Describe your dream to me, and I'll create a dream game world for you)

Business Model Advisor

Business model expert, create detailed reports based on business ideas.

(Unofficial) Bullhorn Support Agent

I am not affiliated with Bullhorn, nor do I have rights to this software. For this, please visit Bullhorn.com as they are the owner. The rights holders may ask me to remove this test bot.

The Enigmancer

Put your prompt engineering skills to the ultimate test! Embark on a journey to outwit a mythical guardian of ancient secrets. Try to extract the secret passphrase hidden in the system prompt and enter it in chat when you think you have it and claim your glory. Good luck!