Best AI tools for Connect To LLM

20 - AI tool Sites

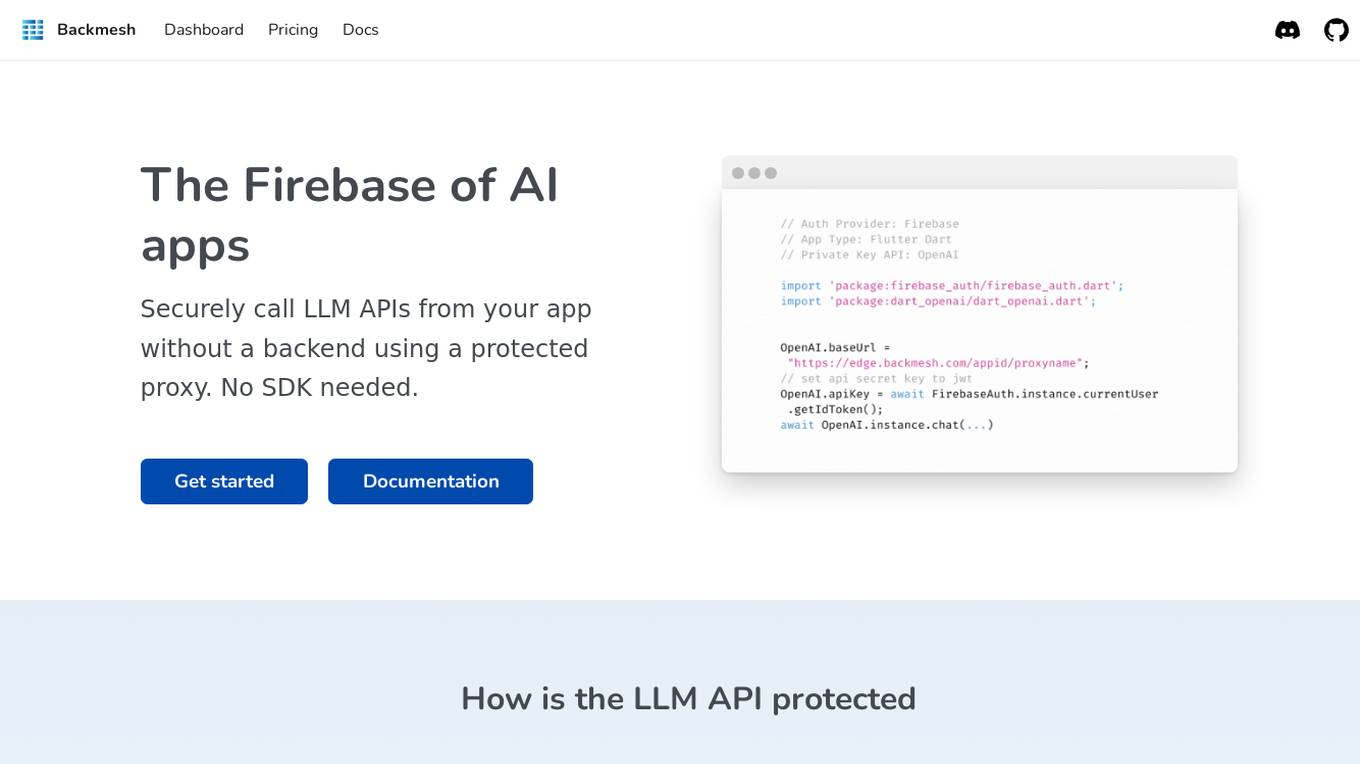

Backmesh

Backmesh is an AI tool that serves as a proxy on edge CDN servers, enabling secure and direct access to LLM APIs without the need for a backend or SDK. It allows users to call LLM APIs from their apps, ensuring protection through JWT verification and rate limits. Backmesh also offers user analytics for LLM API calls, helping identify usage patterns and enhance user satisfaction within AI applications.
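The two protections mentioned above, JWT verification and rate limits, can be illustrated with a small standard-library sketch. This is not Backmesh's implementation or API. The names `verify_hs256`, `FixedWindowLimiter`, and `authorize` are hypothetical, and HS256 with a shared secret is an assumption chosen to keep the example self-contained (tokens from hosted auth providers are commonly signed with asymmetric keys instead).

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def b64url_decode(part: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Demo helper (hypothetical): build an HS256-signed token."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes) -> Optional[dict]:
    """Return the claims if the signature and expiry check out, else None."""
    try:
        header, payload, sig = token.split(".")
        if json.loads(b64url_decode(header)).get("alg") != "HS256":
            return None
        expected = hmac.new(
            secret, f"{header}.{payload}".encode(), hashlib.sha256
        ).digest()
        if not hmac.compare_digest(expected, b64url_decode(sig)):
            return None
        claims = json.loads(b64url_decode(payload))
    except ValueError:  # malformed token, bad base64, or bad JSON
        return None
    # A token without an "exp" claim is treated as expired in this sketch.
    if not isinstance(claims, dict) or claims.get("exp", 0) < time.time():
        return None
    return claims


class FixedWindowLimiter:
    """Allow at most `limit` calls per user in each `window_s`-second window."""

    def __init__(self, limit: int, window_s: float):
        self.limit, self.window_s = limit, window_s
        self.counts = {}  # user id -> (window start, calls in window)

    def allow(self, user: str, now: float) -> bool:
        start, count = self.counts.get(user, (now, 0))
        if now - start >= self.window_s:
            start, count = now, 0  # window elapsed: start a fresh one
        if count >= self.limit:
            return False
        self.counts[user] = (start, count + 1)
        return True


def authorize(token: str, secret: bytes, limiter: FixedWindowLimiter,
              now: Optional[float] = None) -> bool:
    """Gate a request: the token must be valid and the user under the limit."""
    claims = verify_hs256(token, secret)
    if claims is None:
        return False
    current = time.time() if now is None else now
    return limiter.allow(str(claims.get("sub")), current)
```

In a proxy, a check like `authorize` would run before the request is forwarded to the LLM provider, so the provider's API key never has to ship inside the client app.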

OAK

OAK is an open-source platform for building and deploying custom AI agents quickly and easily. It offers a modular design, powerful plugins, and seamless integration with various AI models. OAK is scalable, flexible, and developer-friendly, allowing users to create AI agents in minutes without hassle.

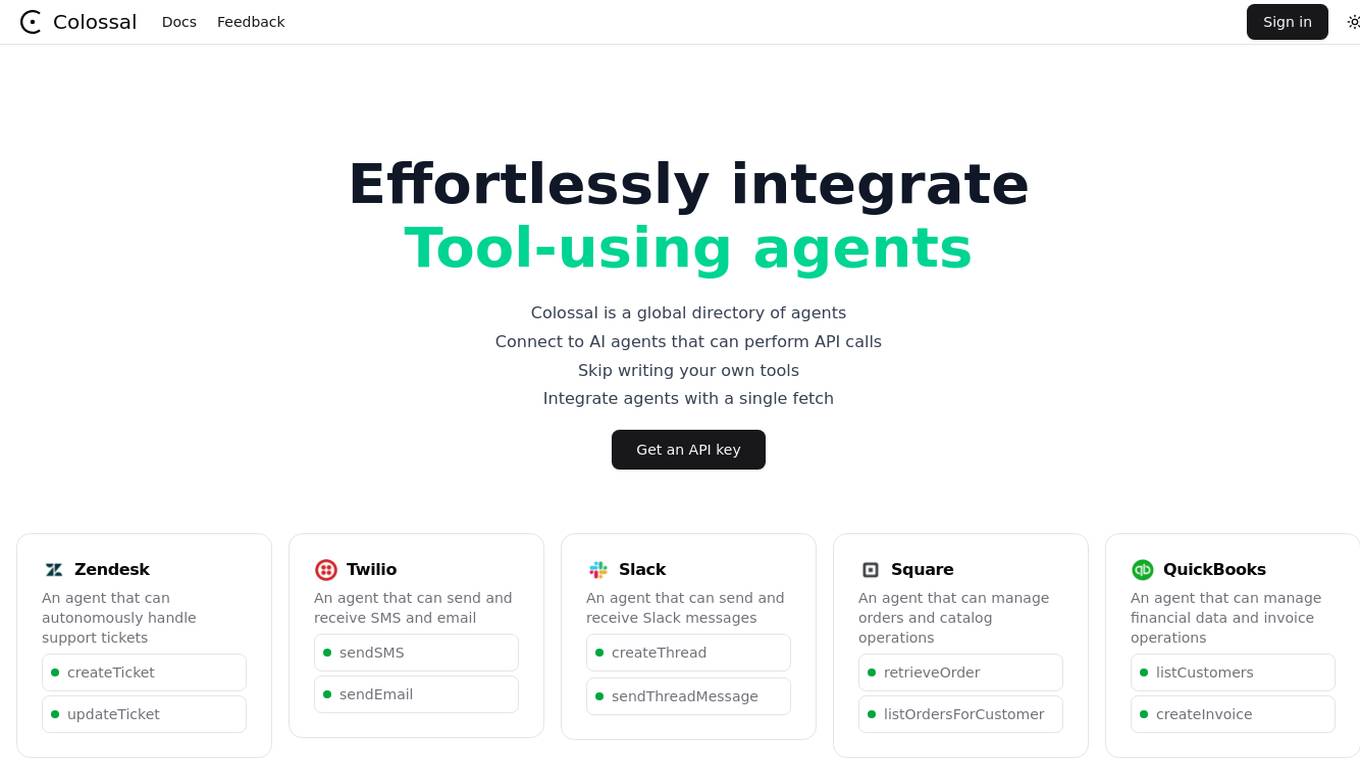

Colossal

Colossal is a global directory of AI agents that allows users to effortlessly integrate tool-using agents for various tasks. Users can connect to AI agents that can perform API calls, skip writing their own tools, and integrate agents with a single fetch. The platform offers agents for support tickets, SMS and email handling, Slack messages, order and catalog operations, financial data management, and more.

Prodmagic

Prodmagic is an AI tool that enables users to easily build advanced chatbots in minutes. With Prodmagic, users can connect their custom LLMs to create chatbots that can be integrated on any website with just a single line of code. The platform allows for easy customization of chatbots' appearance and behavior without the need for coding. Prodmagic also offers integration with custom LLMs and OpenAI, providing a seamless experience for users looking to enhance their chatbot capabilities. Additionally, Prodmagic provides essential features such as chat history, error monitoring, and simple pricing plans, making it a convenient and cost-effective solution for chatbot development.

AiPlus

AiPlus is an AI tool designed to serve as a cost-efficient model gateway. It offers users a platform to access and utilize various AI models for their projects and tasks. With AiPlus, users can easily integrate AI capabilities into their applications without the need for extensive development or resources. The tool aims to streamline the process of leveraging AI technology, making it accessible to a wider audience.

LangSearch

LangSearch is an AI tool that offers a free Web Search API and Rerank API, serving as the World Engine for AGI. It allows users to connect their LLM applications to access clean, accurate, high-quality context from billions of web documents, including news, images, videos, and more. The tool supports natural language search and provides enhanced search details for various content types.

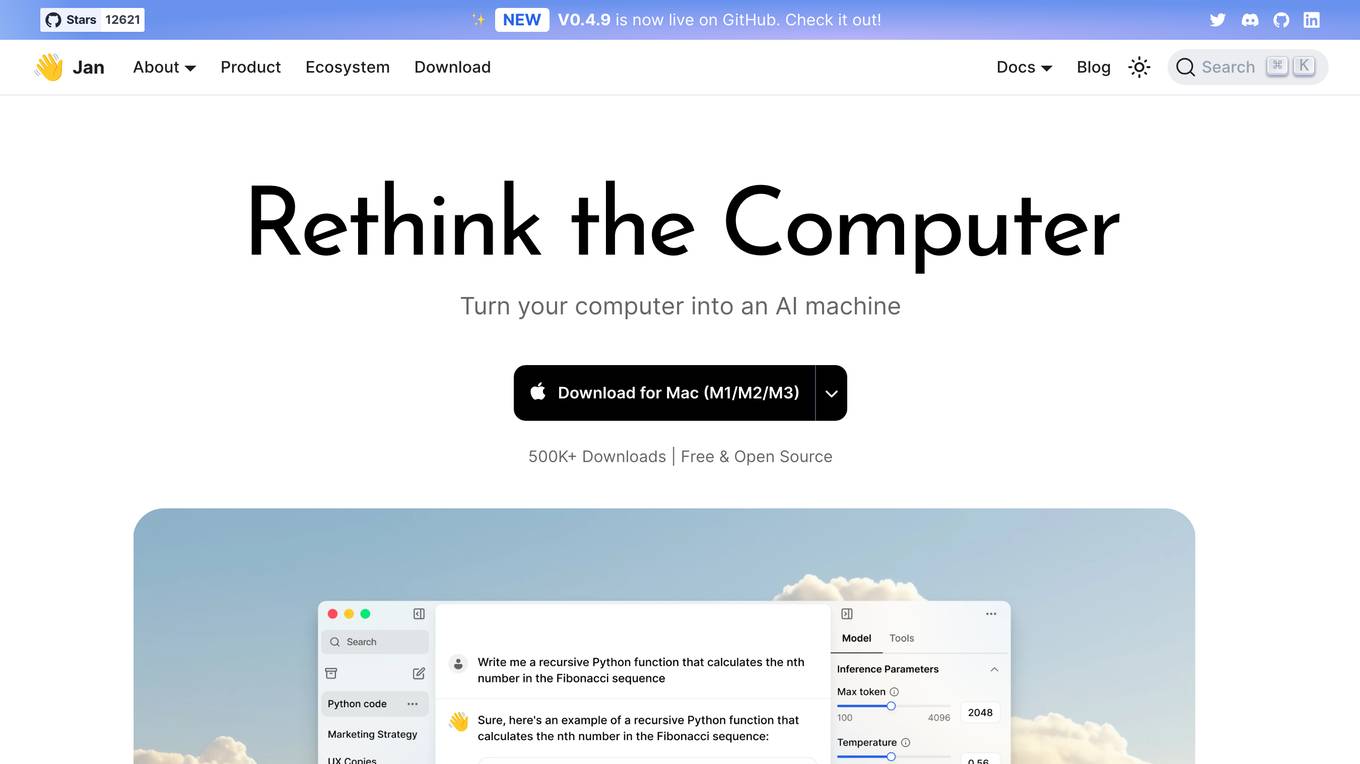

Jan

Jan is an open-source ChatGPT alternative that runs 100% offline. It allows users to chat with AI, download and run powerful models, connect to cloud AIs, set up a local API server, and chat with files. Highly customizable, Jan also offers features like creating personalized AI assistants, memory, and extensions. The application prioritizes local-first AI, user-owned data, and full customization, making it a versatile tool for AI enthusiasts and developers.

Monitr

Monitr is a data visualization and analytics platform that allows users to query, visualize, and share data in one place. It helps in tracking key metrics, making data-driven decisions, and breaking down data silos to provide a unified view of data from various sources. Users can create charts and dashboards, connect to different data sources like PostgreSQL and MySQL, and collaborate with teammates on SQL queries. Monitr's AI features are powered by Meta AI's Llama 3 LLM, enabling the development of powerful and flexible analytics tools for maximizing data utilization.

Merge

Merge is a leading provider of agentic tools and customer-facing integrations for frontier LLMs, Fortune 500 organizations, and B2B SaaS companies. It offers fast, secure integrations for products and agents, allowing users to connect to any third-party system with ease. Merge provides a single API with hundreds of product integrations, empowering users to sync data between their products and third-party platforms efficiently. The platform ensures enterprise-grade security, compliance, real-time monitoring, and data controls, making integrations seamless and secure.

Surfsite

Surfsite is an AI application designed for SaaS professionals to streamline workflows, make data-driven decisions, and enhance productivity. It offers AI assistants that connect to essential tools, provide real-time insights, and assist in various tasks such as market research, project management, and analytics. Surfsite aims to centralize data, improve decision-making, and optimize processes for product managers, growth marketers, and founders. The application leverages advanced LLMs and integrates seamlessly with popular tools like Google Docs, Jira, and Trello to offer a comprehensive AI-powered solution.

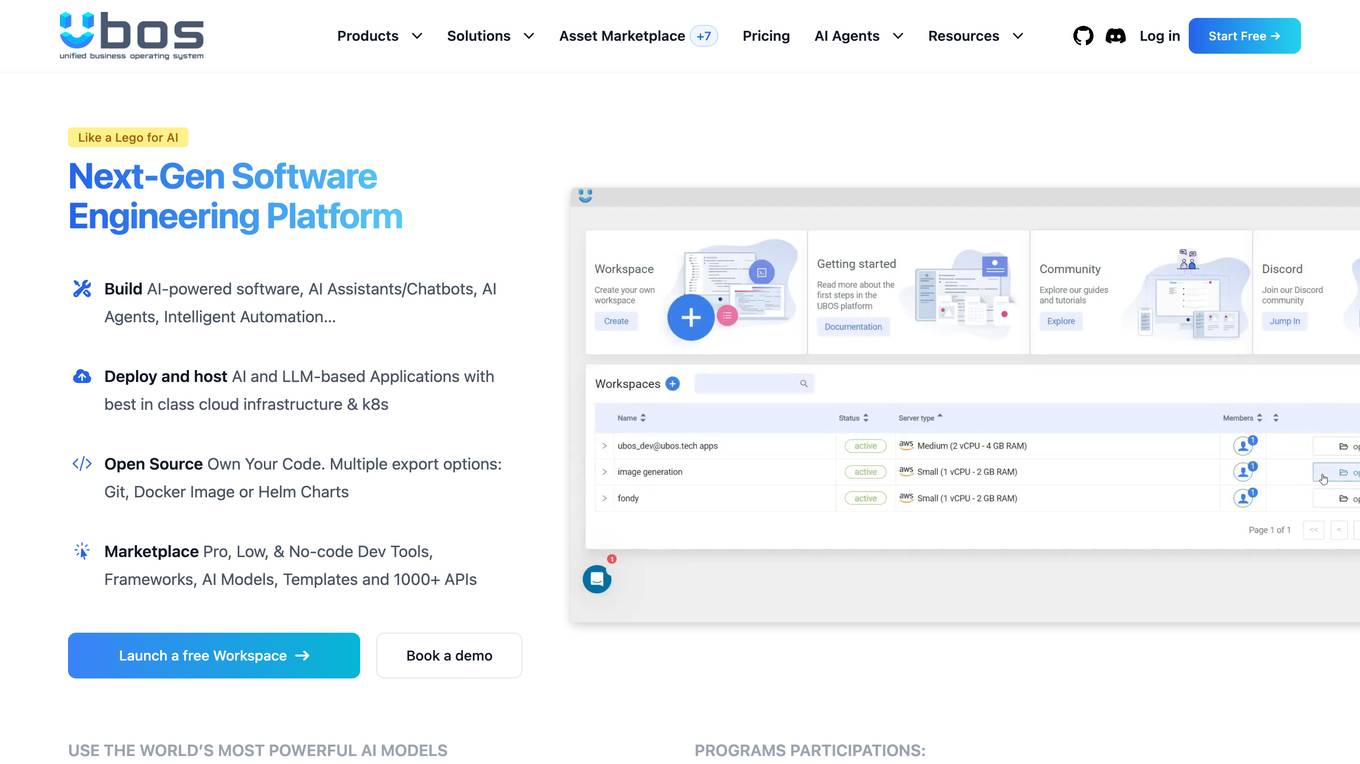

UBOS

UBOS is an engineering platform for Software 3.0 and AI Agents, offering a comprehensive suite of tools for building enterprise-ready internal development platforms, web applications, and intelligent workflows. It enables users to connect to over 1000 APIs, automate workflows with AI, and access a marketplace with templates and AI models. UBOS empowers startups, small and medium businesses, and large enterprises to drive growth, efficiency, and innovation through advanced ML orchestration and Generative AI custom integration. The platform provides a user-friendly interface for creating AI-native applications, leveraging Generative AI, Node-RED SCADA, Edge AI, and IoT technologies. With a focus on open-source development, UBOS offers full code ownership, flexible exports, and seamless integration with leading LLMs like ChatGPT and Llama 2 from Meta.

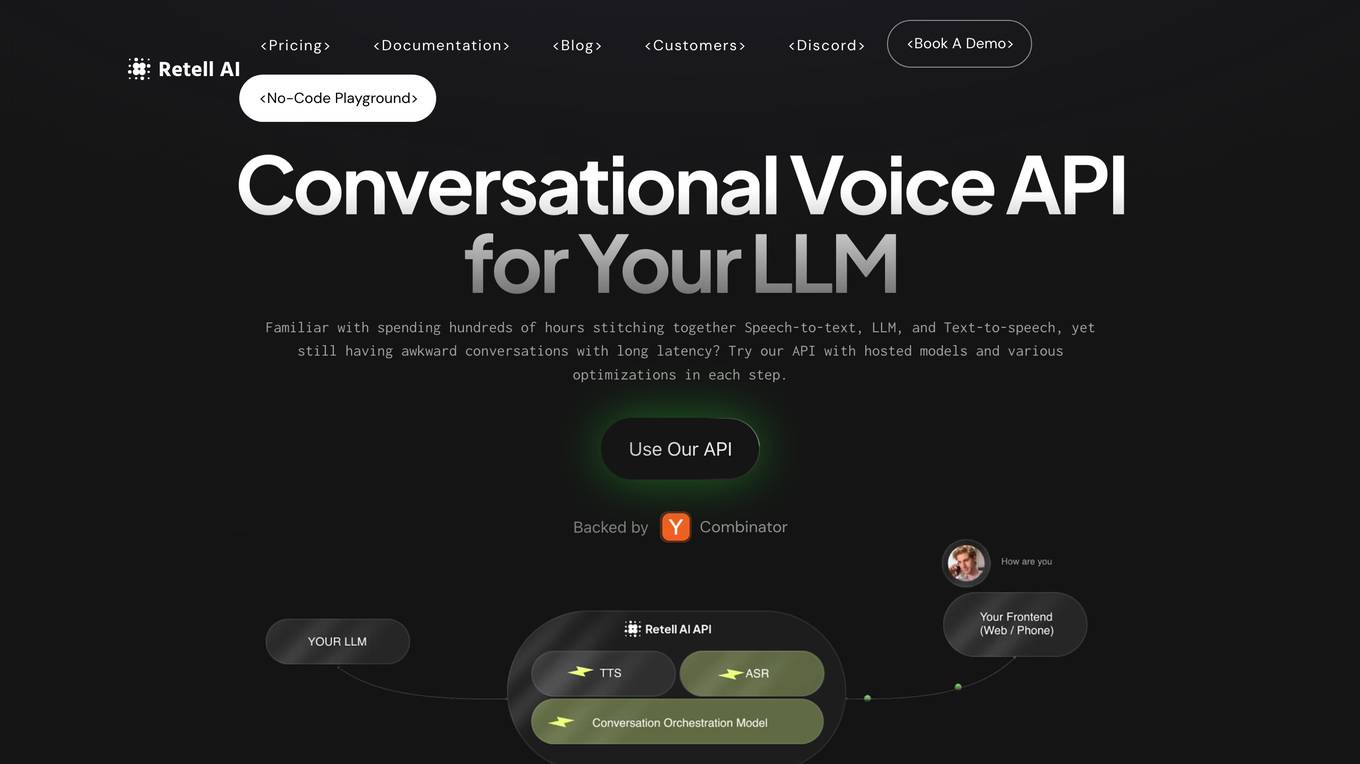

Retell AI

Retell AI provides a Conversational Voice API that enables developers to integrate human-like voice interactions into their applications. With Retell AI's API, developers can easily connect their own Large Language Models (LLMs) to create AI-powered voice agents that can engage in natural and engaging conversations. Retell AI's API offers a range of features, including ultra-low latency, realistic voices with emotions, interruption handling, and end-of-turn detection, ensuring seamless and lifelike conversations. Developers can also customize various aspects of the conversation experience, such as voice stability, backchanneling, and custom voice cloning, to tailor the AI agent to their specific needs. Retell AI's API is designed to be easy to integrate with existing LLMs and frontend applications, making it accessible to developers of all levels.

Ragie

Ragie is a fully managed RAG-as-a-Service platform designed for developers. It offers easy-to-use APIs and SDKs to help developers get started quickly, with advanced features like LLM re-ranking, summary index, entity extraction, flexible filtering, and hybrid semantic and keyword search. Ragie allows users to connect directly to popular data sources like Google Drive, Notion, Confluence, and more, ensuring accurate and reliable information delivery. The platform is led by Craft Ventures and offers seamless data connectivity through connectors. Ragie simplifies the process of data ingestion, chunking, indexing, and retrieval, making it a valuable tool for AI applications.

Upstage

Upstage is an AI application designed for global talent acquisition in the field of artificial intelligence startups. The platform offers a range of job opportunities in AI research, engineering, business, education, and software engineering. Upstage aims to connect talented individuals with leading AI companies, providing a seamless recruitment process for both employers and job seekers.

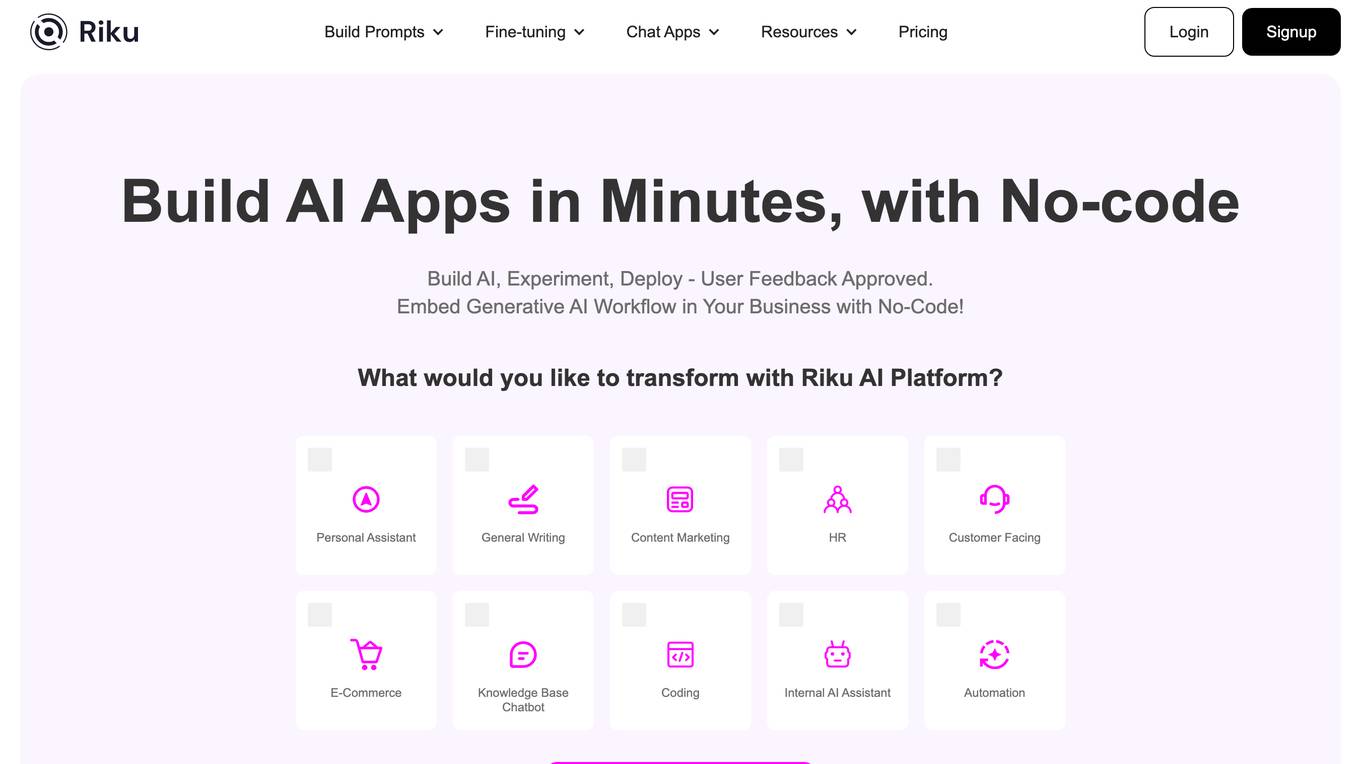

Riku

Riku is a no-code platform that allows users to build and deploy powerful generative AI for their business. With access to over 40 industry-leading LLMs, users can easily test different prompts to find just the right one for their needs. Riku's platform also allows users to connect siloed data sources and systems together to feed into powerful AI applications. This makes it easy for businesses to automate repetitive tasks, test ideas rapidly, and get answers in real-time.

Symanto

Symanto is a global leader in Human AI, specializing in human language understanding and generation. The company's proprietary platform integrates with common LLMs and works across industries and languages. Symanto's technology enables computers to connect with people like friends, fostering trust-filled interactions full of emotion, empathy, and understanding. The company's clients include automotive, consulting, healthcare, consumer, sports, and other industries.

Exa

Exa is a search engine that uses embeddings-based search to retrieve the best content on the web. It is trusted by companies and developers from all over the world. Exa is like Google, but it is better at understanding the meaning of your queries and returning results that are more relevant to your needs. Exa can be used for a variety of tasks, including finding information on the web, conducting research, and building AI applications.
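As a rough illustration of what embeddings-based search means, the sketch below ranks documents by cosine similarity between a query vector and document vectors. It does not use Exa's API. The three-dimensional vectors are hand-made stand-ins for real embeddings, and the `cosine` and `search` functions are hypothetical names for this example.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, docs, k=2):
    """Return the titles of the k documents most similar to the query."""
    ranked = sorted(
        docs.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True
    )
    return [title for title, _ in ranked[:k]]


# Toy "embeddings": in practice a model produces these, with far more dimensions.
DOCS = {
    "intro to vector databases": [0.9, 0.1, 0.0],
    "sourdough baking tips": [0.0, 0.2, 0.9],
    "semantic search with embeddings": [0.8, 0.3, 0.1],
}

# A query vector pointing in roughly the same direction as the two search docs.
print(search([1.0, 0.2, 0.0], DOCS))
```

Because ranking depends on vector direction and not on shared keywords, a query can match a document that uses entirely different wording, which is the behavior the description above attributes to meaning-based search.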

LLMStack

LLMStack is an open-source platform that allows users to build AI Agents, workflows, and applications using their own data. It is a no-code AI app builder that supports model chaining from major providers like OpenAI, Cohere, Stability AI, and Hugging Face. Users can import various data sources such as Web URLs, PDFs, audio files, and more to enhance generative AI applications and chatbots. With a focus on collaboration, LLMStack enables users to share apps publicly or restrict access, with viewer and collaborator roles for multiple users to work together. Powered by React, LLMStack provides an easy-to-use interface for building AI applications.

Grit Brokerage

Grit Brokerage is a domain and website brokerage platform that facilitates the buying and selling of domains. The platform allows users to inquire about domain prices, submit offers, and connect with domain brokers. With a focus on domain transactions, Grit Brokerage provides a seamless experience for individuals and businesses looking to acquire or sell domain names.

Magic Loops

Magic Loops is an AI tool that allows users to create automated workflows using ChatGPT automations. Users can connect data, send emails, receive texts, scrape websites, and more. The tool enables users to automate various tasks by creating personalized loops that respond to specific triggers and inputs.

1 - Open Source AI Tools

dialog

Dialog is an API-focused tool designed to simplify the deployment of Large Language Models (LLMs) for programmers interested in AI. It allows users to deploy any LLM based on the structure provided by dialog-lib, enabling them to spend less time coding and more time training their models. The tool aims to humanize Retrieval-Augmented Generative Models (RAGs) and offers features for better RAG deployment and maintenance. Dialog requires a knowledge base in CSV format and a prompt configuration in TOML format to function effectively. It provides functionalities for loading data into the database, processing conversations, and connecting to the LLM, with options to customize prompts and parameters. The tool also requires specific environment variables for setup and configuration.

20 - OpenAI GPTs

Idea To Code GPT

Generates a full & complete Python codebase, after clarifying questions, by following a structured section pattern.

Flask Expert Assistant

This GPT is a specialized assistant for Flask, the popular web framework in Python. It is designed to help both beginners and experienced developers with Flask-related queries, ranging from basic setup and routing to advanced features like database integration and application scaling.

Django Helper

Helps web programmers learn Django best practices and use smart defaults. Get things done really fast!

Power Query Assistant

Expert in Power Query and DAX for Power BI, offering in-depth guidance and insights.

T3 Stack Development Assistant (T3Stack開発アシスタント)

Supports development with the T3 Stack: Next.js, TypeScript, Prisma, tRPC, Tailwind CSS, NextAuth.js, and more.

Power Platform Helper

Trained on learn.microsoft.com content including Azure Functions, Logic Apps, DAX, Dynamics365, Microsoft 365, Compliance, ODATA, Power Agents, Apps, Automate, BI, Pages, Query, Power Platform Administration, Developer, Guidance

PowerBI GPT

A PowerBI Expert assisting with debugging, dashboard ideas, and PowerBI service guidance.

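Java Development Engineer (JAVA开发工程师)

Proficient in Java and its frameworks, especially backend API development with Spring Boot and Spring Cloud, and end-to-end CRUD code covering entity classes and MySQL. Resolves Java errors of all kinds, with the skills of a senior Java engineer.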

Drunk Santa

I'm Drunk Santa, ready to boost your ideas and connect you to psyborg® to bring them to life.

CheerLights IoT Expert

Chat with an expert on the CheerLights IoT project. Learn how to use its API and write code to connect your project.