Best AI tools for "Configure Model Provider"

20 - AI Tool Sites

Motific.ai

Motific.ai is a responsible GenAI tool powered by data at scale. It offers a fully managed service with natural-language compliance and security guardrails, an intelligence service, and an end-to-end retrieval-augmented generation (RAG) service powered by enterprise data. Users can rapidly deliver trustworthy GenAI assistants and API endpoints, configure assistants with their organization's data, optimize performance, and connect to leading GenAI model providers. Motific.ai lets users create custom knowledge bases, connect to various data sources, and ensure responsible AI practices. It supports English only and offers insights on usage, time savings, and model optimization.

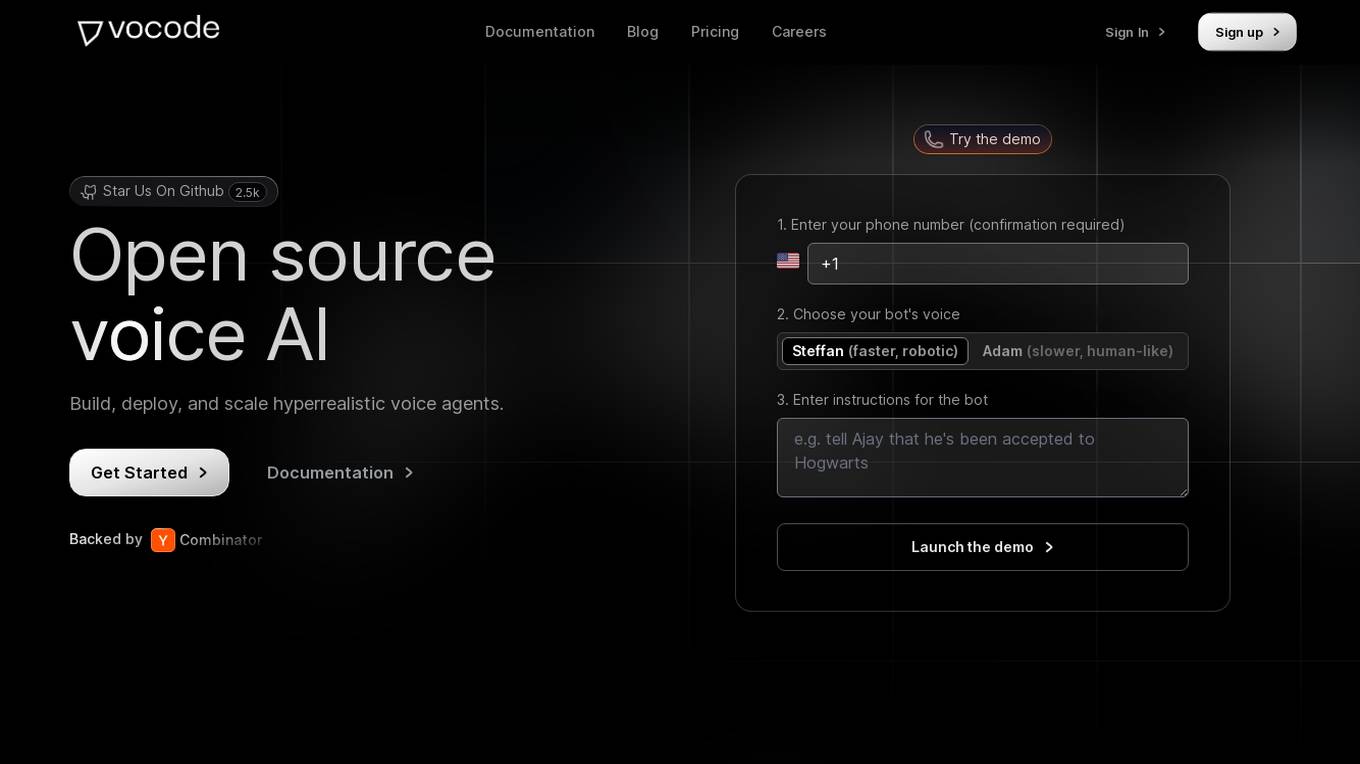

Vocode

Vocode is an open-source voice AI platform that enables users to build, deploy, and scale hyperrealistic voice agents. It offers fully programmable voice bots that can be integrated into workflows without the need for human intervention. With multilingual capability, custom language models, and the ability to connect to knowledge bases, Vocode provides a comprehensive solution for automating actions like scheduling, payments, and more. The platform also offers analytics and monitoring features to track bot performance and customer interactions, making it a valuable tool for businesses looking to enhance customer support and engagement.

Turing AI

Turing AI is a cloud-based video security system powered by artificial intelligence. It offers a range of AI-powered video surveillance products and solutions to enhance safety, security, and operations. The platform provides smart video search capabilities, real-time alerts, instant video sharing, and hardware offerings compatible with various cameras. With flexible licensing options and integration with third-party devices, Turing AI is trusted by customers across industries for its robust and innovative approach to cloud video security.

Lambda Docs

Lambda Docs is an AI tool that provides cloud and hardware solutions for individuals, teams, and organizations. It offers services such as Managed Kubernetes, Preinstalled Kubernetes, Slurm, and access to GPU clusters. The platform also provides educational resources and tutorials for machine learning engineers and researchers to fine-tune models and deploy AI solutions.

VeroCloud

VeroCloud is a platform offering tailored solutions for AI, HPC, and scalable growth. It provides cost-effective cloud solutions with guaranteed uptime, performance efficiency, and cost-saving models. Users can deploy HPC workloads seamlessly, configure environments as needed, and access optimized environments for GPU Cloud, HPC Compute, and Tally on Cloud. VeroCloud supports globally distributed endpoints, public and private image repositories, and container deployment on a secure cloud. The platform also allows users to create and customize templates for seamless deployment across computing resources.

SiteRetriever

SiteRetriever is a self-hosted AI chatbot platform that enables users to build and deploy chatbots on their websites without monthly fees. As a fully self-contained, self-hosted solution, it gives users full control over their data and costs. With easy embedding, complete control over customization, fast responses, and AI powered by advanced language models, SiteRetriever simplifies creating and managing chatbots. Users can upload content, configure bot settings, and embed the chatbot on their site within minutes. Access is offered through a waitlist.

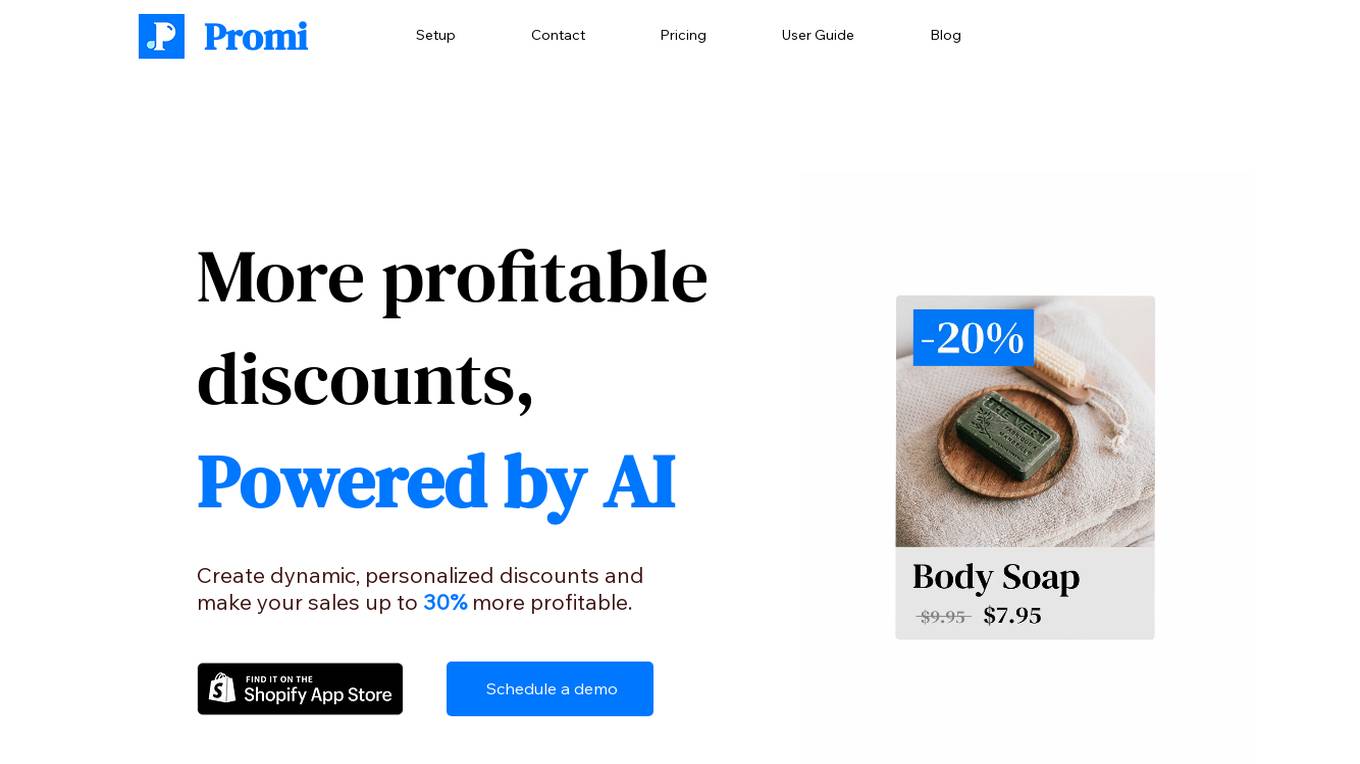

Promi

Promi is an AI-powered application that helps businesses create dynamic and personalized discounts to increase sales profitability by up to 30%. It offers powerful personalization features, dynamic clearance sales, product-level optimization, configurable price updates, and deep links for marketing. Promi leverages AI models to vary discount values based on user purchase intent, ensuring efficient product selling. The application provides seamless integration with major apps like Klaviyo and offers easy installation for both basic and advanced features.

BluePond GenAI PaaS

BluePond GenAI PaaS is an automation and insights powerhouse tailored for Property and Casualty Insurance. It offers end-to-end execution support from GenAI data scientists, engineers & human-in-the-loop processing. The platform provides automated intake extraction, classification enrichment, validation, complex document analysis, workflow automation, and decisioning. Users benefit from rapid deployment, complete control of data & IP, and pre-trained P&C domain library. BluePond GenAI PaaS aims to energize and expedite GenAI initiatives throughout the insurance value chain.

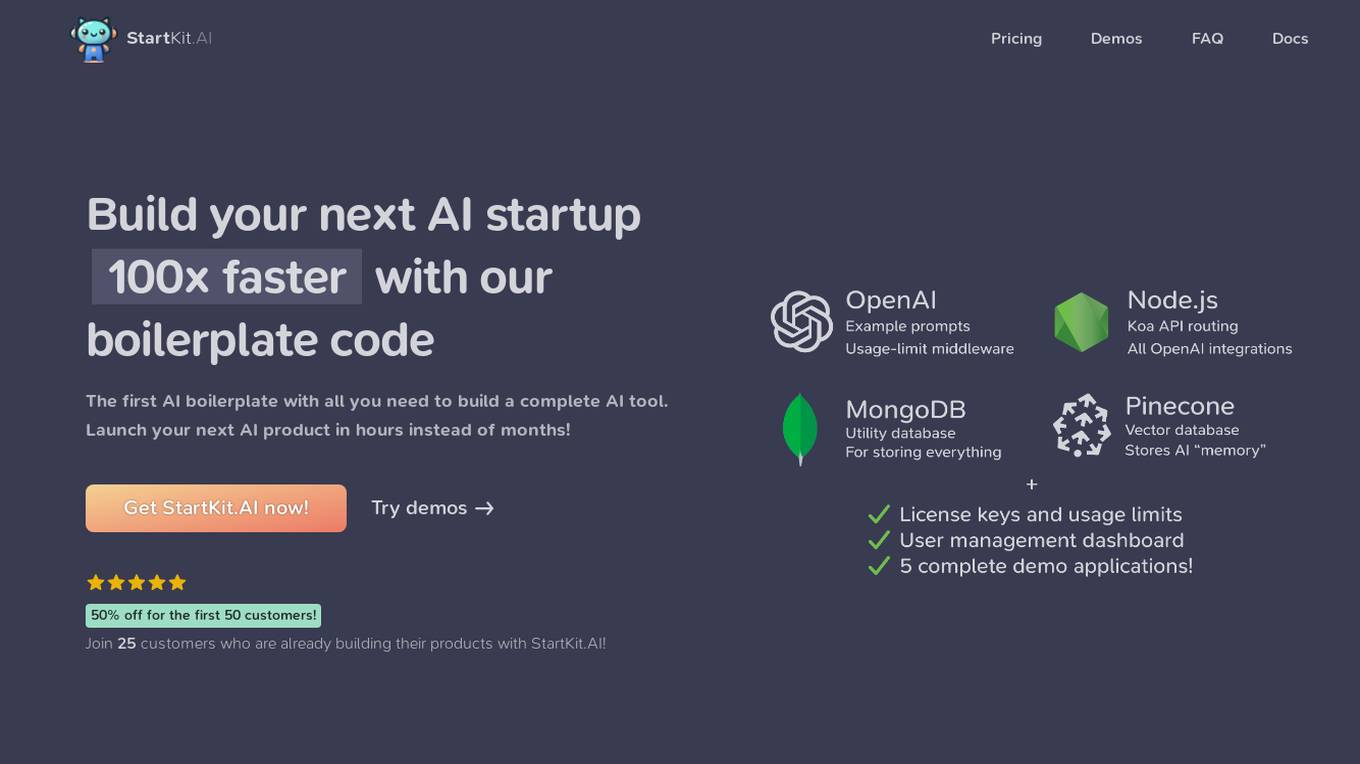

StartKit.AI

StartKit.AI is a boilerplate codebase for AI products that aims to help users build their AI startups 100x faster. It includes pre-built REST API routes for common AI functionality, a pre-configured Pinecone vector database for text embeddings and Retrieval-Augmented Generation (RAG) in chat endpoints, and five React demo apps to help users get started quickly. StartKit.AI also provides license-key and magic-link authentication, user and API limit management, and full documentation for all its code. Users also get setup guides and one year of updates.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

SD3 Medium

SD3 Medium is an advanced text-to-image model developed by Stability AI. It offers a cutting-edge approach to generating high-quality, photorealistic images based on textual prompts. The model is equipped with 2 billion parameters, ensuring exceptional quality and resource efficiency. SD3 Medium is currently in a research preview phase, primarily catering to educational and creative purposes. Users can access the model through various licensing options and explore its capabilities via the Stability Platform.

Cloobot X

Cloobot X is a Gen-AI-powered implementation studio that accelerates the deployment of enterprise applications with fewer resources. It leverages natural language processing to model workflow automation, deliver sandbox previews, configure workflows, extend functionalities, and manage versioning & changes. The platform aims to streamline enterprise application deployments, making them simple, swift, and efficient for all stakeholders.

Quivr

Quivr is an open-source, chat-powered "second brain" application that turns private and enterprise knowledge into a personal AI assistant. It continuously learns and improves with every interaction, offering AI-powered workplace search synced with user data. Quivr lets users connect their favorite tools, databases, and applications, and configure their second brain to train on their company's unique context for better search relevance and knowledge discovery.

Credal

Credal is an AI tool that allows users to build secure AI assistants for enterprise operations. It enables every employee to create customized AI assistants with built-in security, permissions, and compliance features. Credal supports data integration, access control, search functionalities, and API development. The platform offers real-time sync, automatic permissions synchronization, and AI model deployment with security and compliance measures. It helps enterprises manage ETL pipelines, schedule tasks, and configure data processing. Credal ensures data protection, compliance with regulations like HIPAA, and comprehensive audit capabilities for generative AI applications.

BOMML Smart AI Assistant

BOMML offers a Smart AI Assistant for tasks such as searching the web, writing articles, and answering questions. The assistant is easy to use and can be integrated into applications through a simple API or a web interface. BOMML also offers AI APIs for adding AI capabilities to applications; these APIs are fast, secure, and easy to use. BOMML's AI models are trained on diverse data and support tasks including text generation, conversational chat, embeddings, controlling, analysis, optical character recognition (OCR), and more.

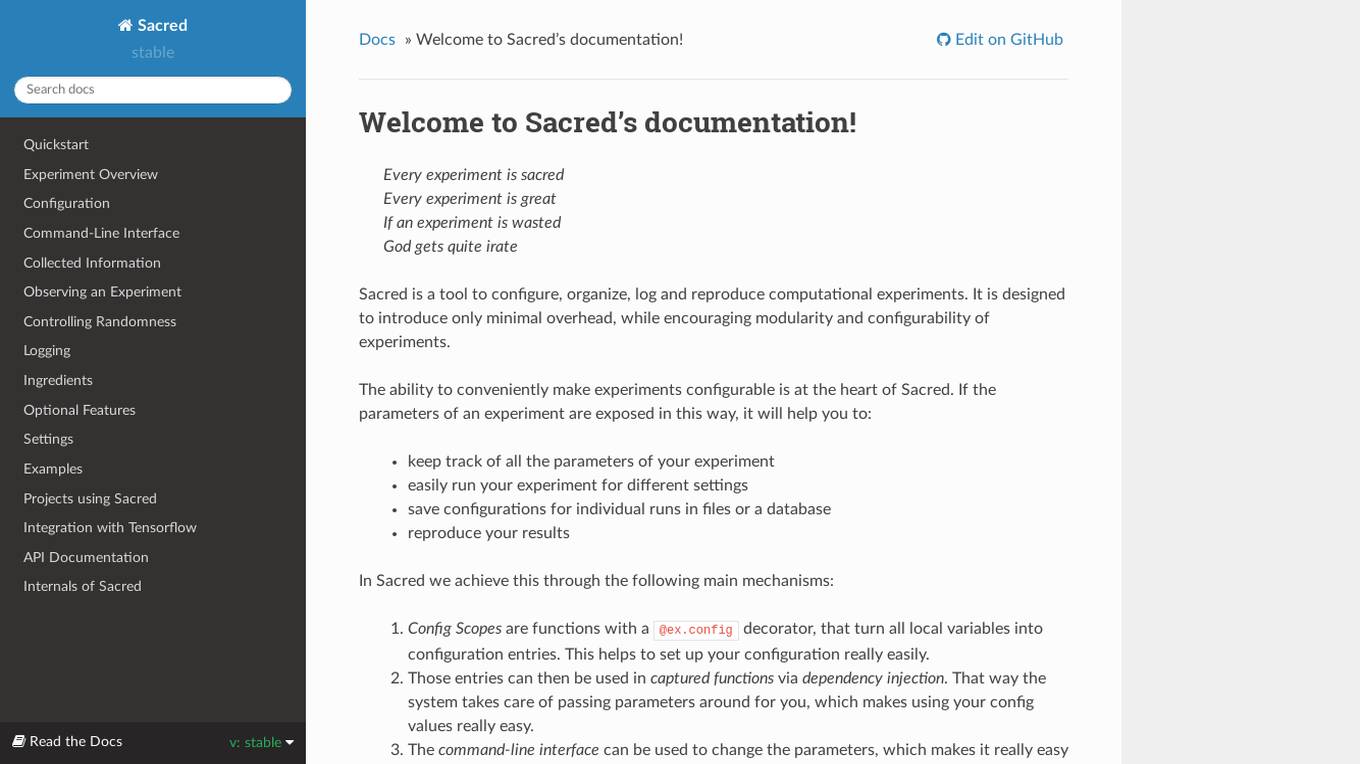

Sacred

Sacred is a tool to configure, organize, log, and reproduce computational experiments. It is designed to introduce only minimal overhead while encouraging modularity and configurability of experiments. The ability to conveniently make experiments configurable is at the heart of Sacred. If the parameters of an experiment are exposed in this way, it will help you to:

- keep track of all the parameters of your experiment
- easily run your experiment for different settings
- save configurations for individual runs in files or a database
- reproduce your results

In Sacred, this is achieved through the following main mechanisms:

- Config Scopes are functions with a @ex.config decorator that turn all local variables into configuration entries. This makes setting up your configuration straightforward.
- Captured functions receive those entries via dependency injection. The system takes care of passing parameters around for you, which makes using your config values easy.
- The command-line interface can be used to change the parameters, which makes it easy to run your experiment with modified parameters.
- Observers log all information about your experiment and the configuration you used, and save it, for example, to a database. This helps you keep track of all your experiments.
- Automatic seeding helps control the randomness in your experiments so that they stay reproducible.

FinetuneFast

FinetuneFast is an AI tool designed to help developers, indie makers, and businesses to efficiently finetune machine learning models, process data, and deploy AI solutions at lightning speed. With pre-configured training scripts, efficient data loading pipelines, and one-click model deployment, FinetuneFast streamlines the process of building and deploying AI models, saving users valuable time and effort. The tool is user-friendly, accessible for ML beginners, and offers lifetime updates for continuous improvement.

Gardian

Gardian is an AI tool designed to streamline content analysis processes by leveraging advanced AI technology. It allows users to create custom AI Agents with specific labels to detect and manage content that violates company policies. Gardian offers pre-configured models, custom analysis labels, a simple API for integration, multilanguage support, transparent pricing, and privacy protection. It serves various use cases such as content moderation, live chat moderation, and customer sentiment analysis, providing valuable insights and enhancing user experience.

FormX.ai

FormX.ai is an AI-powered data extraction and conversion tool that automates the process of extracting data from physical documents and converting it into digital formats. It supports a wide range of document types, including invoices, receipts, purchase orders, bank statements, contracts, HR forms, shipping orders, loyalty member applications, annual reports, business certificates, personnel licenses, and more. FormX.ai's pre-configured data extraction models and effortless API integration make it easy for businesses to integrate data extraction into their existing systems and workflows. With FormX.ai, businesses can save time and money on manual data entry and improve the accuracy and efficiency of their data processing.

Release.ai

Release.ai is an AI-centric platform that allows developers, operations, and leadership teams to easily deploy and manage AI applications. It offers pre-configured templates for popular open-source technologies, private AI environments for secure development, and access to GPU resources. With Release.ai, users can build, test, and scale AI solutions quickly and efficiently within their own boundaries.

2 - Open Source AI Tools

notebook-intelligence

Notebook Intelligence (NBI) is an AI coding assistant and extensible AI framework for JupyterLab. It boosts the productivity of JupyterLab users by providing code generation with inline chat, auto-complete, and a chat interface. NBI supports various LLM providers and AI models, including local models from Ollama. Users can configure the model provider and model options, have NBI remember their GitHub Copilot login, and save configuration files. NBI integrates with Model Context Protocol (MCP) servers, supporting both Standard Input/Output (stdio) and Server-Sent Events (SSE) transports. Users can add MCP servers to NBI, auto-approve tools, set environment variables, and group servers by functionality. NBI also provides access to built-in tools from an MCP participant.

samples

Strands Agents Samples is a repository showcasing easy-to-use examples for building AI agents using a model-driven approach. The examples provided are for demonstration and educational purposes only, not intended for direct production use. Users can explore various samples to understand concepts and techniques, ensuring proper security and testing procedures before implementation.

20 - OpenAI GPTs

Calendar and email Assistant

Your expert assistant for Google Calendar and Gmail tasks, integrated with Zapier (works with the free plan). Supports listing, adding, and updating calendar events, and sending Gmail messages. You will be prompted to configure Zapier actions during initial setup. Conversation data is not used for OpenAI training.

Salesforce Sidekick

Personal assistant for Salesforce configuration, coding, troubleshooting, solutioning, proposal writing, and more. This is not an official Salesforce product or service.

Istio Advisor Plus

Rich in Istio knowledge, with a focus on configurations, troubleshooting, and bug reporting.

FlashSystem Expert

Expert on IBM FlashSystem, offering 'How-To' guidance and technical insights.

CUDA GPT

Expert in CUDA for configuration, installation, troubleshooting, and programming.

SIP Expert

A senior VoIP engineer with expertise in SIP, RTP, IMS, and WebRTC. Kinda employed at sipfront.com, your telco test automation company.

Gradle Expert

Your expert in Gradle build configuration, offering clear, practical advice.