Best AI Tools for Check Format

20 - AI Tool Sites

Bibit AI

Bibit AI is a real estate marketing AI designed to make real estate marketing and sales more efficient and effective. It can help create listings, property descriptions, and other property content, among other features. Bibit AI describes itself as the world's first AI for real estate, aimed at boosting efficiency and simplifying tasks such as listing creation and content generation.

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

Write Breeze

Write Breeze is an AI writing assistant that offers a suite of over 20 smart tools to enhance your writing experience. From grammar and style suggestions to content optimization, Write Breeze helps users create polished and engaging content effortlessly. Whether you're a student, professional writer, or content creator, Write Breeze is designed to streamline your writing process and elevate the quality of your work.

ProRes.ai

ProRes.ai is an AI-enhanced resume building tool that helps users create tailored, ATS-friendly resumes and cover letters quickly and effortlessly. By leveraging artificial intelligence, ProRes streamlines the process of generating high-quality application documents, saving time and increasing the chances of landing job interviews. The tool offers lifetime access with a one-time payment, ensuring users have unlimited use of AI content generation tools to create, download, and modify resumes and cover letters.

AI QA Monkey

AI QA Monkey is a free website security scanner that runs instant security audits and reports a website security score. It scans for issues such as leaked sensitive data, open ports, exposed passwords and .env files, and WordPress vulnerabilities. It produces a detailed security report with actionable insights and AI-powered fixes, which users can export in PDF, JSON, or CSV format. AI QA Monkey is designed to help businesses improve their security posture and comply with the GDPR.

Duplichecker

Duplichecker is an AI-based plagiarism checker that detects plagiarism in text content. It supports multiple file formats and languages, offers a privacy guarantee, provides writing enhancements and fast, deep scanning, and highlights duplicated passages. Its AI is designed to detect even minor traces of plagiarism and to identify paraphrased content. It is widely used by writers, teachers, students, bloggers, and webmasters to ensure content originality and avoid copyright infringement.

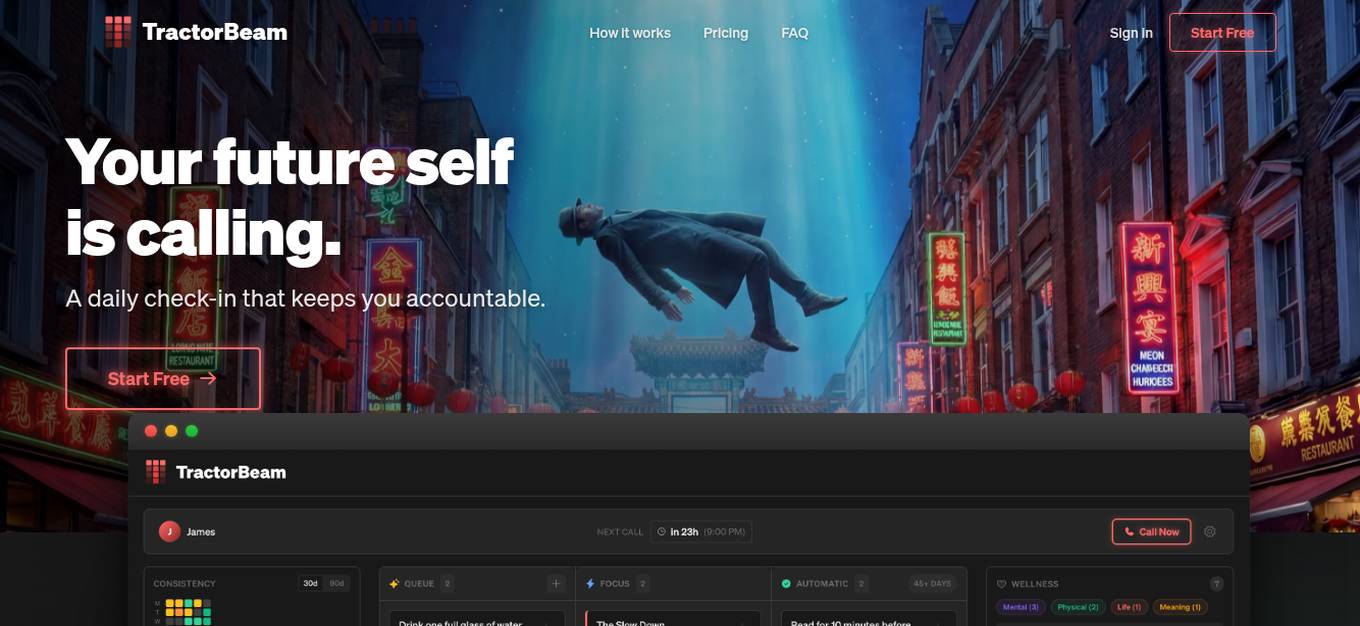

TractorBeam

TractorBeam is an AI accountability partner application that helps users build new habits and achieve their goals through daily check-ins and support. The application features voice AI partners who call users daily, automatically track progress in a live dashboard, and provide text support whenever needed. TractorBeam is designed to provide emotional support, help users get unstuck, and build momentum towards habit formation. The application is based on 45 years of habit research and aims to make habit-building automatic through consistent practice. TractorBeam offers different subscription plans with varying levels of features and support to cater to users' needs.

AI Powered Resume Checker

AI Powered Resume Checker is an AI-driven resume review tool that helps job seekers create impactful resumes that stand out in the job market. With detailed analysis, tailored suggestions, keyword optimization, and formatting tips, the tool empowers users to elevate their resumes and increase their chances of getting noticed by potential employers. The resume review is crafted with insights from industry experts, career coaches, and hiring managers, providing valuable guidance to job seekers.

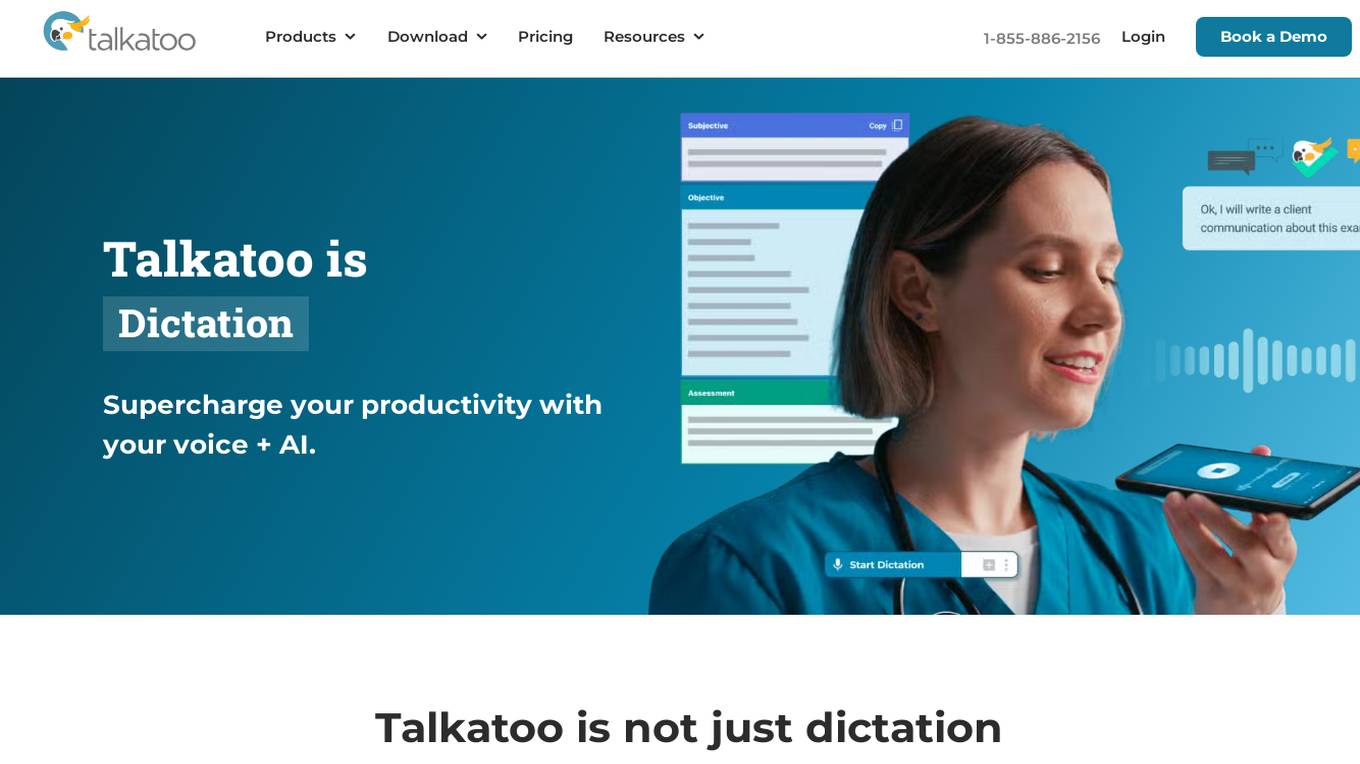

Talkatoo

Talkatoo is AI-powered dictation software that helps veterinarians save time and increase productivity. It offers three levels of control, so users can choose how hands-off they want to be. With Verified, users record their notes and Talkatoo's scribes verify the accuracy and place the notes in the practice management system (PMS). With Auto-SOAP Records, users can record an entire exam, or dictate notes afterward, and have Talkatoo automatically format the recording into a SOAP note or another template. With Desktop Dictation, users can dictate into any field in any app on Mac or Windows, and can connect a mobile device as a secure microphone to make the process easier.

Plag

Plag is an AI-powered platform focused on academic integrity and study support. It offers students, educators, universities, and businesses tools for plagiarism detection, plagiarism removal, text formatting, and proofreading. The platform uses multilingual AI to help users improve their academic work and ensure its originality.

AlphaDrafts

AlphaDrafts is an AI-powered writing platform that provides advanced analysis, real-time feedback, and intelligent suggestions to improve writing skills. Aimed at students, researchers, and professional writers, it offers a complete writing workspace with AI-powered analysis, real-time suggestions, citation management, grammar checking, and style and tone suggestions, helping users improve the grammar, structure, and overall quality of their content.

Numberly

Numberly is a free online math assistant that helps you solve equations, perform conversions, and check your calculations as you type. It integrates with your favorite websites and apps, so you can use it anywhere you need to do math. Numberly is perfect for students, professionals, and anyone who wants to make math easier.

Resumecheck.net

Resumecheck.net is an AI-powered resume improvement platform that helps users create error-free, professional resumes that stand out to recruiters. The platform uses GPT-4 to provide personalized feedback and suggestions, including grammar corrections, formatting adjustments, and industry-specific keyword optimization. Resumecheck.net also offers an AI Cover Letter Writer that generates cover letters tailored to the user's resume and the specific job they are applying for.

ExpiredDomains.com

ExpiredDomains.com is a free online platform that helps users find valuable expired and expiring domain names. It aggregates domain data from hundreds of top-level domains (TLDs) and displays it in a searchable, filterable format. Whether you're seeking domains with SEO authority, existing traffic, or strong brand potential, the platform provides tools and insights to support smarter decisions. It lists over 1 million domains across 677+ TLDs, with exclusive data metrics and a user-friendly interface, and is trusted by professionals. ExpiredDomains.com does not register domains itself; it connects users to trusted external registrars and marketplaces, such as GoDaddy, for domain purchases.

MyDetector

MyDetector is a free AI detector designed to identify content generated by models such as ChatGPT, GPT-4, and Gemini. It analyzes text to identify patterns typical of AI-generated content. The tool helps writers, teachers, and businesses verify the authenticity of the content they use. MyDetector offers high-precision detection, multi-format support, real-time results, and content comparison, making it a versatile platform for both AI detection and humanization.

Rezi

Rezi is the leading AI resume builder trusted by over 2 million users. It automates the process of creating a professional resume by utilizing artificial intelligence to write, edit, format, and optimize resumes. Rezi offers features such as AI resume editing, summary generation, keyword scanning, ATS resume checking, cover letter writing, interview practice, and resignation letter creation. The platform aims to help job seekers improve their chances of landing interviews by tailoring resumes with targeted keywords and ensuring content quality. With a user-friendly interface and a range of templates, Rezi simplifies the resume-building process and provides valuable resources for job seekers.

Enhancv

Enhancv is an AI-powered online resume builder that helps users create professional resumes and cover letters tailored to their job applications. It offers a drag-and-drop resume builder with a variety of modern templates and a free resume checker that evaluates resumes for ATS-friendliness and provides actionable suggestions. Enhancv also provides resume and CV examples written by experienced professionals and a resume tailoring feature. Users can download their resumes in PDF or TXT format and store up to 30 documents in cloud storage.

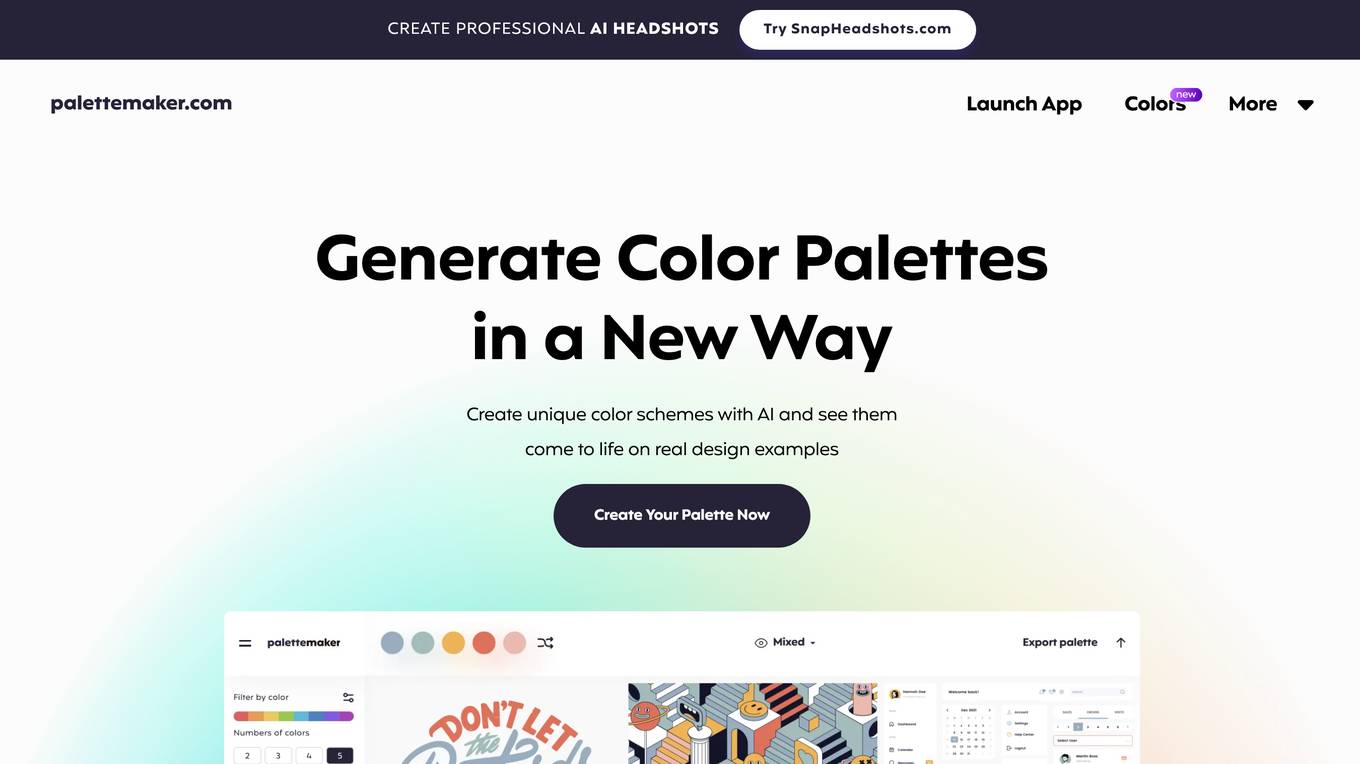

PaletteMaker

PaletteMaker is a tool for creative professionals and color lovers that lets you create color palettes and test how they behave in pre-made design examples from common creative fields such as logo design, UI/UX, patterns, and posters. Check Color Behavior shows how colors work together in a variety of graphic design situations. AI Color Palettes lets you filter palettes by color tone and number of colors. Diverse Creative Fields lets you check your colors on logos, UI designs, posters, illustrations, and more. Create Palettes On-The-Go shows results instantly as you build a palette. PaletteMaker is created by professional designers and is completely free to use, with a promise to stay free. Powerful Export lets you export palettes in various formats, such as Procreate, Adobe ASE, image, and code.

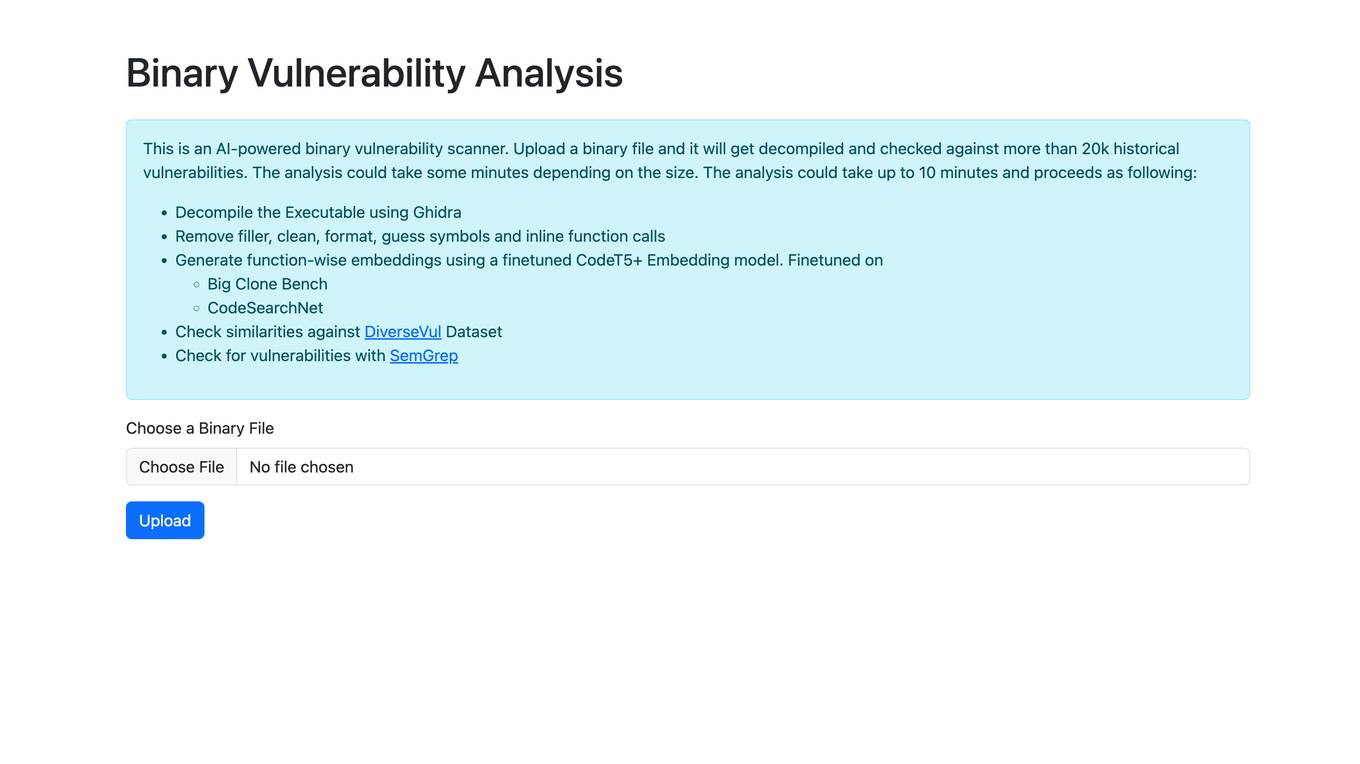

Binary Vulnerability Analysis

The website offers an AI-powered binary vulnerability scanner that lets users upload a binary file for analysis. The tool decompiles the executable, removes filler, cleans and formats the output, and checks it for historical vulnerabilities. It generates function-wise embeddings using a fine-tuned CodeT5+ embedding model and checks them for similarity against the DiverseVul dataset. The tool also uses Semgrep to check for vulnerabilities.
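The embedding-similarity step described above can be pictured as a nearest-neighbor search over vectors. This is a minimal sketch, not the tool's implementation: it assumes function embeddings have already been computed (no CodeT5+ model or DiverseVul data is loaded here), uses cosine similarity as an assumed metric, and the function names, toy vectors, and 0.9 threshold are all hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_similar_functions(
    binary_funcs: dict[str, list[float]],
    known_vulnerable: dict[str, list[float]],
    threshold: float = 0.9,  # hypothetical cut-off
) -> list[tuple[str, str, float]]:
    """For each decompiled function, find its closest known-vulnerable embedding
    and return (function, match, score) when the score reaches the threshold."""
    matches = []
    for name, emb in binary_funcs.items():
        best_id, best_score = None, -1.0
        for ref_id, ref_emb in known_vulnerable.items():
            score = cosine_similarity(emb, ref_emb)
            if score > best_score:
                best_id, best_score = ref_id, score
        if best_id is not None and best_score >= threshold:
            matches.append((name, best_id, best_score))
    # Highest-similarity matches first
    return sorted(matches, key=lambda m: m[2], reverse=True)


# Toy 3-dimensional embeddings; real model embeddings are far larger.
binary_funcs = {"parse_header": [0.9, 0.1, 0.0], "log_message": [0.0, 0.2, 0.9]}
known_vulnerable = {"vuln_memcpy_overflow": [1.0, 0.1, 0.0]}
print(flag_similar_functions(binary_funcs, known_vulnerable))
```

Here only `parse_header` is flagged, because its toy vector points in nearly the same direction as the known-vulnerable example. A production system would likely use an approximate nearest-neighbor index rather than this brute-force loop once the reference set grows large.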

GradingPal

GradingPal is an AI-powered grading tool designed for K-12 teachers in the US. It provides high-quality first-draft scores and feedback within minutes, covering all subjects, assignments, and grade levels. The tool aims to streamline the grading process, saving teachers time and providing deeper insights into student performance. GradingPal integrates ethical and responsible AI to support teachers in enhancing student outcomes while maintaining teacher control over the grading process.

0 - Open Source AI Tools

20 - OpenAI GPTs

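Core Journal Patent Paper Writing Assistant (核心期刊专利论文写作助手)

A tool that helps you write patent papers for core journals. Based on your patent information, research background, innovations, and implementation results, it generates a draft patent paper that meets formatting and technical requirements, including the title, abstract, keywords, introduction, patent description, implementation results, conclusion, and references. It can also suggest references and sample papers to help you refine and improve your patent paper.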

AI Essay Writer

ChatGPT Essay Writer helps you write essays with OpenAI, generating professional essays with plagiarism checking, formatting, cost estimation, and more.

AR 25-50, Preparing and Managing Correspondence

Answers questions about AR 25-50 and helps refine documents so they adhere to Army guidelines for formatting, style, and protocol.

Writing Metier Footnote Assistant

The Writing Metier Footnote Assistant is a specialized GPT model designed to help students efficiently create, format, and verify footnotes for their academic papers.

Complaint Assistant

Creates conversational, effective complaint letters and offers document formatting.

French Speed Typist

Type as fast as you can, or paste in poorly written text. It will then be revised into a properly structured format.

Harvard Quick Citations

This tool is only useful if you have added new sources to your reference list and need to ensure that your in-text citations reflect these updates. Paste your essay below to get started.

Czech Language: Spelling, Typography, Citations (Český jazyk - pravopis, typografie, citace)

A GPT specializing in the Czech language, its grammar, typography, and citations that follow ISO 690.

ABNT School Assignment Writing Assistant (Assistente de Elaboração de Trabalho Escolar ABNT)

A GPT-based assistant that helps create school assignments following ABNT standards.