Best AI tools for <Build Vectors>

20 - AI tool Sites

Fermat

Fermat is an AI toolmaker that allows users to build their own AI workflows and accelerate their creative process. It is used by professionals for fashion design, product design, interior design, and brainstorming. Fermat's features include the ability to blend AI models into tools that fit the way users work, embed processes in reusable tools, keep teams on the same page, and embed users' own style to get coherent results. With Fermat, users can visualize their sketches, change colors and materials, create photo shoots, turn images into vectors, and more. Fermat offers a free Starter plan for individuals and a Pro plan for teams and professionals.

Superlinked

Superlinked is a compute framework for information retrieval and feature engineering systems, focused on turning complex data into vector embeddings. Vectors power much of everyday online activity: hailing a cab, finding a funny video, getting a date, scrolling through a feed, or paying with a tap. Yet building production systems powered by vectors remains difficult. Superlinked's stated goal is to help enterprises put vectors at the center of their data and compute infrastructure, to build smarter and more reliable software.

VectorMind

VectorMind is a generative AI platform that empowers users to create stunning vector graphic assets in seconds. With its state-of-the-art AI engine, users can generate high-quality, memorable designs by simply entering text descriptions. VectorMind offers a wide range of features, including a prompt template library, discoverable graphic collections, and various download options. It is perfect for hobbyists, professionals, and small teams looking to leverage advanced AI design tools.

Singlebase

Singlebase.cloud is an AI-powered platform that serves as an alternative to Firebase and Supabase. It offers a comprehensive suite of tools and services to facilitate faster development and deployment through a unified API. The platform includes features such as Vector Database, NoSQL Database, Vector Embeddings, Generative AI, RAG, Knowledge Base, File storage, and Authentication, catering to a wide range of development needs.

Synthreo

Synthreo is a Multi-Tenant AI Automation Platform designed for Managed Service Providers (MSPs) to empower businesses by streamlining operations, reducing costs, and driving growth through intelligent AI agents. The platform offers cutting-edge AI solutions that automate routine tasks, enhance decision-making, and facilitate collaboration between human teams and digital labor. Synthreo's AI agents provide transformative advantages for businesses of all sizes, enabling operational efficiency and strategic growth.

Pinecone

Pinecone is a vector database that helps power AI for the world's best companies. It is a serverless database that lets you deliver remarkable GenAI applications faster, at up to 50x lower cost. Pinecone is easy to use and can be integrated with your favorite cloud provider, data sources, models, frameworks, and more.

Vellum AI

Vellum AI is an AI platform that supports using Microsoft Azure hosted OpenAI models. It offers tools for prompt engineering, semantic search, prompt chaining, evaluations, and monitoring. Vellum enables users to build AI systems with features like workflow automation, document analysis, fine-tuning, Q&A over documents, intent classification, summarization, vector search, chatbots, blog generation, sentiment analysis, and more. The platform is backed by top VCs and founders of well-known companies, providing a complete solution for building LLM-powered applications.

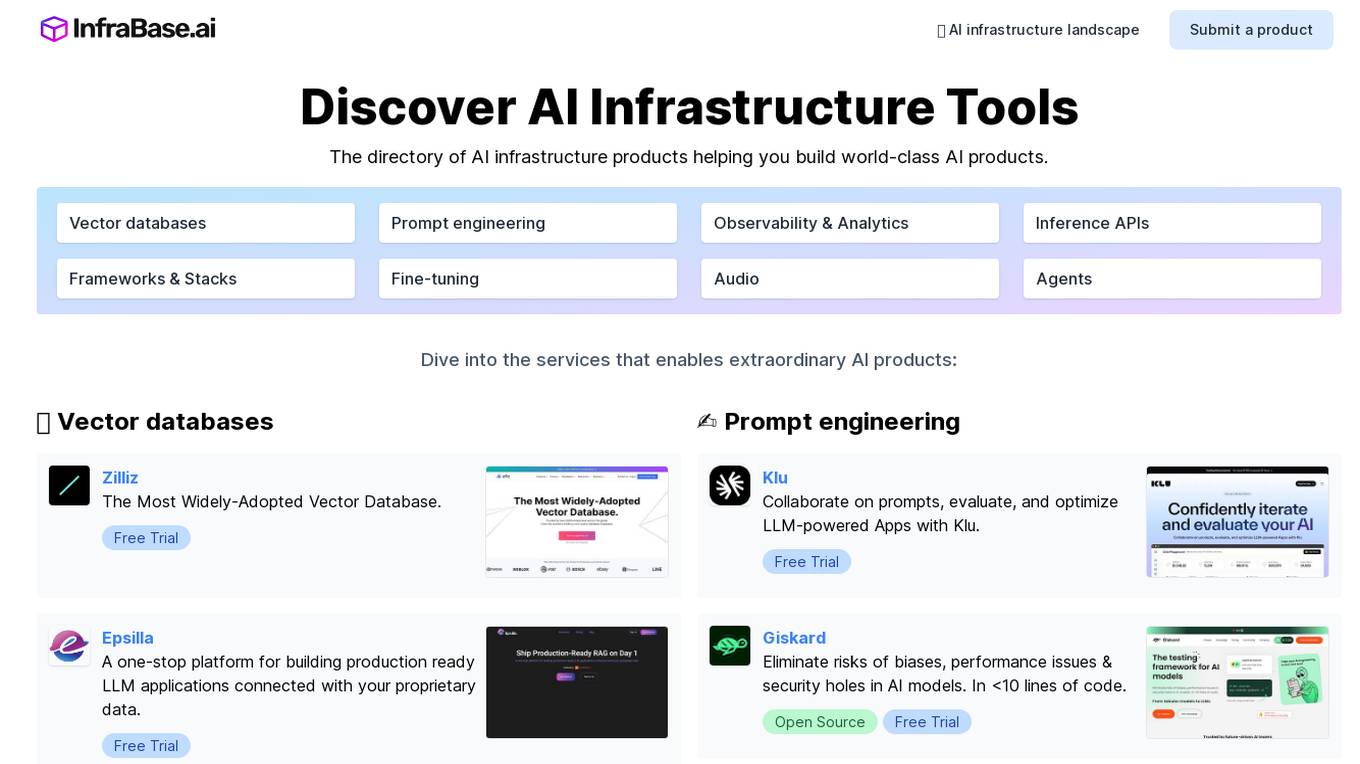

Infrabase.ai

Infrabase.ai is a directory of AI infrastructure products that helps users discover and explore a wide range of tools for building world-class AI products. The platform offers a comprehensive directory of products in categories such as Vector databases, Prompt engineering, Observability & Analytics, Inference APIs, Frameworks & Stacks, Fine-tuning, Audio, and Agents. Users can find tools for tasks like data storage, model development, performance monitoring, and more, making it a valuable resource for AI projects.

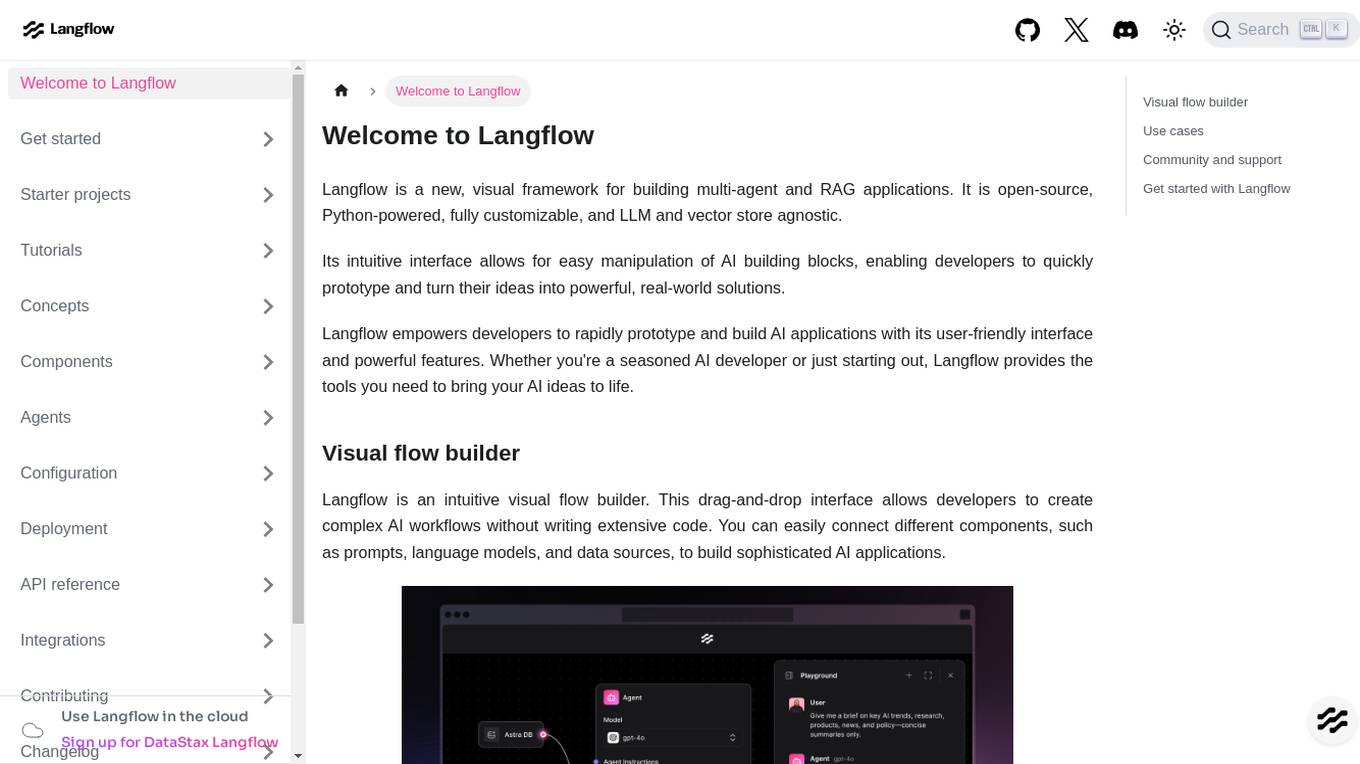

Langflow

Langflow is a new, visual framework for building multi-agent and RAG applications. It is open-source, Python-powered, fully customizable, and LLM and vector store agnostic. Langflow empowers developers to rapidly prototype and build AI applications with its user-friendly interface and powerful features. Whether you're a seasoned AI developer or just starting out, Langflow provides the tools you need to bring your AI ideas to life.

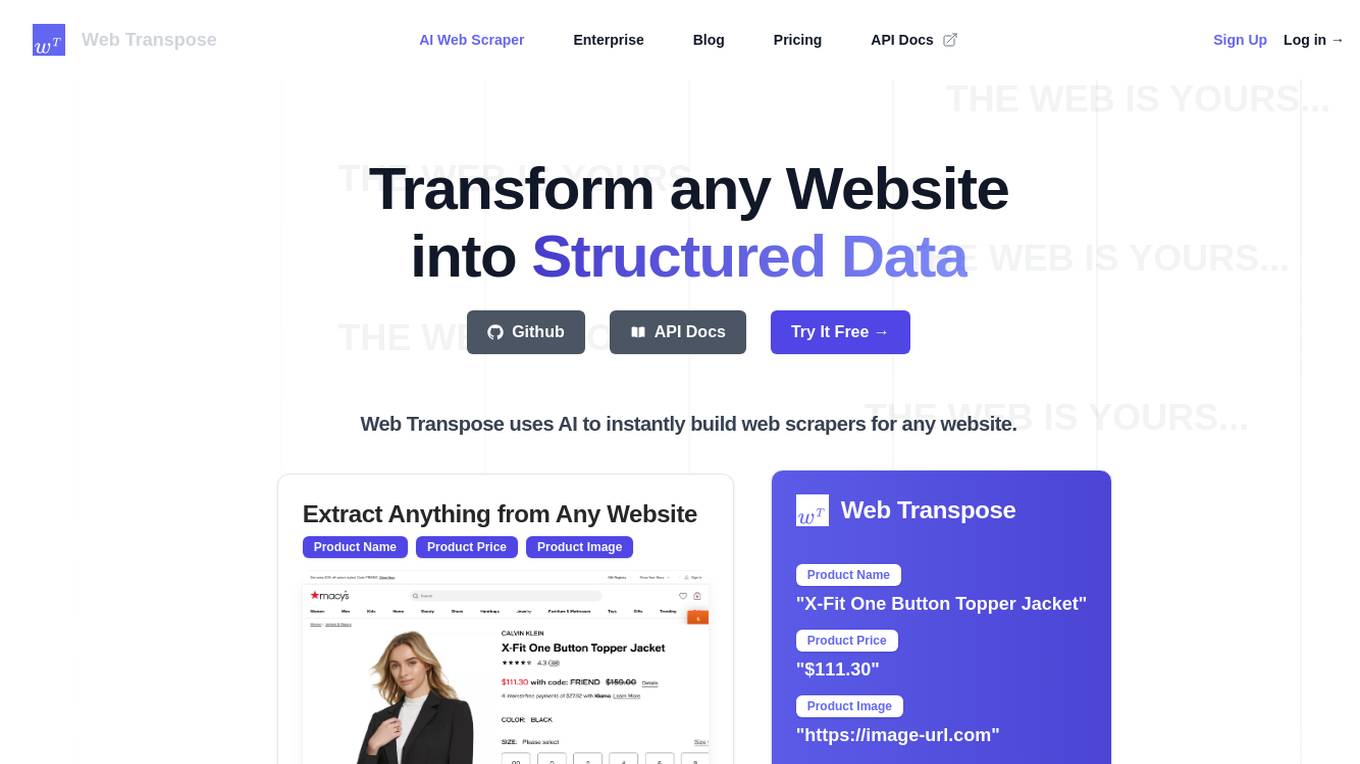

Web Transpose

Web Transpose is an AI-powered web scraping and web crawling API that allows users to transform any website into structured data. By utilizing artificial intelligence, Web Transpose can instantly build web scrapers for any website, enabling users to extract valuable information efficiently and accurately. The tool is designed for production use, offering low latency and effective proxy handling. Web Transpose learns the structure of the target website, reducing latency and preventing hallucinations commonly associated with traditional web scraping methods. Users can query any website like an API and build products quickly using the scraped data.

Trieve

Trieve is an AI-first infrastructure API that offers search, recommendations, and RAG capabilities by combining language models with tools for fine-tuning ranking and relevance. It helps companies build unfair competitive advantages through their discovery experiences, powering over 30,000 discovery experiences across various categories. Trieve supports semantic vector search, BM25 & SPLADE full-text search, hybrid search, merchandising & relevance tuning, and sub-sentence highlighting. The platform is built on open-source models, ensuring data privacy, and offers self-hostable options for sensitive data and maximum performance.
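Hybrid search, as mentioned above, merges a semantic (vector) ranking with a full-text ranking. The sketch below uses reciprocal rank fusion, one common way to combine two ranked lists. It is an illustration only: the listing does not say which fusion method Trieve uses, and the function name, the constant `k=60`, and the document IDs are all assumptions made for the example.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document earns 1 / (k + position) from every list it appears in;
    documents ranked well by several retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + position)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


# Hypothetical results from two retrievers for the same query.
semantic = ["d2", "d1", "d3"]   # e.g. from vector similarity
keyword = ["d1", "d4", "d2"]    # e.g. from BM25 full-text search

fused = reciprocal_rank_fusion([semantic, keyword])
print(fused)
```

Documents that appear in both lists (`d1`, `d2`) outrank those found by only one retriever, which is the usual motivation for hybrid search.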

SingleStore

SingleStore is a real-time data platform designed for apps, analytics, and gen AI. It offers faster hybrid vector + full-text search, fast-scaling integrations, and a free tier. SingleStore can read, write, and reason on petabyte-scale data in milliseconds. It supports streaming ingestion, high concurrency, first-class vector support, record lookups, and more.

Gista

Gista is an AI-powered conversion agent that helps businesses turn more website visitors into leads. It is equipped with knowledge about your products and services and can offer value props, build an email list, and more. Gista is easy to set up and use, and it integrates with your favorite platforms.

Pinecone

Pinecone is a vector database designed to help power AI applications for various companies. It offers a serverless platform that enables users to build knowledgeable AI applications quickly and cost-effectively. With Pinecone, users can perform low-latency vector searches for tasks such as search, recommendation, detection, and more. The platform is scalable, secure, and cloud-native, making it suitable for a wide range of AI projects.

Context Data

Context Data is an enterprise data platform designed for Generative AI applications. It enables organizations to build AI apps without the need to manage vector databases, pipelines, and infrastructure. The platform empowers AI teams to create mission-critical applications by simplifying the process of building and managing complex workflows. Context Data also provides real-time data processing capabilities and seamless vector data processing. It offers features such as data catalog ontology, semantic transformations, and the ability to connect to major vector databases. The platform is ideal for industries like financial services, healthcare, real estate, and shipping & supply chain.

deepset

deepset is an AI platform that offers enterprise-level products and solutions for AI teams. It provides deepset Cloud, a platform built with Haystack, enabling fast and accurate prototyping, building, and launching of advanced AI applications. The platform streamlines the AI application development lifecycle, offering processes, tools, and expertise to move from prototype to production efficiently. With deepset Cloud, users can optimize solution accuracy, performance, and cost, and deploy AI applications at any scale with one click. The platform also allows users to explore new models and configurations without limits, extending their team with access to world-class AI engineers for guidance and support.

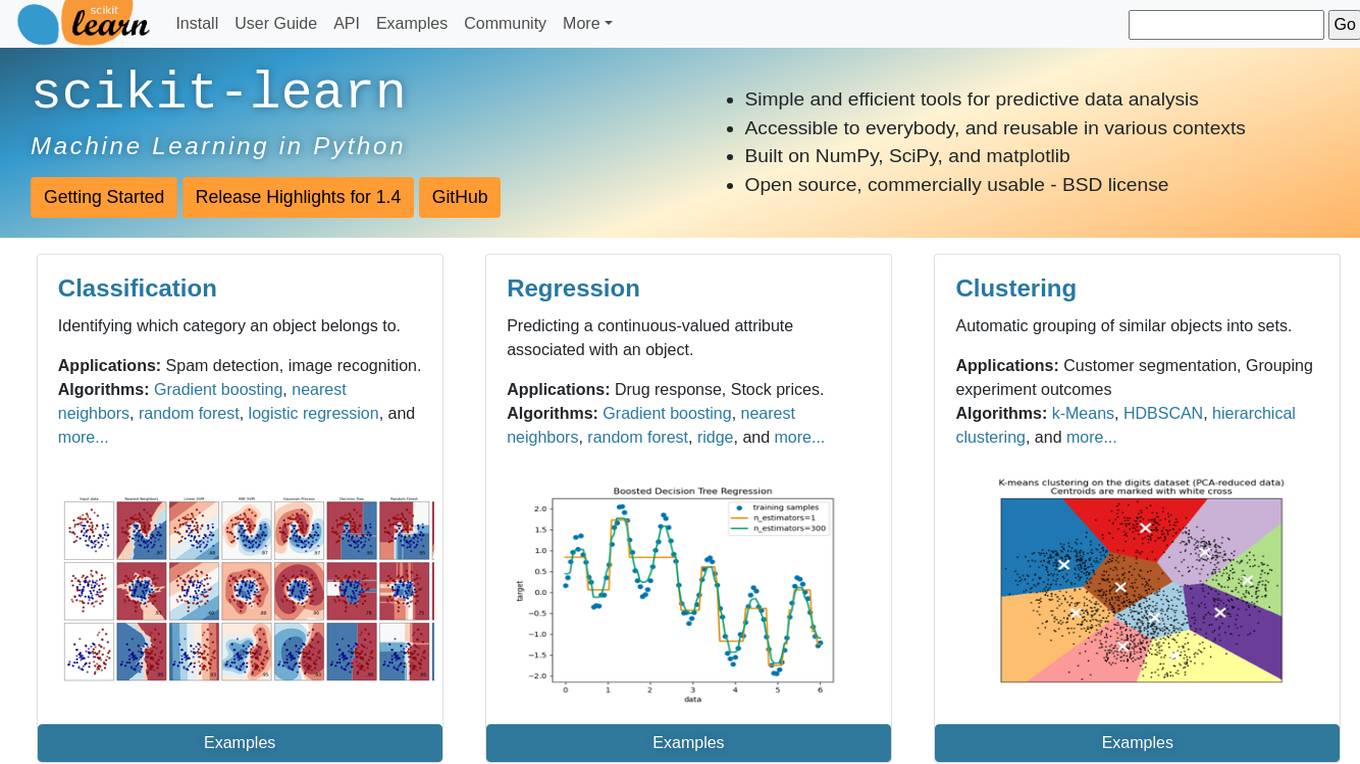

scikit-learn

Scikit-learn is a free software machine learning library for the Python programming language. It features various classification, regression and clustering algorithms including support vector machines, random forests, gradient boosting, k-means and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy.

Pinecone

Pinecone is a vector database designed to build knowledgeable AI applications. It offers a serverless platform with high capacity and low cost, enabling users to perform low-latency vector search for various AI tasks. Pinecone is easy to start and scale, allowing users to create an account, upload vector embeddings, and retrieve relevant data quickly. The platform combines vector search with metadata filters and keyword boosting for better application performance. Pinecone is secure, reliable, and cloud-native, making it suitable for powering mission-critical AI applications.
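To make "vector search with metadata filters" concrete, here is a minimal in-memory sketch in plain Python. It does not use Pinecone's client or API: the `search` function, the `where` parameter, and the toy index are hypothetical names chosen for illustration, and a real vector database would use an approximate nearest-neighbor index rather than a full scan.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(index, query, top_k=2, where=None):
    """Return the top_k (id, score) pairs whose metadata matches `where`."""
    where = where or {}
    hits = []
    for item_id, (vector, metadata) in index.items():
        # Apply the metadata filter before scoring.
        if all(metadata.get(key) == value for key, value in where.items()):
            hits.append((item_id, cosine(query, vector)))
    hits.sort(key=lambda hit: hit[1], reverse=True)
    return hits[:top_k]


# Toy index: id -> (embedding, metadata). Values are made up.
index = {
    "doc-a": ([1.0, 0.0], {"lang": "en"}),
    "doc-b": ([0.9, 0.1], {"lang": "de"}),
    "doc-c": ([0.0, 1.0], {"lang": "en"}),
}

results = search(index, [1.0, 0.0], top_k=2, where={"lang": "en"})
print(results)
```

Here `doc-b` is close to the query but is excluded by the `lang` filter, so only the two English documents are returned.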

Shakudo

Shakudo is an AI application designed for critical infrastructure, offering a unified platform to build an ideal data stack. It provides various AI components such as AI Agents, Knowledge Graph, Vector Database, Workflow Automation, and Text to SQL. Shakudo caters to industries like Aerospace, Automotive & Transportation, Climate & Energy, Financial Services, Healthcare & Life Sciences, Manufacturing, Real Estate, and Retail, offering use cases like managing customer retention, personalizing learning pathways, and extracting key insights from financial documents. The platform also features case studies, white papers, and resources for in-depth learning and implementation.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

1 - Open Source AI Tools

superlinked

Superlinked is a compute framework for information retrieval and feature engineering systems, focusing on converting complex data into vector embeddings for RAG, Search, RecSys, and Analytics stack integration. It enables custom model performance in machine learning with pre-trained model convenience. The tool allows users to build multimodal vectors, define weights at query time, and avoid postprocessing & rerank requirements. Users can explore the computational model through simple scripts and python notebooks, with a future release planned for production usage with built-in data infra and vector database integrations.
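The idea of multimodal vectors with weights defined at query time can be sketched as follows. This is not Superlinked's API: the space names (`text`, `recency`), the `weighted_score` and `rank` helpers, and the sample data are assumptions for illustration. Each item keeps one embedding per space, and the query chooses how much each space counts.

```python
def weighted_score(query_parts, item_parts, weights):
    """Sum of per-space dot products, each scaled by a query-time weight."""
    score = 0.0
    for space, weight in weights.items():
        q, v = query_parts[space], item_parts[space]
        score += weight * sum(x * y for x, y in zip(q, v))
    return score


# Hypothetical items with a text embedding and a one-dimensional recency signal.
items = {
    "old-match": {"text": [1.0, 0.0], "recency": [0.1]},
    "new-partial": {"text": [0.6, 0.8], "recency": [0.9]},
}
query = {"text": [1.0, 0.0], "recency": [1.0]}


def rank(weights):
    """Order item names by weighted score, best first."""
    return sorted(
        items,
        key=lambda name: weighted_score(query, items[name], weights),
        reverse=True,
    )


text_first = rank({"text": 1.0, "recency": 0.0})
recency_first = rank({"text": 0.2, "recency": 1.0})
print(text_first, recency_first)
```

Changing only the weights reorders the results without re-embedding the data, which is one way to read the claim about avoiding a separate rerank step.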

20 - OpenAI GPTs

Build a Brand

Unique custom images based on your input. Just type ideas and the brand image is created.

Beam Eye Tracker Extension Copilot

Build extensions using the Eyeware Beam eye-tracking SDK.

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

League Champion Builder GPT

Build your own League of Legends-style champion with abilities, backstory, and splash art.

RenovaTecno

Your tech buddy helping you refurbish or build a PC from scratch, tailored to your needs, budget, and language.

Gradle Expert

Your expert in Gradle build configuration, offering clear, practical advice.

XRPL GPT

Build on the XRP Ledger with assistance from this GPT trained on extensive documentation and code samples.