Best AI tools for Build HPC Cluster

20 AI Tool Sites

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast, efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resources, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates with existing tools and offers fractional GPU allocation and a pay-as-you-play model to maximize resource utilization.

NVIDIA

NVIDIA is a world leader in artificial intelligence computing, providing hardware and software solutions for gaming, entertainment, data centers, edge computing, and more. Its platforms, such as Jetson and Isaac, enable the development and deployment of AI-powered autonomous machines. NVIDIA's AI applications span many industries, from healthcare to manufacturing, and its technology is used across many of the world's largest industries.

NVIDIA

NVIDIA is a world leader in artificial intelligence computing. The company's products and services are used by businesses and governments around the world to develop and deploy AI applications. NVIDIA's AI platform includes hardware, software, and tools for building and training AI models. The company also offers a range of cloud-based AI services for deploying and managing AI applications. NVIDIA's AI platform is used in a wide variety of industries, including healthcare, manufacturing, retail, and transportation, for tasks ranging from medical diagnosis to product design.

Nomi.cloud

Nomi.cloud is a modern AI-powered CloudOps and HPC assistant designed for next-gen businesses. It offers developer tools, a marketplace, enterprise solutions, and a pricing console. With features such as a single-pane-of-glass view, instant deployment, continuous monitoring, AI-powered insights, and built-in budgets and alerts, Nomi.cloud aims to simplify cloud management. It provides a user-friendly interface for managing infrastructure, optimizing costs, and deploying resources across multiple regions. Nomi.cloud is built for scale, is used by enterprises, and offers a range of GPUs and cloud providers to suit various needs.

Build Club

Build Club is a leading training campus for AI learners, experts, and builders. It offers a platform where individuals can upskill into AI careers, get certified by top AI companies, learn the latest AI tools, and earn money by solving real problems. The community at Build Club consists of AI learners, engineers, consultants, and founders who collaborate on cutting-edge AI projects. The platform provides challenges, support, and resources to help individuals build AI projects and advance their skills in the field.

Unified DevOps platform to build AI applications

This is a unified DevOps platform to build AI applications. It provides a comprehensive set of tools and services to help developers build, deploy, and manage AI applications. The platform includes a variety of features such as a code editor, a debugger, a profiler, and a deployment manager. It also provides access to a variety of AI services, such as natural language processing, machine learning, and computer vision.

Build Chatbot

Build Chatbot is a no-code chatbot builder designed to simplify the process of creating chatbots. It lets users build a chatbot without any coding knowledge, auto-train it on their own content, and launch it with an engaging UI. The platform offers features to increase user engagement, provide personalized responses, and streamline communication with website visitors. Build Chatbot aims to save time for both businesses and customers by making information easily accessible and turning visitors into satisfied customers.

Build Club

Build Club is an AI tool designed to help individuals learn and explore various aspects of artificial intelligence. The platform offers a wide range of courses, challenges, hackathons, and community projects to enhance users' AI skills. Users can build AI models for tasks like image and video generation, AI marketing, and creating AI agents. Build Club aims to create a collaborative learning environment for AI enthusiasts to grow their knowledge and skills in the field of artificial intelligence.

Mimir

Mimir is an AI-native product management tool that helps users figure out what to build next by importing or uploading feedback, interviews, or metrics. It provides evidence-backed recommendations, refines them in chat, and generates AI agent-ready specs. Mimir stands out by creating GitHub issues from recommendations with complete specs and implementation tasks, enabling users to ship features in hours. The tool extracts structured insights, clusters them into themes, and generates prioritized recommendations based on product management best practices. Mimir learns from every interaction, aligning recommendations with the user's business context over time.

GitHub

GitHub is a collaborative platform that allows users to build and ship software efficiently. GitHub Copilot, an AI-powered tool, helps developers write better code by providing coding assistance, automating workflows, and enhancing security. The platform offers features such as instant dev environments, code review, code search, and collaboration tools. GitHub is widely used by enterprises, small and medium teams, startups, and nonprofits across various industries. It aims to simplify the development process, increase productivity, and improve the overall developer experience.

Google Cloud

Google Cloud is a suite of cloud computing services that runs on the same infrastructure Google uses for its own products. Its services include computing, storage, networking, databases, machine learning, and more. Google Cloud is designed to make it easy for businesses to develop and deploy applications in the cloud, with tools and services covering everything from building and deploying applications to managing infrastructure. Google Cloud also runs a number of sustainability programs aimed at reducing its environmental impact.

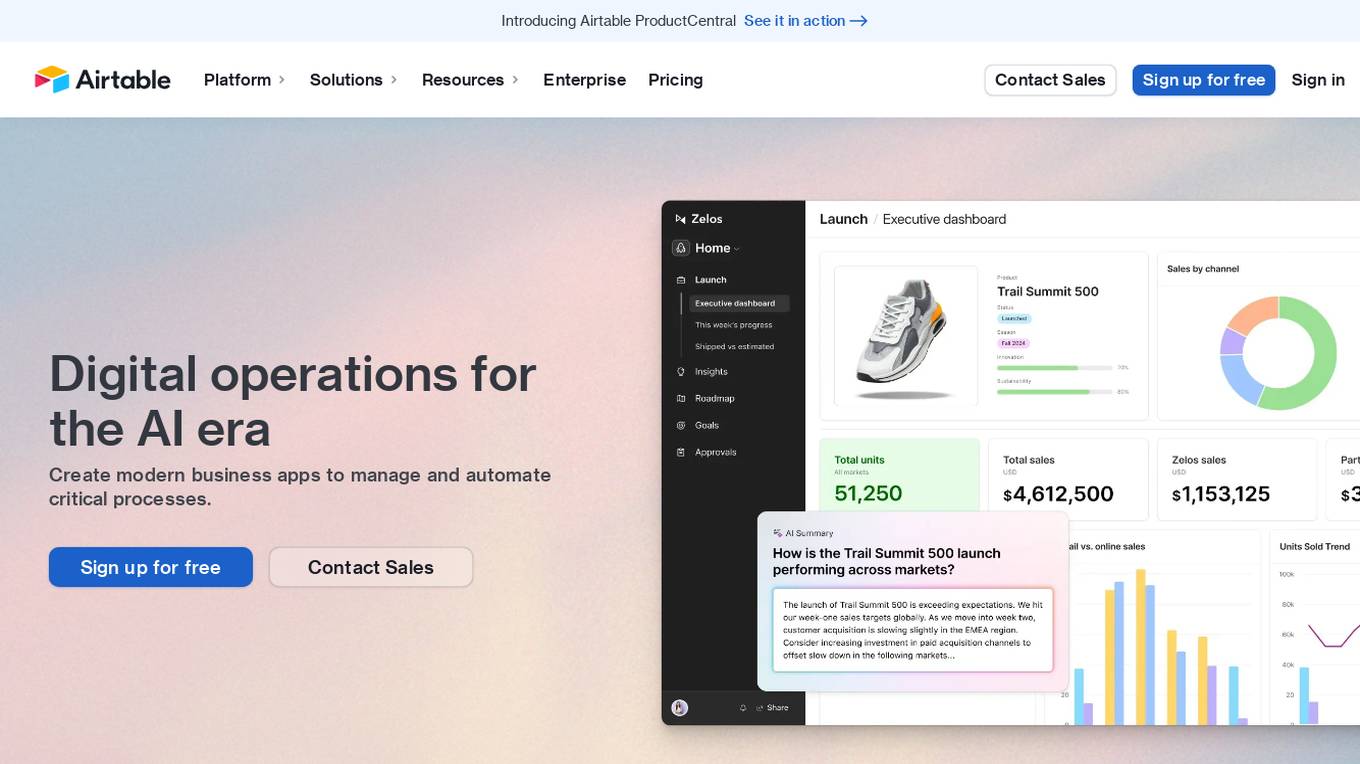

Airtable

Airtable is a next-gen app-building platform that enables teams to create custom business apps without the need for coding. It offers features like AI integration, connected data, automations, interface design, and data visualization. Airtable allows users to manage security, permissions, and data protection at scale. The platform also provides integrations with popular tools like Slack, Google Drive, and Salesforce, along with an extension marketplace for additional templates and apps. Users can streamline workflows, automate processes, and gain insights through reporting and analytics.

Gemini

Gemini is a large, multimodal AI model developed by Google. It is designed to handle a wide variety of text and image reasoning tasks, and it can be used to build many kinds of AI-powered applications. Gemini is available in three sizes: Ultra, Pro, and Nano. Ultra is the most capable model, but it is also the most expensive. Pro offers the best balance of performance and cost across a wide range of tasks. Nano is the most efficient model and is designed for on-device use cases.

Notion

Notion is an AI-integrated workspace platform that combines wiki, docs, and project management functionalities in one tool. It offers a centralized hub for teams to collaborate, share knowledge, manage projects, and streamline workflows. With AI assistance, users can enhance their productivity by automating tasks, generating content, and finding information quickly. Notion aims to simplify work processes and empower teams to work more efficiently and creatively.

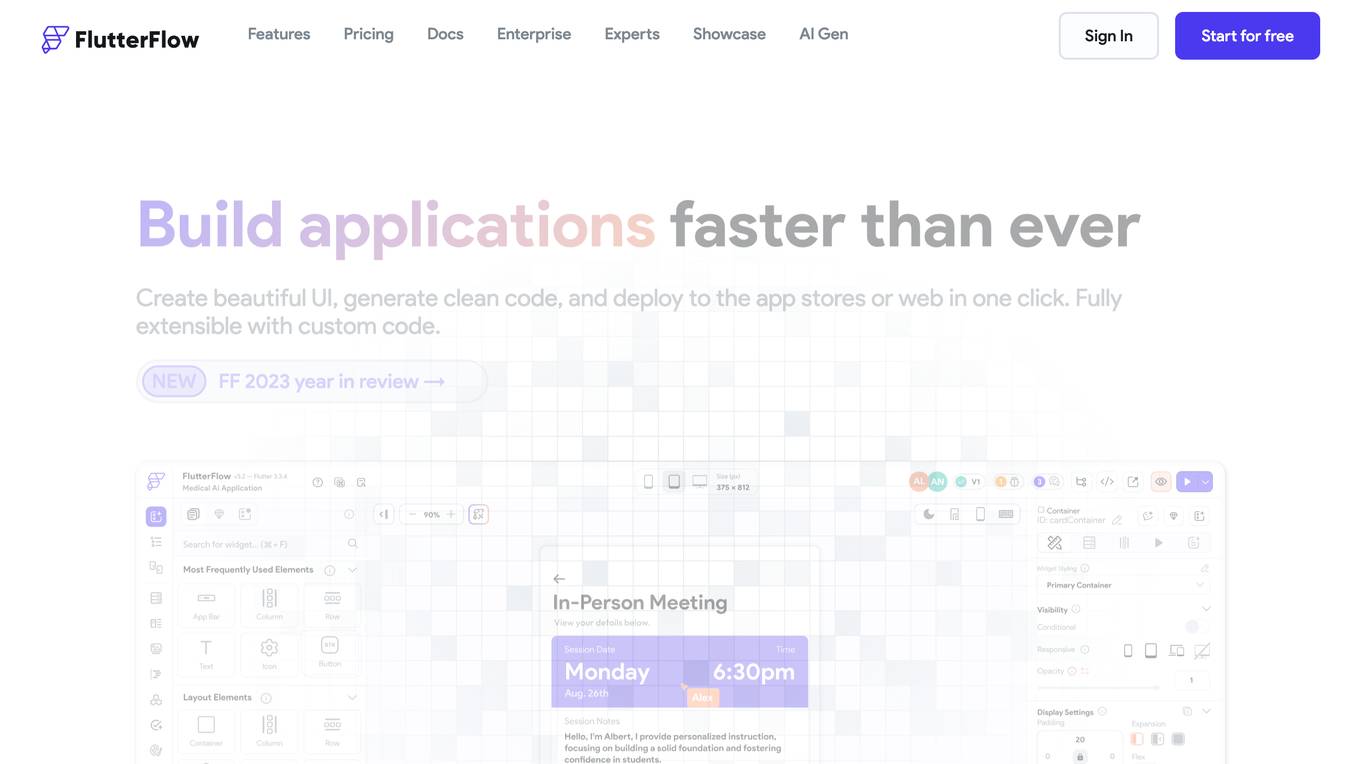

FlutterFlow

FlutterFlow is a low-code development platform that enables users to build cross-platform mobile and web applications with little or no hand-written code. It provides a visual interface for designing user interfaces, connecting data, and implementing complex logic. FlutterFlow is used at companies around the world and has been used to build a wide range of applications, from simple prototypes to complex enterprise solutions.

Enhancv

Enhancv is an AI-powered online resume builder that helps users create professional resumes and cover letters tailored to their job applications. The tool offers a drag-and-drop resume builder with a variety of modern templates and a free resume checker that evaluates resumes for ATS-friendliness and provides actionable suggestions. Enhancv also provides resume and CV examples written by experienced professionals and a resume tailoring feature. Users can download their resumes in PDF or TXT format and store up to 30 documents in cloud storage.

Abacus.AI

Abacus.AI describes itself as the world's first AI platform where AI, not humans, builds applied AI agents and systems at scale. Using generative AI and other novel neural network techniques, the platform can build LLM apps, generative AI agents, and predictive applied AI systems at scale.

Bubble

Bubble is a no-code application development platform that allows users to build and deploy web and mobile applications without writing any code. It provides a visual interface for designing and developing applications, and it includes a library of pre-built components and templates that can be used to accelerate development. Bubble is suitable for a wide range of users, from beginners with no coding experience to experienced developers who want to build applications quickly and easily.

Zyro

Zyro is a website builder that allows users to create professional websites and online stores without any coding knowledge. It offers a range of features, including customizable templates, drag-and-drop editing, and AI-powered tools to help users brand and grow their businesses.

Pinecone

Pinecone is a vector database used to power AI applications at many companies. It is a serverless database for building GenAI applications faster, and Pinecone claims it can do so at up to 50x lower cost. Pinecone is designed to be easy to use and integrates with popular cloud providers, data sources, models, and frameworks.

1 Open Source AI Tool

cluster-toolkit

Cluster Toolkit is open-source software from Google Cloud for deploying AI/ML and HPC environments on Google Cloud. It simplifies deployments that follow best practices while remaining highly customizable and extensible. The toolkit includes tutorials, examples, and documentation for its modules, which are designed for AI/ML and HPC use cases.

20 OpenAI GPTs

Build a Brand

Creates unique custom brand images from your input: just type your ideas and the brand image is generated.

Beam Eye Tracker Extension Copilot

Build extensions using the Eyeware Beam eye tracking SDK

Business Model Canvas Strategist

Business Model Canvas creator: build and evaluate your business model.

League Champion Builder GPT

Build your own League of Legends-style champion with abilities, a backstory, and splash art.

RenovaTecno

Your tech buddy for refurbishing or building a PC from scratch, tailored to your needs, budget, and language.

Gradle Expert

Your expert in Gradle build configuration, offering clear, practical advice.

XRPL GPT

Build on the XRP Ledger with assistance from this GPT trained on extensive documentation and code samples.