Best AI tools for Batch Data

20 - AI tool Sites

BuildShip

BuildShip is a batch processing tool for ChatGPT that lets users run ChatGPT tasks in parallel in a spreadsheet UI with CSV/JSON import and export. It supports various models, including OpenAI's GPT-4, Claude 3, and Gemini. Users can start with ready-made templates and customize them with their own logic and models. The generated data is stored securely in the user's own Google Cloud project, and team collaboration is supported with granular access control.

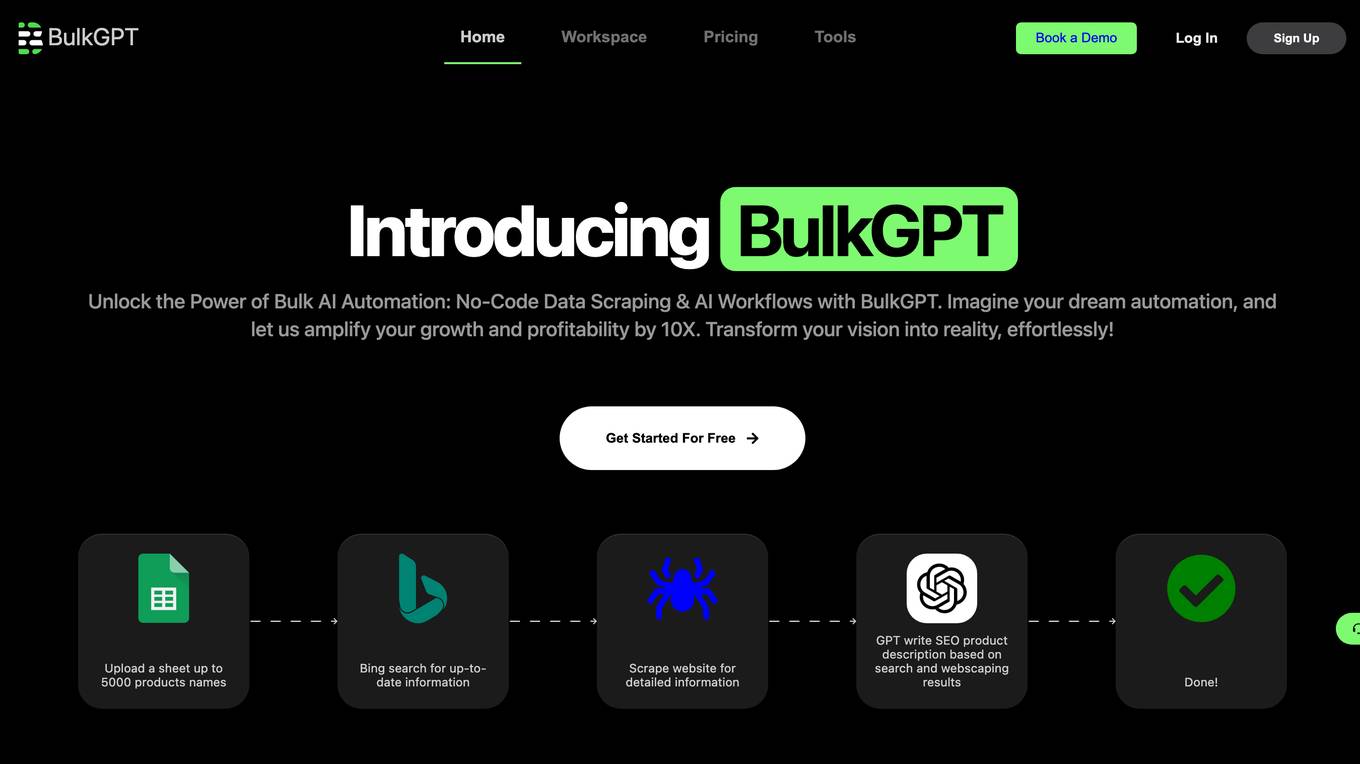

BulkGPT

BulkGPT is a no-code AI workflow automation tool for building custom workflows for mass web scraping and content creation. It simplifies tasks such as scraping web pages, generating SEO blogs, and creating personalized messages. Users can upload data, run it in Google Sheets, integrate it with other tools via API, and automate content creation. BulkGPT offers web scraping in Google Sheets, crawling of any URL for data extraction, and AI-powered tasks such as SEO content creation, e-commerce product description generation, ChatGPT automation, data scraping, and marketing email campaigns.

YTVidHub

YTVidHub is an AI-powered tool designed for bulk YouTube subtitle extraction and analysis. It offers a suite of features to efficiently download subtitles in various formats, clean transcripts, and generate AI summaries. The tool is trusted by professionals worldwide for its accuracy, speed, and convenience in handling large-scale subtitle extraction tasks.

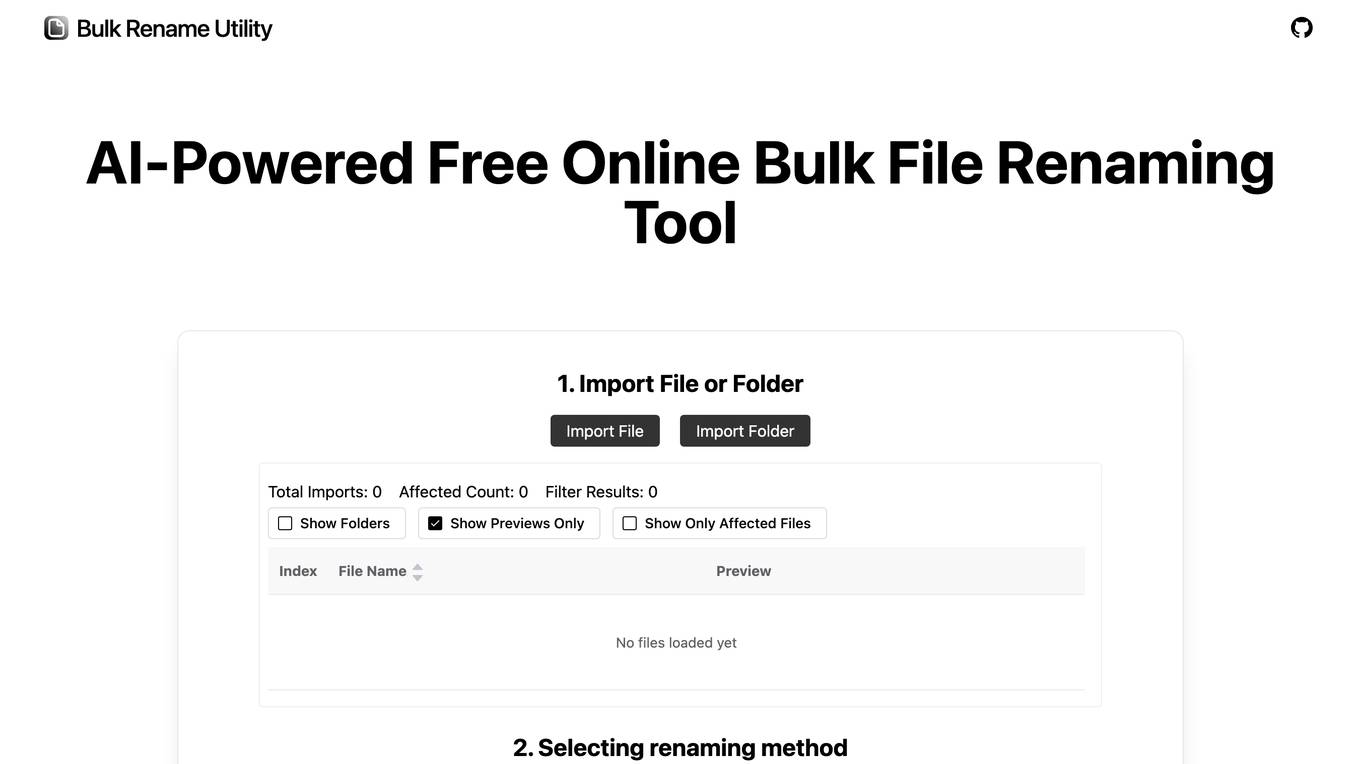

AI Bulk Rename Utility

AI Bulk Rename Utility is an intelligent file renaming tool powered by advanced AI technology, designed to simplify file organization and photo management for Windows and Mac users. The tool offers smart batch renaming capabilities, file organization features, and photo renaming functionalities. It ensures complete privacy protection by processing all operations locally on the user's device, without the need for file uploads to external servers.

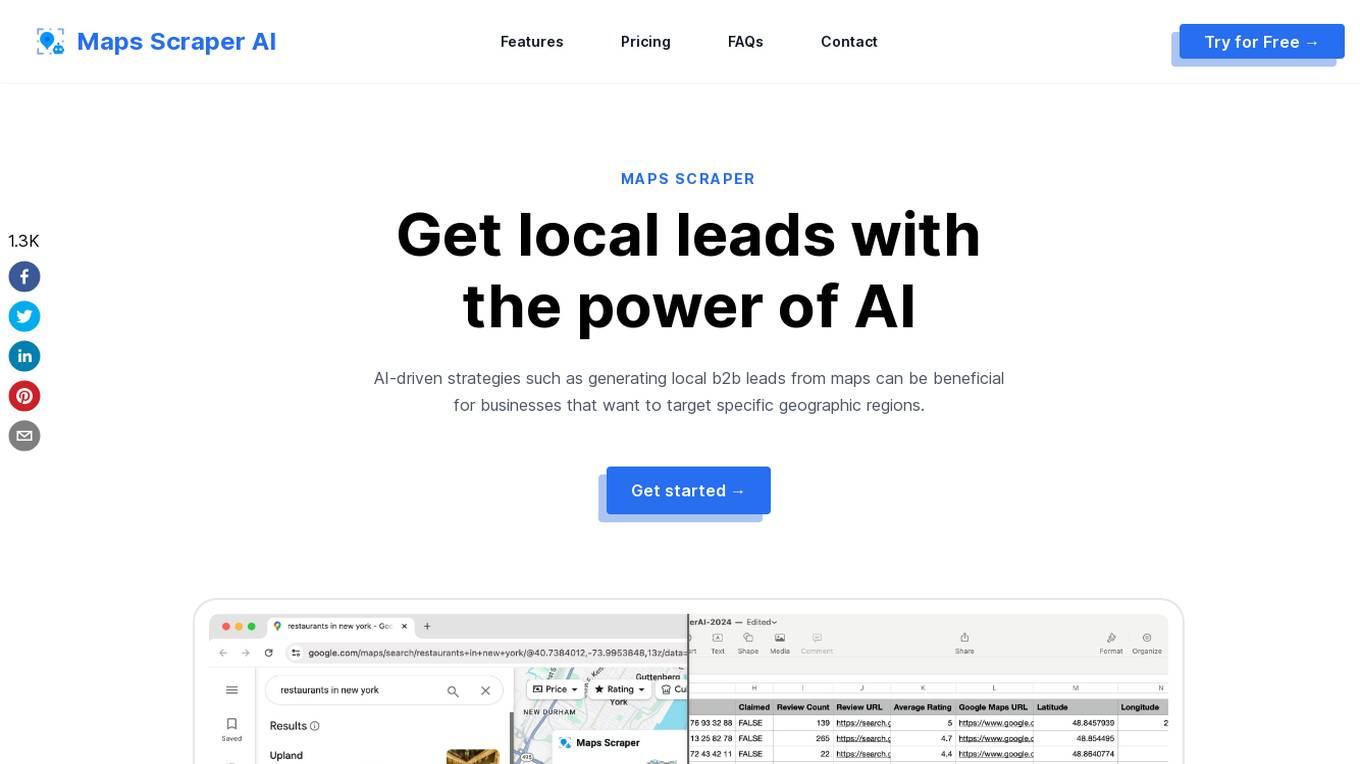

MapsScraperAI

MapsScraperAI is an AI-powered tool designed to extract leads and data from Maps. It offers businesses the ability to generate local B2B leads, conduct research, monitor competition, and obtain business contact details. With features like batch lookup, lightning-fast results, and the unique ability to extract email addresses, MapsScraperAI streamlines the process of data extraction without the need for coding. The tool mimics real user behavior to reduce the risk of being blocked by Maps and ensures timely updates to accommodate any changes on the Maps website.

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

Manifold

Manifold is an AI data platform designed specifically for life sciences. It offers a collaborative workbench, data science tools, AI-powered cohort exploration, batch bioinformatics, data dashboards, data engineering solutions, access control, and more. The platform aims to enable faster collaboration and research in the life sciences field by providing a comprehensive suite of tools and features. Trusted by leading institutions, Manifold helps streamline data collection, analysis, and collaboration to accelerate scientific research.

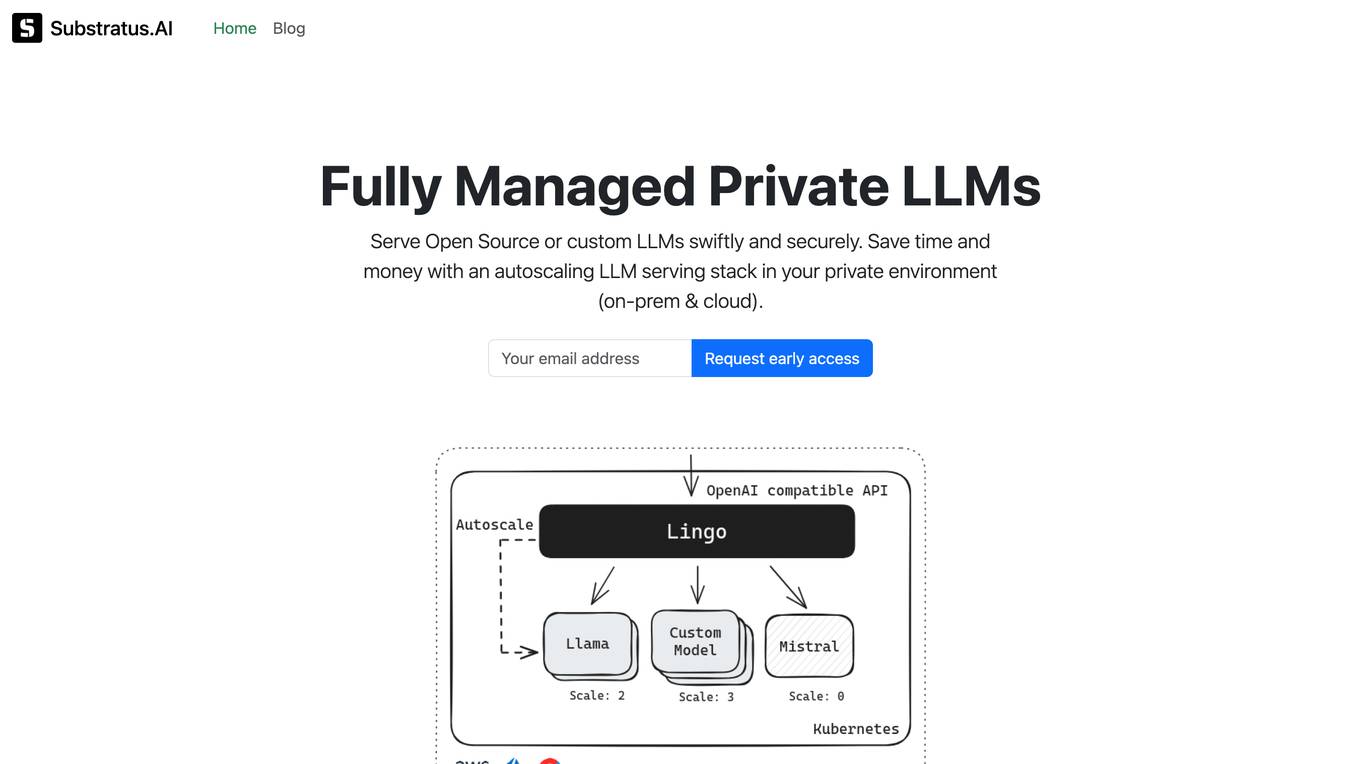

Substratus.AI

Substratus.AI is a fully managed private LLM platform that allows users to serve LLMs (Llama and Mistral) in their own cloud account. It enables users to keep control of their data while reducing OpenAI costs by up to 10x. With Substratus.AI, users can put LLMs into production in hours instead of weeks, making it a convenient and efficient solution for AI model deployment.

Hopsworks

Hopsworks is an AI platform that offers a comprehensive solution for building, deploying, and monitoring machine learning systems. It provides features such as a Feature Store, real-time ML capabilities, and generative AI solutions. Hopsworks enables users to develop and deploy reliable AI systems, orchestrate and monitor models, and personalize machine learning models with private data. The platform supports batch and real-time ML tasks, with the flexibility to deploy on-premises or in the cloud.

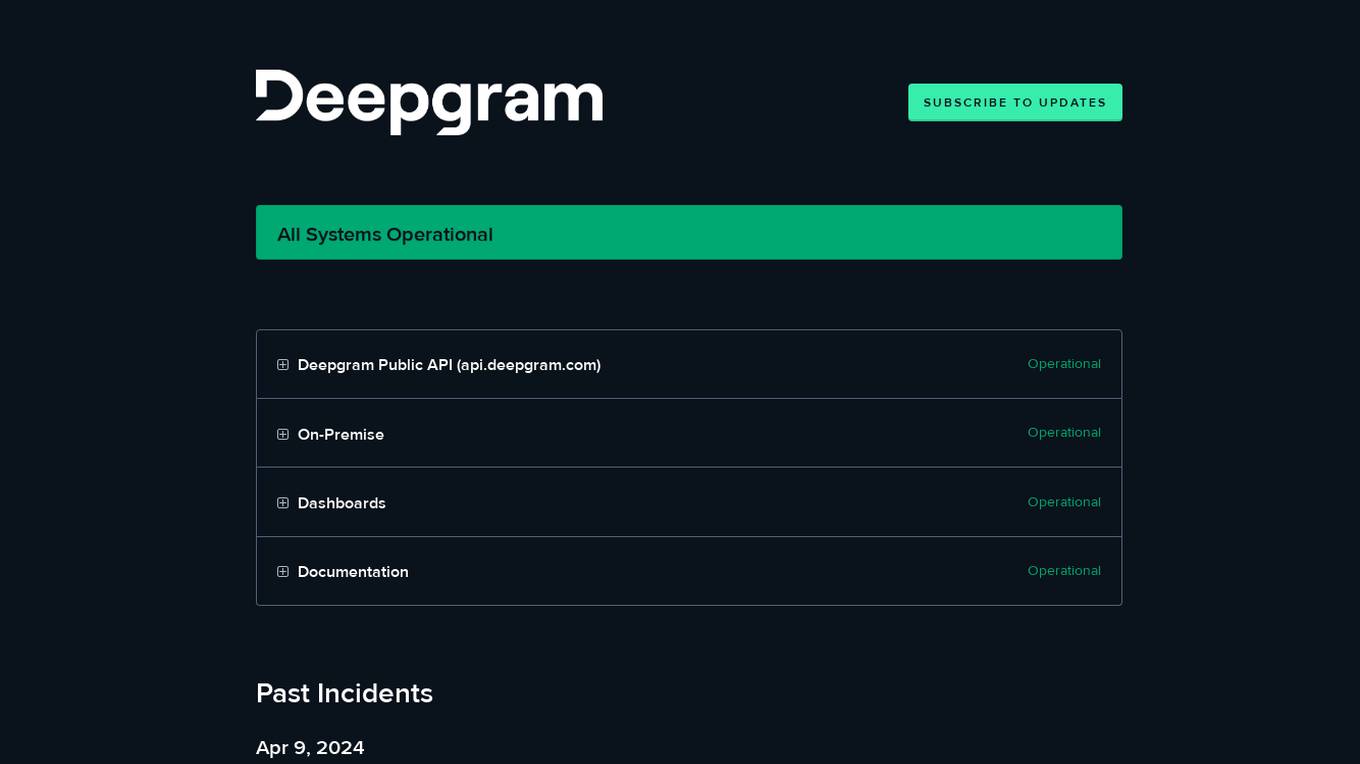

Deepgram

Deepgram is a speech recognition and transcription service that uses artificial intelligence to convert audio into text. It is designed to be accurate, fast, and easy to use. Deepgram offers a variety of features, including:

- Automatic speech recognition
- Speaker diarization
- Language identification
- Custom acoustic models
- Real-time transcription
- Batch transcription
- Webhooks
- Integrations with popular platforms such as Zoom, Google Meet, and Microsoft Teams

AI Renamer

AI Renamer is an application that utilizes artificial intelligence to automatically rename files based on their content. It offers powerful features such as smart recognition, batch processing, and support for various file types. Users can also integrate their own AI models for enhanced flexibility and privacy. The application provides credit-based pricing options and supports both Mac and Windows platforms.

Nano Banana AI Image Editor

Nano Banana AI Image Editor is an AI-powered photo editing tool that offers a professional-grade image processing experience. It uses deep learning to provide features such as background removal, smart enhancement, precise cropping, style conversion, image restoration, and batch processing. The tool ensures privacy and security by processing all image data locally without collecting any personal information. Users get fast and efficient image editing with unlimited usage and subscription plans tailored for individuals, professional creators, and large enterprises.

Habsy

Habsy is an AI-powered business card scanner application designed for individuals and teams to effortlessly scan, enrich, and manage business cards. It offers features like fast scanning, batch processing, easy contact organization, eco-friendly networking, accurate data extraction, and security and privacy measures. Habsy aims to make networking smarter, paperless, and more efficient for modern professionals by providing a seamless experience for capturing and utilizing contact information.

TractoAI

TractoAI is an AI platform that offers deep learning solutions for various industries. It provides batch inference with no rate limits and DeepSeek offline inference, and it helps in training open-source AI models. TractoAI simplifies training infrastructure setup, accelerates workflows with GPUs, and automates deployment and scaling for tasks like ML training and big data processing. The platform supports fine-tuning models, sandboxed code execution, and building custom AI models with a distributed training launcher. It is developer-friendly, scalable, and efficient, offering a solution library and expert guidance for AI projects.

Eden AI

Eden AI is a platform that provides access to over 100 AI models through a unified API gateway. Users can easily integrate and manage multiple AI models from a single API, with control over cost, latency, and quality. The platform offers ready-to-use AI APIs, custom chatbot, image generation, speech to text, text to speech, OCR, prompt optimization, AI model comparison, cost monitoring, API monitoring, batch processing, caching, multi-API key management, and more. Additionally, professional services are available for custom AI projects tailored to specific business needs.

Weavel

Weavel is an AI tool designed to revolutionize prompt engineering for large language models (LLMs). It offers features such as tracing, dataset curation, batch testing, and evaluations to enhance the performance of LLM applications. Weavel enables users to continuously optimize prompts using real-world data, prevent performance regression with CI/CD integration, and engage in human-in-the-loop interactions for scoring and feedback. Ape, the AI prompt engineer, outperforms competitors on benchmark tests and ensures seamless integration and continuous improvement specific to each user's use case. With Weavel, users can effortlessly evaluate LLM applications without the need for pre-existing datasets, streamlining the assessment process and enhancing overall performance.

Monkt

Monkt is a powerful document processing platform that transforms various document formats into AI-ready Markdown or structured JSON. It offers features like instant conversion of PDF, Word, PowerPoint, Excel, CSV, web pages, and raw HTML into clean markdown format optimized for AI/LLM systems. Monkt enables users to create intelligent applications, custom AI chatbots, knowledge bases, and training datasets. It supports batch processing, image understanding, LLM optimization, and API integration for seamless document processing. The platform is designed to handle document transformation at scale, with support for multiple file formats and custom JSON schemas.

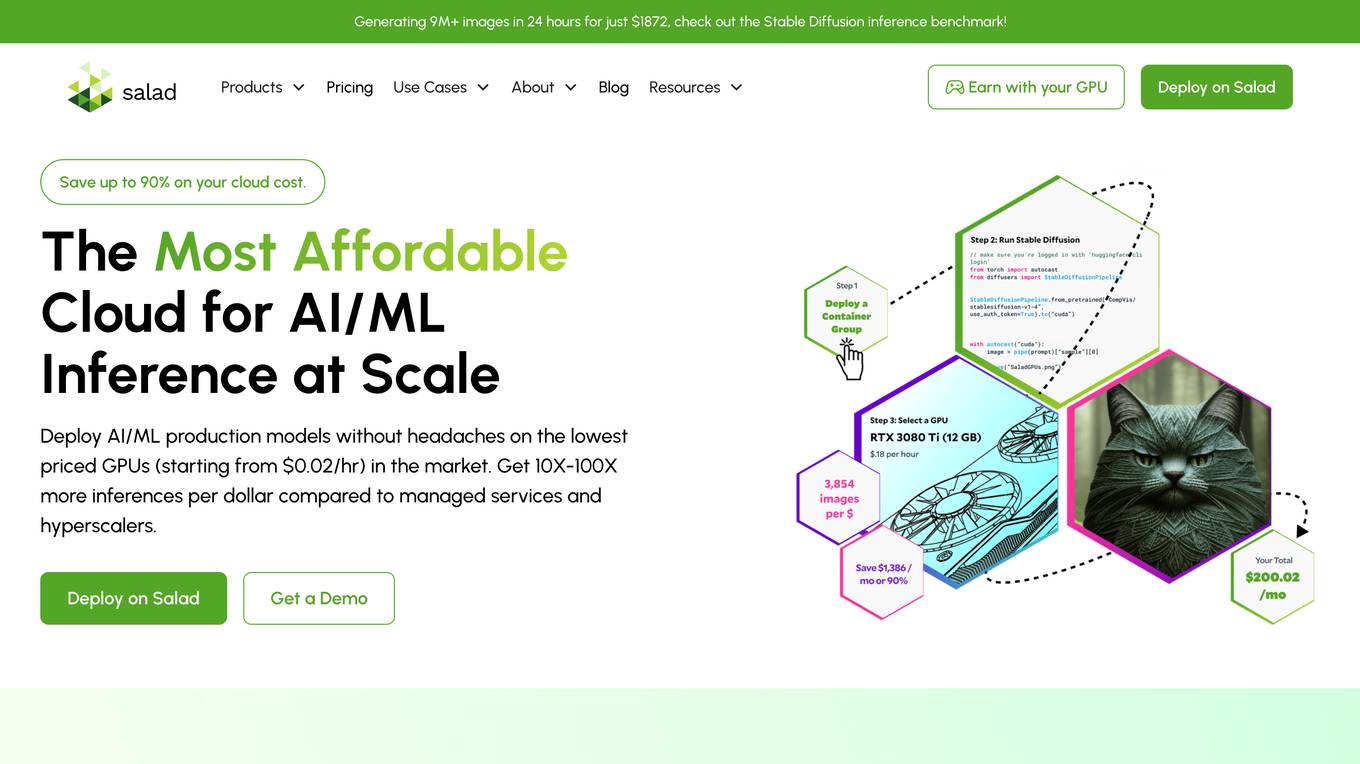

Salad

Salad is a distributed GPU cloud platform that offers fully managed and massively scalable services for AI applications. It provides the lowest priced AI transcription in the market, with features like image generation, voice AI, computer vision, data collection, and batch processing. Salad democratizes cloud computing by leveraging consumer GPUs to deliver cost-effective AI/ML inference at scale. The platform is trusted by hundreds of machine learning and data science teams for its affordability, scalability, and ease of deployment.

Anyscale

Anyscale provides a scalable compute platform for AI and Python applications. Its platform includes a serverless API for serving and fine-tuning open LLMs, a private cloud solution for data privacy and governance, and an open-source framework for training, batch, and real-time workloads. Anyscale's platform is used by companies such as OpenAI, Uber, and Spotify to power their AI workloads.

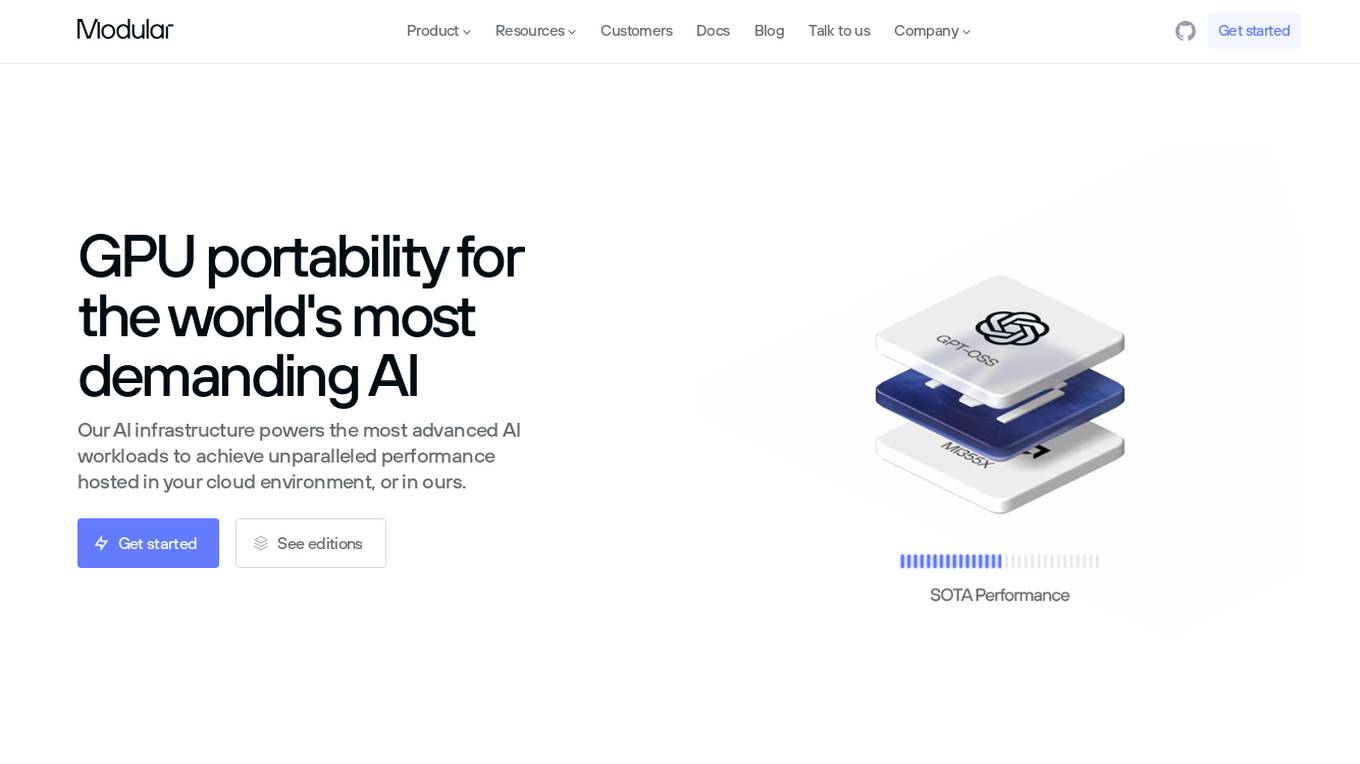

Modular

Modular is a fast, scalable Gen AI inference platform that offers a comprehensive suite of tools and resources for AI development and deployment. It provides solutions for AI model development, deployment options, AI inference, research, and resources like documentation, models, tutorials, and step-by-step guides. Modular supports GPU and CPU performance, intelligent scaling to any cluster, and offers deployment options for various editions. The platform enables users to build agent workflows, utilize AI retrieval and controlled generation, develop chatbots, engage in code generation, and improve resource utilization through batch processing.

1 - Open Source AI Tools

aphrodite-engine

Aphrodite is the official backend engine for PygmalionAI, serving as the inference endpoint for the website. It serves Hugging Face-compatible models at high speed. Features include continuous batching, efficient K/V management, optimized CUDA kernels, quantization support, distributed inference, and an 8-bit KV cache. The engine requires Linux and Python 3.8 to 3.12, and building it requires CUDA >= 11. It supports various GPUs, CPUs, TPUs, and Inferentia. Users can limit GPU memory utilization and access the full set of commands via the CLI.

6 - OpenAI GPTs

Nifty — PHP Standalone Script Maker

Creates standalone reusable PHP scripts, tools and batch processes.