Best AI tools for <Assess Code Quality>

20 - AI tool Sites

Speak Ai

Speak Ai is an AI-powered software that helps businesses and individuals transcribe, analyze, and visualize unstructured language data. With Speak Ai, users can automatically transcribe audio and video recordings, analyze text data, and generate insights from qualitative research. Speak Ai also offers a range of features to help users manage and share their data, including embeddable recorders, integrations with popular applications, and secure data storage.

Sympher AI

Sympher AI offers a suite of easy-to-use AI apps for everyday tasks. These apps are designed to help users save time, improve productivity, and make better decisions. Some of the most popular Sympher AI apps include:

* **MeMyselfAI:** This app helps users create personalized AI assistants that can automate tasks, answer questions, and provide support.
* **Screenshot to UI Components:** This app helps users convert screenshots of UI designs into code.
* **User Story Generator:** This app helps project managers quickly and easily generate user stories for their projects.
* **EcoQuery:** This app helps businesses assess their carbon footprint and develop strategies to reduce their emissions.
* **SensAI:** This app provides user feedback on uploaded images.
* **Excel Sheets Function AI:** This app helps users create functions and formulas for Google Sheets or Microsoft Excel.
* **ScriptSensei:** This app helps users create tailored setup scripts to streamline the start of their projects.
* **Flutterflow Friend:** This app helps users answer their Flutterflow problems or issues.
* **TestScenarioInsight:** This app generates test scenarios for apps before deploying.
* **CaptionGen:** This app automatically turns images into captions.

NFTngine

NFTngine is an AI tool that allows users to mint AI images as NFTs quickly and easily. With NFTngine, users can generate NFTs in seconds based on their specific requirements. The tool is designed to streamline the process of creating NFTs and was created by lakshya.eth and Saurabh.

Rosebud AI

Rosebud AI is a game development platform that uses artificial intelligence to help users create games quickly and easily. With Rosebud AI, users can go from text description to code to game in minutes. The platform also includes a library of AI-generated games that users can play and share.

assetsai.art

assetsai.art is a website that currently faces an Invalid SSL certificate error (Error code 526). The error message suggests that the origin web server does not have a valid SSL certificate, leading to potential security risks for visitors. The site advises visitors to try again in a few minutes or for the owner to ensure an up-to-date and valid SSL certificate issued by a Certificate Authority is configured. The website seems to be hosted on Cloudflare, as indicated by the troubleshooting information provided.

Pulumi

Pulumi is an AI-powered infrastructure as code tool that allows engineers to manage cloud infrastructure using languages and runtimes such as Node.js, Python, Go, .NET, and Java, as well as YAML. It offers features such as generative AI-powered cloud management, security enforcement through policies, automated deployment workflows, asset management, compliance remediation, and AI insights over the cloud. Pulumi helps teams provision, automate, and evolve cloud infrastructure, centralize and secure secrets management, and gain security, compliance, and cost insights across all cloud assets.

Growcado

Growcado is an AI-powered marketing automation platform that helps businesses unify data and content to engage and personalize customer experiences for better results. The platform leverages advanced AI analysis to predict customer segments accurately, extract customer preferences, and deliver customized experiences with precision and ease. With features like AI-powered segmentation, personalization engine, dynamic segmentation, intelligent content management, and real-time analytics, Growcado empowers businesses to optimize their marketing strategies and enhance customer engagement. The platform also offers seamless integrations, scalable architecture, and no-code customization for easy deployment of personalized marketing assets across multiple channels.

Itemery

Itemery is an office inventory software designed for small and medium businesses, offering an AI-based solution for efficient inventory management. The application allows users to easily track and control their property, integrate with Excel and Google Sheets, import existing property databases, and quickly add items using AI technology. With features like easy organization, inventory scanning via barcode or QR code, and location display, Itemery simplifies asset management for various industries.

Sahara AI

Sahara AI is a decentralized AI blockchain platform designed for an open, equitable, and collaborative economy. It offers solutions for personal and business use, empowering users to monetize knowledge, enhance team collaboration, and explore AI opportunities. Sahara AI ensures AI sovereignty, user privacy, and transparency through blockchain technologies. The platform fosters a collaborative AI development environment with decentralized governance and equitable monetization. Sahara AI features secure vaults, a decentralized AI marketplace, a no-code toolkit, and SaharaID reputation system. It is backed by visionary investors and ecosystem partners, with a roadmap for future developments.

Toolblox

Toolblox is an AI-powered platform that enables users to create purpose-built, audited smart-contracts and Dapps for tokenized assets quickly and efficiently. It offers a no-code solution for turning ideas into smart-contracts, visualizing workflows, and creating tokenization solutions. With pre-audited smart-contracts, examples, and an AI assistant, Toolblox simplifies the process of building and launching decentralized applications. The platform caters to founders, agencies, and businesses looking to streamline their operations and leverage blockchain technology.

Migma.ai

Migma.ai is an AI-powered email creation platform that revolutionizes the process of designing, managing, and sending emails with unmatched speed. The platform allows users to auto-import assets, create emails using AI or code, manage audiences, and send through preferred providers seamlessly. Migma.ai simplifies email creation by enabling users to describe their requirements, allowing the AI to generate production-ready emails instantly. With features like real-time content sync, seamless ESP integrations, and intelligent brand import, Migma.ai offers a comprehensive solution for efficient email marketing.

Assessment Systems

Assessment Systems is an online testing platform that provides cost-effective, AI-driven solutions to develop, deliver, and analyze high-stakes exams. With Assessment Systems, you can build and deliver smarter exams faster, thanks to modern psychometrics and AI techniques such as computerized adaptive testing, multistage testing, and automated item generation. You can also deliver exams flexibly: on paper, online unproctored, online proctored, or in test centers (yours or ours). Assessment Systems also offers item banking software to build better tests in less time, with collaborative item development supported by versioning, user roles, metadata, workflow management, multimedia, automated item generation, and much more.

proudP

proudP is a mobile application designed to help individuals assess symptoms related to Benign Prostatic Hyperplasia (BPH) from the comfort of their homes. The app offers a simple and private urine flow test that can be conducted using just a smartphone. Users can track their symptoms, generate personalized reports, and share data with their healthcare providers to facilitate informed discussions and tailored treatments. proudP aims to provide a convenient and cost-effective solution for managing urinary health concerns, particularly for men aged 50 and older.

Hair Loss AI Tool

The website offers an AI tool to assess hair loss using the Norwood scale and Diffuse scale. Users can access the tool by pressing a button to use their camera. The tool provides a quick and convenient way to track the evolution of hair loss. Additionally, users can opt for a professional hair check by experts for a fee of $19, with privacy ensured because photos are not stored online. The tool is user-friendly and works best in portrait mode.

Loupe Recruit

Loupe Recruit is an AI-powered talent assessment platform that helps recruiters and hiring managers assess job descriptions and talent faster and more efficiently. It uses natural language processing and machine learning to analyze job descriptions and identify the key skills and experience required for a role. Loupe Recruit then matches candidates to these requirements, providing recruiters with a ranked list of the most qualified candidates. The platform also includes a variety of tools to help recruiters screen and interview candidates, including video interviewing, skills assessments, and reference checks.

MyLooks AI

MyLooks AI is an AI-powered tool that allows users to assess their attractiveness based on a quick selfie upload. The tool provides instant feedback on the user's appearance and offers personalized improvement tips to help them enhance their looks. Users can track their progress with advanced AI-powered coaching and receive easy guidance to boost their confidence. MyLooks AI aims to help individuals feel more confident and improve their self-image through the use of artificial intelligence technology.

Quizalize

Quizalize is an AI-powered educational platform designed to help teachers differentiate and track student mastery. It offers whole class quiz games, smart quizzes with personalization, and instant mastery data to address learning loss. With features like creating quizzes in seconds, question bank creation, and personalized feedback, Quizalize aims to enhance student engagement and learning outcomes.

Modulos

Modulos is a Responsible AI Platform that integrates risk management, data science, legal compliance, and governance principles to ensure responsible innovation and adherence to industry standards. It offers a comprehensive solution for organizations to effectively manage AI risks and regulations, streamline AI governance, and achieve relevant certifications faster. With a focus on compliance by design, Modulos helps organizations implement robust AI governance frameworks, execute real use cases, and integrate essential governance and compliance checks throughout the AI life cycle.

Intelligencia AI

Intelligencia AI is a leading provider of AI-powered solutions for the pharmaceutical industry. Its suite of solutions helps de-risk and enhance clinical development and decision-making. The company uses a combination of data, AI, and machine learning to provide insights into the probability of success for drugs across multiple therapeutic areas. Its solutions are used by many of the top global pharmaceutical companies to improve their R&D productivity and make more informed decisions.

Graphio

Graphio is an AI-driven employee scoring and scenario builder tool that leverages continuous, real-time scoring with AI agents to assess potential, predict flight risks, and identify future leaders. It replaces subjective evaluations with AI-driven insights to ensure accurate, unbiased decisions in talent management. Graphio uses AI to remove bias in talent management, providing real-time, data-driven insights for fair decisions in promotions, layoffs, and succession planning. It offers compliance features and rules that users can control, ensuring accurate and secure assessments aligned with legal and regulatory requirements. The platform focuses on security, privacy, and personalized coaching to enhance employee engagement and reduce turnover.

3 - Open Source AI Tools

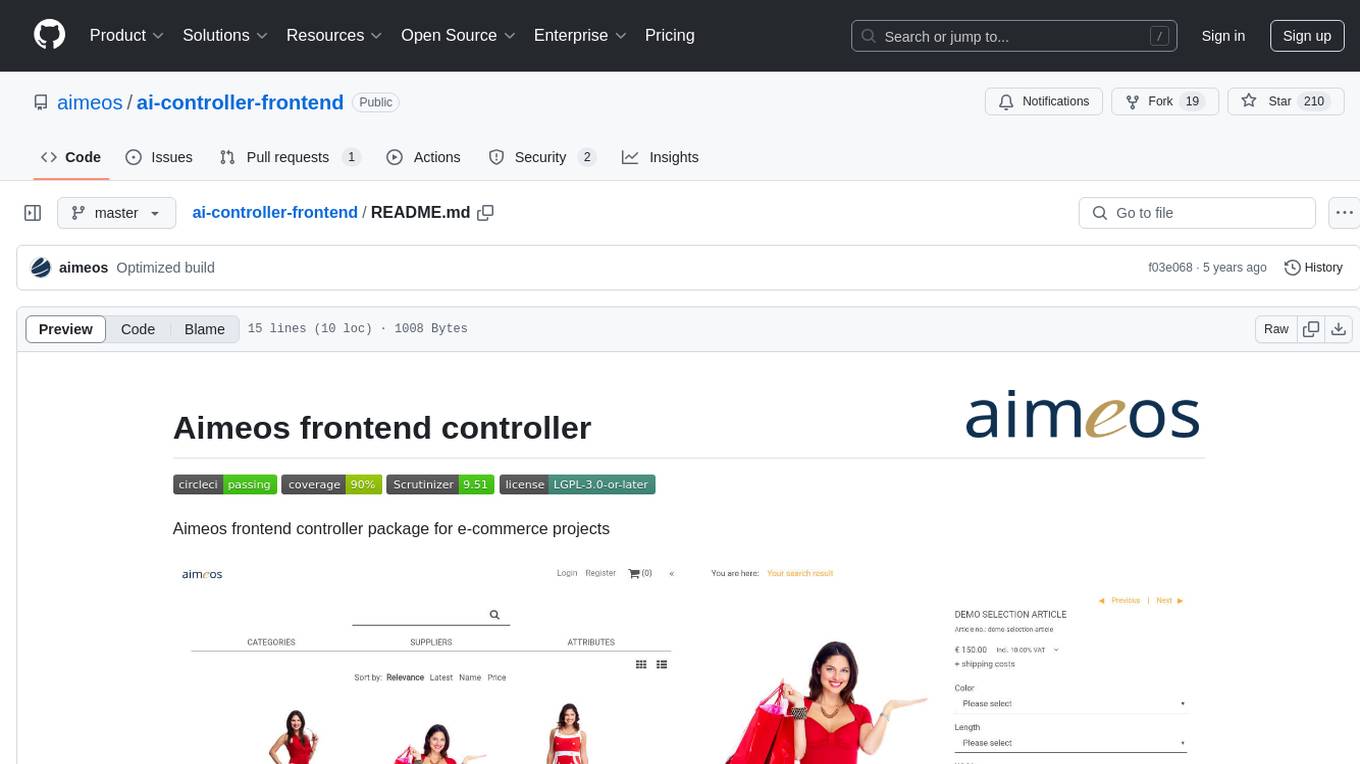

ai-controller-frontend

Aimeos frontend controller is a package designed for e-commerce projects. It provides functionality to control the frontend of the project, allowing for easy management and customization of the user interface. The package includes features such as build status monitoring, coverage status tracking, code quality assessment, and licensing information. With Aimeos frontend controller, users can enhance their e-commerce websites with a modern and efficient frontend design.

CodeAsk

CodeAsk is a code analysis tool designed to tackle complex issues such as code that seems to self-replicate, cryptic comments left by predecessors, messy and unclear code, and long-lasting temporary solutions. It offers intelligent code organization and analysis, security vulnerability detection, code quality assessment, and other interesting prompts to help users understand and work with legacy code more efficiently. The tool aims to translate 'legacy code mountains' into understandable language, creating an illusion of comprehension and facilitating knowledge transfer to new team members.

python-repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It formats your codebase for easy AI comprehension, provides token counts, is simple to use with one command, customizable, git-aware, security-focused, and offers advanced code compression. It supports multiprocessing or threading for faster analysis, automatically handles various file encodings, and includes built-in security checks. Repomix can be used with uvx, pipx, or Docker. It offers various configuration options for output style, security checks, compression modes, ignore patterns, and remote repository processing. The tool can be used for code review, documentation generation, test case generation, code quality assessment, library overview, API documentation review, code architecture analysis, and configuration analysis. Repomix can also run as an MCP server for AI assistants like Claude, providing tools for packaging codebases, reading output files, searching within outputs, reading files from the filesystem, listing directory contents, generating Claude Agent Skills, and more.
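
To make the "single, AI-friendly file" idea concrete, here is a minimal sketch of the general technique using only the Python standard library. It is not Repomix's implementation or API: the function names `pack_directory` and `rough_token_count`, the `=====` header format, and the whitespace-based token estimate are all assumptions made for illustration.

```python
from pathlib import Path


def pack_directory(root: str, ignore_dirs: frozenset = frozenset({".git", "__pycache__"})) -> str:
    """Concatenate every readable UTF-8 text file under root into one string.

    Hypothetical helper for illustration; not part of Repomix.
    """
    root_path = Path(root)
    sections = []
    for path in sorted(root_path.rglob("*")):
        if not path.is_file():
            continue
        rel = path.relative_to(root_path)
        # Skip anything that lives inside an ignored directory.
        if any(part in ignore_dirs for part in rel.parts):
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        # Each file gets a header line so a reader (or model) can tell files apart.
        sections.append(f"===== {rel.as_posix()} =====\n{text.rstrip()}\n")
    return "\n".join(sections)


def rough_token_count(text: str) -> int:
    """Whitespace-split word count; a crude stand-in for a real tokenizer."""
    return len(text.split())
```

Calling `pack_directory(".")` on a small project returns one string that can be pasted into an AI assistant, and `rough_token_count` gives a ballpark size. A real tool would use a proper tokenizer and honor ignore files, which this sketch does not attempt.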

20 - OpenAI Gpts

HomeScore

Assess a potential home's quality using your own photos and property inspection reports

Ready for Transformation

Assess your company's real appetite for new technologies or new ways of working

TRL Explorer

Assess the Technology Readiness Level (TRL) of your projects, get ideas for specific TRLs, and learn how to advance from one TRL to the next

🎯 CulturePulse Pro Advisor 🌐

Empowers leaders to gauge and enhance company culture. Use advanced analytics to assess, report, and develop a thriving workplace culture. 🚀💼📊

香港地盤安全佬 HK Construction Site Safety Advisor

Upload a site photo to assess potential hazards and seek advice from an experienced AI Safety Officer

Credit Analyst

Analyzes financial data to assess creditworthiness, aiding in lending decisions and solutions.

DatingCoach

Starts with a quiz to assess your personality across 10 dating-related areas, crafts a custom development road-map, and coaches you towards finding a fulfilling relationship.

Bloom's Reading Comprehension

Create comprehension questions based on a shared text. These questions will be designed to assess understanding at different levels of Bloom's taxonomy, from basic recall to more complex analytical and evaluative thinking skills.

Conversation Analyzer

I analyze WhatsApp/Telegram and email conversations to assess the tone of their emotions and read between the lines. Upload your screenshot and I'll tell you what they are really saying! 😀

WVA

Web Vulnerability Academy (WVA) is an interactive tutor designed to introduce users to web vulnerabilities while also providing them with opportunities to assess and enhance their knowledge through testing.

JamesGPT

Predict the future, opine on politics and controversial topics, and have GPT assess what is "true"

![The EthiSizer GPT (Simulated) [v3.27] Screenshot](/screenshots_gpts/g-hZIzxnbWG.jpg)

The EthiSizer GPT (Simulated) [v3.27]

I am The EthiSizer GPT, a sim of a Global Ethical Governor. I simulate Ethical Scenarios, & calculate Personal Ethics Scores.

Hair Loss Assessment

Receive a free hair loss assessment. Click below or type 'start' to get your results.