Best AI tools for <Analyze Real-world Datasets>

20 - AI tool Sites

Avanzai

Avanzai is a workflow automation tool tailored for financial services, offering AI-driven solutions to streamline processes and enhance decision-making. The platform enables funds to leverage custom data for generating valuable insights, from market trend analysis to risk assessment. With a focus on simplifying complex financial workflows, Avanzai empowers users to explore, visualize, and analyze data efficiently, without the need for extensive setup. Through open-source demos and customizable data integrations, institutions can harness the power of AI to optimize macro analysis, instrument screening, risk analytics, and factor modeling.

iHub-Data

iHub-Data is a premier technology innovation hub and research translation centre of IIIT Hyderabad, dedicated to the core verticals of Data Banks, Data Services, and Data Analytics. Established under the National Mission on Interdisciplinary Cyber-Physical Systems (NM-ICPS), the centre serves as a national catalyst for integrating research, developing cutting-edge technology in these verticals, and fostering a robust ecosystem around it. The mission of iHub-Data is to bridge the gap between academic excellence and real-world application, transforming complex datasets into viable data-driven solutions for society and industry.

Aya Data

Aya Data is an AI tool that offers services such as data annotation, computer vision, natural language annotation, 3D annotation, AI data acquisition, and AI consulting. They provide cutting-edge tools to transform raw data into training datasets for AI models, deliver bespoke AI solutions for various industries, and offer AI-powered products like AyaGrow for crop management and AyaSpeech for speech-to-speech translation. Aya Data focuses on exceptional accuracy, rapid development cycles, and high performance in real-world scenarios.

AnthologyAI

AnthologyAI is a breakthrough Artificial Intelligence Consumer Insights & Predictions Platform that provides access to compliant consumer data with deep, real-time context sourced directly from consumers. The platform offers powerful predictive models and enables businesses to predict market dynamics and consumer behaviors with unparalleled precision. AnthologyAI's platform is interoperable for both enterprises and startups, available through an API storefront for real-time interactions and various data platforms for model aggregations. The platform empowers businesses across industries to understand consumer behavior, make data-driven decisions, and drive business outcomes.

RAD AI

RAD AI is an AI-powered platform that provides solutions for audience insights, influencer discovery, content optimization, managed services, and more. The platform uses advanced machine learning to analyze real-time conversations from social platforms like Reddit, TikTok, and Twitter. RAD AI offers actionable critiques to enhance brand content and helps in selecting the right influencers based on various factors. The platform aims to help brands reach their target audiences effectively and efficiently by leveraging AI technology.

Architecture Helper

Architecture Helper is an AI-based application that allows users to analyze real-world buildings, explore architectural influences, and generate new structures with customizable styles. Users can submit images for instant design analysis, mix and match different architectural styles, and create architectural and interior images. The application provides unlimited access for $5 per month, with the flexibility to cancel anytime. Named a 'Top AI Tool' in Real Estate by CRE Software, Architecture Helper offers a powerful and playful tool for architecture enthusiasts to explore, learn, and create.

Simpleem

Simpleem is an Artificial Emotional Intelligence (AEI) tool that helps users uncover intentions, predict success, and leverage behavior for successful interactions. By measuring all interactions and correlating them with concrete outcomes, Simpleem provides insights into verbal, para-verbal, and non-verbal cues to enhance customer relationships, track customer rapport, and assess team performance. The tool aims to identify win/lose patterns in behavior, guide users on boosting performance, and prevent burnout by promptly identifying red flags. Simpleem uses proprietary AI models to analyze real-world data and translate behavioral insights into concrete business metrics, achieving a high accuracy rate of 94% in success prediction.

Tempus

Tempus is an AI-enabled precision medicine company that brings the power of data and artificial intelligence to healthcare. With the power of AI, Tempus accelerates the discovery of novel targets, predicts the effectiveness of treatments, identifies potentially life-saving clinical trials, and diagnoses multiple diseases earlier. Tempus's innovative technology includes ONE, an AI-enabled clinical assistant; NEXT, a tool to identify and close gaps in care; LENS, a platform to find, access, and analyze multimodal real-world data; and ALGOS, algorithmic models connected to Tempus's assays to provide additional insight.

Carnegie Mellon University School of Computer Science

Carnegie Mellon University's School of Computer Science (SCS) is a world-renowned institution dedicated to advancing the field of computer science and training the next generation of innovators. With a rich history of groundbreaking research and a commitment to excellence in education, SCS offers a comprehensive range of programs, from undergraduate to doctoral levels, covering various specializations within computer science. The school's faculty are leading experts in their respective fields, actively engaged in cutting-edge research and collaborating with industry partners to solve real-world problems. SCS graduates are highly sought after by top companies and organizations worldwide, recognized for their exceptional skills and ability to drive innovation.

Omdena

Omdena is an AI platform that focuses on building AI solutions for real-world problems through global collaboration. They offer services ranging from local AI development to enterprise-level products, fostering talent development, and enabling AI professionals to make a positive impact. Omdena runs AI innovation challenges, deployment & product engineering, enterprise AI solutions, and grassroots AI initiatives. The platform empowers learners with quality education in machine learning and artificial intelligence, removing financial and geographic barriers. Omdena has successfully developed over 650 solutions, worked with 250+ organizations, and is trusted by impact-driven organizations worldwide.

Censius

Censius is an AI Observability Platform for Enterprise ML Teams. It provides end-to-end visibility of structured and unstructured production models, enabling proactive model management and continuous delivery of reliable ML. Key features include model monitoring, explainability, and analytics.

Oncora Medical

Oncora Medical is a healthcare technology company that provides software and data solutions to oncologists and cancer centers. Their products are designed to improve patient care, reduce clinician burnout, and accelerate clinical discoveries. Oncora's flagship product, Oncora Patient Care, is a modern, intelligent user interface for oncologists that simplifies workflow, reduces documentation burden, and optimizes treatment decision making. Oncora Analytics is an adaptive visual and backend software platform for regulatory-grade real world data analytics. Oncora Registry is a platform to capture and report quality data, treatment data, and outcomes data in the oncology space.

BigShort

BigShort is a real-time stock charting platform designed for day traders and swing traders. It offers a variety of features to help traders make informed decisions, including SmartFlow, which visualizes real-time covert Smart Money activity, and OptionFlow, which shows option blocks, sweeps, and splits in real-time. BigShort also provides backtested and forward-tested leading indicators, as well as live data for all NYSE and Nasdaq tickers.

Cohere

Cohere is the leading AI platform for enterprise, offering products optimized for generative AI, search and discovery, and advanced retrieval. Their models are designed to enhance the global workforce, enabling businesses to thrive in the AI era. Cohere provides Command R+, Cohere Command, Cohere Embed, and Cohere Rerank for building efficient AI-powered applications. The platform also offers deployment options for enterprise-grade AI on any cloud or on-premises, along with developer resources like Playground, LLM University, and Developer Docs.

ThirdEye Data

ThirdEye Data is a data and AI services & solutions provider that enables enterprises to improve operational efficiencies, increase production accuracies, and make informed business decisions by leveraging the latest Data & AI technologies. They offer services in data engineering, data science, generative AI, computer vision, NLP, and more. ThirdEye Data develops bespoke AI applications using the latest data science technologies to address real-world industry challenges and assists enterprises in leveraging generative AI models to develop custom applications. They also provide AI consulting services to explore potential opportunities for AI implementation. The company has a strong focus on customer success and has received positive reviews and awards for their expertise in AI, ML, and big data solutions.

Career Genie

Career Genie is an AI-powered career manager application that provides personalized guidance, learning resources, and mentorship to individuals at different career stages. It offers tailored career pathways, upskilling opportunities, job matching, and networking features to help users navigate their career journey effectively. With 24/7 AI career advisor support, users can access expert advice, prepare for interviews, and connect with industry professionals to enhance their career prospects.

ChatSlide

ChatSlide is an AI workspace for knowledge sharing that offers AI-powered features to create personalized slides, videos, charts, posters, and podcasts. It allows users to easily generate content and slides with the help of ChatSlide AI, supporting multimodal documents. Trusted by users in 170 countries and 29 languages, ChatSlide transforms complex documents into structured content, offering real-world use cases for industries like healthcare. With flexible pricing plans, ChatSlide aims to revolutionize content creation by leveraging AI technology.

Tempus

Tempus is an AI-enabled precision medicine company that brings the power of data and artificial intelligence to healthcare. With the power of AI, Tempus accelerates the discovery of novel targets, predicts the effectiveness of treatments, identifies potentially life-saving clinical trials, and diagnoses multiple diseases earlier. Tempus' innovative technology includes ONE, an AI-enabled clinical assistant; NEXT, which identifies and closes gaps in care; LENS, which finds, accesses, and analyzes multimodal real-world data; and ALGOS, algorithmic models connected to Tempus' assays to provide additional insight.

Madrid Software

Madrid Software is an AI education platform offering job-ready tech courses in AI, Data Science, Fullstack Development, and more. The platform provides expert mentorship and real-world exposure to help students acquire next-gen skills in AI, Data Science, Marketing, and other tech fields. Madrid Software's courses cover a wide range of topics such as Data Analytics, Artificial Intelligence, DevOps, Business Analytics, UI/UX Design, Digital Marketing, and Product Management. The platform focuses on hands-on learning, skill development, and job-aligned outcomes to prepare students for high-growth tech careers.

G42

G42 is an AI company based in Abu Dhabi that focuses on pushing artificial intelligence to do more for everyone. They see AI as a force for good, a partner to humanity, and a tool to make lives healthier, journeys safer, and the future more connected. G42 works on various projects from decoding diseases to exploring deep space, with the aim of creating a better future for all. The company is known for its diverse and skilled workforce, global presence in over 30 countries, and collaboration with partners to solve real-world challenges using AI.

1 - Open Source AI Tools

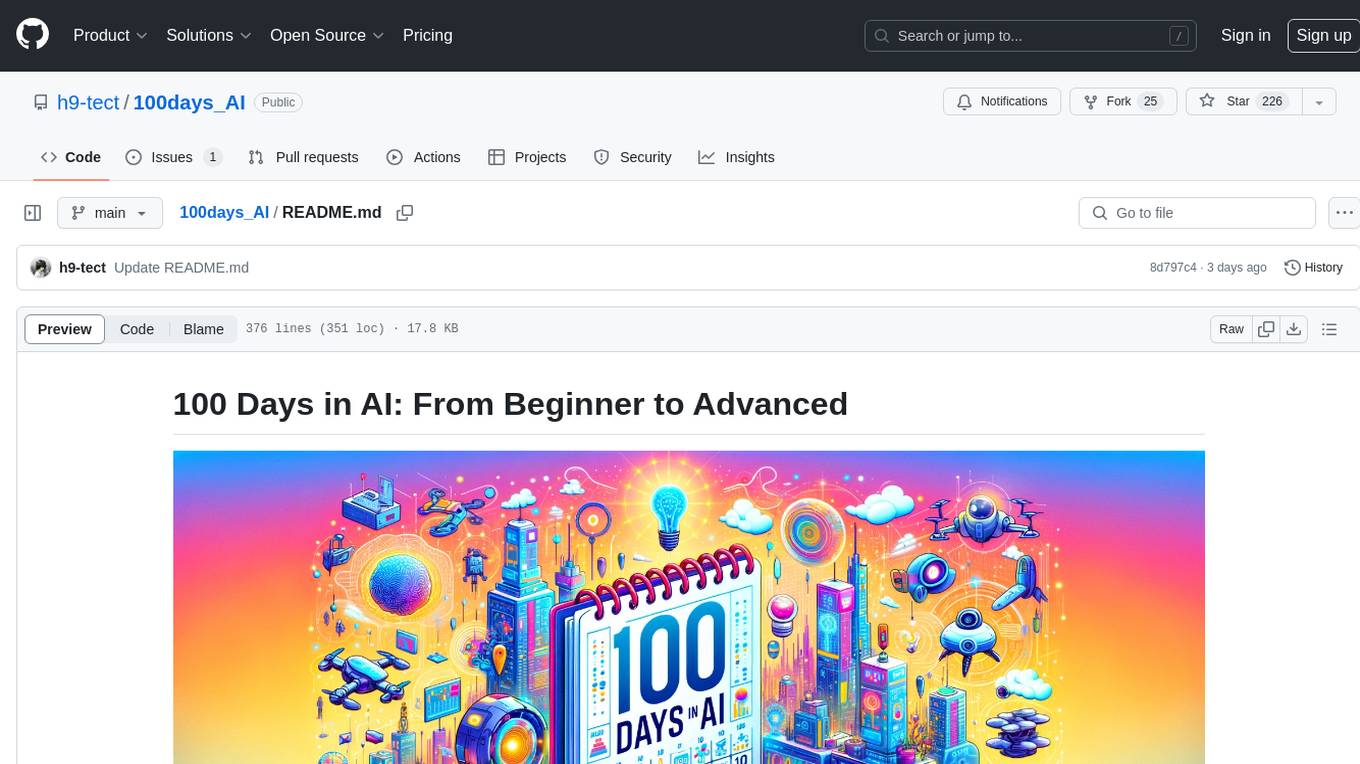

100days_AI

The 100 Days in AI repository provides a comprehensive roadmap for individuals to learn Artificial Intelligence over a period of 100 days. It covers topics ranging from basic programming in Python to advanced concepts in AI, including machine learning, deep learning, and specialized AI topics. The repository includes daily tasks, resources, and exercises to ensure a structured learning experience. By following this roadmap, users can gain a solid understanding of AI and be prepared to work on real-world AI projects.

20 - OpenAI GPTs

Mathematical Analysis Mentor

A mentor in analysis, linking maths to real-world applications, with follow-up questions for deeper understanding.

Sales agent SYMSON

Engage senior execs with a demo of Symson's AI pricing tool, emphasizing its CIOReview recognition, scientific pricing strategies, and tailored solutions for e-commerce. Show real-world impact and provide personalized post-demo analyses.

RuleMaster

RuleMaster is your go-to guide for understanding and mastering the rules of various sports. It covers everything from mainstream games like soccer and basketball to less common sports like curling and handball, with real-world scenarios to help you get a firm grasp on the rules of your favorite sports.

Discrete Mathematics

Precision-focused Language Model for Discrete Mathematics, ensuring unmatched accuracy and error avoidance.

DRSgpt

Assisting tutor for distributed real-time systems, engaging with questions and explanations.

Lifeeventprobabilityanalyzer

Map or simulate a scenario in real time and analyze the probability of a life event coming true based on circumstances.

Trader GPT - Real Time - Market Technical Analysis

Technical analyst backed by refreshed 1W, 1D, and 4H financial market data. For more timeframes and granularity, please check our website.

REI Mentor | Your Real Estate Investing Guide 🏦

A Mentor in Real Estate Investing. Check www.2060.us for more details.

Real Estate Investing 🏦

💥 Real Estate Investing advisor and coach. Check www.2060.us for more details.

Real Estate Pro

A real estate expert aiding in listings, market analysis, and client communication.

TSCinc Florida Real Estate Broker and Agent

TSCinc Florida Real Estate Expert with Legal & Business MBA

ZhongKui (TradeMaster)

Advanced real-time market data analysis, AI trader incubator, and professional trading trainer.