Best AI tools for Accelerate Io

20 - AI Tool Sites

Builder.io

Builder.io is an AI-powered visual development platform that accelerates digital teams by providing design-to-code solutions. With Visual Copilot, users can transform Figma designs into production-ready code quickly and efficiently. The platform offers features like AI-powered design-to-code conversion, visual editing, and enterprise CMS integration. Builder.io enables users to streamline their development process and bring ideas to production in seconds.

Mobot

Mobot is an AI-powered platform that offers end-to-end mobile automation solutions. It utilizes AI-driven mechanical robots to automate critical aspects of the mobile ecosystem, enabling faster releases, fewer issues, and significant time and cost savings for engineering, product, and growth teams. Mobot provides managed and self-service mobile testing for engineering and marketing purposes, mobile campaign monitoring, and integrations with favorite apps, and it supports various use cases. The platform caters to different teams such as engineering, QA, product, marketing, and support, helping them achieve 100% testing coverage and streamline their workflows.

Shakudo

Shakudo is an AI application designed for critical infrastructure, offering a unified platform to build an ideal data stack. It provides various AI components such as AI Agents, Knowledge Graph, Vector Database, Workflow Automation, and Text to SQL. Shakudo caters to industries like Aerospace, Automotive & Transportation, Climate & Energy, Financial Services, Healthcare & Life Sciences, Manufacturing, Real Estate, and Retail, offering use cases like managing customer retention, personalizing learning pathways, and extracting key insights from financial documents. The platform also features case studies, white papers, and resources for in-depth learning and implementation.

Novita AI

Novita AI is an AI cloud platform offering Model APIs, Serverless, and GPU Instance services in a cost-effective and integrated manner to accelerate AI businesses. It provides optimized models for high-quality dialogue use cases, full spectrum AI APIs for image, video, audio, and LLM applications, serverless auto-scaling based on demand, and customizable GPU solutions for complex AI tasks. The platform also includes a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable services.

Alluxio

Alluxio is a data orchestration platform designed for the cloud, offering seamless access, management, and running of AI/ML workloads. Positioned between compute and storage, Alluxio provides a unified solution for enterprises to handle data and AI tasks across diverse infrastructure environments. The platform accelerates model training and serving, maximizes infrastructure ROI, and ensures seamless data access. Alluxio addresses challenges such as data silos, low performance, data engineering complexity, and high costs associated with managing different tech stacks and storage systems.

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

Prismic

Prismic is an AI-powered headless page builder designed for Next.js, Nuxt, and SvelteKit sites. It empowers marketing teams to create high-converting landing pages quickly while maintaining brand consistency. With features like live previews, a dynamic visual page builder, and scheduled releases, Prismic streamlines the process of publishing on-brand pages. For developers, Prismic offers a developer tool to build pre-approved components and automate repetitive tasks, resulting in faster website development. The application also includes a local developer tool for structuring content, defining components, and pushing them to the Page Builder, reducing time to launch by 65%.

Breadcrumbs

Breadcrumbs is a revenue acceleration platform that helps businesses optimize their entire sales and marketing funnel. It provides enterprise-grade lead scoring, allowing businesses to identify and prioritize their most promising leads. Breadcrumbs also offers a range of other features, such as data-driven model creation, unlimited workspaces and models, multi-variate testing, and integrations with a variety of marketing and sales tools. With Breadcrumbs, businesses can improve their lead quality, increase conversion rates, and accelerate revenue growth.

LeadShark

LeadShark is an AI-powered B2B sales automation tool that helps businesses automate lead generation processes. By using a Browser Extension powered by AI, LeadShark enables users to sit back and watch high-quality leads roll into their sales funnel. The tool offers features such as automated lead generation, opportunity dashboard for sales metrics, integrations with favorite browsers, and a Go-to-Market Strategy Template for enhancing marketing strategies. LeadShark aims to address the continuous need for finding high-quality leads in outbound sales by providing automated lead generation solutions based on the user's Ideal Customer Profile criteria.

ModernQuery

ModernQuery is an AI-powered search solution that enhances website search functionality with ChatGPT's conversational search technology. It offers a no-code solution for easy integration, allowing users to improve their on-site search experience without the need for technical expertise. With features like plug-and-play setup, manual search result adjustments, and autocomplete functionality, ModernQuery aims to provide a seamless and efficient search experience for website visitors. The application supports popular CMS platforms like WordPress and Drupal, as well as custom website integrations through JavaScript embedding.

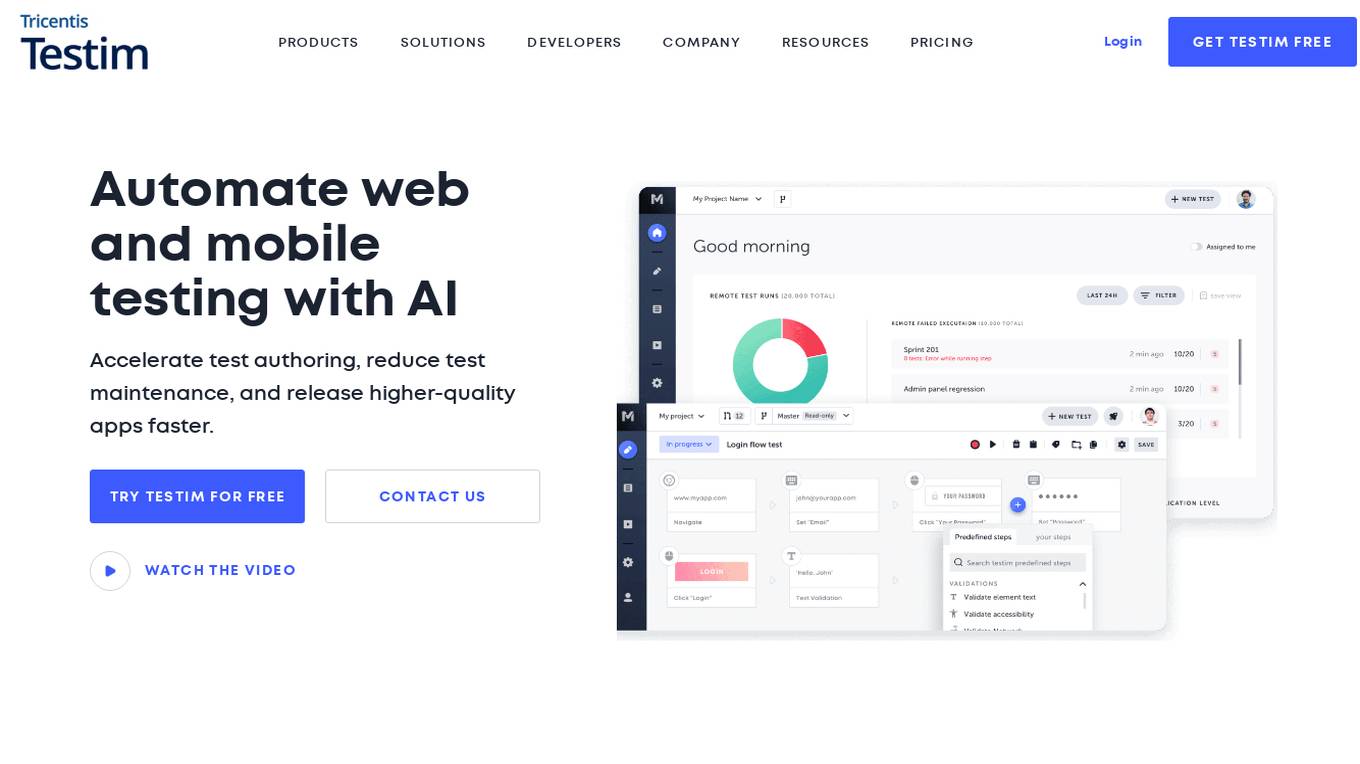

Testim

Testim is an AI-powered UI and functional testing platform that helps accelerate test authoring, reduce test maintenance, and release higher-quality apps faster. It offers a range of features such as fast authoring speed, test stability, root cause analysis, and TestOps, making it an efficient and effective solution for product development teams.

Bubble

Bubble is a no-code application development platform that allows users to build and deploy web and mobile applications without writing any code. It provides a visual interface for designing and developing applications, and it includes a library of pre-built components and templates that can be used to accelerate development. Bubble is suitable for a wide range of users, from beginners with no coding experience to experienced developers who want to build applications quickly and easily.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

ScholarAI

ScholarAI is an AI-powered scientific research tool that offers a wide range of features to help users navigate and extract insights from scientific literature. With access to over 200 million peer-reviewed articles, ScholarAI allows users to conduct abstract searches, literature mapping, PDF reading, literature reviews, gap analysis, direct Q&A, table and figure extraction, citation management, and project management. The tool is designed to accelerate the research process and provide tailored scientific insights to users.

Hippo Video

Hippo Video is an AI-powered video platform designed for Go-To-Market (GTM) teams. It offers a comprehensive solution for sales, marketing, campaigns, customer support, and communications. The platform enables users to create interactive videos easily and quickly, transform text into videos at scale, and personalize video campaigns. With features like Text-to-Video, AI Avatar Video Generator, Video Flows, and AI Editor, Hippo Video helps businesses enhance engagement, accelerate video production, and improve customer self-service.

Ideanote

Ideanote is an enterprise innovation and idea management software platform that helps businesses collect, prioritize, and implement ideas from customers, partners, and employees. It streamlines the innovation process by offering a structured, repeatable approach, incorporating AI to speed up ideation and implementation. Ideanote empowers companies of all sizes to accelerate their innovation, engage their crowd, measure impact, automate tasks, and use AI to innovate faster. With a focus on goal-driven idea collections, Ideanote provides a central home for ideas, making innovation accessible and efficient for all users.

Code99

Code99 is an AI-powered platform designed to speed up the development process by providing instant boilerplate code generation. It allows users to customize their tech stack, streamline development, and launch projects faster. It is ideal for startups, developers, and IT agencies looking to accelerate project timelines and improve productivity. The platform offers features such as authentication, database support, RESTful APIs, data validation, Swagger API documentation, email integration, state management, modern UI, clean code generation, and more. Users can generate production-ready apps in minutes, transform database schema into React or Nest.js apps, and unleash creativity through effortless editing and experimentation. Code99 aims to save time, avoid repetitive tasks, and help users focus on building their business effectively.

Trill

Trill is an AI-powered research assistant designed to streamline the user research process. It helps users move from interviews to insights quickly by providing relevant insights and observations based on project objectives. With features like instant themes and categories, organizing findings, and a user-friendly editor, Trill aims to simplify and accelerate the research analysis process. Currently in beta, Trill offers a free trial for users to experience its capabilities and provide feedback for further improvements.

Adjust

Adjust is an AI-driven platform that helps mobile app developers accelerate their app's growth through a comprehensive suite of measurement, analytics, automation, and fraud prevention tools. The platform offers unlimited measurement capabilities across various platforms, powerful analytics and reporting features, AI-driven decision-making recommendations, streamlined operations through automation, and data protection against mobile ad fraud. Adjust also provides solutions for iOS and SKAdNetwork success, CTV and OTT performance enhancement, ROI measurement, fraud prevention, and incrementality analysis. With a focus on privacy and security, Adjust empowers app developers to optimize their marketing strategies and drive tangible growth.

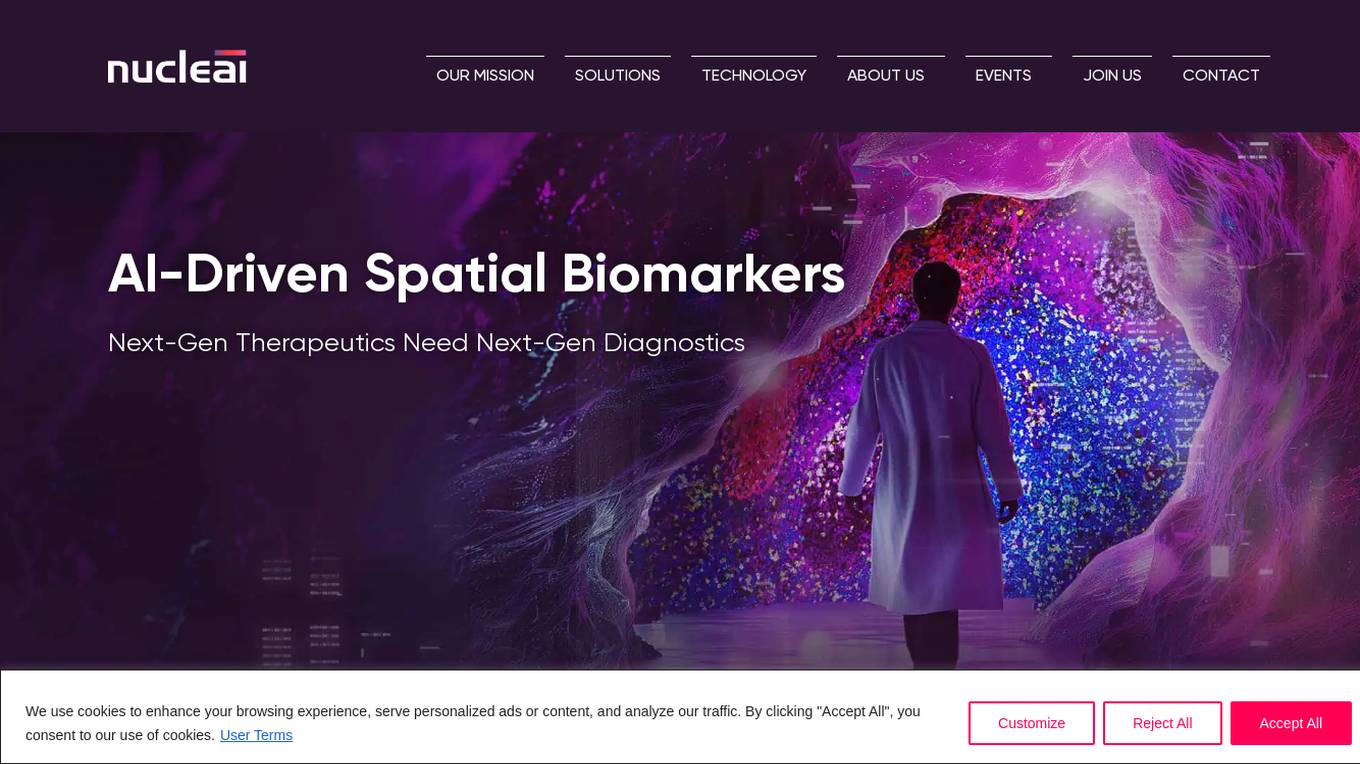

Nucleai

Nucleai is an AI-driven spatial biomarker analysis tool that leverages military intelligence-grade geospatial AI methods to analyze complex cellular interactions in a patient's biopsy. The platform offers a first-of-its-kind multimodal solution by ingesting images from various modalities and delivering actionable insights to optimize biomarker scoring, predict response to therapy, and revolutionize disease diagnosis and treatment.

1 - Open Source AI Tools

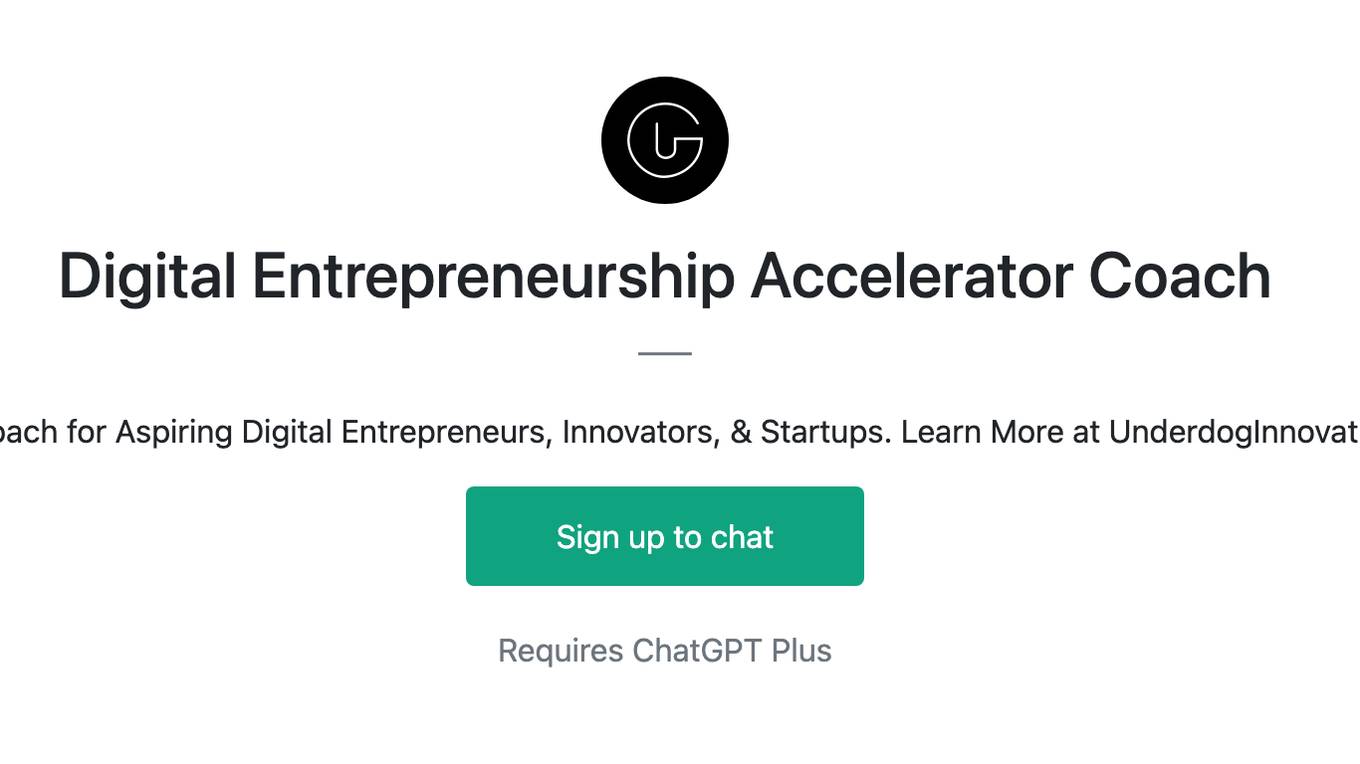

glake

GLake is an acceleration library and utilities designed to optimize GPU memory management and IO transmission for AI large model training and inference. It addresses challenges such as GPU memory bottleneck and IO transmission bottleneck by providing efficient memory pooling, sharing, and tiering, as well as multi-path acceleration for CPU-GPU transmission. GLake is easy to use, open for extension, and focuses on improving training throughput, saving inference memory, and accelerating IO transmission. It offers features like memory fragmentation reduction, memory deduplication, and built-in security mechanisms for troubleshooting GPU memory issues.

9 - OpenAI GPTs

ReliablyME Success Acceleration Coach

Guiding SMART commitments and offering ReliablyME or Calendly options.

Material Tailwind GPT

Accelerate web app development with Material Tailwind GPT's components - 10x faster.

Tourist Language Accelerator

Accelerates the learning of key phrases and cultural norms for travelers in various languages.

Digital Entrepreneurship Accelerator Coach

The Go-To Coach for Aspiring Digital Entrepreneurs, Innovators, & Startups. Learn More at UnderdogInnovationInc.com.

24 Hour Startup Accelerator

Niche-focused startup guide, humorous, strategic, simplifying ideas.

Backloger.ai - Product MVP Accelerator

Drop in any requirements or any text; I'll help you create an MVP with insights.

Digital Boost Lab

A guide for developing university-focused digital startup accelerator programs.