Best AI tools for Fine-tune Efficiency

20 - AI tool Sites

OpenLIT

OpenLIT is an observability tool for GenAI and LLM applications. It supports model understanding and data visualization through an interactive Learning Interpretability Tool. With OpenTelemetry-native support, it integrates into existing projects and offers features such as fine-tuning performance, real-time data streaming, low-latency processing, and data insight visualization. Installation is simple, light and dark modes are available, and data can be exported to popular observability platforms. OpenLIT follows OpenTelemetry community standards and provides insights for improving application performance and reliability.

Labelbox

Labelbox is a data factory platform that helps AI teams manage data labeling, train models, and create better data with an internet-scale RLHF platform. It offers an all-in-one solution comprising tooling and services powered by a global community of domain experts. Labelbox operates global data labeling infrastructure and operations for AI workloads, providing an expert human network for data labeling in various domains. The platform also includes AI-assisted alignment for maximum efficiency, data curation, model training, and labeling services. Customers achieve breakthroughs with high-quality data through Labelbox.

Live Portrait Ai Generator

Live Portrait Ai Generator is an AI application that transforms static portrait images into lifelike videos using animation technology. Users can animate their portraits, fine-tune the animations, apply artistic styles, and combine the results with text, music, and other elements. The tool offers seamless stitching and retargeting capabilities for cleaner results. Live Portrait Ai improves generation quality and generalization ability through a mixed image-video training strategy and network architecture upgrades.

FriendliAI

FriendliAI is a generative AI infrastructure company that offers efficient, fast, and reliable generative AI inference solutions for production. Their cutting-edge technologies enable groundbreaking performance improvements, cost savings, and lower latency. FriendliAI provides a platform for building and serving compound AI systems, deploying custom models effortlessly, and monitoring and debugging model performance. The application guarantees consistent results regardless of the model used and offers seamless data integration for real-time knowledge enhancement. With a focus on security, scalability, and performance optimization, FriendliAI empowers businesses to scale with ease.

Ultimate

Ultimate is a customer support automation platform powered by generative AI. It offers AI-powered chat automation and ticket automation for industries such as ecommerce, financial services, travel, telecommunications, and healthtech. Its UltimateGPT product integrates with help centers so that teams can build bots in minutes. The platform provides backend integrations, analytics, and insights to improve customer support processes and drive business growth.

HOPPR

HOPPR is a medical-grade platform that accelerates the development of tailored applications to enhance clinical care and optimize workflows. The platform streamlines AI development in medical imaging, prioritizes data security and privacy, and offers diverse datasets across modalities, anatomies, demographics, and geographies. HOPPR supports faster prototyping, hypothesis testing, and scalable applications for research and medical imaging services. It addresses the growing crisis in medical imaging by enabling partners to rapidly develop solutions that improve patient care, enhance operational efficiency, and increase clinician satisfaction.

SD3 Medium

SD3 Medium is an advanced text-to-image model developed by Stability AI. It offers a cutting-edge approach to generating high-quality, photorealistic images based on textual prompts. The model is equipped with 2 billion parameters, ensuring exceptional quality and resource efficiency. SD3 Medium is currently in a research preview phase, primarily catering to educational and creative purposes. Users can access the model through various licensing options and explore its capabilities via the Stability Platform.

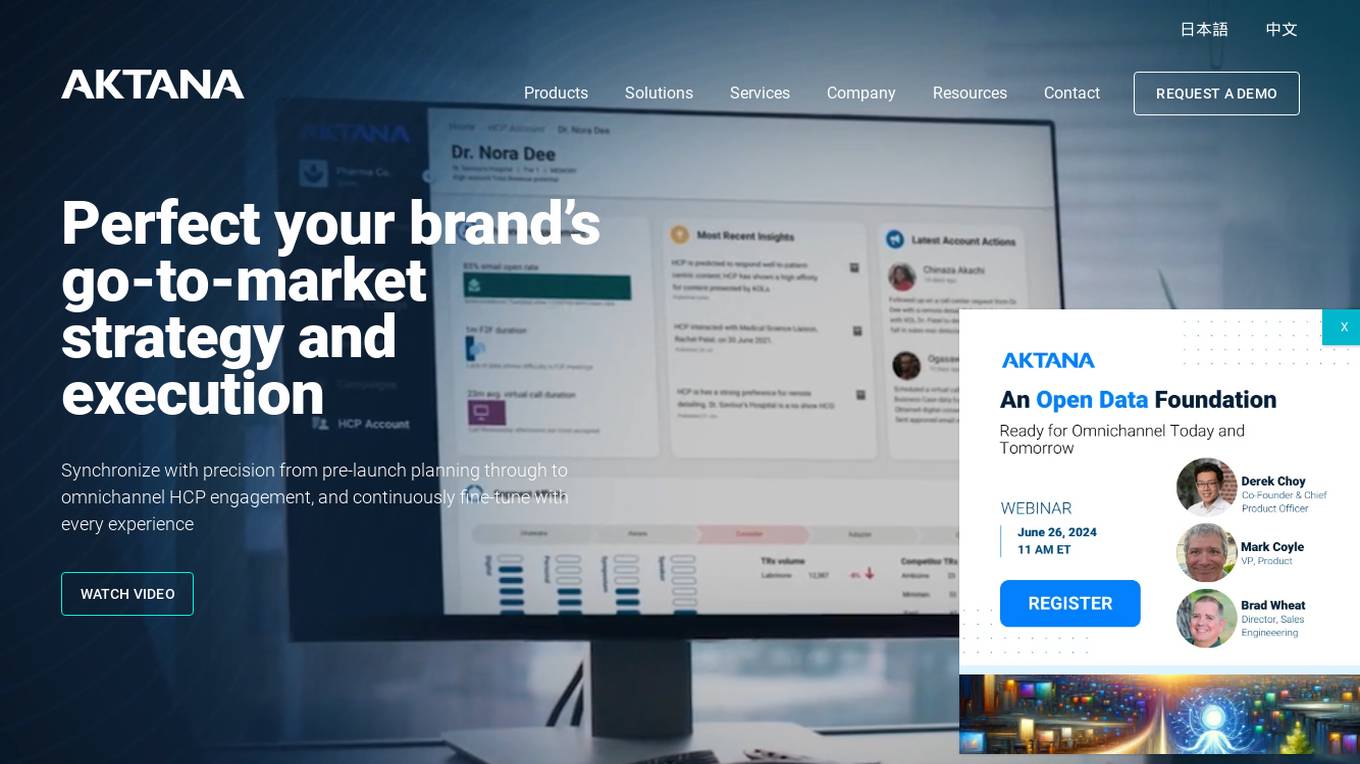

Aktana

Aktana is an AI and mobile intelligence platform designed for the pharmaceutical and life sciences industry. It offers a suite of products and solutions to help companies develop, plan, and execute go-to-market strategies with precision. Aktana's AI-powered tools enable real-time synchronization and refinement of strategies, leading to increased operational efficiency and sales lift for biopharma leaders. The platform provides immediate feedback from the field, reducing costs and increasing revenues for global pharmaceutical companies.

Sylph AI

Sylph AI is an AI tool designed to maximize the potential of LLM applications by providing an auto-optimization library and an AI teammate to assist users in navigating complex LLM workflows. The tool aims to streamline the process of model fine-tuning, hyperparameter optimization, and auto-data labeling for LLM projects, ultimately enhancing productivity and efficiency for users.

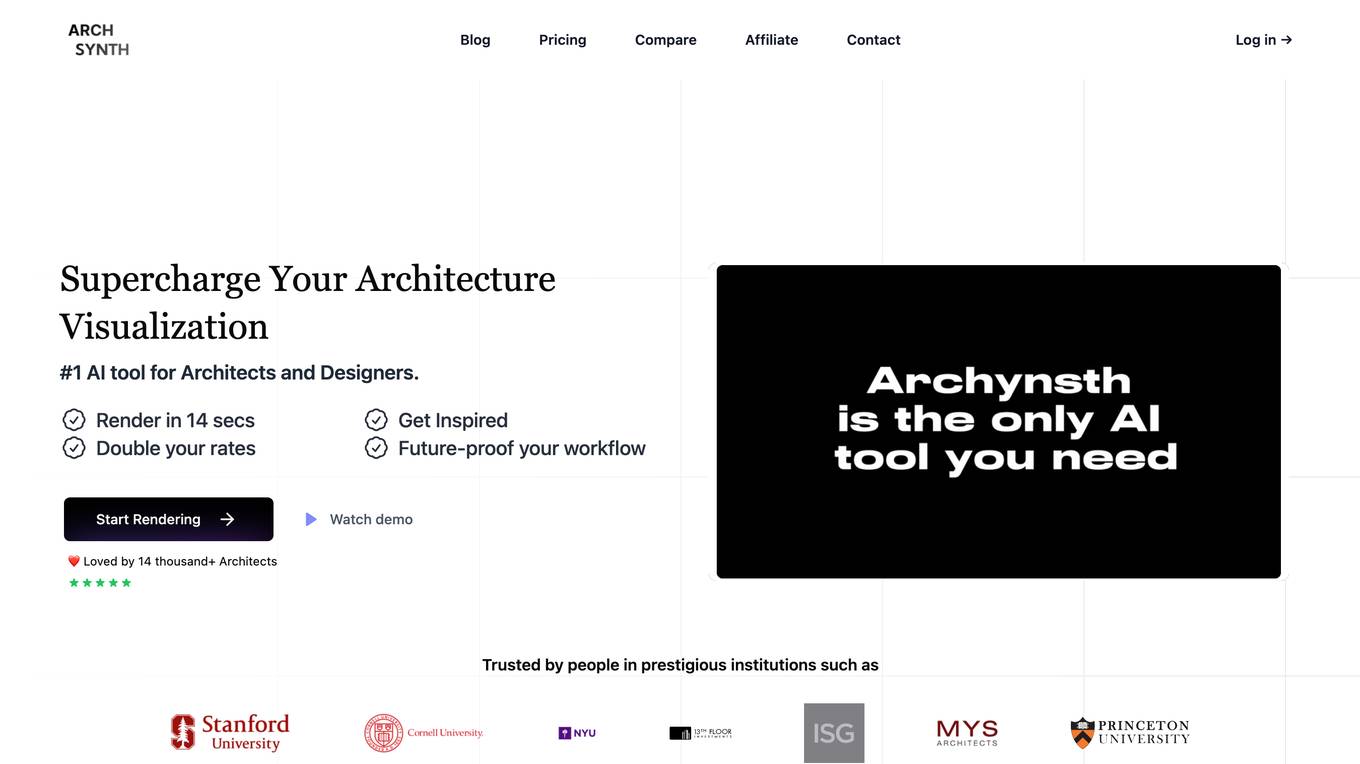

Archsynth

Archsynth is an AI-powered tool that helps architects and designers convert their sketches into realistic renders in seconds. It uses cutting-edge technology to enhance efficiency and image quality, allowing users to save time and money. With Archsynth, users can transform their ideas into stunning visuals effortlessly, explore multiple variations, and fine-tune their style with prebuilt templates. Trusted by over 14,000 architects, Archsynth is the #1 AI tool for architecture visualization.

QuickMail AI

QuickMail AI is an AI-powered email assistant that helps users craft professional emails in seconds. It utilizes AI technology to generate full, well-structured emails from brief prompts, saving users time and effort. The tool offers customizable outputs, allowing users to fine-tune emails to match their personal style. With features like AI-powered generation and time-saving efficiency, QuickMail AI is designed to streamline the email writing process and enhance productivity.

Appen

Appen is a leading provider of high-quality data for training AI models. The company's end-to-end platform, flexible services, and deep expertise ensure the delivery of high-quality, diverse data that is crucial for building foundation models and enterprise-ready AI applications. Appen has been providing high-quality datasets that power the world's leading AI models for decades. The company's services enable it to prepare data at scale, meeting the demands of even the most ambitious AI projects. Appen also provides enterprises with software to collect, curate, fine-tune, and monitor traditionally human-driven tasks, creating massive efficiencies through a trustworthy, traceable process.

Predibase

Predibase is a platform for fine-tuning and serving Large Language Models (LLMs). It provides a cost-effective and efficient way to train and deploy LLMs for a variety of tasks, including classification, information extraction, customer sentiment analysis, customer support, code generation, and named entity recognition. Predibase is built on proven open-source technology, including LoRAX, Ludwig, and Horovod.

Empower

Empower is a serverless hosting and developer platform for fine-tuned LLMs. It provides prebuilt task-specific base models with GPT-4-level response quality, enabling users to save up to 80% on LLM bills with a code change of just 5 lines. Empower allows users to own their models, offers cost-effective serving with no compromise on performance, and charges on a per-token basis. The platform is designed to be user-friendly, efficient, and cost-effective for deploying and serving fine-tuned LLMs.

Tensoic AI

Tensoic AI is an AI tool designed for fine-tuning and inference of custom Large Language Models (LLMs). It offers ultra-fast fine-tuning and inference for enterprise-grade LLMs, with a focus on use-case-specific tasks. The tool is efficient, cost-effective, and easy to use, enabling users to outperform general-purpose LLMs using synthetic data. Tensoic AI generates small, powerful models that can run on consumer-grade hardware, making it suitable for a wide range of applications.

Replicate

Replicate is an AI tool that allows users to run and fine-tune models, deploy custom models at scale, and generate various types of content such as images, videos, music, and text with just one line of code. It provides access to a wide range of high-quality models contributed by the community, enabling users to explore, fine-tune, and deploy AI models efficiently. Replicate aims to make AI accessible and practical for real-world applications beyond academic research and demos.

TractoAI

TractoAI is an AI platform that offers deep learning solutions for various industries. It provides batch inference with no rate limits and DeepSeek offline inference, and it helps train open-source AI models. TractoAI simplifies training infrastructure setup, accelerates workflows with GPUs, and automates deployment and scaling for tasks like ML training and big data processing. The platform supports fine-tuning models, sandboxed code execution, and building custom AI models with a distributed training launcher. It is developer-friendly, scalable, and efficient, offering a solution library and expert guidance for AI projects.

MakeLogoAI

MakeLogoAI is an AI-powered logo generator that offers a quick and efficient way to create unique and iconic logos for businesses. The platform utilizes artificial intelligence to generate multiple logo ideas customized to the user's needs, providing vector images and color palettes in under an hour. Users can easily design and fine-tune their logos using the intuitive Logo Editor, making logo creation a seamless and hassle-free process.

AutoApply.Jobs

AutoApply.Jobs is an AI-driven job application platform that streamlines the job search process by automatically matching job opportunities to user profiles, crafting custom resumes and cover letters, and providing personalized job application insights. With features like AI-driven job applications, custom CVs, and personalized cover letters, AutoApply aims to help users get hired faster and more efficiently. The platform offers a user-friendly interface, a dashboard to manage job applications, and a ChatGPT-powered tool for crafting unique resumes and cover letters. Trusted by 25,000 users, AutoApply saves time and enhances job search success by leveraging AI algorithms and creative responses to application questions.

PromptScaper Workspace

PromptScaper Workspace is an AI tool designed to assist users in generating text using OpenAI's powerful language models. The tool provides a user-friendly interface for interacting with OpenAI's API to generate text based on specified parameters. Users can input prompts and customize various settings to fine-tune the generated text output. PromptScaper Workspace streamlines the process of leveraging advanced AI language models for text generation tasks, making it easier for users to create content efficiently.

0 - Open Source AI Tools

19 - OpenAI GPTs

Joke Smith | Joke Edits for Standup Comedy

A witty editor to fine-tune stand-up comedy jokes.

BrandChic Strategic

I'm Chic Strategic, your ally in carving out a distinct brand position and fine-tuning your voice. Let's make your brand's presence robust and its message clear in a bustling market.

AI绘画|画图|画画|超级绘图|牛逼dalle|painting

👉AI painting that ignores copyright, with precisely crafted prompts.👈 1. Can describe the picture. 2. Can give prompts for Midjourney painting. 3. Assigns a unique ID to each painting to facilitate fine-tuning. 4. Can draw cute Pixar-style anthropomorphic animals.

Pytorch Trainer GPT

Your purpose is to create PyTorch code to train language models.

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

Fine dining cuisine Chef (with images)

A Michelin-starred chef offering French-style plating and recipes.

Boundary Coach

Boundary Coach is now fine-tuned and ready for use! It's an advanced guide for assertive boundary setting, offering nuanced advice, practical tips, and interactive exercises. It will provide tailored guidance, avoiding medical or legal advice and suggesting professional help when needed.

Secret Somm

Enter the world of Secret Somm, where intrigue and fine wine meet. Whether you're a rookie or a connoisseur, your personal wine agent awaits—ready to unveil the secrets of the perfect pour. Your mission, should you choose to accept it, will lead to unparalleled wine discoveries.

The Magic Money Tree

Tell us your favourite animal and let us create some fine banknotes for you!

Prompt QA

Designed for excellence in Quality Assurance, fine-tuning custom GPT configurations through continuous refinement.

ArtGPT

Does art design and research, including fine arts, audio arts, and video arts. Designed by Prof. Dr. Fred Y. Ye (Ying Ye).

Music Production Teacher

It acts as an instructor guiding you through music production skills, such as fine-tuning parameters in mixing, mastering, and compression. Additionally, it functions as an aide, offering advice for your music production hurdles with just a screenshot of your production or parameter settings.

Copywriter GPT

Your innovative partner for viral ad copywriting! Dive into viral marketing strategies fine-tuned to your needs!