Best AI tools for Benchmark Models

20 - AI tool Sites

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing large language models (LLMs) from different providers. It lets users combine models to get faster, cheaper, and better responses, optimizing for quality, speed, and cost. Unify simplifies the task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.
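To make the idea of routing on quality, speed, and cost concrete, here is a minimal sketch of a weighted-score router. It is not Unify's API or algorithm: the `ModelStats` fields, the `route` function, the model names, and all numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelStats:
    """Hypothetical per-model measurements (not real benchmark data)."""
    name: str
    quality: float      # benchmark score in [0, 1], higher is better
    latency_s: float    # seconds per response, lower is better
    cost_per_1k: float  # USD per 1k tokens, lower is better


def _normalize(values, higher_is_better):
    """Min-max scale values to [0, 1] so that 1.0 is always the best."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0 for _ in values]
    if higher_is_better:
        return [(v - lo) / (hi - lo) for v in values]
    return [(hi - v) / (hi - lo) for v in values]


def route(models, w_quality=1.0, w_speed=1.0, w_cost=1.0):
    """Return the model with the highest weighted score."""
    if not models:
        raise ValueError("no candidate models")
    quality = _normalize([m.quality for m in models], True)
    speed = _normalize([m.latency_s for m in models], False)
    cost = _normalize([m.cost_per_1k for m in models], False)
    scores = [
        w_quality * q + w_speed * s + w_cost * c
        for q, s, c in zip(quality, speed, cost)
    ]
    best = max(range(len(models)), key=scores.__getitem__)
    return models[best]


# Assumed example candidates; names and figures are made up.
CANDIDATES = [
    ModelStats("model-a", quality=0.90, latency_s=2.0, cost_per_1k=0.030),
    ModelStats("model-b", quality=0.80, latency_s=0.5, cost_per_1k=0.002),
    ModelStats("model-c", quality=0.70, latency_s=0.3, cost_per_1k=0.001),
]

if __name__ == "__main__":
    print(route(CANDIDATES).name)
    print(route(CANDIDATES, w_quality=1.0, w_speed=0.0, w_cost=0.0).name)
```

Raising one weight relative to the others shifts the choice toward models that do well on that axis, which is one simple way to read "personalized routing".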

Gorilla

Gorilla is an AI tool that connects a large language model (LLM) to a massive number of APIs so that users can interact with a wide range of services. Its features include training the model to support parallel function calls, benchmarking LLMs on function-calling capabilities, and a runtime for executing LLM-generated actions such as code and API calls. Gorilla is open-source and focuses on enhancing interaction between apps and services with human-out-of-loop functionality.
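As a rough illustration of what benchmarking function-calling can involve, the sketch below scores a model's emitted call against an expected call by exact match on function name and arguments. This is a simplified assumption, not Gorilla's actual evaluation method; the JSON shape (`name`, `arguments`) and the helper names `call_matches` and `accuracy` are hypothetical.

```python
import json


def call_matches(predicted_json: str, expected: dict) -> bool:
    """True if the model output parses to the expected name and arguments."""
    try:
        predicted = json.loads(predicted_json)
    except json.JSONDecodeError:
        return False  # unparseable output counts as a miss
    if not isinstance(predicted, dict):
        return False
    return (
        predicted.get("name") == expected["name"]
        and predicted.get("arguments") == expected["arguments"]
    )


def accuracy(cases) -> float:
    """Fraction of (model_output, expected_call) pairs that match exactly."""
    if not cases:
        return 0.0
    hits = sum(call_matches(output, expected) for output, expected in cases)
    return hits / len(cases)


# Made-up test cases for illustration only.
EXPECTED = {"name": "get_weather", "arguments": {"city": "Paris"}}
CASES = [
    ('{"name": "get_weather", "arguments": {"city": "Paris"}}', EXPECTED),
    ('{"arguments": {"city": "Paris"}, "name": "get_weather"}', EXPECTED),
    ('{"name": "get_weather", "arguments": {"city": "Rome"}}', EXPECTED),
    ("get_weather(Paris)", EXPECTED),
]

if __name__ == "__main__":
    print(accuracy(CASES))
```

Exact matching is the strictest possible scoring; a real benchmark may also accept equivalent argument values or check whether the call executes successfully.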

Groq

Groq is a fast AI inference tool that offers the GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It provides instant intelligence for openly-available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq powers leading openly-available AI models and has gained recognition in the AI chip industry. The company has raised significant funding at a high valuation, positioning itself as a strong challenger to established players like Nvidia.

Embedl

Embedl is an AI tool that specializes in developing advanced solutions for efficient AI deployment in embedded systems. With a focus on deep learning optimization, Embedl offers a cost-effective solution that reduces energy consumption and accelerates product development cycles. The platform caters to industries such as automotive, aerospace, and IoT, providing cutting-edge AI products that drive innovation and competitive advantage.

Spotrank.ai

Spotrank.ai is an AI-powered platform that provides advanced analytics and insights for businesses and individuals. It leverages artificial intelligence algorithms to analyze data and generate valuable reports to help users make informed decisions. The platform offers a user-friendly interface and customizable features to cater to diverse needs across various industries. Spotrank.ai is designed to streamline data analysis processes and enhance decision-making capabilities through cutting-edge AI technology.

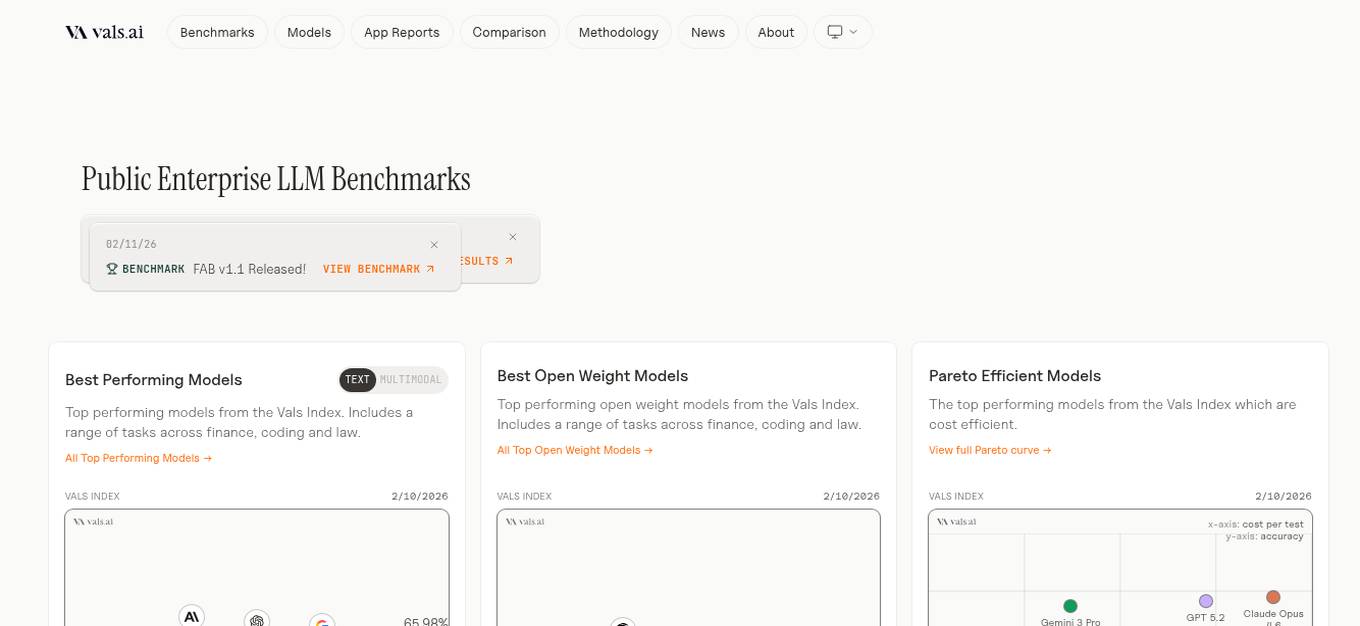

Vals AI

Vals AI is an advanced AI tool that provides benchmark reports and comparisons for various models in the fields of finance, coding, and law. The platform offers insights into the performance of different AI models across different tasks and industries. Vals AI aims to bridge the gap in model benchmarking and provide valuable information for users looking to evaluate and compare AI models for specific tasks.

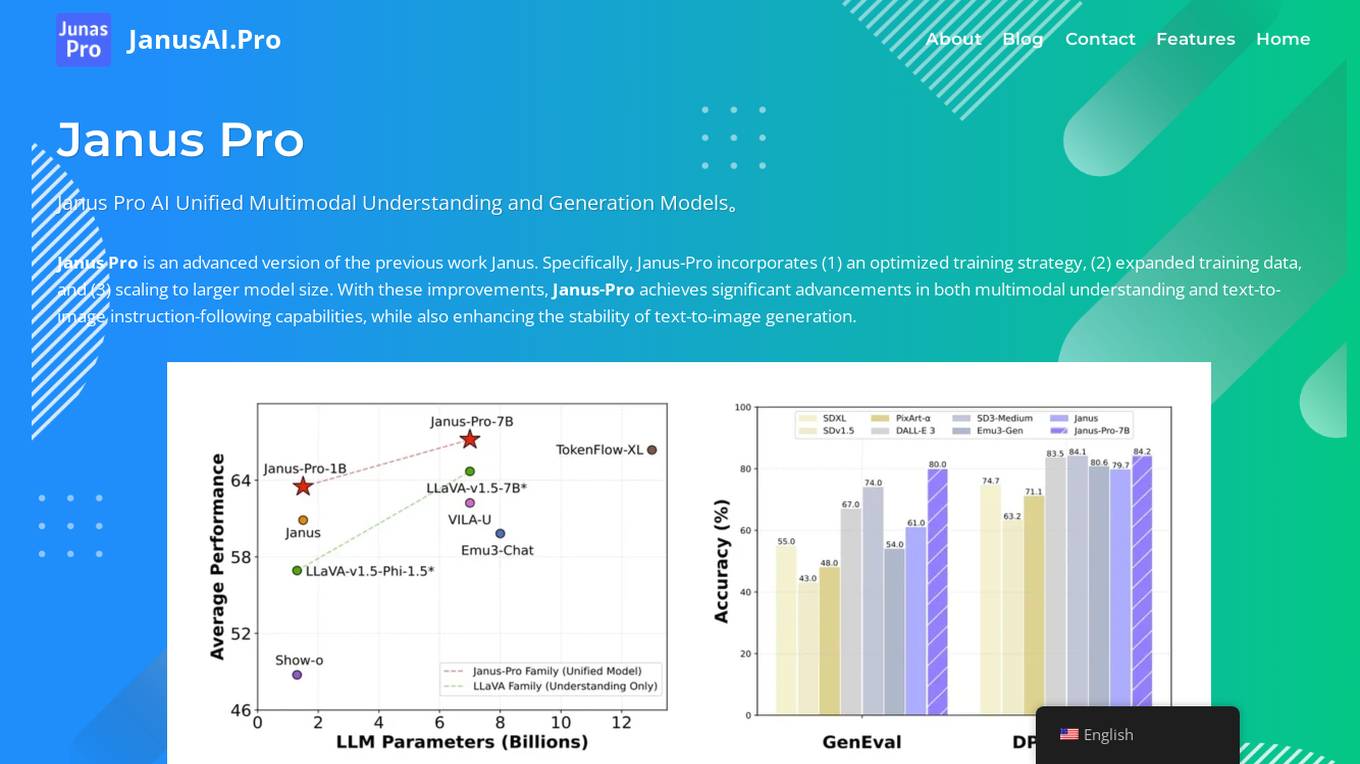

Janus Pro AI

Janus Pro AI is an advanced unified multimodal AI model that combines image understanding and generation capabilities. It incorporates optimized training strategies, expanded training data, and larger model scaling to achieve significant advancements in both multimodal understanding and text-to-image generation tasks. Janus Pro features a decoupled visual encoding system, outperforming leading models like DALL-E 3 and Stable Diffusion in benchmark tests. It offers open-source compatibility, vision processing specifications, cost-effective scalability, and an optimized training framework.

Hailo Community

Hailo Community is an AI tool designed for developers and enthusiasts working with the Raspberry Pi and the Hailo-8L AI Kit. The platform offers resources, benchmarks, and support for training custom models, optimizing AI tasks, and troubleshooting errors related to Hailo and Raspberry Pi integration.

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge Dataset is a project initiated by Facebook AI to accelerate the development of new ways to detect deepfake videos. The dataset consists of over 100,000 videos and was created in collaboration with industry leaders and academic experts. It includes two versions: a preview dataset with 5k videos and a full dataset with 124k videos, each featuring facial modification algorithms. The dataset was used in a Kaggle competition to create better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but faced challenges when tested against the black box dataset, highlighting the importance of generalization in deepfake detection. The project aims to encourage the research community to continue advancing in detecting harmful manipulated media.

Perspect

Perspect is an AI-powered platform designed for high-performance software teams. It offers real-time insights into team contributions and impact, optimizes developer experience, and rewards high performers. With 50+ integrations, Perspect visualizes impact, benchmarks performance, and uses machine learning models to identify and eliminate blockers. The platform is deeply integrated with web3 wallets and offers built-in reward mechanisms. Managers can align resources around crucial KPIs, identify top talent, and prevent burnout. Perspect aims to enhance team productivity and employee retention through AI and ML technologies.

Weavel

Weavel is an AI tool designed to revolutionize prompt engineering for large language models (LLMs). It offers features such as tracing, dataset curation, batch testing, and evaluations to enhance the performance of LLM applications. Weavel enables users to continuously optimize prompts using real-world data, prevent performance regression with CI/CD integration, and engage in human-in-the-loop interactions for scoring and feedback. Ape, the AI prompt engineer, outperforms competitors on benchmark tests and ensures seamless integration and continuous improvement specific to each user's use case. With Weavel, users can effortlessly evaluate LLM applications without the need for pre-existing datasets, streamlining the assessment process and enhancing overall performance.

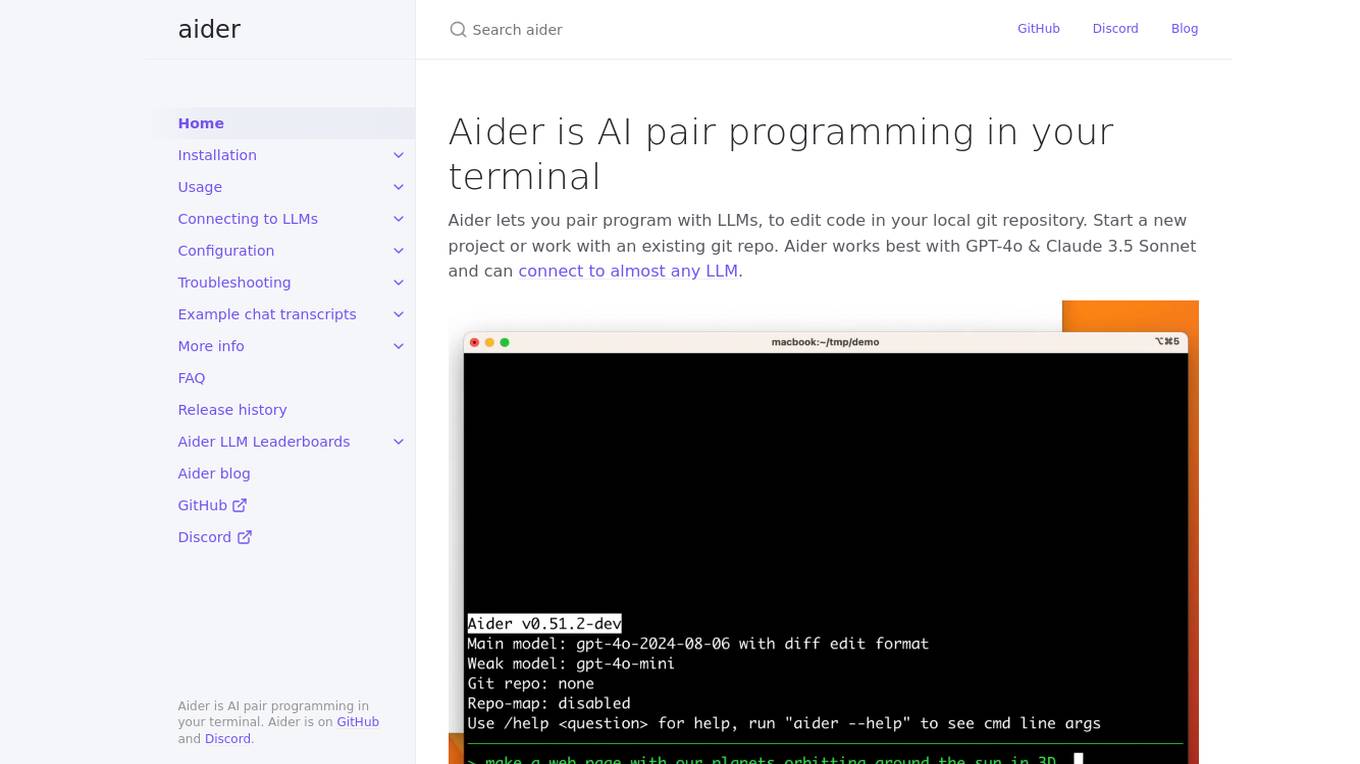

Aider

Aider is an AI pair programming tool that allows users to collaborate with large language models (LLMs) to edit code in their local git repository. It supports popular languages like Python, JavaScript, TypeScript, PHP, HTML, and CSS. Aider can handle complex requests, automatically commit changes, and work well in larger codebases by using a map of the entire git repository. Users can edit files while chatting with Aider, add images and URLs to the chat, and even code using their voice. Aider has received positive feedback from users for its productivity-enhancing features and performance on software engineering benchmarks.

Groq

Groq is a fast AI inference tool that offers instant intelligence for openly-available models like Llama 3.1. It provides ultra-low-latency inference for cloud deployments and is compatible with other providers like OpenAI. Groq cites independent benchmarks as evidence of its speed, and it powers leading openly-available AI models such as Llama, Mixtral, Gemma, and Whisper. The tool has gained recognition in the industry for its high-speed inference compute capabilities and has received significant funding to challenge established players like Nvidia.

ApX Machine Learning

ApX Machine Learning is a comprehensive resource for AI students, developers, and researchers. It provides a wide range of courses, tools, and benchmarks for machine learning and artificial intelligence. The platform also aims to enhance the capabilities of existing large language models (LLMs) through the Model Context Protocol (MCP), providing access to resources and tools that improve LLM performance and efficiency.

Waikay

Waikay is an AI tool designed to help individuals, businesses, and agencies gain transparency into what AI knows about their brand. It allows users to manage reputation risks, optimize strategic positioning, and benchmark against competitors with actionable insights. By providing a comprehensive analysis of AI mentions, citations, implied facts, and competition, Waikay ensures a 360-degree view of model understanding. Users can easily track their brand's digital footprint, compare with competitors, and monitor their brand and content across leading AI search platforms.

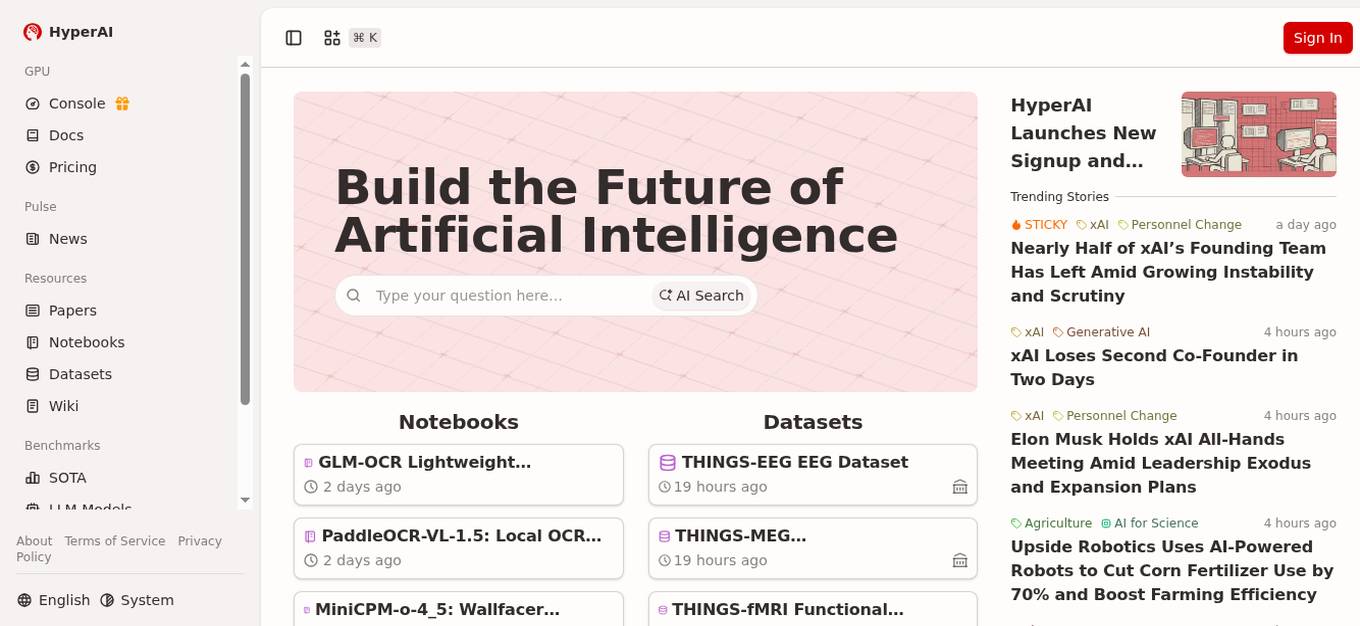

HyperAI

HyperAI is an advanced AI tool designed to accelerate AI development by providing a free AI co-coding environment, out-of-the-box solutions, and cost-effective GPU pricing. It offers cutting-edge AI research papers, benchmarks, and models to help users stay updated with the latest trends and advancements in the field of artificial intelligence.

Reflection 70B

Reflection 70B is a next-gen open-source LLM built on Llama 70B, offering self-correction capabilities that it claims outperform GPT-4. It provides AI-powered conversations and assists with research, coding, creative writing, and problem-solving, using its self-correction mechanism to improve accuracy and reliability. Reflection 70B is designed to enhance productivity and decision-making across multiple domains.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy to ensure everyone can benefit. It focuses on areas such as skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. The organization supports global AI skill-building, education, and exploratory research; creates benchmarks and tools for safe generative AI; builds capable tools for AI model builders and developers; fosters an AI hardware accelerator ecosystem; enables open foundation models and datasets; and advocates for regulatory policies that support healthy AI ecosystems.

Lucid Engine

Lucid Engine is an AI tool designed to optimize digital presence for better visibility in AI-generated answers. It helps users track their citations across AI search engines, benchmark competitors, and prioritize actions to improve recommendations. The tool offers features such as Visibility Audit, Competitor Radar, and Action Backlog to enhance AI visibility and competitiveness. Lucid Engine provides real-time monitoring of strategic prompts across multiple AI engines, enabling users to stay ahead of competitors and model shifts.

JaanchAI

JaanchAI is an AI-powered tool that provides valuable insights for e-commerce businesses. It utilizes artificial intelligence algorithms to analyze data and trends in the e-commerce industry, helping businesses make informed decisions to optimize their operations and increase sales. With JaanchAI, users can gain a competitive edge by leveraging advanced analytics and predictive modeling techniques tailored for the e-commerce sector.

10 - OpenAI GPTs

HVAC Apex

A benchmark HVAC GPT model with unmatched expertise and forward-thinking solutions, powered by OpenAI.

SaaS Navigator

A strategic SaaS analyst for CXOs, with a focus on market trends and benchmarks.

Transfer Pricing Advisor

Guides businesses in managing global tax liabilities efficiently.

Salary Guides

I provide monthly salary data in euros, using a structured format for global job roles.

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.