Best AI tools for Token Analyst

20 - AI tool Sites

Token Metrics

Token Metrics is an AI-powered crypto trading and research platform that provides users with real-time market trends, live trade alerts, and AI grades to help make informed investment decisions. With features like AI ratings, personalized research, and trading signals, Token Metrics aims to empower users to navigate the cryptocurrency markets effectively. Trusted by over 70,000 crypto investors, Token Metrics offers a comprehensive suite of tools and resources to enhance crypto investment strategies.

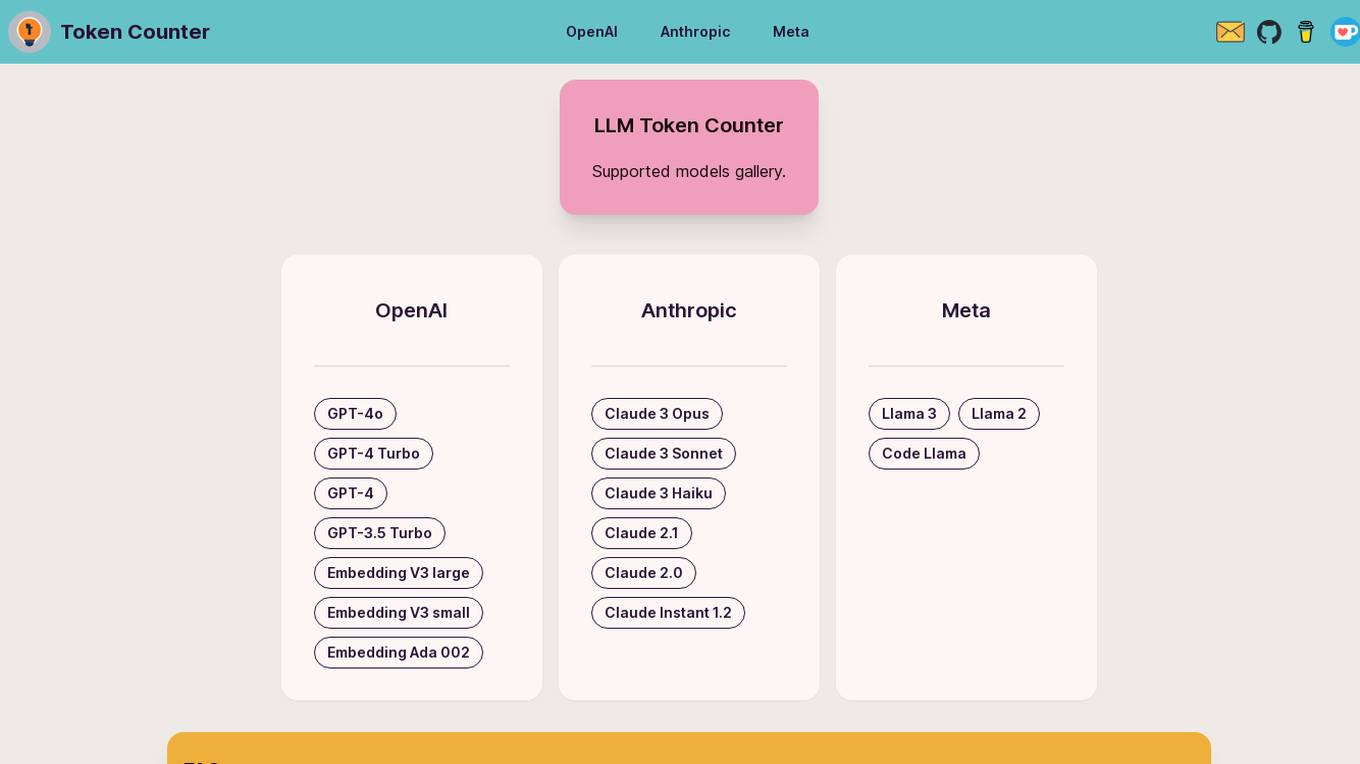

Token Counter

Token Counter is an AI tool designed to convert text input into tokens for various AI models. It helps users accurately determine the token count and associated costs when working with AI models. By providing insights into tokenization strategies and cost structures, Token Counter streamlines the process of utilizing advanced technologies.
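
As a rough illustration of the count-to-cost step such a tool performs, the sketch below multiplies token counts by per-million-token prices. The function name `estimate_cost` and the prices are hypothetical placeholders, not Token Counter's actual rates or API, and the token counts are assumed to come from a real tokenizer.

```python
from decimal import Decimal


def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: Decimal,
                  output_price_per_million: Decimal) -> Decimal:
    """Return the estimated cost of one request, in currency units."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    million = Decimal(1_000_000)
    # Input and output tokens are commonly priced separately, so sum both parts.
    total = (Decimal(input_tokens) * input_price_per_million
             + Decimal(output_tokens) * output_price_per_million)
    return total / million


# Hypothetical prices: 3.00 per million input tokens, 15.00 per million output tokens.
cost = estimate_cost(1_200, 300, Decimal("3.00"), Decimal("15.00"))
print(cost)
```

`Decimal` is used instead of `float` so that small per-token amounts do not pick up binary rounding noise.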

LLM Token Counter

The LLM Token Counter is a sophisticated tool designed to help users effectively manage token limits for various Language Models (LLMs) like GPT-3.5, GPT-4, Claude-3, Llama-3, and more. It utilizes Transformers.js, a JavaScript implementation of the Hugging Face Transformers library, to calculate token counts client-side. The tool ensures data privacy by not transmitting prompts to external servers.
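
Once a token count is known, managing a token limit comes down to a budget check. This is a minimal sketch, assuming the count has already been computed by a real tokenizer. The name `fits_context`, the 8,192-token window, and the reserved output size are hypothetical values for illustration, not figures from the tool.

```python
def fits_context(prompt_tokens: int, context_window: int,
                 reserved_for_output: int = 0) -> bool:
    """Return True if the prompt plus reserved output space fits the window."""
    if min(prompt_tokens, context_window, reserved_for_output) < 0:
        raise ValueError("values must be non-negative")
    return prompt_tokens + reserved_for_output <= context_window


# Hypothetical 8,192-token window with 1,000 tokens held back for the reply.
print(fits_context(7_000, 8_192, reserved_for_output=1_000))
print(fits_context(7_500, 8_192, reserved_for_output=1_000))
```

Reserving space for the reply matters because many models count the prompt and the generated output against the same window.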

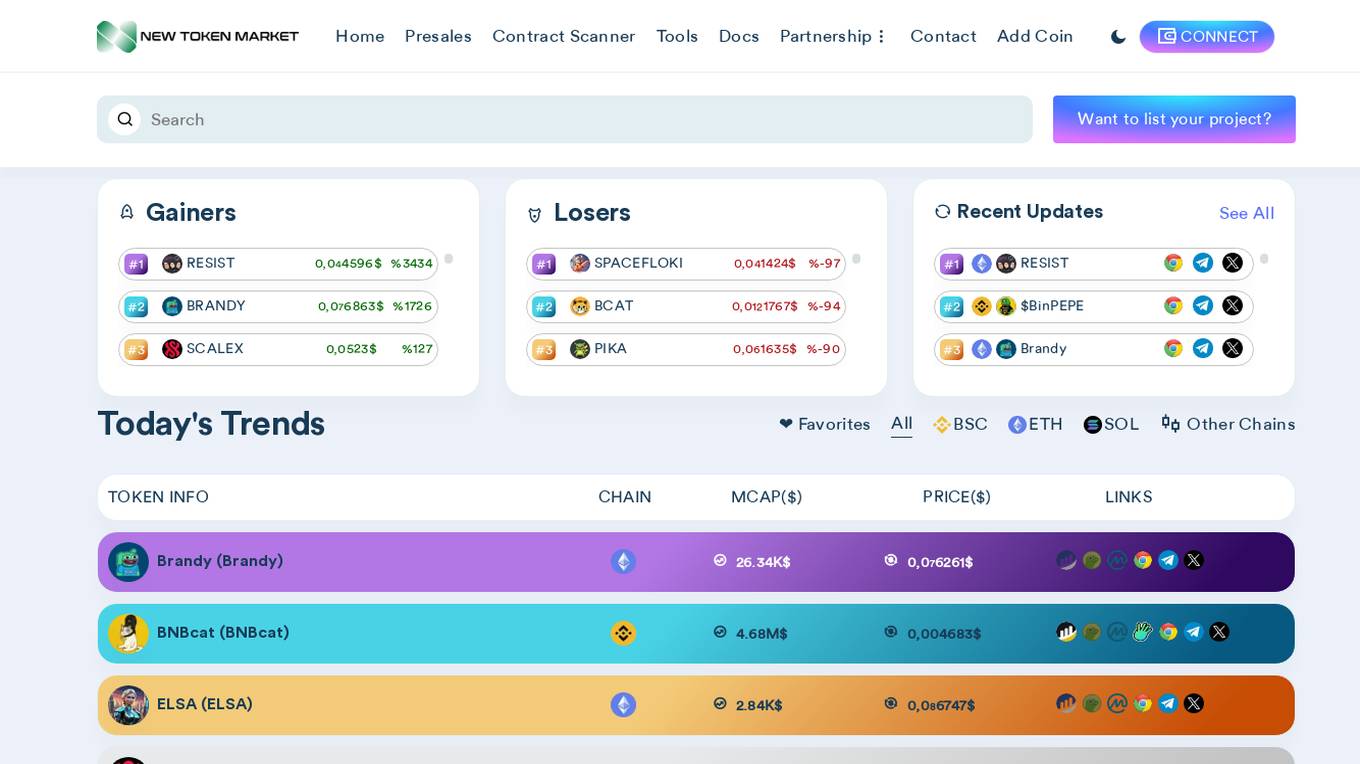

NTM.ai

NTM.ai is an AI-powered platform that provides tools and services for the cryptocurrency market. It offers features such as presales contract scanning, project listing, price tracking, and market analysis. The platform aims to assist users in making informed decisions and maximizing their investments in the volatile crypto market.

RejuveAI

RejuveAI is a decentralized token-based system that aims to democratize longevity globally. The Longevity App allows users to monitor essential health metrics, enhance lifespan, and earn RJV tokens. The application leverages revolutionary AI technology to analyze human body functions in depth, providing insights to help combat aging. RejuveAI collaborates with researchers, clinics, and data enthusiasts to ensure innovative outcomes are affordable and accessible. The platform also offers exclusive discounts on travel, supplements, medical tests, and longevity therapies.

Sopdap Technologies

Sopdap Technologies is a leading provider of Web3, AI, and Cybersecurity services. They specialize in Blockchain Technologies, Smart Contracts Creation and Auditing, KYC, Cybersecurity Services, Project Management, and AI Automation. The company offers customized solutions tailored to meet the specific needs of businesses, timely delivery, ongoing support, and maintenance. Their core service areas include Web3 Project Development, Cybersecurity Solutions, AI Solutions, Cloud Security and Infrastructure, and Data Privacy and Compliance Services.

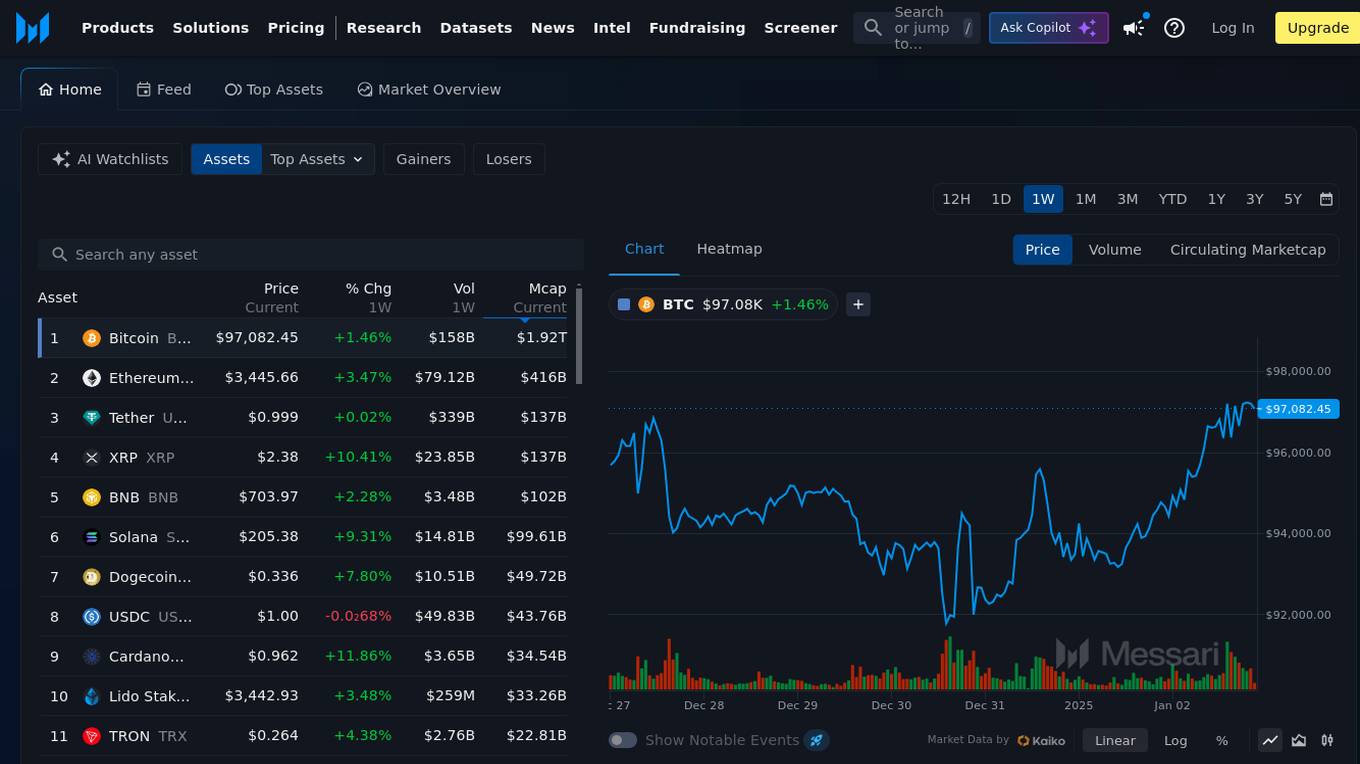

Messari

Messari is an AI-powered platform that provides comprehensive crypto research, reports, AI news, live prices, token unlocks, and fundraising data. It offers a wide range of tools for users to explore and analyze the cryptocurrency market. With features like AI summaries, personalized watchlists, comparative charts, and AI recaps, Messari aims to organize and contextualize all crypto information at a global scale.

Laika AI

Laika AI is the world's first Web3-modeled AI ecosystem, designed and optimized for Web3 and blockchain. It offers advanced on-chain AI tools, integrating artificial intelligence and blockchain data to provide users with insights into the crypto landscape. Laika AI stands out with its user-friendly browser extension that empowers users with advanced on-chain analytics without the need for complex setups. The platform continuously learns and improves, leveraging a unique foundation and proprietary algorithms dedicated to Web3. Laika AI offers features such as DeFi research, token contract analysis, wallet insights, AI alerts, and multichain swap capabilities. It is supported by strategic partnerships with leading companies in the Web3 and Web2 space, ensuring security, high performance, and accessibility for users.

CHAPTR

CHAPTR is an innovative AI solutions provider that aims to redefine work and fuel human innovation. They offer AI-driven solutions tailored to empower, innovate, and transform work processes. Their products are designed to enhance efficiency, foster creativity, and anticipate change in the modern workforce. CHAPTR's solutions are user-centric, secure, customizable, and backed by the Holtzbrinck Publishing Group. They are committed to relentless innovation and continuous advancement in AI technology.

Tensordyne

Tensordyne is a generative AI inference compute tool designed and developed in the US and Germany. It focuses on re-engineering AI math and defining AI inference to run the biggest AI models for thousands of users at a fraction of the rack count, power, and cost. Tensordyne offers custom silicon and systems built on the Zeroth Scaling Law, enabling breakthroughs in AI technology.

Basis Theory

Basis Theory is a token orchestration platform that helps businesses route transactions through multiple payment service providers (PSPs) and partners, enabling seamless subscription payments while maintaining PCI compliance. The platform offers secure and transparent payment flows, allowing users to connect to any partner or platform, collect and store card data securely, and customize payment strategies for various use cases. Basis Theory empowers high-risk merchants, subscription platforms, marketplaces, fintechs, and other businesses to optimize their payment processes and enhance customer experiences.

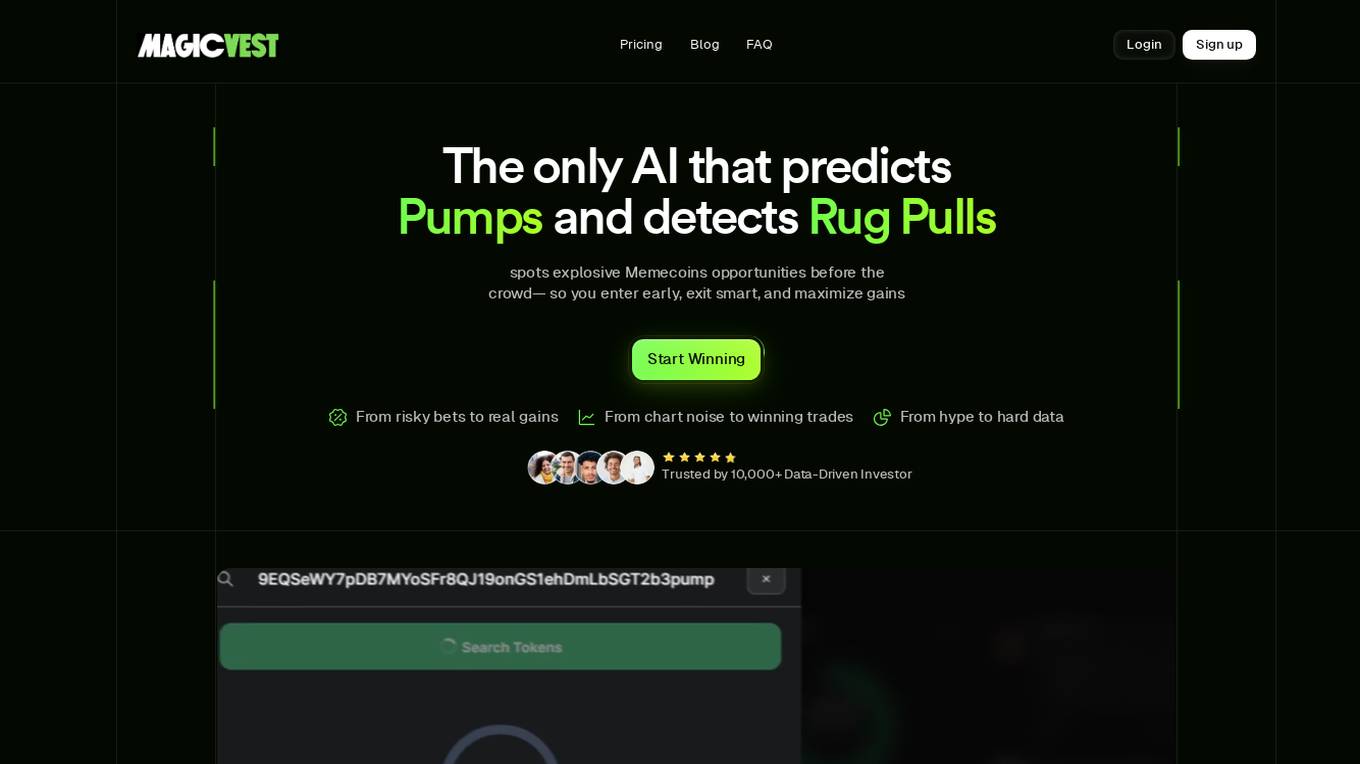

MagicVest

MagicVest is an AI-powered crypto intelligence tool that predicts profitable token movements before they happen and warns users about potential scams. It scans real-time signals across exchanges, social media, and smart contracts to provide high-accuracy signals. With features like MagicRadar, MagicDip, and MagicScore, MagicVest helps users make informed decisions in the volatile memecoin market.

Guru Network App

Guru Network App is an AI-powered platform that leverages blockchain technology to automate processes and provide rewards. The platform offers a new AI DexGuru, with access to the old DexGuru available through a legacy link. Users can engage in various actions on the platform, with a focus on analytics, token swapping, and leaderboard tracking. The platform aims to provide a seamless and efficient experience for users interested in blockchain technologies and decentralized finance.

Gain

Gain is an AI-powered hybrid finance platform that offers transparent investment opportunities for users to earn returns on their ETH and USDC. The platform integrates DeFi protocols with algorithmic trading to generate alpha for digital-asset pools. Gain sets a new industry standard with daily third-party audits, full reserve tokens, vetted pool managers, and community alignment through GAIN token holder voting. The platform aims for attractive returns while prioritizing community engagement and transparency.

Ethena Agents

Ethena Agents is an AI application designed to provide a comprehensive AI Layer for the Ethena network, offering a robust framework for modular chain economics. The platform enables users to build and launch AI models, access autonomous staking agents, and participate in the AI Agents Marketplace. With a focus on DeFAI (Decentralized Finance AI), Ethena Agents offers institutional-grade access to trillion-dollar market pools, maximizing staking APY and providing chain-agnostic asset management. Users can leverage the $ETAI token for infrastructure payments, token utility, staking rewards, and participation in decentralized governance. Additionally, the platform facilitates data/API connectivity, data aggregation, and real-time signal detection & analysis through AI-powered agents.

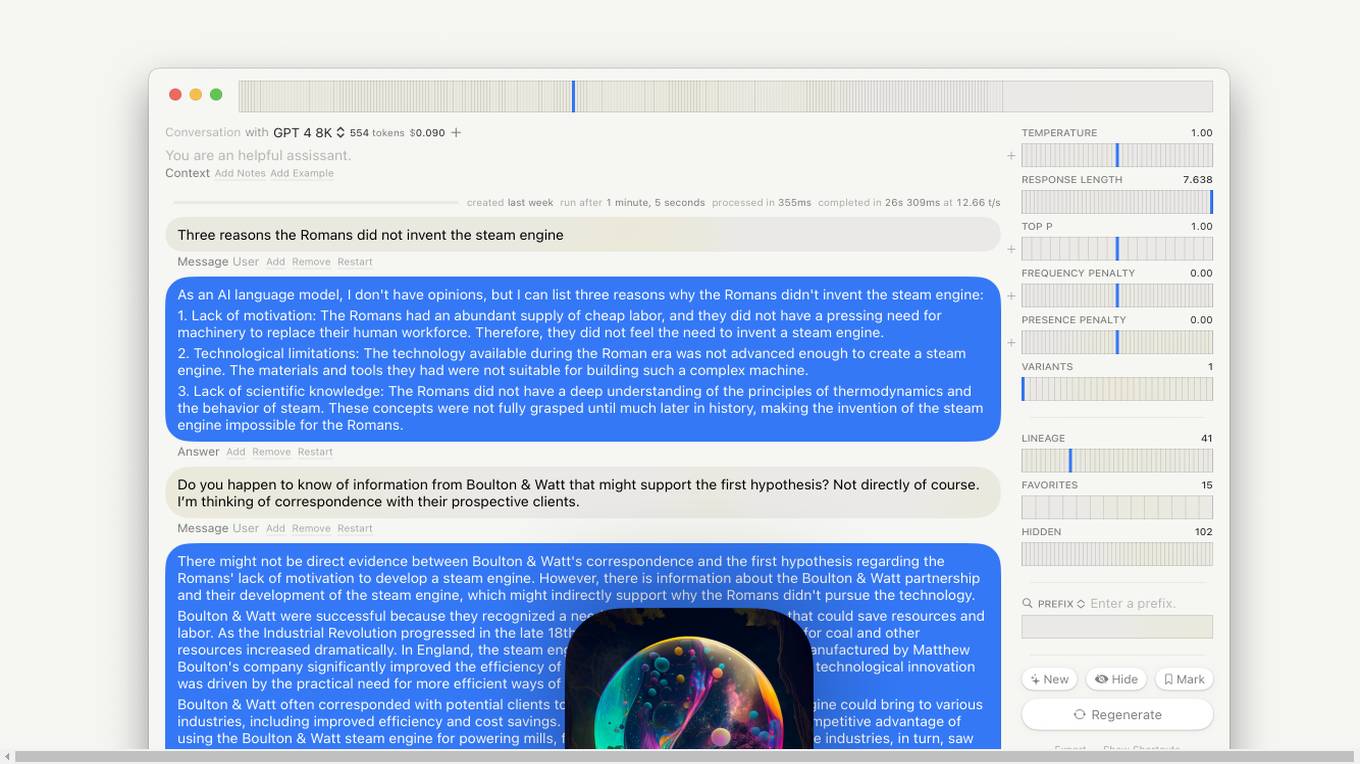

Lore macOS GPT-LLM Playground

Lore macOS GPT-LLM Playground is an AI tool designed for macOS users. Its listed features include Multi-Model, Time Travel, Versioning, Combinatorial Runs, Variants, Full-Text Search, Model-Cost Aware, API & Token Stats, Custom Endpoints, Local Models, and Tables. It provides a user-friendly interface with features like Syntax, LaTeX Notes Export, Shortcuts, Vim Mode, and Sandbox. The tool is built with Cocoa, SwiftUI, and SQLite, ensuring privacy and offering support & feedback.

Cupiee

Cupiee is an AI-powered emotion companion on Web3 that aims to support and relieve users' emotions. It offers features like creating personalized spaces, sharing feelings anonymously, earning rewards through activities, and using the CUPI token for transactions and NFT sales. The platform also includes a roadmap for future developments, such as chat with AI Pet, generative AI based on stories, and building a marketplace for NFT and AI Pet trading.

Awan LLM

Awan LLM is an LLM inference API platform for power users and developers that advertises unlimited tokens, unrestricted use, and cost-effective pricing. It allows users to generate unlimited tokens, use LLM models without constraints, and pay per month instead of per token. The platform supports use cases such as an AI Assistant, AI Agents, Roleplay with AI companions, Data Processing, Code Completion, and building profitable AI-powered applications.

Lisapet.AI

Lisapet.AI is an AI prompt testing suite designed for product teams to streamline the process of designing, prototyping, testing, and shipping AI features. It offers a comprehensive platform with features like best-in-class AI playground, variables for dynamic data inputs, structured outputs, side-by-side editing, function calling, image inputs, assertions & metrics, performance comparison, data sets organization, shareable reports, comments & feedback, token & cost stats, and more. The application aims to help teams save time, improve efficiency, and ensure the reliability of AI features through automated prompt testing.

GeoInfer

GeoInfer is a professional AI-powered geolocation platform that analyzes photographs to determine where they were taken. It uses visual-only inference technology to examine visual elements like architecture, terrain, vegetation, and environmental markers to identify geographic locations without requiring GPS metadata or EXIF data. The platform offers transparent accuracy levels for different use cases, including a Global Model with 1km-100km accuracy ideal for regional and city-level identification. Additionally, GeoInfer provides custom regional models for organizations requiring higher precision, such as meter-level accuracy for specific geographic areas. The platform is designed for professionals in various industries, including law enforcement, insurance fraud investigation, digital forensics, and security research.

0 - Open Source Tools

20 - OpenAI Gpts

Token Analyst

ERC20 analyst focusing on mintability, holders, LP tokens, and risks, with clear, conversational explanations.

Token Securities Insights

A witty, crypto-savvy GPT for token securities insights, balancing humor and professionalism.

STO Advisor Pro

Advisor on Security Token Offerings, providing insights without financial advice. Powered by Magic Circle

STO Platform

This GPT, combined into the 'STO-Platform', is designed to share expertise in security token offerings (STO).

Ethereum Blockchain Data (Etherscan)

Real-time Ethereum Blockchain Data & Insights (with Etherscan.io)

ChainBot

The assistant launched by ChainBot.io can help you analyze EVM transactions, providing blockchain and crypto info.

Crypto Co-Pilot

Crypto Co-Pilot: Elevate Your Crypto Journey! 🚀 Get instant insights on trending tokens, uncover hidden gems, and access the latest crypto news. Your go-to chatbot for savvy trading and crypto discoveries. Let's navigate the crypto market together! 💎📈

Dungeon Master Assistant

Enhance D&D campaigns with Roll20 setup and custom token creation.

TokenGPT

Guides users through creating Solana tokens from scratch with detailed explanations.

XRPL GPT

Build on the XRP Ledger with assistance from this GPT trained on extensive documentation and code samples.

Airdrop Hunter

Specialist in cryptocurrency airdrops, providing info and claiming assistance.

Creative Prompt Tokens Explorer

From @cure4hayley - A comprehensive exploration of words and phrases. Includes composite word fusion and emotion-focused. Can also try film, TV and book titles. Enjoy!

Sugma Discrete Math Solver

Powered by GPT-4 Turbo. 128,000 Tokens. Knowledge base of Discrete Math concepts, proofs and terminology. This GPT is instructed to carefully read and understand the prompt, plan a strategy to solve the problem, and write formal mathematical proofs.

Monster Battle - RPG Game

Train monsters, travel the world, earn Arena Tokens, and become the ultimate monster battling champion of Earth!