Best AI Tools for RAG Development

20 - AI tool Sites

RAG ChatBot

RAG ChatBot is a service that lets users train and share chatbots. It turns PDFs, URLs, and text into chatbots that can be embedded anywhere with an iframe. RAG ChatBot is designed to make knowledge sharing easier and more efficient. Its features for creating and managing chatbots include easy knowledge training, continuous improvement and optimization, seamless integration with OpenAI Custom GPTs, secure API key integration, and online privacy control.

Langflow

Langflow is a new, visual framework for building multi-agent and RAG applications. It is open-source, Python-powered, fully customizable, and LLM and vector store agnostic. Langflow empowers developers to rapidly prototype and build AI applications with its user-friendly interface and powerful features. Whether you're a seasoned AI developer or just starting out, Langflow provides the tools you need to bring your AI ideas to life.

ACHIV

ACHIV is an AI tool for idea validation and market research. It helps businesses make informed decisions based on real market needs by providing data-driven insights. The tool streamlines the market validation process, allowing quick adaptation and refinement of product development strategies. ACHIV combines its own approach to data collection and preprocessing with proprietary AI models for analysis and predictive forecasting. It is designed to help entrepreneurs understand market gaps, explore competitors, and improve investment decisions with real-time data.

Athina AI Hub

Athina AI Hub is a resource hub for AI development teams, offering AI development blogs, research papers, and original content. It covers technologies such as Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and AI agents. Athina AI Hub is aimed at AI engineers, researchers, data scientists, and product developers.

Activeloop

Activeloop is an AI tool that offers Deep Lake, a database for AI solutions across various industries such as agriculture, audio processing, autonomous vehicles, robotics, biomedical and healthcare, generative AI, multimedia, safety, and security. The platform provides features like fast AI search, faster data preparation, serverless DB for code assistant, and more. Activeloop aims to streamline data processing and enhance AI development for businesses and researchers.

Cohere

Cohere is an enterprise AI platform offering generative AI, search and discovery, and advanced retrieval solutions. With products like Cohere Command, Cohere Embed, and Cohere Rerank, the platform enables the development of scalable and efficient AI-powered applications. Cohere focuses on putting enterprise data to work through language models and supports over 100 languages.

ITRex

ITRex is an AI tool that specializes in Gen AI, Data, and Agentic System Development. The company offers a wide range of services including AI strategy consulting, AI product discovery, AI design and development, data consulting, IoT solutions, and more. ITRex focuses on providing end-to-end AI solutions tailored to meet the specific needs of each client, from strategy to deployment. The company's expertise spans across various industries such as healthcare, logistics, manufacturing, and retail, delivering innovative and customized AI solutions to drive business growth and efficiency.

Singlebase

Singlebase.cloud is an AI-powered platform that serves as an alternative to Firebase and Supabase. It offers a comprehensive suite of tools and services to facilitate faster development and deployment through a unified API. The platform includes features such as Vector Database, NoSQL Database, Vector Embeddings, Generative AI, RAG, Knowledge Base, File storage, and Authentication, catering to a wide range of development needs.

Ragie

Ragie is a fully managed RAG-as-a-Service platform designed for developers. It offers easy-to-use APIs and SDKs to help developers get started quickly, with advanced features like LLM re-ranking, summary index, entity extraction, flexible filtering, and hybrid semantic and keyword search. Ragie provides connectors to popular data sources like Google Drive, Notion, Confluence, and more. The company is backed by Craft Ventures. Ragie handles data ingestion, chunking, indexing, and retrieval for AI applications.
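To make the chunking, indexing, and hybrid retrieval steps mentioned above more concrete, here is a minimal, library-free sketch. It is not Ragie's API: every function name is hypothetical, and a character-trigram cosine similarity stands in for a real embedding model, which is an assumption made only to keep the example self-contained.

```python
import math
from collections import Counter


def chunk(text, size=7):
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def tokens(text):
    """Lowercase words with surrounding punctuation stripped."""
    stripped = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in stripped if w]


def trigrams(text):
    """Character-trigram counts: a crude stand-in for an embedding vector."""
    s = " ".join(tokens(text))
    return Counter(s[i:i + 3] for i in range(len(s) - 2))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def keyword_score(query, chunk_text):
    """Fraction of query words that appear verbatim in the chunk."""
    q, c = set(tokens(query)), set(tokens(chunk_text))
    return len(q & c) / len(q) if q else 0.0


def hybrid_search(query, chunks, alpha=0.5, top_k=2):
    """Blend the similarity score and the keyword score, best first."""
    q_vec = trigrams(query)
    scored = [
        (alpha * cosine(q_vec, trigrams(c))
         + (1 - alpha) * keyword_score(query, c), c)
        for c in chunks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


document = (
    "Refunds are issued within five business days. "
    "Shipping takes two weeks to arrive overseas."
)
chunks = chunk(document)
results = hybrid_search("refunds business days", chunks)
print(results[0][1])
```

The `alpha` weight controls how much the similarity score counts relative to exact keyword matches. A production system would replace `trigrams` with a real embedding model and the list scan with a vector index.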

Jina AI

Jina AI is a company that provides multimodal AI solutions for businesses and developers. Their products include embeddings, rerankers, and prompt engineering tools. Jina AI's mission is to make AI accessible and easy to use for everyone.

Hyperleap AI

Hyperleap AI is an AI chatbot platform designed to help businesses grow by answering questions, capturing leads, and booking appointments on various platforms such as websites, WhatsApp, and Instagram. The platform offers powerful features like OTP-verified lead capture, automated follow-ups, multi-language support, rich media sharing, instant replies, and smart scheduling. Hyperleap Agentic Chatbots differentiate themselves with enterprise-grade AI infrastructure, hierarchical RAG architecture, and advanced agentic workflows. The platform allows users to deploy chatbots in minutes, not months, with industry-specific templates, dynamic prompt composition, and easy integration with various channels.

Tonic.ai

Tonic.ai is a platform that allows users to build AI models on their unstructured data. It offers various products for software development and LLM development, including tools for de-identifying and subsetting structured data, scaling down data, handling semi-structured data, and managing ephemeral data environments. Tonic.ai focuses on standardizing, enriching, and protecting unstructured data, as well as validating RAG systems. The platform also provides integrations with relational databases, data lakes, NoSQL databases, flat files, and SaaS applications, ensuring secure data transformation for software and AI developers.

Google Gemma

Google Gemma is a family of lightweight, state-of-the-art open large language models (LLMs) developed by Google. It is built from the same research used to create Google's Gemini models. Gemma models come in two sizes, 2B and 7B parameters, and each size has a base (pre-trained) and an instruction-tuned variant. Gemma models are designed to be cross-device compatible and are optimized for Google Cloud and NVIDIA GPUs. They are available through Kaggle, Hugging Face, and Google Cloud with Vertex AI or GKE. Gemma models can be used for applications such as text generation, summarization, and RAG, in both commercial and research settings.

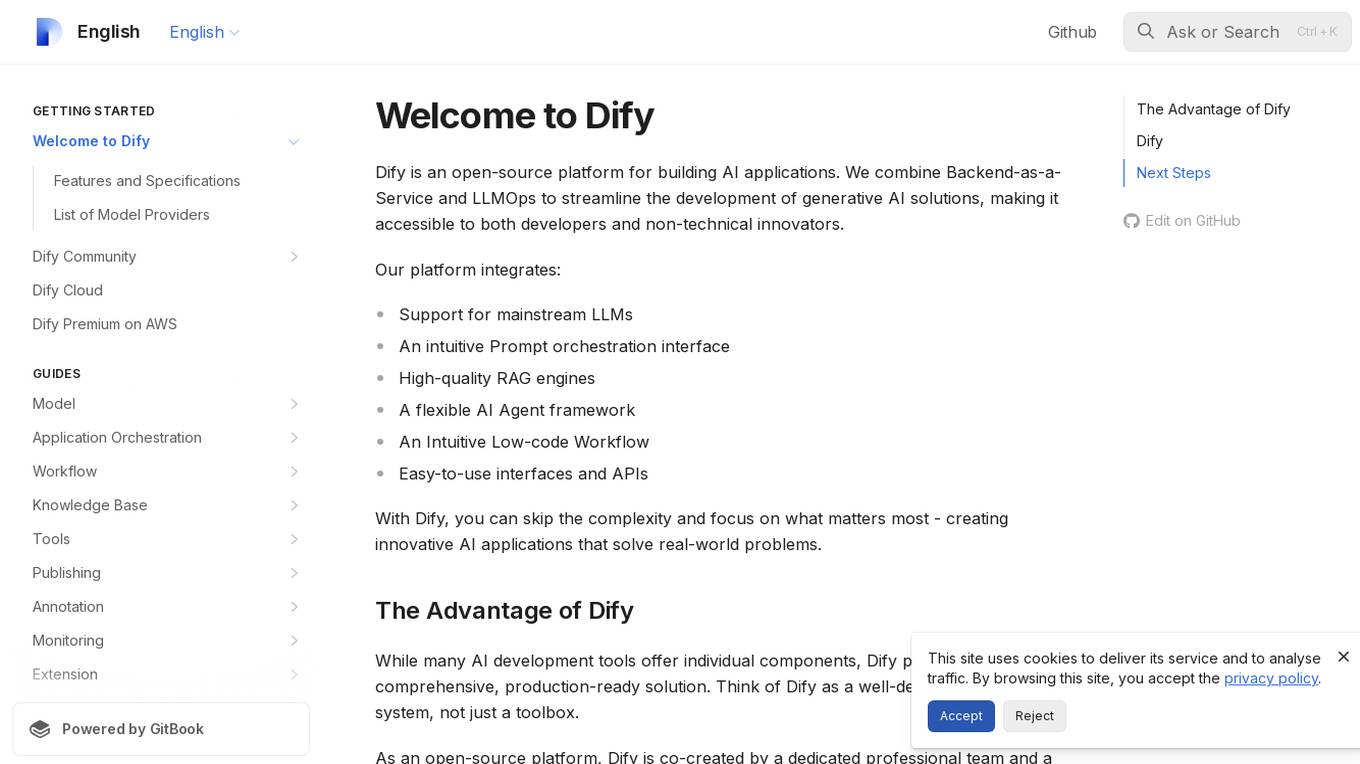

Dify

Dify is an open-source platform for building AI applications that combines Backend-as-a-Service and LLMOps to streamline the development of generative AI solutions. It integrates support for mainstream LLMs, an intuitive Prompt orchestration interface, high-quality RAG engines, a flexible AI Agent framework, and easy-to-use interfaces and APIs. Dify allows users to skip complexity and focus on creating innovative AI applications that solve real-world problems. It offers a comprehensive, production-ready solution with a user-friendly interface.

Clarifai

Clarifai is an AI Workflow Orchestration Platform that helps businesses establish an AI Operating Model and transition from prototype to production efficiently. It offers end-to-end solutions for operationalizing AI, including Retrieval Augmented Generation (RAG), Generative AI, Digital Asset Management, Visual Inspection, Automated Data Labeling, and Content Moderation. Clarifai's platform enables users to build and deploy AI faster, reduce development costs, ensure oversight and security, and unlock AI capabilities across the organization. The platform simplifies data labeling, content moderation, intelligence & surveillance, generative AI, content organization & personalization, and visual inspection. Trusted by top enterprises, Clarifai helps companies overcome challenges in hiring AI talent and misuse of data, ultimately leading to AI success at scale.

Allapi.ai

Allapi.ai is an AI API platform designed to simplify AI integration for developers and startup founders. It offers an ecosystem of models, plugins, and APIs to help users build and deploy AI-powered applications quickly and efficiently. With features like dynamic data capabilities, an advanced RAG system, a streamlined development process, and an intelligent code assistant, Allapi.ai aims to accelerate innovation and reduce development costs. The platform provides access to AI models like Claude 3, GPT-4, Gemini 1.5 Pro, and LLaMA 3, along with a wide range of plugins and tools for AI-driven applications.

Helix AI

Helix AI is a private GenAI platform that enables users to build AI applications using open source models. The platform offers tools for RAG (Retrieval-Augmented Generation) and fine-tuning, allowing deployment on-premises or in a Virtual Private Cloud (VPC). Users can access curated models, utilize Helix API tools to connect internal and external APIs, embed Helix Assistants into websites/apps for chatbot functionality, write AI application logic in natural language, and benefit from the innovative RAG system for Q&A generation. Additionally, users can fine-tune models for domain-specific needs and deploy securely on Kubernetes or Docker in any cloud environment. Helix Cloud offers free and premium tiers with GPU priority, catering to individuals, students, educators, and companies of varying sizes.

Langflow

Langflow is a low-code app builder for RAG and multi-agent AI applications. It is Python-based and agnostic to any model, API, or database. Langflow offers a visual IDE for building and testing workflows, multi-agent orchestration, free cloud service, observability features, and ecosystem integrations. Users can customize workflows using Python and publish them as APIs or export as Python applications.

Nuclia

Nuclia is an AI-powered search engine that helps businesses unlock the value of their unstructured data. With Nuclia, businesses can quickly and easily search, analyze, and extract insights from their data, regardless of its format or location. Nuclia's AI capabilities include natural language processing, machine learning, and deep learning, which allow it to understand the context and meaning of data, and to generate human-like text and code. Nuclia is used by businesses of all sizes across a variety of industries, including financial services, healthcare, manufacturing, and retail.

AI Builders Summit

AI Builders Summit is a 4-week virtual training event designed to equip data scientists, ML and AI engineers, and innovators with the latest advancements in large language models (LLMs), AI agents, and Retrieval-Augmented Generation (RAG). The summit emphasizes hands-on learning and real-world applications, with interactive workshops, platform credits, and direct exposure to industry-leading tools. Attendees can learn progressively over four weeks, building practical skills through expert-led sessions, cutting-edge tools, and industry insights.

1 - Open Source Tools

promptfoo

Promptfoo is a tool for testing and evaluating LLM output quality. With promptfoo, you can build reliable prompts, models, and RAGs with benchmarks specific to your use case, speed up evaluations with caching, concurrency, and live reloading, and score outputs automatically by defining metrics. It can be used as a CLI, as a library, or in CI/CD. It works with OpenAI, Anthropic, Azure, Google, HuggingFace, and open-source models like Llama, and it can integrate custom API providers for any LLM API.
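The idea of scoring outputs automatically against defined metrics, with caching to avoid repeated model calls, can be illustrated with a small generic sketch. This is not promptfoo's configuration format or API. `fake_model`, `contains`, `max_length`, and `evaluate` are hypothetical names, and the "model" is a deterministic stub so the example runs offline.

```python
from functools import lru_cache

# Counts how many times the stub model actually runs (cache misses only).
CALLS = {"count": 0}


@lru_cache(maxsize=None)
def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; cached so repeats are free."""
    CALLS["count"] += 1
    return f"Answer: {prompt.upper()}"


def contains(expected):
    """Metric: the output must include the expected substring."""
    return lambda output: expected in output


def max_length(limit):
    """Metric: the output must be at most `limit` characters long."""
    return lambda output: len(output) <= limit


def evaluate(cases):
    """Run each prompt and report the fraction of its checks that pass."""
    report = []
    for prompt, checks in cases:
        output = fake_model(prompt)
        passed = sum(1 for check in checks if check(output))
        report.append({"prompt": prompt, "score": passed / len(checks)})
    return report


cases = [
    ("what is rag", [contains("RAG"), max_length(40)]),
    ("what is rag", [contains("retrieval")]),
]
report = evaluate(cases)
for row in report:
    print(row)
```

Both cases share a prompt, so the stub model runs once and the second case is served from the cache. Swapping `fake_model` for a real provider call would leave the metric and reporting code unchanged.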

1 - OpenAI GPTs

Automated Knowledge Distillation

For strategic knowledge distillation, upload the document you need to analyze and type !start. ENSURE the uploaded file shows DOCUMENT and NOT PDF. This workflow relies on RAG to operate. Only a small number of PDFs are supported, so convert the file to txt or doc where possible. If the session times out, refresh and type !continue.