Best AI tools for QA Practitioner

20 - AI tool Sites

Virtuoso

Virtuoso is an AI-powered, end-to-end functional testing tool for web applications. It uses Natural Language Programming, Machine Learning, and Robotic Process Automation to automate the testing process, making it faster and more efficient. Virtuoso can be used by QA managers, practitioners, and senior executives to improve the quality of their software applications.

Rainforest QA

Rainforest QA is an AI-powered test automation platform designed for SaaS startups to streamline and accelerate their testing processes. It offers AI-accelerated testing, no-code test automation, and expert QA services to help teams achieve reliable test coverage and faster release cycles. Rainforest QA's platform integrates with popular tools, provides detailed insights for easy debugging, and ensures visual-first testing for a seamless user experience. With a focus on automating end-to-end tests, Rainforest QA aims to eliminate QA bottlenecks and help teams ship bug-free code with confidence.

QA Wolf

QA Wolf is an AI-native service that delivers 80% automated end-to-end test coverage for web and mobile apps in weeks, not years. It automates hundreds of tests using Playwright code for web and Appium for mobile, providing reliable test results on every run. With features like 100% parallel run infrastructure, zero flake guarantee, and unlimited test runs, QA Wolf aims to help software teams ship better software faster by taking QA completely off their plate.

QA.tech

QA.tech is an advanced end-to-end testing application designed for B2B SaaS companies. It offers AI-powered testing solutions to help businesses ship faster, cut costs, and improve testing efficiency. The application features an AI agent named Jarvis that automates the testing process by scanning web apps, creating detailed memory structures, generating tests based on user interactions, and continuously testing for defects. QA.tech provides developer-friendly bug reports, supports various web frameworks, and integrates with CI/CD pipelines. It aims to revolutionize the testing process by offering faster, smarter, and more efficient testing solutions.

mySQM™ QA

SQM Group's mySQM™ QA software is a comprehensive solution for call centers to monitor, motivate, and manage agents, ultimately improving customer experience (CX) and reducing QA costs by 50%. It combines three data sources (post-call surveys, call handling data, and call compliance feedback) to provide holistic CX insights. The software offers personalized agent self-coaching suggestions and real-time recognition for great CX delivery, and it benchmarks, ranks, awards, and certifies CSAT, FCR, and QA performance.

aqua

aqua is a comprehensive Quality Assurance (QA) management tool designed to streamline testing processes and enhance testing efficiency. It offers a wide range of features such as AI Copilot, bug reporting, test management, requirements management, user acceptance testing, and automation management. aqua caters to various sectors including banking, insurance, manufacturing, government, technology, and medical, helping organizations improve testing productivity, software quality, and defect detection ratios. The tool integrates with popular platforms such as Jira, Jenkins, and JMeter, and offers both Cloud and On-Premise deployment options. With AI-enhanced capabilities, aqua aims to make testing faster, more efficient, and error-free.

Octomind

Octomind is an agent-powered test automation platform designed for large web applications. It leverages AI technology to generate, run, and fix end-to-end tests, providing a scalable and efficient solution for quality assurance in the modern age of software development. Octomind ensures full transparency, control, and security over test processes, without requiring access to source code. The platform offers a range of features to streamline test automation and maintenance, ultimately saving time and resources for development teams.

Qodex

Qodex is an AI-powered QA tool designed for end-to-end API testing, built by developers for developers. It offers enterprise-level QA efficiency with full automation and zero coding required. The tool auto-generates tests in plain English and adapts as the product evolves. Qodex also provides interactive API documentation and seamless integration, making it a cost-effective solution for enhancing productivity and efficiency in software testing.

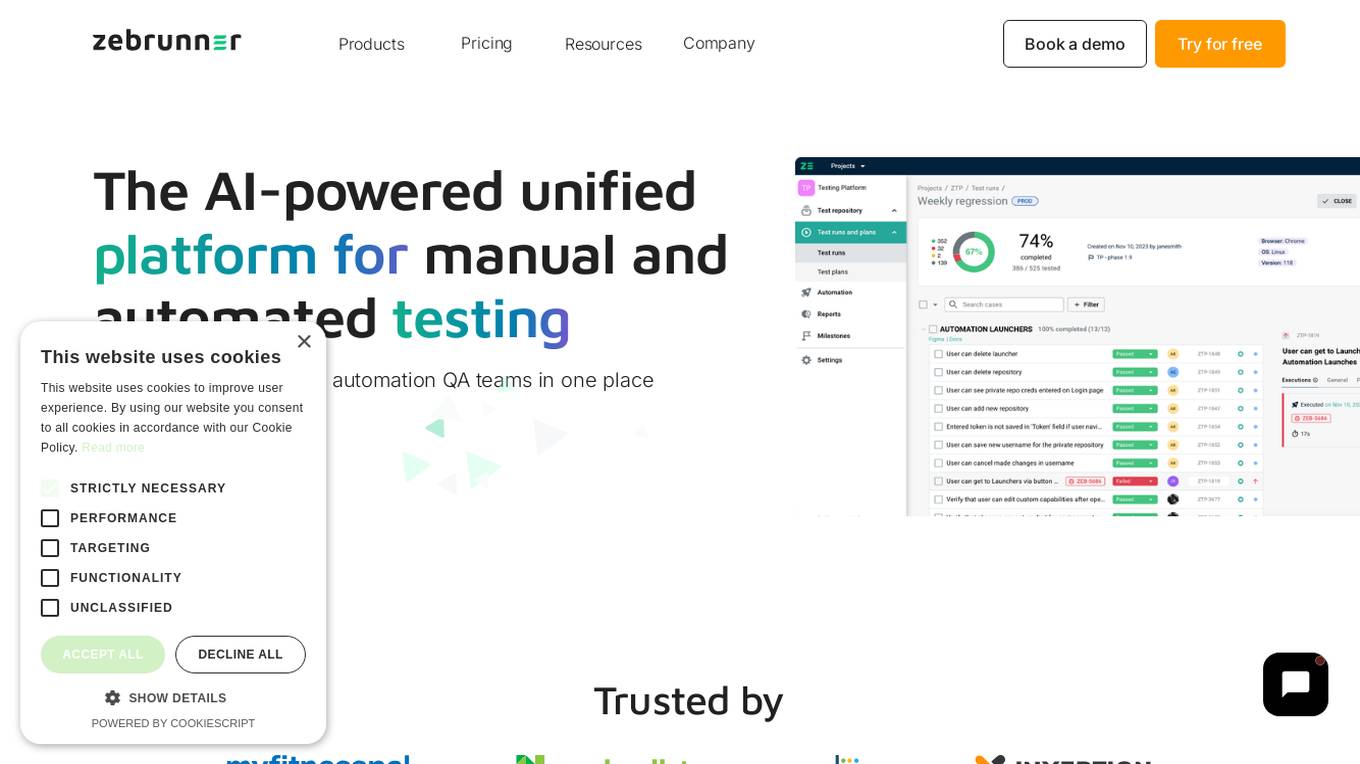

Zebrunner

Zebrunner is an AI-powered unified platform for manual and automated testing, designed to synchronize manual and automation QA teams in one place. It offers features such as test management, automation reporting, and test case management, with AI capabilities for generating new test cases, autocompleting existing ones, and categorizing failures. Zebrunner provides a clean and intuitive UI, unmatched performance, powerful reporting, rich integrations, and 24/7 support for efficient testing processes. It also offers customizable dashboards, sharable reports, and seamless integrations with Jira and other SDLC tools for streamlined workflows.

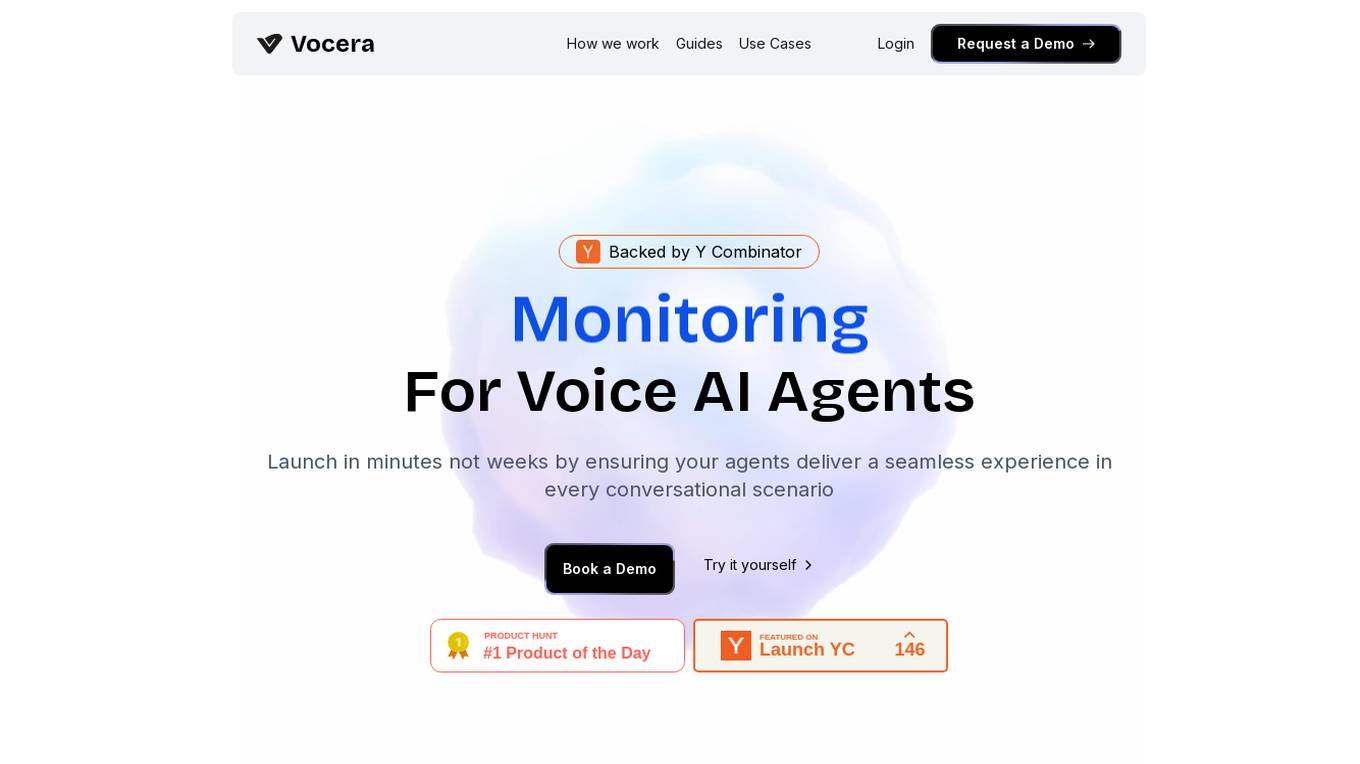

Vocera

Vocera is an AI voice agent testing tool that allows users to test and monitor voice AI agents efficiently. It enables users to launch voice agents in minutes, ensuring a seamless conversational experience. With features like testing against AI-generated datasets, simulating scenarios, and monitoring AI performance, Vocera helps in evaluating and improving voice agent interactions. The tool provides real-time insights, detailed logs, and trend analysis for optimal performance, along with instant notifications for errors and failures. Vocera is designed to work for everyone, offering an intuitive dashboard and data-driven decision-making for continuous improvement.

BugRaptors

BugRaptors is an AI-powered quality engineering services company that offers a wide range of software testing services. They provide manual testing, compatibility testing, functional testing, UAT services, mobile app testing, web testing, game testing, regression testing, usability testing, crowdsourced testing, automation testing, and more. BugRaptors leverages AI and automation to deliver world-class QA services, ensuring a seamless customer experience and aligning with DevOps automation goals. They have developed proprietary tools such as MoboRaptors, BugBot, RaptorVista, RaptorGen, RaptorHub, RaptorAssist, RaptorSelect, and RaptorVision to enhance their services and provide quality engineering solutions.

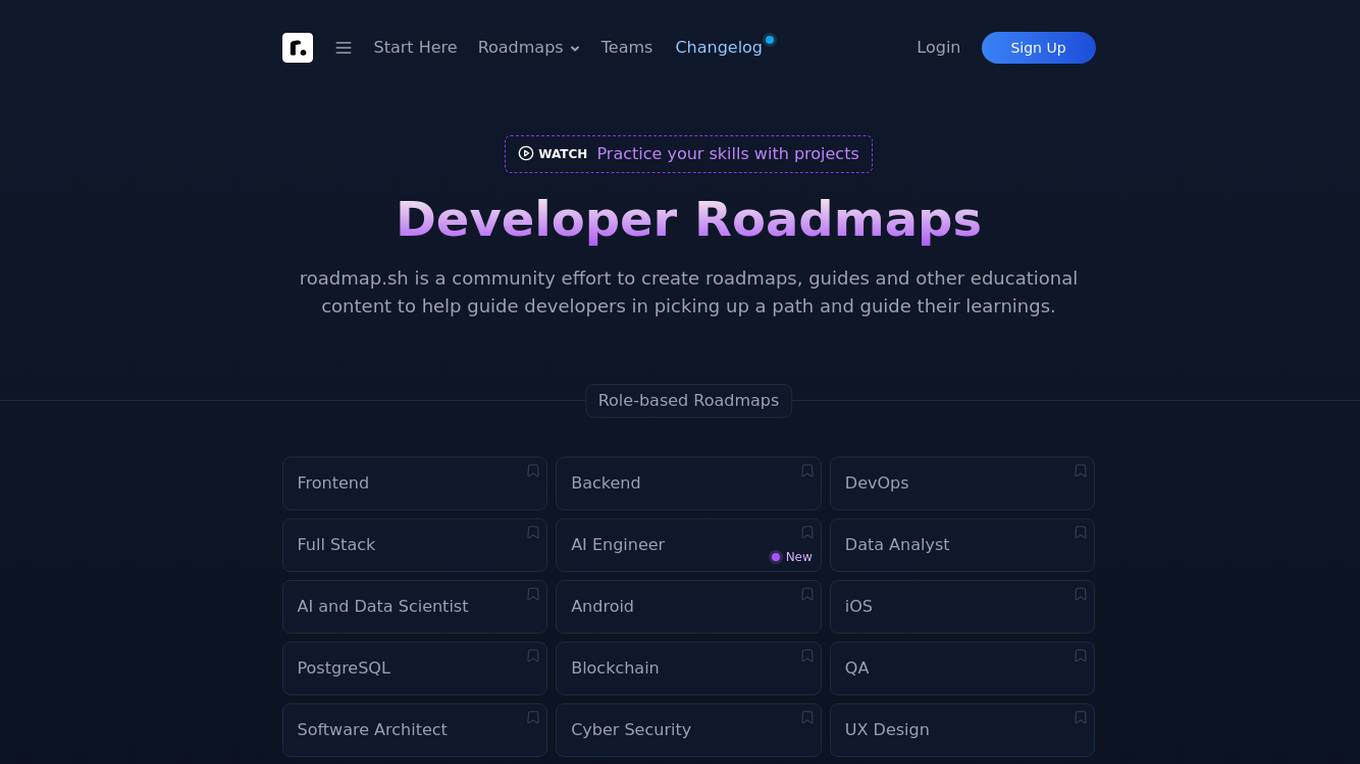

Developer Roadmaps

Developer Roadmaps (roadmap.sh) is a community-driven platform offering official roadmaps, guides, projects, best practices, questions, and videos to assist developers in skill development and career growth. It provides role-based and skill-based roadmaps covering various technologies and domains. The platform is actively maintained and continuously updated to enhance the learning experience for developers worldwide.

Stark.ai

Stark.ai is an AI-powered job search tool that revolutionizes the way job seekers navigate their professional journey. It offers a range of features such as Resume Builder, Career Guru AI insights, Job Match Score, ATS Friendliness Check, and Skill Builder to help users enhance their skills, optimize their resumes, and streamline their job search process. Stark.ai empowers users to get noticed, get hired faster, and transform their careers with AI-driven precision.

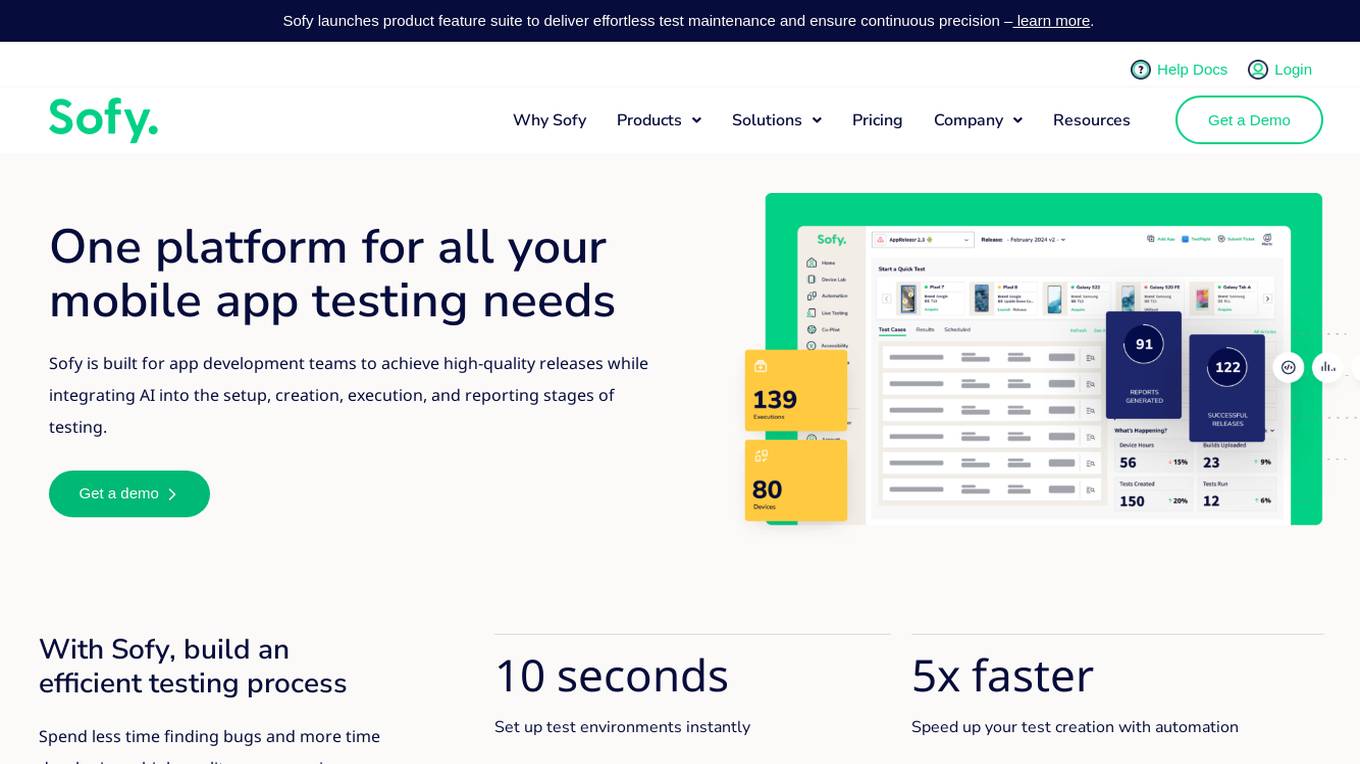

Sofy

Sofy is a revolutionary no-code testing platform for mobile applications that integrates AI to streamline the testing process. It offers features such as manual and ad-hoc testing, no-code automation, AI-powered test case generation, and real device testing. Sofy helps app development teams achieve high-quality releases by simplifying test maintenance and ensuring continuous precision. With a focus on efficiency and user experience, Sofy is trusted by top industries for its all-in-one testing solution.

Ranger

Ranger is a fast and reliable QA testing tool powered by AI and perfected by humans. It writes and maintains QA tests to find real bugs, allowing teams to move forward confidently. Trusted by fast-growing teams, Ranger handles every facet of QA testing, saving time and enabling faster product launches. With a focus on catching real bugs and maintaining core flows, Ranger helps teams maintain high quality while accelerating engineering velocity.

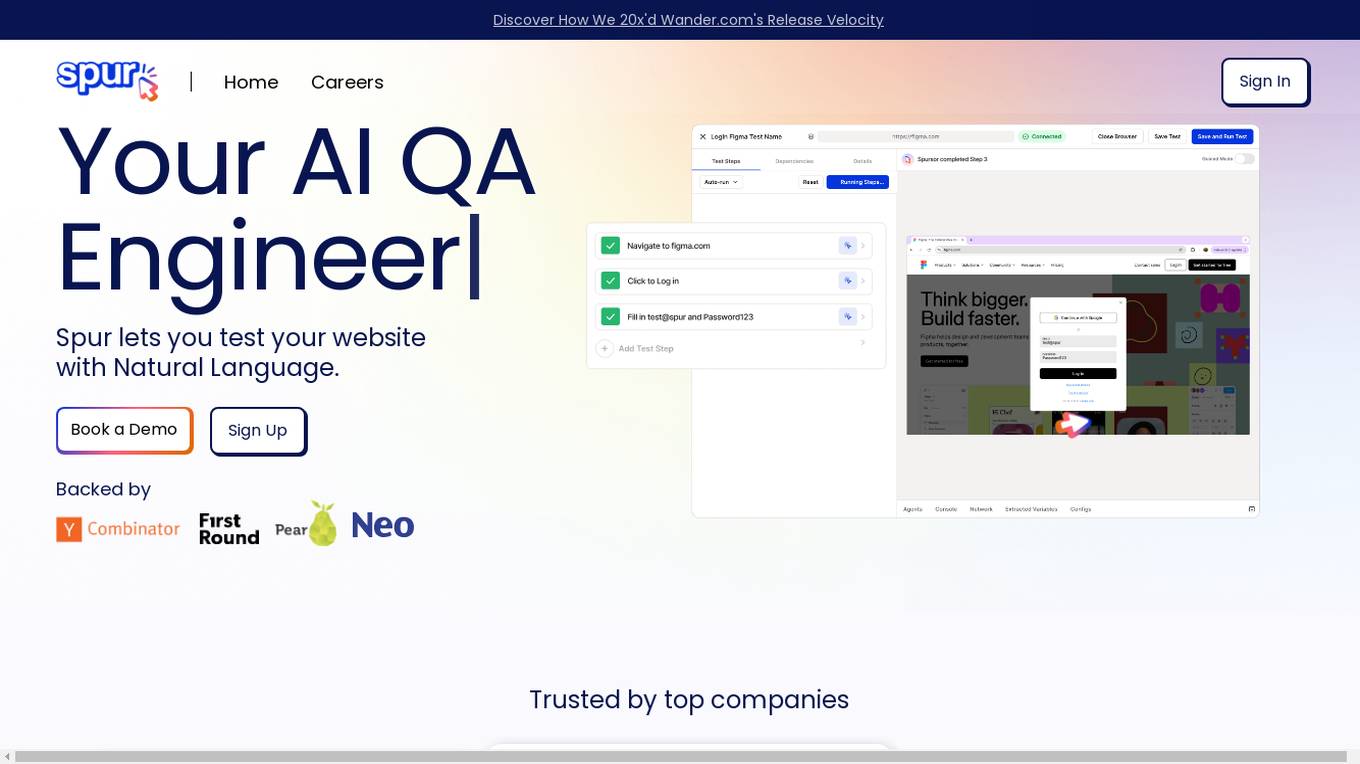

Spur

Spur is an AI QA tool that allows users to test websites using natural language, eliminating the need for complex test scripts. It offers reliable automated tests that adapt to UI changes, real-time playback for debugging, and powerful validations. Spur's AI-powered tests reduce manual testing time, improve software testing processes, and ensure the reliability of tests even with site changes. The tool is user-friendly, requires no coding skills, and supports API testing.

Momentic

Momentic is an AI testing tool that automates testing for software applications. It streamlines regression testing, production monitoring, and UI automation, making test automation easy with its AI capabilities. Momentic is designed to be simple to set up and easy to maintain, and it accelerates team productivity by letting teams create and deploy tests faster with its intuitive low-code editor. The tool adapts to applications, saves time with automated test maintenance, and allows testing anywhere, anytime using cloud, local, or CI/CD pipelines.

TestDriver

TestDriver is an AI-powered testing tool that helps developers automate their testing process. It can be integrated with GitHub and can test anything, right in the GitHub environment. TestDriver is easy to set up and use, and it can help developers save time and effort by offloading testing to AI. It uses Dashcam.io technology to provide end-to-end exploratory testing, allowing developers to see the screen, logs, and thought process as the AI completes its test.

ILoveMyQA

ILoveMyQA is an AI-powered QA testing service that provides comprehensive, well-documented bug reports. The service is affordable, easy to get started with, and requires no time-zapping chats. ILoveMyQA's team of Rockstar QAs is dedicated to helping businesses find and fix bugs before their customers do, so they can enjoy the results and benefits of having a QA team without the cost, management, and headaches.

MaestroQA

MaestroQA is a comprehensive Call Center Quality Assurance Software that offers a range of products and features to enhance QA processes. It provides customizable report builders, scorecard builders, calibration workflows, coaching workflows, automated QA workflows, screen capture, accurate transcriptions, root cause analysis, performance dashboards, AI grading assist, analytics, and integrations with various platforms. The platform caters to industries like eCommerce, financial services, gambling, insurance, B2B software, social media, and media, offering solutions for QA managers, team leaders, and executives.

0 - Open Source Tools

20 - OpenAI GPTs

Game QA Strategist

Advises on QA tests based on recent game code changes, including git history. Learn more at regression.gg

Automation QA Interview Assistant

I provide Automation QA interview prep and conduct mock interviews.

Manual QA Interview Assistant

I provide Manual QA interview prep and conduct mock interviews.

Prompt QA

Designed for excellence in Quality Assurance, fine-tuning custom GPT configurations through continuous refinement.

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.

Selenium Sage

Expert in Selenium test automation, providing practical advice and solutions.

Test Shaman

Guiding software testing with Grug wisdom and humor, balancing fun with practical advice.

Complete Apex Test Class Assistant

Crafting full, accurate Apex test classes, with 100% user service.