Best AI tools for Model Optimization

20 - AI tool Sites

artlabs

artlabs is an AI-powered immersive 3D eCommerce platform that helps boost sales by turning product images into high-quality 3D visuals. It offers virtual try-on experiences and hyper-personalized eCommerce stores for enterprises with extensive product catalogs. The platform simplifies AR creation, ensures quality, integrates seamlessly, and provides ROI analytics without the need for heavy equipment or extra photoshoots. Trusted by eCommerce leaders globally, artlabs accelerates eCommerce growth with proven technology that increases conversion rates, decreases product returns, and enhances customer preferences.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

Rawbot

Rawbot is an AI model comparison tool that simplifies the process of selecting the best AI models for projects and applications. It allows users to compare various AI models side-by-side, providing insights into their performance, strengths, weaknesses, and suitability. Rawbot helps users make informed decisions by identifying the most suitable AI models based on specific requirements, leading to optimal results in research, development, and business applications.

Testmyprompt

Testmyprompt is an AI prompt software designed for AI Automation Agencies. It allows users to build and test AI prompts quickly and efficiently, saving significant time and ensuring consistency in prompt creation. The tool enables users to simulate thousands of conversations in seconds, import AI settings, add test questions with variations and success criteria, and analyze AI performance to identify areas of improvement. Testmyprompt helps users optimize their AI models for better performance and customer interaction.

Edge Impulse

Edge Impulse is a leading edge AI platform that enables users to build datasets, train models, and optimize libraries to run directly on any edge device. It offers sensor datasets, feature engineering, model optimization, algorithms, and NVIDIA integrations. The platform is designed for product leaders, AI practitioners, embedded engineers, and OEMs across various industries and applications. Edge Impulse helps users unlock sensor data value, build high-quality sensor datasets, advance algorithm development, optimize edge AI models, and achieve measurable results. It allows for future-proofing workflows by generating models and algorithms that perform efficiently on any edge hardware.

LM-Kit.NET

LM-Kit.NET is a comprehensive AI toolkit for .NET developers, offering a wide range of features such as AI agent integration, data processing, text analysis, translation, text generation, and model optimization. The toolkit enables developers to create intelligent and adaptable AI applications by providing tools for language models, sentiment analysis, emotion detection, and more. With a focus on performance optimization and security, LM-Kit.NET helps developers integrate cutting-edge AI solutions seamlessly into their C# and VB.NET applications.

Motific.ai

Motific.ai is a responsible GenAI tool powered by data at scale. It offers a fully managed service with natural-language compliance and security guardrails, an intelligence service, and an end-to-end retrieval-augmented generation (RAG) service powered by enterprise data. Users can rapidly deliver trustworthy GenAI assistants and API endpoints, configure assistants with their organization's data, optimize performance, and connect with top GenAI model providers. Motific.ai enables users to create custom knowledge bases, connect to various data sources, and ensure responsible AI practices. It supports English only and offers insights on usage, time savings, and model optimization.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. Its platform provides automated tuning, inference consultation, a zoo of optimized networks, and tools for reducing AI model cost. Anycores focuses on faster execution, claiming to reduce inference time by more than 10x, and on footprint reduction during model deployment. It is device agnostic, supporting Nvidia and AMD GPUs, Intel, ARM, and AMD CPUs, servers, and edge devices. The tool aims to provide highly optimized, low-footprint networks tailored to specific deployment scenarios.

Embedl

Embedl is an AI tool that specializes in developing advanced solutions for efficient AI deployment in embedded systems. With a focus on deep learning optimization, Embedl offers a cost-effective solution that reduces energy consumption and accelerates product development cycles. The platform caters to industries such as automotive, aerospace, and IoT, providing cutting-edge AI products that drive innovation and competitive advantage.

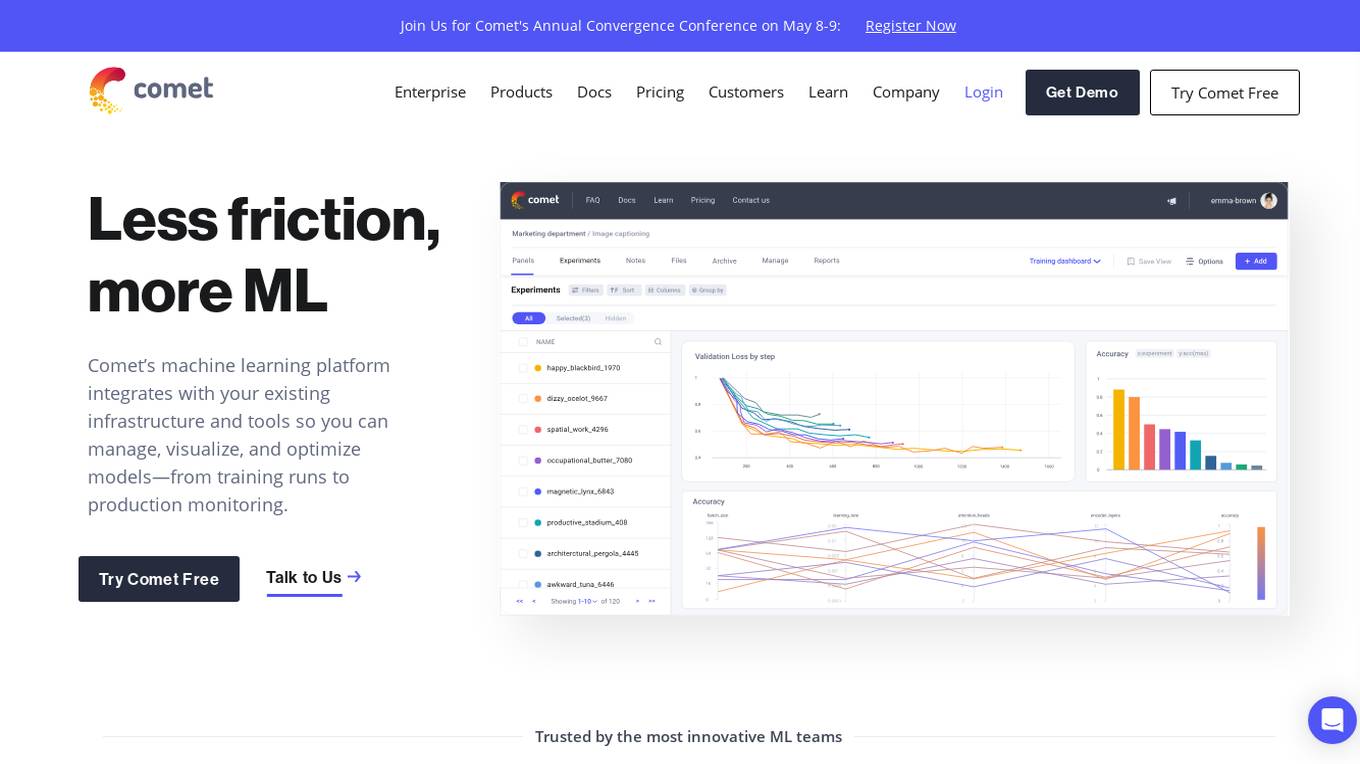

Comet ML

Comet ML is an extensible, fully customizable machine learning platform that aims to move ML forward by supporting productivity, reproducibility, and collaboration. It integrates with existing infrastructure and tools to manage, visualize, and optimize models from training runs to production monitoring. Users can track and compare training runs, create a model registry, and monitor models in production all in one platform. Comet's platform can be run on any infrastructure, enabling users to reshape their ML workflow and bring their existing software and data stack.

Caffe

Caffe is a deep learning framework developed by Berkeley AI Research (BAIR) and community contributors. It is designed for speed, modularity, and expressiveness, allowing users to define models and optimization through configuration without hard-coding. Caffe supports both CPU and GPU training, making it suitable for research experiments and industry deployment. The framework is extensible, actively developed, and tracks the state-of-the-art in code and models. Caffe is widely used in academic research, startup prototypes, and large-scale industrial applications in vision, speech, and multimedia.

Future AGI

Future AGI is an AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Its listed features include creating trustworthy, accurate, and responsible AI; 10x faster processing; generating and managing diverse synthetic datasets; testing and analyzing agentic workflow configurations; assessing agent performance; enhancing LLM application performance; monitoring and protecting applications in production; and evaluating AI across different modalities.

Intel Gaudi AI Accelerator Developer

The Intel Gaudi AI accelerator developer website provides resources, guidance, tools, and support for building, migrating, and optimizing AI models. It offers software, model references, libraries, containers, and tools for training and deploying Generative AI and Large Language Models. The site focuses on the Intel Gaudi accelerators, including tutorials, documentation, and support for developers to enhance AI model performance.

Industrial Engineer AI

Industrial Engineer AI is an advanced AI tool designed to assist industrial engineers in optimizing processes and improving efficiency in manufacturing environments. The application utilizes machine learning algorithms to analyze data, identify bottlenecks, and suggest solutions for streamlining operations. With its user-friendly interface and powerful capabilities, Industrial Engineer AI is a valuable tool for professionals looking to enhance productivity and reduce costs in industrial settings.

FareTrack

FareTrack is an AI-driven data intelligence solution tailored for the modern air travel industry. It offers accurate, timely, and actionable insights for airline revenue management, distribution, and network operations teams. By leveraging advanced AI technology, FareTrack empowers clients with competitive fare tracking, ancillary pricing insights, open pricing monitoring, and price rank value optimization. The platform also provides comprehensive travel data solutions beyond airfare, including tax breakdowns, historical fare analysis, and trend analysis. With customizable dashboards and API integration, FareTrack enables users to make informed decisions swiftly and stay ahead in the dynamic world of air travel.

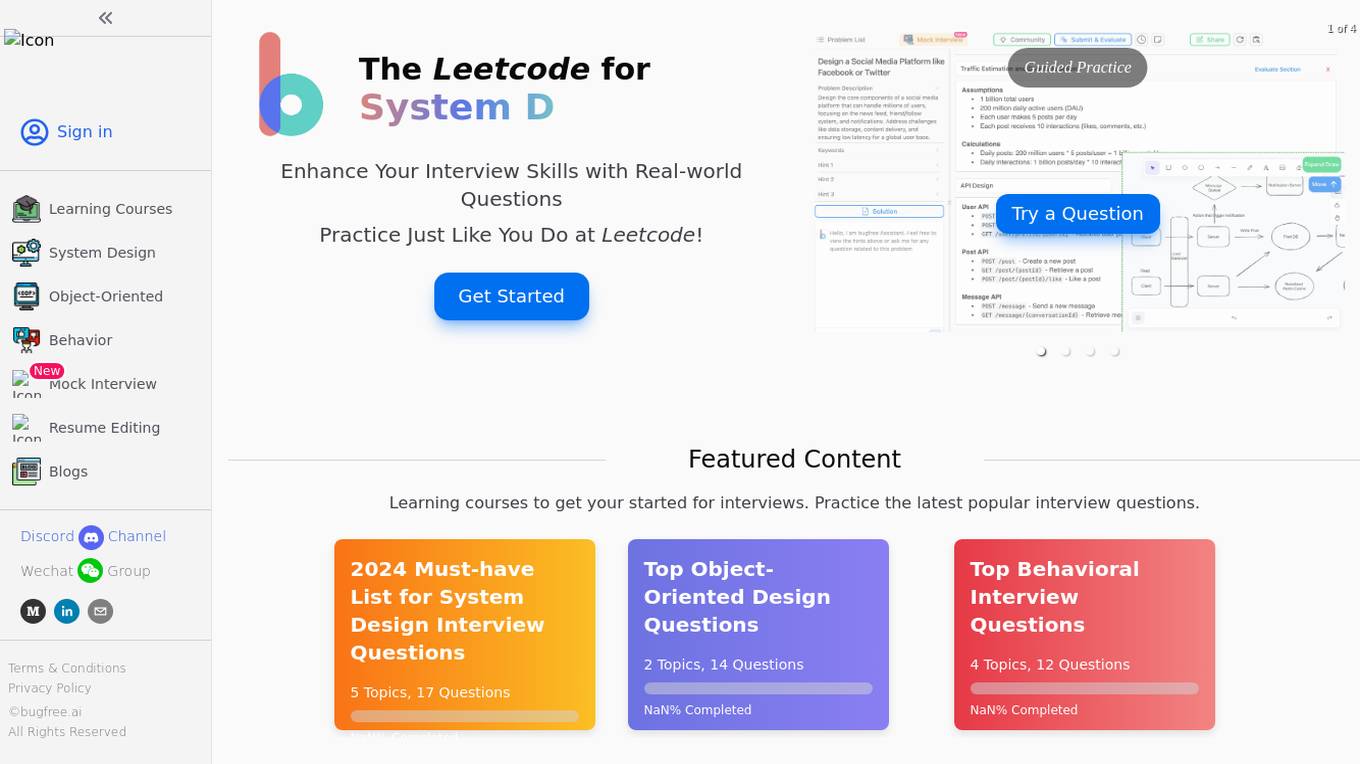

BugFree.ai

BugFree.ai is an AI-powered platform designed to help users practice system design and behavioral interviews, similar to LeetCode. The platform offers a range of features to assist users in preparing for technical interviews, including mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

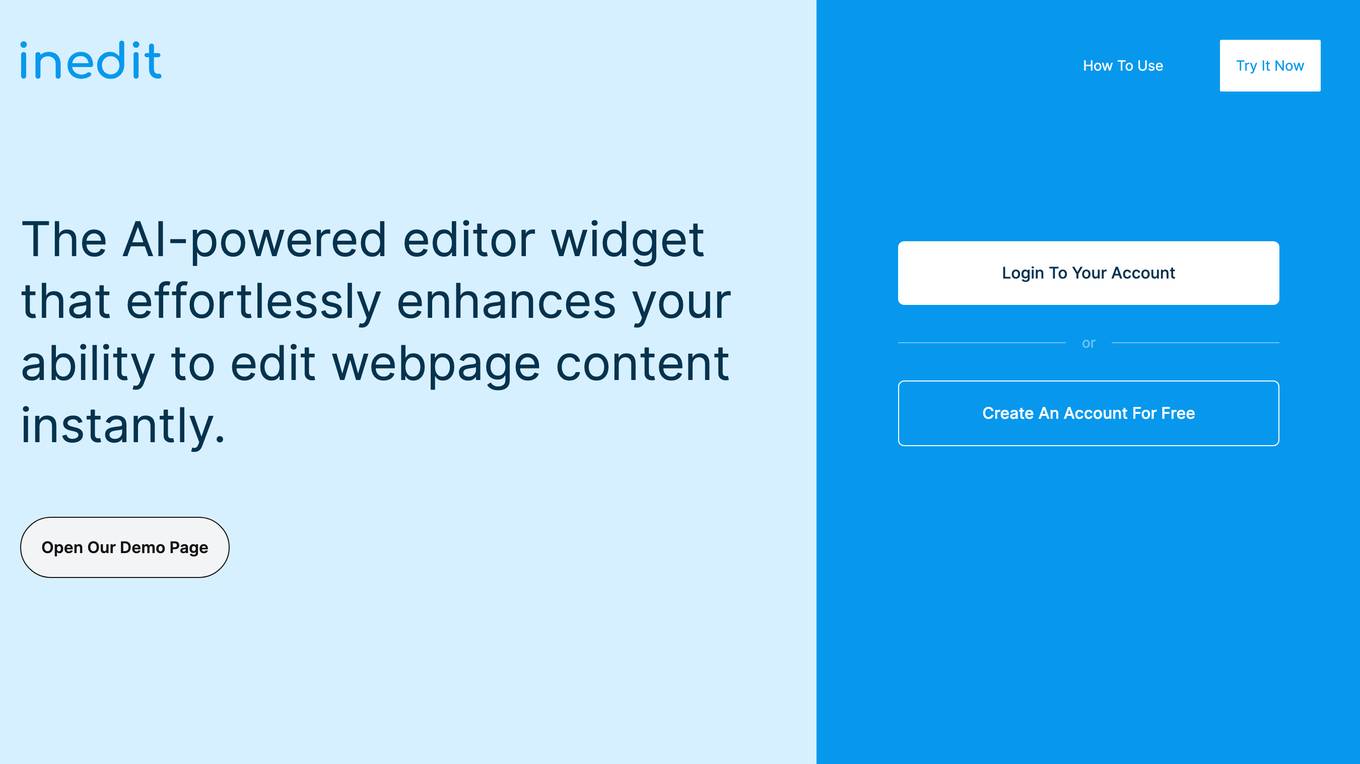

Inedit

Inedit is an AI-powered editor widget for editing webpage content in place. It combines AI-assisted and manual editing, supports editing multiple elements at once, and lets users inspect the deeper structure of a webpage. The tool is powered by OpenAI GPT models. Users can edit, evaluate, and publish content in one flow, ensuring only approved content reaches the audience.

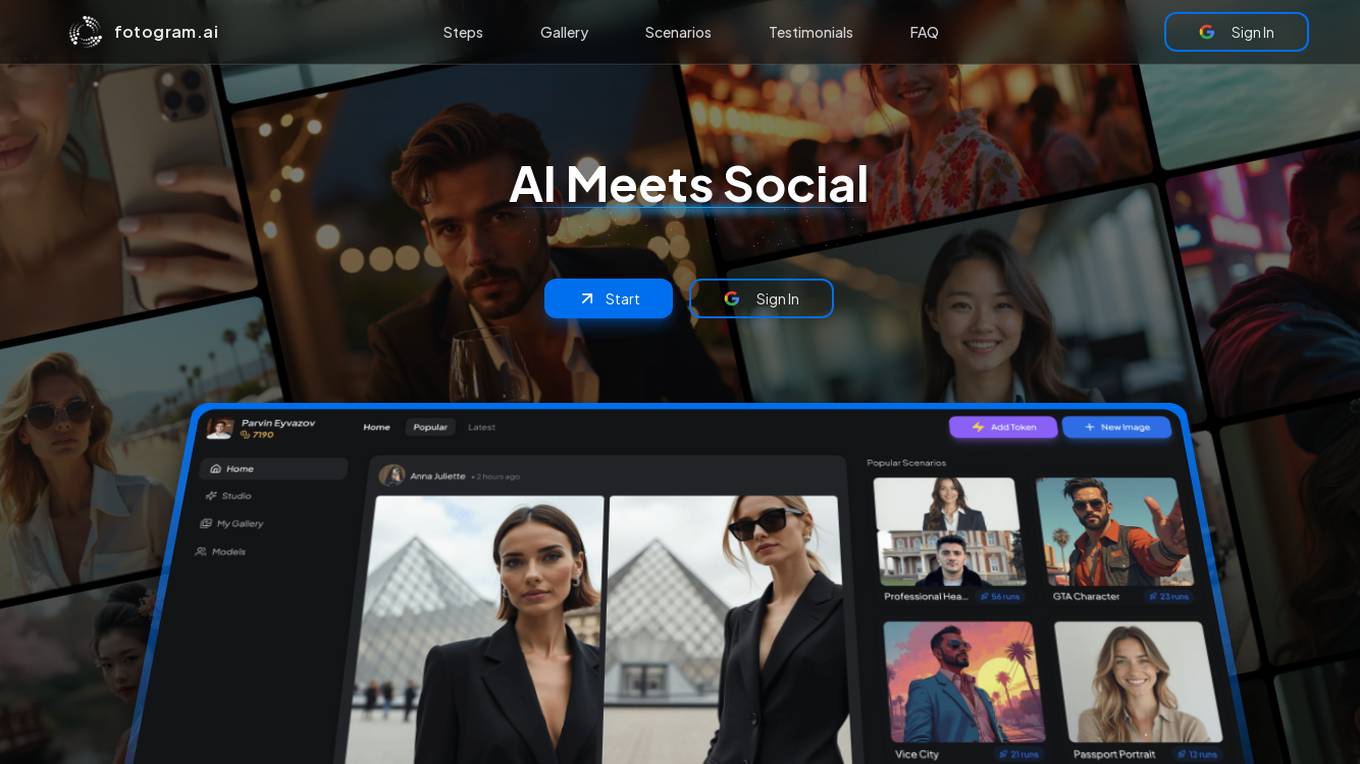

Fotogram.ai

Fotogram.ai is an AI-powered image editing tool that offers a wide range of features to enhance and transform your photos. With Fotogram.ai, users can easily apply filters, adjust colors, remove backgrounds, add effects, and retouch images with just a few clicks. The tool uses advanced AI algorithms to provide professional-level editing capabilities to users of all skill levels. Whether you are a photographer looking to streamline your workflow or a social media enthusiast wanting to create stunning visuals, Fotogram.ai has you covered.

Bibit AI

Bibit AI is a real estate marketing AI designed to enhance the efficiency and effectiveness of real estate marketing and sales. It can help create listings, descriptions, and property content, and offers a host of other features. Bibit AI describes itself as the world's first AI for real estate and aims to transform the industry by boosting efficiency and simplifying tasks like listing creation and content generation.

HUAWEI Cloud Pangu Drug Molecule Model

HUAWEI Cloud Pangu is an AI tool designed for accelerating drug discovery by optimizing drug molecules. It offers features such as Molecule Search, Molecule Optimizer, and Pocket Molecule Design. Users can submit molecules for optimization and view historical optimization results. The tool is based on the MindSpore framework and has been visited over 300,000 times since August 23, 2021.

1 - Open Source Tools

hqq

HQQ is a fast and accurate model quantizer that does not need calibration data. It is simple to implement (the optimizer takes just a few lines of code), and it can quantize the Llama2-70B model in only 4 minutes.
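To make "no calibration data" concrete, here is a minimal sketch of data-free weight quantization in plain Python. It is not HQQ's algorithm: HQQ additionally refines the quantization parameters with its own optimizer, which is omitted here. This baseline only shows how a scale and zero point can be derived from the weights themselves. The function names `quantize_group` and `dequantize_group` are hypothetical and do not come from the hqq library.

```python
from typing import List, Tuple


def quantize_group(weights: List[float], n_bits: int = 4) -> Tuple[List[int], float, float]:
    """Affine round-to-nearest quantization of one group of weights.

    The scale and zero point come from the group's own min and max,
    so no calibration inputs are needed (illustrative baseline only).
    """
    levels = (1 << n_bits) - 1          # e.g. 15 for 4-bit codes
    w_min, w_max = min(weights), max(weights)
    # Guard against a constant group, where the range would be zero.
    scale = (w_max - w_min) / levels if w_max > w_min else 1.0
    codes = [min(levels, max(0, round((w - w_min) / scale))) for w in weights]
    return codes, scale, w_min


def dequantize_group(codes: List[int], scale: float, zero: float) -> List[float]:
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale + zero for c in codes]


if __name__ == "__main__":
    weights = [-0.8, -0.1, 0.0, 0.3, 0.7]
    codes, scale, zero = quantize_group(weights, n_bits=4)
    restored = dequantize_group(codes, scale, zero)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("codes:", codes)
    print("max reconstruction error:", max_err)
```

With round-to-nearest, the reconstruction error of each weight is bounded by half the scale. Optimizer-based quantizers try to improve on this baseline by choosing better parameters.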

20 - OpenAI GPTs

Shell Mentor

An AI GPT model designed to assist with Shell/Bash programming, providing real-time code suggestions, debugging tips, and script optimization for efficient command-line operations.

Data Architect

Database Developer assisting with SQL/NoSQL, architecture, and optimization.

Optimisateur de Performance GPT

Expert in performance optimization and data processing.

Seabiscuit Business Model Master

Discover A More Robust Business: Craft tailored value proposition statements, develop a comprehensive business model canvas, conduct detailed PESTLE analysis, and gain strategic insights on enhancing business model elements like scalability, cost structure, and market competition strategies. (v1.18)

Create A Business Model Canvas For Your Business

Let's get started by telling me about your business: What do you offer? Who do you serve? Need help with prompt engineering? Reach out on LinkedIn: StephenHnilica

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

BITE Model Analyzer by Dr. Steven Hassan

Discover if your group, relationship or organization uses specific methods to recruit and maintain control over people