Best AI tools for ML Security Engineer

20 - AI tool Sites

Huntr

Huntr is the world's first bug bounty platform for AI/ML. It provides a single place for security researchers to submit vulnerabilities, ensuring the security and stability of AI/ML applications, including those powered by Open Source Software (OSS).

hCaptcha Enterprise

hCaptcha Enterprise is an AI-powered security platform designed to detect and deter human and automated threats through bot detection, fraud protection, and account defense, including account takeover detection. The vendor claims highly accurate bot detection and fraud protection without false positives. The platform also provides privacy-preserving abuse detection that requires no personally identifiable information (PII). hCaptcha Enterprise is used by category leaders in various industries worldwide and supports compliance with privacy regulations such as GDPR, CCPA, and HIPAA.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

Cyguru

Cyguru is an all-in-one, cloud-based AI Security Operation Center (SOC). The SOC is the core of its service, providing AI-powered attack detection, continuous monitoring for vulnerabilities and misconfigurations, compliance assurance, advanced ML and AI detection, and SecPedia, a cybersecurity knowledge hub. Cyguru's AI-powered analyst promptly alerts users to suspicious behavior or activity that demands attention. The platform offers up to three free servers, with subsequent pricing advertised as more than 85% below the industry average.

H2O.ai

H2O.ai is a leading platform that offers a convergence of the world's best predictive and generative AI solutions for private and protected data. The platform provides a wide range of AI agents, digital assistants, business insights, predictive AI tools, and solutions for model builders, data scientists, and enterprise developers. H2O.ai is known for its innovative AI technologies that empower organizations to accelerate model development, train custom models, and manage the full ML lifecycle. With a focus on privacy and security, H2O.ai is trusted by banks, telcos, and government agencies worldwide.

Intuition Machines

Intuition Machines is a leading provider of Privacy-Preserving AI/ML platforms and research solutions. They offer products and services that cater to category leaders worldwide, focusing on AI/ML research, security, and risk analysis. Their innovative solutions help enterprises prepare for the future by leveraging AI for a wide range of problems. With a strong emphasis on privacy and security, Intuition Machines is at the forefront of developing cutting-edge AI technologies.

Elastic

Elastic is a Search AI Company that offers a platform for building tailored experiences, search and analytics, data ingestion, visualization, and generative AI solutions. The company provides services like Elastic Cloud for real-time insights, Elastic AI Assistant for retrieval and generation, and Search AI Lake for faster integration with LLMs. Elastic aims to help businesses scale with low-latency search AI and accelerate problem resolution with observability powered by advanced ML and analytics.

Voxel51

Voxel51 is an AI tool that provides open-source computer vision tools for machine learning. It offers solutions for various industries such as agriculture, aviation, driving, healthcare, manufacturing, retail, robotics, and security. Voxel51's main product, FiftyOne, helps users explore, visualize, and curate visual data to improve model performance and accelerate the development of visual AI applications. The platform is trusted by thousands of users and companies, offering both open-source and enterprise-ready solutions to manage and refine data and models for visual AI.

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI and ML workloads as reliable and secure microservices. It offers AI model serving and orchestration with an emphasis on simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications with a workflow management system.

United States Artificial Intelligence Institute

The United States Artificial Intelligence Institute (USAII) is an AI certification platform offering a range of self-paced and powerful Artificial Intelligence certifications. The platform provides certifications for professionals at different experience levels, from beginners to experts, covering topics such as Neural Network Architectures, Deep Learning, Computer Vision, AI Adoption Strategies, and more. USAII aims to bridge the global AI skill gap by developing industry-relevant skills and certifying professionals. The platform offers exclusive AI learning programs for high school students and emphasizes the importance of AI education for future innovators.

Aiiot Talk

Aiiot Talk is an AI tool that focuses on Artificial Intelligence, Robotics, Technology, Internet of Things, Machine Learning, Business Technology, Data Security, and Marketing. The platform provides insights, articles, and discussions on the latest trends and applications of AI in various industries. Users can explore how AI is reshaping businesses, enhancing security measures, and revolutionizing technology. Aiiot Talk aims to educate and inform readers about the potential of AI and its impact on society and the future.

Fiddler AI

Fiddler AI is an AI Observability platform that provides tools for monitoring, explaining, and improving the performance of AI models. It offers a range of capabilities, including explainable AI, NLP and CV model monitoring, LLMOps, and security features. Fiddler AI helps businesses to build and deploy high-performing AI solutions at scale.

Great Learning

Great Learning is an online platform offering a wide range of courses, PG certificates, and degree programs in various domains such as AI & Machine Learning, Data Science, Business Analytics, Cloud Computing, Cyber Security, Software Development, Digital Marketing, Design, MBA, and Masters. The platform provides opportunities to learn from top universities, offers career support, success stories, and enterprise solutions. With a focus on AI and Machine Learning, Great Learning aims to elevate expertise and provide transformative programs to help individuals enhance their skills and advance their careers.

Langtrace AI

Langtrace AI is an open-source observability tool from Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, and it is SOC 2 Type II certified. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

Arize AI

Arize AI is an AI observability tool designed to monitor and troubleshoot AI models in production. It provides configurable observability features to help ensure the performance and reliability of AI stacks. With a focus on ML observability, Arize offers automated setup, a simple API, and a lightweight package for tracking model performance over time. The tool is used by large companies to surface insights and simplify root-cause analysis, and it comes with a dedicated customer success manager. Arize is built for real-world production scenarios, emphasizing performance, scalability, security, and compliance with industry standards like SOC 2 Type II and HIPAA.

MOSTLY AI Platform

MOSTLY AI offers a synthetic data generation platform that is free to use and that the vendor describes as the most accurate available. The site provides detailed information on synthetic data and data anonymization, and the platform includes a Python client for data generation. It is built around privacy and security, allowing users to create fully anonymous synthetic data from original data. It supports various AI/ML use cases, self-service analytics, testing and QA, and data sharing. The platform is designed for enterprise organizations, offering scalability and privacy by design.

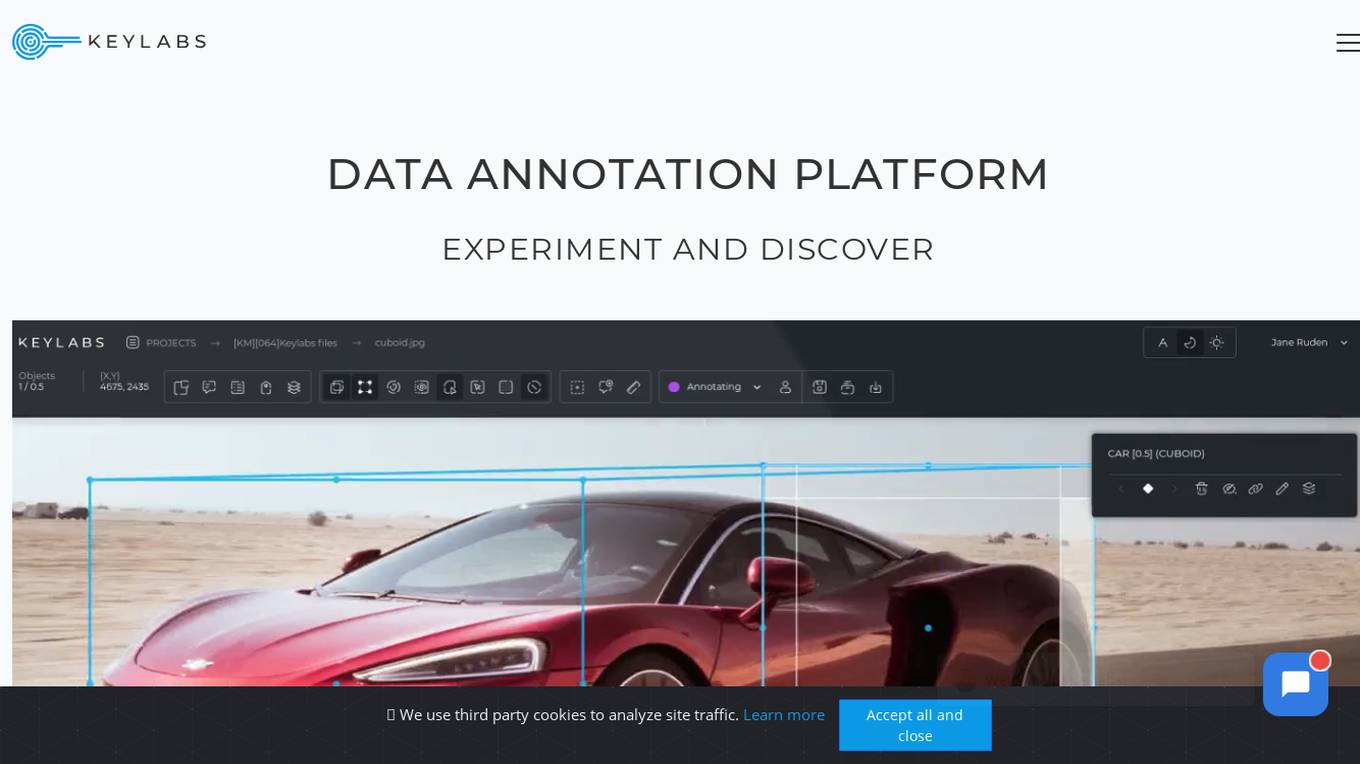

Keylabs

Keylabs is a state-of-the-art data annotation platform that enhances AI projects with highly precise data annotation and innovative tools. It offers image and video annotation, labeling, and ML-assisted features for industries such as automotive, aerial, agriculture, robotics, manufacturing, waste management, medical, healthcare, retail, fashion, sports, security, livestock, construction, and logistics. Keylabs provides advanced annotation tools, built-in machine learning, efficient operation management, and extra high performance to boost the preparation of visual data for machine learning. The platform ensures transparency in pricing with no hidden fees and offers a free trial for users to experience its capabilities.

Signal

Signal is an encrypted messaging service that allows users to send and receive text, voice, video, and image messages. It is available as a mobile app and a desktop application and is used to communicate with other Signal users. Signal is known for its strong security features, which include end-to-end encryption, disappearing messages, and a focus on privacy.

Kount

Kount is a comprehensive trust and safety platform that offers solutions for fraud detection, chargeback management, identity verification, and compliance. With advanced artificial intelligence and machine learning capabilities, Kount provides businesses with robust data and customizable policies to protect against various threats. The platform is suitable for industries such as ecommerce, health care, online learning, gaming, and more, offering personalized solutions to meet individual business needs.

0 - Open Source Tools

20 - OpenAI GPTs

Code & Research ML Engineer

An ML engineer who codes and researches for you. Created by Meysam.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.

Personalized ML+AI Learning Program

Interactive ML/AI tutor providing structured daily lessons.

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

Code Solver

ML/DL expert focused on mathematical modeling, Kaggle competitions, and advanced ML models.

Dascimal

Explains ML and data science concepts clearly, catering to various expertise levels.

Jacques

Deep dives into math and ML, generating guides with explanations and Python exercises.