Best AI tools for Machine Learning Security Engineer

20 - AI tool Sites

MLSecOps

MLSecOps is an AI tool designed to drive the field of MLSecOps forward through high-quality educational resources and tools. It focuses on traditional cybersecurity principles, emphasizing people, processes, and technology. The MLSecOps Community educates and promotes the integration of security practices throughout the AI & machine learning lifecycle, empowering members to identify, understand, and manage risks associated with their AI systems.

Hive Defender

Hive Defender is an advanced, machine-learning-powered DNS security service that offers comprehensive protection against a vast array of cyber threats including but not limited to cryptojacking, malware, DNS poisoning, phishing, typosquatting, ransomware, zero-day threats, and DNS tunneling. Hive Defender transcends traditional cybersecurity boundaries, offering multi-dimensional protection that monitors both your browser traffic and the entirety of your machine’s network activity.

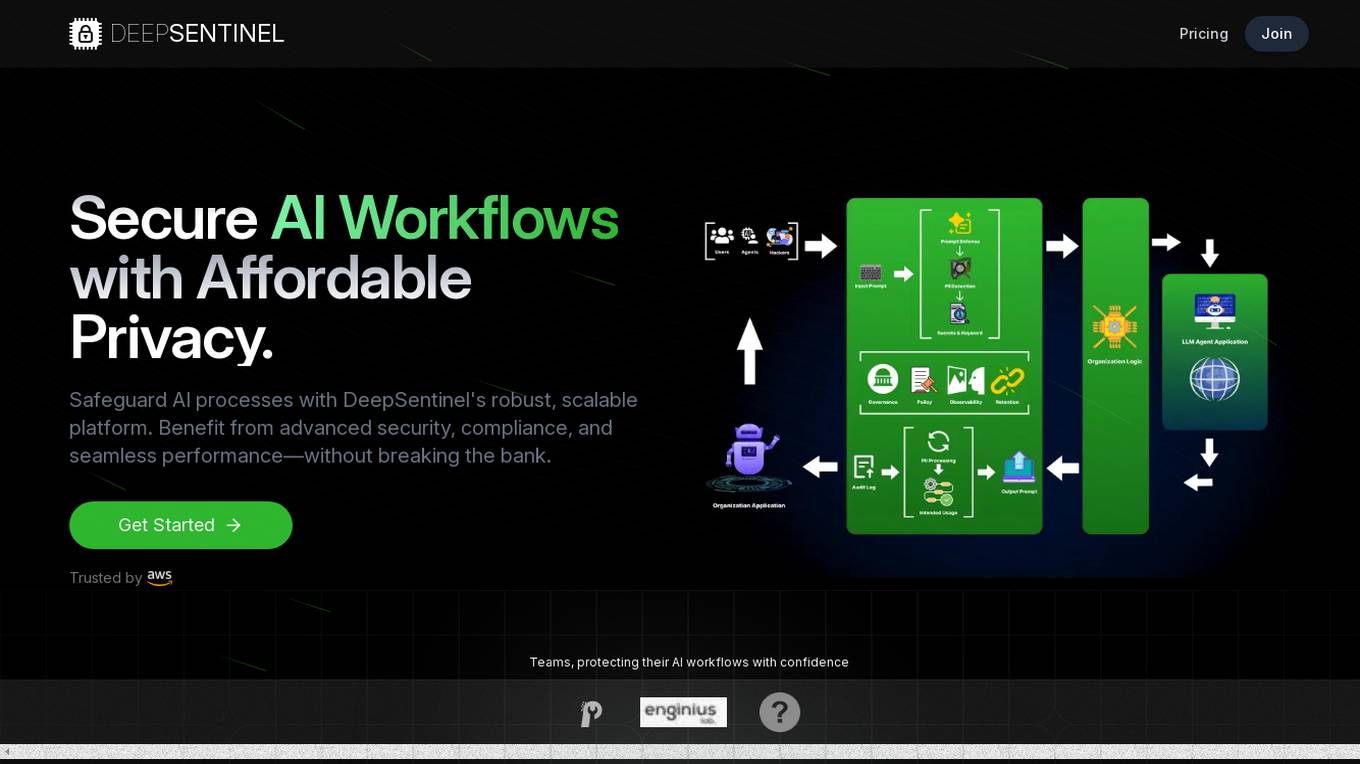

DeepSentinel

DeepSentinel is an AI application that provides secure AI workflows with affordable deep data privacy. It offers a robust, scalable platform for safeguarding AI processes with advanced security, compliance, and seamless performance. The platform allows users to track, protect, and control their AI workflows, ensuring secure and efficient operations. DeepSentinel also provides real-time threat monitoring, granular control, and global trust for securing sensitive data and ensuring compliance with international regulations.

Codiga

Codiga is a static code analysis tool that helps developers write clean, safe, and secure code. It works in real time in your IDE and in CI/CD pipelines, and it can be customized to meet your specific needs. Codiga supports a wide range of languages and frameworks and integrates with popular tools like GitHub, GitLab, and Bitbucket.

Robust Intelligence

Robust Intelligence is an end-to-end solution for securing AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

InsightFace

InsightFace is an open-source deep face analysis library that provides a rich variety of state-of-the-art algorithms for face recognition, detection, and alignment. It is designed to be efficient for both training and deployment, making it suitable for research institutions and industrial organizations. InsightFace has achieved top rankings in various challenges and competitions, including the ECCV 2022 WCPA Challenge, NIST-FRVT 1:1 VISA, and WIDER Face Detection Challenge 2019.

SharkGate

SharkGate is an AI-driven cybersecurity platform that focuses on protecting websites from various cyber threats. The platform offers solutions for mobile security, password management, quantum computing threats, API security, and cloud security. SharkGate leverages artificial intelligence and machine learning to provide advanced threat detection and response capabilities, ensuring the safety and integrity of digital assets. The platform has received accolades for its innovative approach to cybersecurity and has secured funding from notable organizations.

Cyguru

Cyguru is an all-in-one, cloud-based AI Security Operation Center (SOC). The SOC is the cornerstone of its service and provides AI-powered attack detection, continuous monitoring for vulnerabilities and misconfigurations, compliance assurance, SecPedia (a cybersecurity knowledge hub), and advanced ML and AI detection. Cyguru's AI-Powered Analyst promptly alerts users to any suspicious behavior or activity that demands attention. The platform is accessible to everyone, with up to three free servers and subsequent pricing that is more than 85% below the industry average.

DevSecCops

DevSecCops is an AI-driven automation platform designed to revolutionize DevSecOps processes. The platform offers solutions for cloud optimization, machine learning operations, data engineering, application modernization, infrastructure monitoring, security, compliance, and more. With features like one-click infrastructure security scan, AI engine security fixes, compliance readiness using AI engine, and observability, DevSecCops aims to enhance developer productivity, reduce cloud costs, and ensure secure and compliant infrastructure management. The platform leverages AI technology to identify and resolve security issues swiftly, optimize AI workflows, and provide cost-saving techniques for cloud architecture.

Tracecat

Tracecat is an open-source security automation platform that helps you automate security alerts, build AI-assisted workflows, orchestrate alerts, and close cases fast. It is an alternative to Tines and Splunk SOAR that is built for builders and free to experiment with. You can deploy Tracecat on your own infrastructure or use Tracecat Cloud with no maintenance overhead. Tracecat is Apache-2.0 licensed, with an open vision, open community, and open development, so you can have your say in the future of security automation. Tracecat is no-code first but also supports code: its click-and-drag workflow builder lets you automate SecOps by combining pre-built actions (API calls, webhooks, data transforms, AI tasks, and more) into workflows, and you can customize with Python without vendor lock-in. A built-in case management system lets you open cases directly from workflows and track and manage security incidents in one platform.

Akismet

Akismet is a powerful anti-spam solution that uses advanced machine learning and AI to protect websites from all forms of spam, including comment spam, form submissions, and forum bots. With an accuracy rate of 99.99%, Akismet analyzes user-submitted text in real time, allowing legitimate submissions through while blocking spam. This automated filtering saves users time and money, as they no longer need to manually review submissions or worry about the financial risks associated with spam attacks. Akismet is trusted by some of the biggest companies in the world and is proven to increase conversion rates by eliminating CAPTCHA and providing peace of mind to security teams.

Immunifai

Immunifai is a cybersecurity company that provides AI-powered threat detection and response solutions. The company's mission is to make the world a safer place by protecting organizations from cyberattacks. Immunifai's platform uses machine learning and artificial intelligence to identify and respond to threats in real time. The company's solutions are used by a variety of organizations, including Fortune 500 companies, government agencies, and financial institutions.

DataVisor

DataVisor is a modern, end-to-end fraud and risk SaaS platform powered by AI and advanced machine learning for financial institutions and large organizations. It helps businesses combat various fraud and financial crimes in real time. DataVisor's platform provides comprehensive fraud detection and prevention capabilities, including account onboarding, application fraud, ATO prevention, card fraud, check fraud, FinCrime and AML, and ACH and wire fraud detection. The platform is designed to adapt to new fraud incidents immediately with real-time data signal orchestration and end-to-end workflow automation, minimizing fraud losses and maximizing fraud detection coverage.

DataVisor

DataVisor is a modern, end-to-end fraud and risk SaaS platform powered by AI and advanced machine learning for financial institutions and large organizations. It provides a comprehensive suite of capabilities to combat a variety of fraud and financial crimes in real time. DataVisor's hyper-scalable architecture lets you combine transaction logs, user profiles, dark web data, and other identity signals with real-time analytics to deliver high-quality detection within 100-300 ms. The platform is optimized to support the largest enterprises with ultra-low latency. DataVisor enables early detection of and adaptive response to new and evolving fraud attacks by combining rules, machine learning, customizable workflows, and device and behavior signals in an all-in-one platform. Leading with an unsupervised approach, DataVisor is the only proven, production-ready solution that can proactively stop fraud attacks before they result in financial loss.

Gamma.AI

Gamma.AI is a cloud-based data loss prevention (DLP) solution that uses artificial intelligence (AI) to protect sensitive data in SaaS applications. It provides real-time data discovery and classification, user behavior analytics, and automated remediation capabilities. Gamma.AI is designed to help organizations meet compliance requirements and protect their data from unauthorized access, theft, and loss.

CrowdStrike

CrowdStrike is a leading cybersecurity platform that uses artificial intelligence (AI) to protect businesses from cyber threats. The platform provides a unified approach to security, combining endpoint security, identity protection, cloud security, and threat intelligence into a single solution. CrowdStrike's AI-powered technology detects and responds to threats in real time, giving businesses the protection they need against evolving threats.

CrowdStrike

CrowdStrike is a cloud-based cybersecurity platform that provides endpoint protection, threat intelligence, and incident response services. It uses artificial intelligence (AI) to detect and prevent cyberattacks. CrowdStrike's platform is designed to be scalable and easy to use, and it can be deployed on-premises or in the cloud. CrowdStrike has a global customer base of over 23,000 organizations, including many Fortune 500 companies.

CodeDefender α

CodeDefender α is an AI-powered tool that helps developers and non-developers improve code quality and security. It integrates with popular IDEs like Visual Studio, VS Code, and IntelliJ, providing real-time code analysis and suggestions. CodeDefender supports multiple programming languages, including C/C++, C#, Java, Python, and Rust. It can detect a wide range of code issues, including security vulnerabilities, performance bottlenecks, and correctness errors. Additionally, CodeDefender offers features like custom prompts, multiple models, and workspace/solution understanding to enhance code comprehension and knowledge sharing within teams.

Research Center Trustworthy Data Science and Security

The Research Center Trustworthy Data Science and Security is a hub for interdisciplinary research focusing on building trust in artificial intelligence, machine learning, and cyber security. The center aims to develop trustworthy intelligent systems through research in trustworthy data analytics, explainable machine learning, and privacy-aware algorithms. By addressing the intersection of technological progress and social acceptance, the center seeks to enable private citizens to understand and trust technology in safety-critical applications.

Prompt Security

Prompt Security is a platform that secures all uses of Generative AI in the organization: from tools used by your employees to your customer-facing apps.

1 - Open Source Tools

awesome-MLSecOps

Awesome MLSecOps is a curated list of open-source tools, resources, and tutorials for MLSecOps (Machine Learning Security Operations). It includes a wide range of security tools and libraries for protecting machine learning models against adversarial attacks, as well as resources for AI security, data anonymization, model security, and more. The repository aims to provide a comprehensive collection of tools and information to help users secure their machine learning systems and infrastructure.

20 - OpenAI GPTs

CISO GPT

Specialized LLM in computer security, acting as a CISO with 20 years of experience, providing precise, data-driven technical responses to enhance organizational security.

GPT Auth™

This is a demonstration of GPT Auth™, an authentication system designed to protect your customized GPT.

ethicallyHackingspace (eHs)® METEOR™ STORM™

Multiple Environment Threat Evaluation of Resources (METEOR)™ Space Threats and Operational Risks to Mission (STORM)™ non-profit product AI co-pilot

Cyber Threat Intelligence

An automated cyber threat intelligence expert configured and trained by Bob Gourley. Please provide feedback. Find Bob on X at @bobgourley.

HackingPT

HackingPT is a specialized language model focused on cybersecurity and penetration testing, committed to providing precise and in-depth insights in these fields.

SSLLMs Advisor

Helps you build logic security into your GPT's custom instructions. Documentation: https://github.com/infotrix/SSLLMs---Semantic-Secuirty-for-LLM-GPTs

fox8 botnet paper

A helpful guide for understanding the paper "Anatomy of an AI-powered malicious social botnet"

Prompt Injection Detector

A GPT used to classify prompts as valid inputs or injection attempts. JSON output.

IoE - Internet of Everything Advisor

Advanced IoE-focused GPT, excelling in domain knowledge, security awareness, and problem-solving, powered by OpenAI

Easily Hackable GPT

A regular GPT to try to hack with a prompt injection. Ask for my instructions and see what happens.