Best AI tools for GPU Acceleration Engineer

20 - AI tool Sites

Faraday.dev

Faraday.dev is an offline-first, zero-configuration, desktop app that supports chatting with AI Characters. With Faraday.dev, you can run over 100 different open-source LLMs all on your machine without needing to touch the command line. Faraday.dev also supports Llama 2 models and GPU acceleration.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, an AI chat app, a code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI lets users leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

Alluxio

Alluxio is a data orchestration platform designed for the cloud, offering seamless access, management, and running of AI/ML workloads. Positioned between compute and storage, Alluxio provides a unified solution for enterprises to handle data and AI tasks across diverse infrastructure environments. The platform accelerates model training and serving, maximizes infrastructure ROI, and ensures seamless data access. Alluxio addresses challenges such as data silos, low performance, data engineering complexity, and high costs associated with managing different tech stacks and storage systems.

NVIDIA

NVIDIA is a world leader in artificial intelligence computing. It designs graphics cards and GPUs and provides solutions for cloud services, data centers, embedded systems, and gaming. Its wide range of products and services includes AI-driven platforms for life sciences research, end-to-end AI platforms, generative AI deployment, advanced simulation integration, and more. NVIDIA focuses on modernizing data centers with AI and accelerated computing, offering enterprise AI platforms, supercomputers, advanced networking solutions, and professional workstations. It also provides software tools for AI development, data center management, GPU monitoring, and more.

BuildAi

BuildAi is an AI tool designed to provide the lowest-cost GPU cloud for AI training on the market. The platform is powered by renewable energy, enabling companies to train AI models at a significantly reduced cost. BuildAi offers interruptible pricing, short-term reserved capacity, and high-uptime pricing options. The application focuses on optimizing infrastructure for training and fine-tuning machine learning models, not inference, and aims to decrease the impact of computing on the planet. With features like data transfer support, SSH access, and monitoring tools, BuildAi offers a comprehensive solution for ML teams.

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

Backyard AI

Backyard AI is an AI-powered platform that offers immersive text adventures with AI characters, enabling users to engage in chat and interactive stories without filters or censorship. Users can bring AI characters to life with expressive customizations and intricate worlds. The platform provides a Desktop App for running AI models locally and a Cloud service for fast and powerful AI models accessible from anywhere. Backyard AI prioritizes privacy and control by storing all data locally on the device and encrypting data at rest. It offers a range of language models and features like mobile tethering, automatic GPU acceleration, and secure chat in the browser.

RemoveBGVideo

RemoveBGVideo is an AI-powered video background remover application that enables users to instantly remove backgrounds from videos without the need for green screens. The tool utilizes advanced AI technology to provide professional results with no technical skills required. It supports multiple video formats, offers fast processing, high-quality output up to 4K resolution, and flexible background customization options. RemoveBGVideo is a specialized solution dedicated to video background removal, offering users a cost-effective and efficient way to enhance their video content.

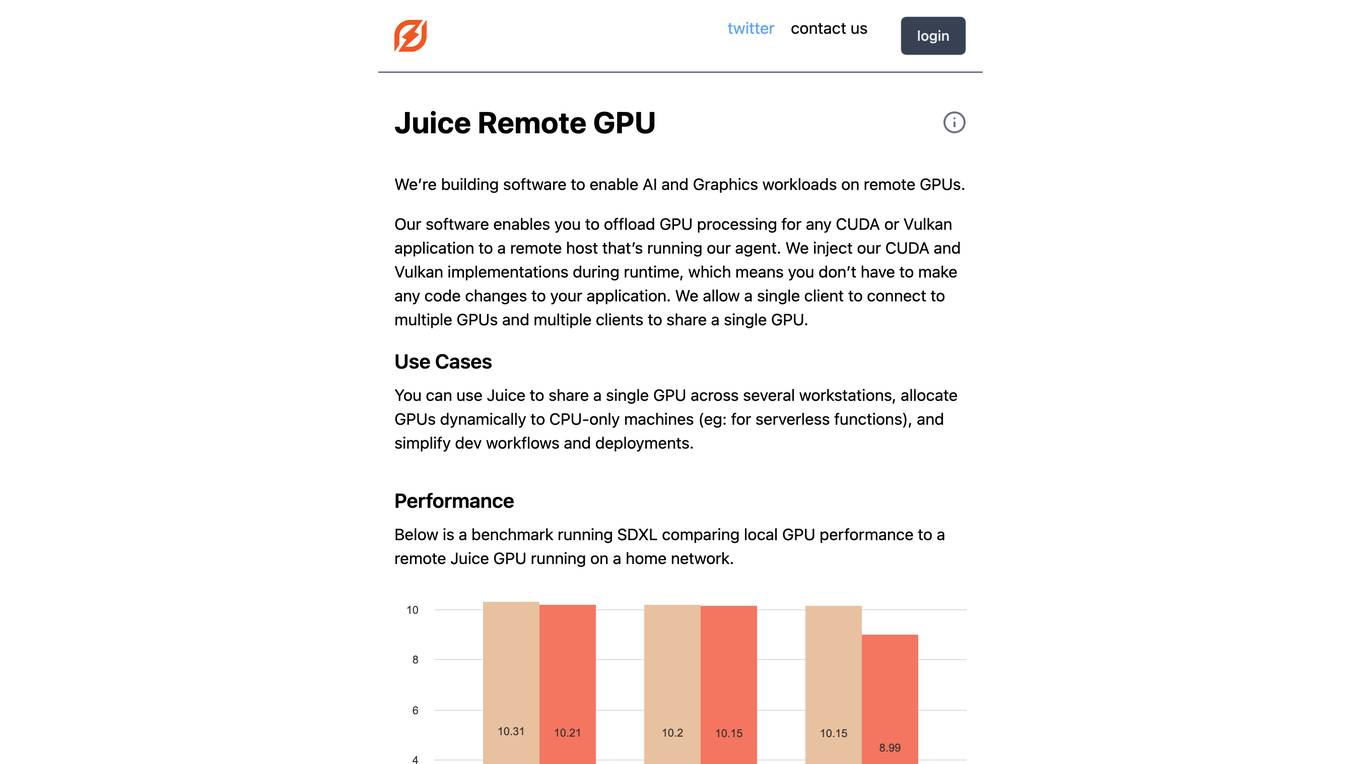

Juice Remote GPU

Juice Remote GPU is software that enables AI and graphics workloads on remote GPUs. It allows users to offload GPU processing for any CUDA or Vulkan application to a remote host running the Juice agent. The software injects CUDA and Vulkan implementations at runtime, eliminating the need for code changes in the application. Juice supports multiple clients connecting to multiple GPUs as well as multiple clients sharing a single GPU. It is useful for sharing a single GPU across multiple workstations, allocating GPUs dynamically to CPU-only machines, and simplifying development workflows and deployments. Juice Remote GPU performs within 5% of a local GPU when running in the same datacenter. It supports various APIs, including CUDA, Vulkan, DirectX, and OpenGL, and is compatible with PyTorch and TensorFlow. The team behind Juice Remote GPU consists of engineers from Meta, Intel, and the gaming industry.
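One way to check a claim like "within 5% of a local GPU" on your own workload is to time the same job locally and remotely and compare the results. The sketch below is only an illustration: the function names (`median_runtime`, `relative_overhead`, `within_budget`) and the default 5% budget are assumptions made for this example, not part of Juice or any vendor tooling, and it uses only the Python standard library.

```python
import statistics
import time
from typing import Callable


def median_runtime(workload: Callable[[], object], repeats: int = 5) -> float:
    """Return the median wall-clock time (seconds) of `workload` over `repeats` runs."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def relative_overhead(local_seconds: float, remote_seconds: float) -> float:
    """Fractional slowdown of the remote run relative to the local run."""
    if local_seconds <= 0:
        raise ValueError("local_seconds must be positive")
    return (remote_seconds - local_seconds) / local_seconds


def within_budget(local_seconds: float, remote_seconds: float,
                  budget: float = 0.05) -> bool:
    """True if the remote run is at most `budget` (assumed 5%) slower than local."""
    return relative_overhead(local_seconds, remote_seconds) <= budget
```

In practice you would pass your own GPU job (hypothetical here) to `median_runtime` once on a machine with a local GPU and once through the remote setup, then feed both medians to `within_budget`.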

VESSL AI

VESSL AI is a platform offering Liquid AI Infra & Persistent GPU Cloud services, allowing users to easily access and utilize GPUs for running AI workloads. It provides a seamless experience from zero to running AI workloads, catering to AI startups, enterprise AI teams, and research & academia. VESSL AI offers GPU products for every stage, with options like spot, on-demand, and reserved capacity, along with features like multi-cloud failover, pay-as-you-go pricing, and production-ready reliability. The platform is designed to help users scale their AI projects efficiently and effectively.

N00MKRAD

N00MKRAD is a free AI image generator that lets users create images locally on their own GPU. It is a user-friendly tool that is compatible with all recent AMD, NVIDIA, and Intel GPUs.

NVIDIA

NVIDIA is a world leader in artificial intelligence computing. The company's products and services are used by businesses and governments around the world to develop and deploy AI applications. NVIDIA's AI platform includes hardware, software, and tools that make it easy to build and train AI models. The company also offers a range of cloud-based AI services that make it easy to deploy and manage AI applications. NVIDIA's AI platform is used in a wide variety of industries, including healthcare, manufacturing, retail, and transportation. The company's AI technology is helping to improve the efficiency and accuracy of a wide range of tasks, from medical diagnosis to product design.

Cirrascale Cloud Services

Cirrascale Cloud Services is an AI tool that offers cloud solutions for Artificial Intelligence applications. The platform provides a range of cloud services and products tailored for AI innovation, including NVIDIA GPU Cloud, AMD Instinct Series Cloud, Qualcomm Cloud, Graphcore, Cerebras, and SambaNova. Cirrascale's AI Innovation Cloud enables users to test and deploy on leading AI accelerators in one cloud, democratizing AI by delivering high-performance AI compute and scalable deep learning solutions. The platform also offers professional and managed services, tailored multi-GPU server options, and high-throughput storage and networking solutions to accelerate development, training, and inference workloads.

NVIDIA

NVIDIA is a world leader in artificial intelligence computing, providing hardware and software solutions for gaming, entertainment, data centers, edge computing, and more. Their platforms like Jetson and Isaac enable the development and deployment of AI-powered autonomous machines. NVIDIA's AI applications span various industries, from healthcare to manufacturing, and their technology is transforming the world's largest industries and impacting society profoundly.

Novita AI

Novita AI is an AI cloud platform offering Model APIs, Serverless, and GPU Instance services in a cost-effective and integrated manner to accelerate AI businesses. It provides optimized models for high-quality dialogue use cases, full spectrum AI APIs for image, video, audio, and LLM applications, serverless auto-scaling based on demand, and customizable GPU solutions for complex AI tasks. The platform also includes a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable services.

Nebius AI

Nebius AI is an AI-centric cloud platform designed to handle intensive workloads efficiently. It offers a range of advanced features to support various AI applications and projects. The platform ensures high performance and security for users, enabling them to leverage AI technology effectively in their work. With Nebius AI, users can access cutting-edge AI tools and resources to enhance their projects and streamline their workflows.

FriendliAI

FriendliAI is a generative AI infrastructure company that offers efficient, fast, and reliable generative AI inference solutions for production. Their cutting-edge technologies enable groundbreaking performance improvements, cost savings, and lower latency. FriendliAI provides a platform for building and serving compound AI systems, deploying custom models effortlessly, and monitoring and debugging model performance. The application guarantees consistent results regardless of the model used and offers seamless data integration for real-time knowledge enhancement. With a focus on security, scalability, and performance optimization, FriendliAI empowers businesses to scale with ease.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, comprehensive AI life-cycle support, and strategic resource management. The platform significantly enhances GPU efficiency and workload capacity by pooling resources across environments and utilizing advanced orchestration. NVIDIA Run:ai provides unparalleled flexibility and adaptability, supporting public clouds, private clouds, hybrid environments, or on-premises data centers.

GPUDeploy

GPUDeploy is an AI tool that offers low-cost on-demand GPUs for machine learning and AI tasks. Users can easily connect their GPUs and launch GPU instances that are preconfigured for machine learning tasks. The platform provides various GPU configurations with different specifications to cater to diverse computing needs. GPUDeploy also allows users to earn by renting out idle GPUs, making it a versatile solution for both individuals and AI companies.

GrapixAI

GrapixAI is a leading provider of low-cost cloud GPU rental services and AI server solutions. The company's focus on flexibility, scalability, and cutting-edge technology enables a variety of AI applications in both local and cloud environments. GrapixAI offers the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a powerful GPU search console, customizable pricing options, various security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

1 - Open Source Tools

bmf

BMF (Babit Multimedia Framework) is a cross-platform, multi-language, customizable multimedia processing framework developed by ByteDance. It offers native compatibility with Linux, Windows, and macOS; Python, Go, and C++ APIs; and high performance with strong GPU acceleration. BMF allows developers to extend its features independently and provides efficient data conversion across popular frameworks and hardware devices. BMFLite is a lightweight client-side framework used in apps like Douyin/Xigua, serving over one billion users daily. BMF is widely used in video streaming, live transcoding, cloud editing, and mobile pre/post-processing scenarios.

2 - OpenAI GPTs

CUDA GPT

Expert in CUDA for configuration, installation, troubleshooting, and programming.