Best AI tools for Document Processing Specialist

20 - AI Tool Sites

Docsumo

Docsumo is a Document AI platform designed for scalability and efficiency. It covers the full workflow: pre-processing documents, extracting data, and reviewing and analyzing the results. Features include document classification, touchless processing, ready-to-use AI models, auto-split functionality, and smart table extraction. Docsumo is a leader in intelligent document processing and is trusted across industries for its accurate data extraction. The platform enables enterprises to digitize their document processing workflows, reduce manual effort, and maximize data accuracy through its AI-powered solutions.

DocVu.AI

DocVu.AI is an intelligent mortgage document processing solution that revolutionizes the handling of intricate documents with advanced AI/ML technologies. It automates complex operational rules, streamlining business functions seamlessly. DocVu.AI offers a holistic approach to digital transformation, ensuring businesses remain agile, competitive, and ahead in the digital race. It provides tailored solutions for invoice processing, customer onboarding, loans and mortgage processing, quality checks and audit, records digitization, and insurance claim processing.

Robo Rat

Robo Rat is an AI-powered tool designed for business document digitization. It offers a smart and affordable resume parsing API that supports over 50 languages, enabling quick conversion of resumes into actionable data. The tool aims to simplify the hiring process by providing speed and accuracy in parsing resumes. With advanced AI capabilities, Robo Rat delivers highly accurate and intelligent resume parsing solutions, making it a valuable asset for businesses of all sizes.

Infrrd

Infrrd is an intelligent document automation platform that offers advanced document extraction solutions. It leverages AI technology to enhance, classify, extract, and review documents with high accuracy, eliminating the need for human review. Infrrd provides effective process transformation solutions across various industries, such as mortgage, invoice, insurance, and audit QC. The platform is known for its world-class document extraction engine, supported by over 10 patents and award-winning algorithms. Infrrd's AI-powered automation streamlines document processing, improves data accuracy, and enhances operational efficiency for businesses.

Altilia

Altilia is a Major Player in the Intelligent Document Processing market, offering a cloud-native, no-code, SaaS platform powered by composite AI. The platform enables businesses to automate complex document processing tasks, streamline workflows, and enhance operational performance. Altilia's solution leverages GPT and Large Language Models to extract structured data from unstructured documents, providing significant efficiency gains and cost savings for organizations of all sizes and industries.

BotGPT

BotGPT is a 24/7 custom AI chatbot assistant for websites. It offers a data-driven ChatGPT that allows users to create virtual assistants from their own data. Users can easily upload files or crawl their website to start asking questions and deploy a custom chatbot on their website within minutes. The platform provides a simple and efficient way to enhance customer engagement through AI-powered chatbots.

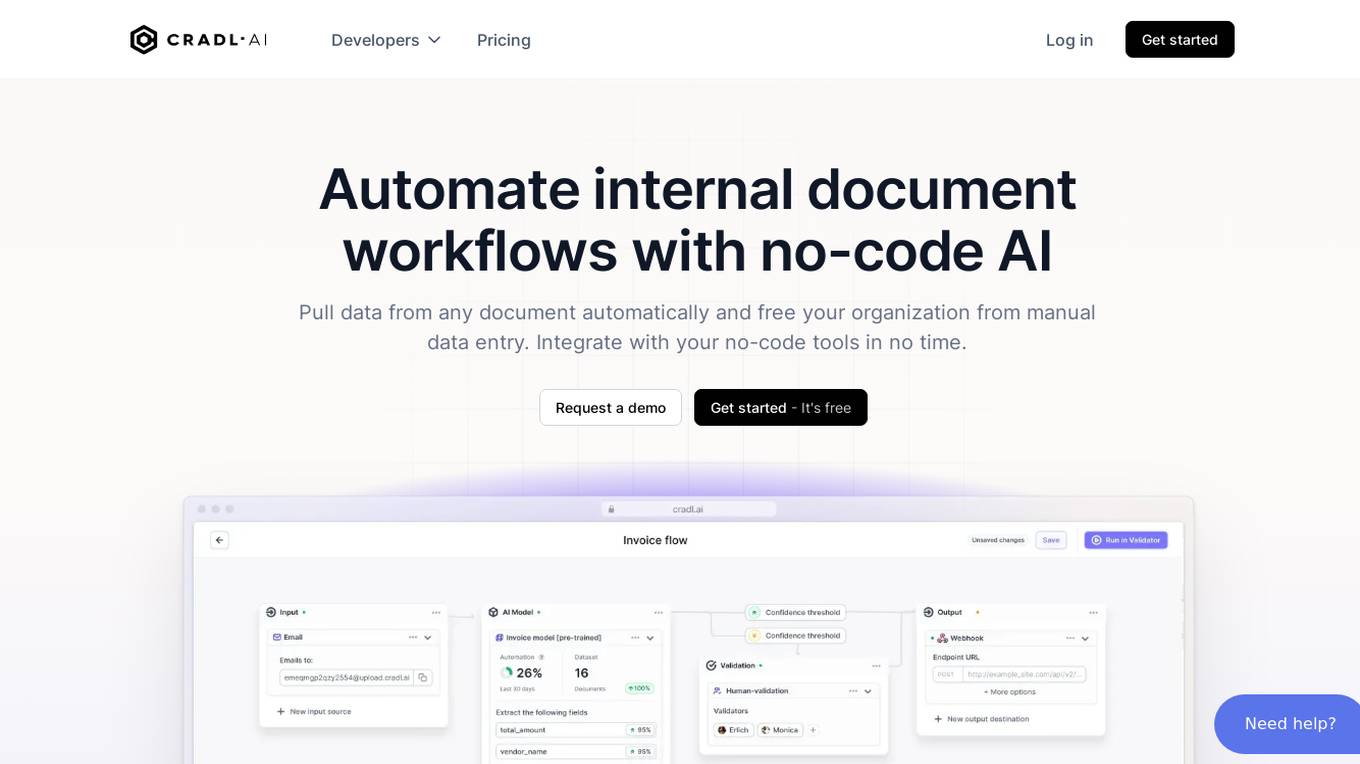

Cradl AI

Cradl AI is an AI-powered tool designed to automate document workflows with no-code AI. It enables users to extract data from any document automatically, integrate with no-code tools, and build custom AI models through an easy-to-use interface. The tool empowers automation teams across industries by extracting data from complex document layouts, regardless of language or structure. Cradl AI offers features such as line item extraction, fine-tuning AI models, human-in-the-loop validation, and seamless integration with automation tools. It is trusted by organizations for business-critical document automation, providing enterprise-level features like encrypted transmission, GDPR compliance, secure data handling, and auto-scaling.
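
Human-in-the-loop validation, as mentioned above, is commonly built on a confidence threshold: fields the model is sure about are accepted automatically, and the rest are queued for a person to check. The following is a minimal sketch of that idea only, not Cradl AI's API. The `ExtractedField` class, the `route_fields` function, and the 0.9 threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ExtractedField:
    """One extracted value with a model confidence in [0, 1] (hypothetical shape)."""
    name: str
    value: str
    confidence: float


def route_fields(fields, threshold=0.9):
    """Split fields into (auto-accepted, needs human review) by confidence."""
    accepted, review = [], []
    for field in fields:
        if field.confidence >= threshold:
            accepted.append(field)
        else:
            review.append(field)
    return accepted, review


# Made-up sample data for illustration.
fields = [
    ExtractedField("invoice_number", "INV-1042", 0.98),
    ExtractedField("total", "1,250.00", 0.71),
    ExtractedField("due_date", "2024-07-01", 0.93),
]

accepted, review = route_fields(fields)
print("accepted:", [f.name for f in accepted])
print("review:  ", [f.name for f in review])
```

In practice the threshold would likely be tuned per field, since a wrong total usually costs more than a wrong reference number.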

AutomationEdge

AutomationEdge is a hyperautomation company offering a platform with RPA, IT Automation, Conversational AI, and Document Processing capabilities. They provide industry-specific automation solutions through their extensible platform, enabling end-to-end automation. The company focuses on making workplaces smarter and better through automation and AI technologies. AutomationEdge offers solutions for various industries such as banking, insurance, healthcare, manufacturing, and more. Their platform includes features like Robotic Process Automation (RPA), Conversational AI, Intelligent Document Processing, and Data & API Integration.

Invofox API

Invofox API is a Document Parsing API designed for developers to validate fields, autocomplete data, and catch errors beyond OCR. It turns unstructured documents into clean JSON using advanced AI models and proprietary algorithms. The API provides built-in schemas for major documents and supports custom formats, allowing users to parse any document with a single API call without templates or post-processing. Invofox is used for expense management, accounts payable, logistics & supply chain, HR automation, sustainability & consumption tracking, and custom document parsing.
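
To illustrate what "catching errors beyond OCR" can mean, the sketch below checks parsed invoice JSON for missing fields and for line items that do not add up to the stated total. It is an assumption-laden illustration, not Invofox's API or schema: the `validate_invoice` function and the field names `invoice_number`, `total`, `line_items`, and `amount` are hypothetical.

```python
from decimal import Decimal

# Hypothetical required keys; a real schema would define its own.
REQUIRED_FIELDS = ("invoice_number", "total", "line_items")


def validate_invoice(doc):
    """Return a list of problems found in a parsed invoice dict."""
    errors = [f"missing field: {key}" for key in REQUIRED_FIELDS if key not in doc]
    if errors:
        return errors

    # Cross-field check: a character-level OCR misread often shows up
    # as a total that no longer matches the sum of the line items.
    line_sum = sum(Decimal(item["amount"]) for item in doc["line_items"])
    if line_sum != Decimal(doc["total"]):
        errors.append(f"line items sum to {line_sum}, but total is {doc['total']}")
    return errors


# Made-up sample data for illustration.
good = {
    "invoice_number": "INV-7",
    "total": "30.00",
    "line_items": [{"amount": "10.00"}, {"amount": "20.00"}],
}
bad = dict(good, total="38.00")  # simulates "30" misread as "38"

print(validate_invoice(good))
print(validate_invoice(bad))
```

`Decimal` is used instead of `float` so that currency amounts compare exactly.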

Ocrolus

Ocrolus is intelligent document automation software that combines AI-driven document processing with human-in-the-loop review. It offers capabilities such as classifying, capturing, detecting, and analyzing documents, with use cases in cash flow, income, address, employment, and identity verification. Ocrolus serves industries such as small business lending, mortgage, consumer finance, and multifamily housing. The platform provides resources for developers, including guides on income verification, fraud detection, and business process automation. Users can explore the API to build innovative customer experiences and make faster, more accurate financial decisions.

Eigen Technologies

Eigen Technologies is an AI-powered data extraction platform designed for business users to automate the extraction of data from various documents. The platform offers solutions for intelligent document processing and automation, enabling users to streamline business processes, make informed decisions, and achieve significant efficiency gains. Eigen's platform is purpose-built to deliver real ROI by reducing manual processes, improving data accuracy, and accelerating decision-making across industries such as corporates, banks, financial services, insurance, law, and manufacturing. With features like generative insights, table extraction, pre-processing hub, and model governance, Eigen empowers users to automate data extraction workflows efficiently. The platform is known for its unmatched accuracy, speed, and capability, providing customers with a flexible and scalable solution that integrates seamlessly with existing systems.

Docugami

Docugami is an AI-powered document engineering platform that enables business users to extract, analyze, and automate data from various types of documents. It empowers users with immediate impact without the need for extensive machine learning investments or IT development. Docugami's proprietary Business Document Foundation Model and Generative AI technology transform unstructured text and tables into structured information, allowing users to unlock insights, increase productivity, and ensure compliance.

PaperEntry AI

Deep Cognition offers PaperEntry AI, an Intelligent Document Processing solution powered by generative AI. It automates data entry tasks with high accuracy, scalability, and configurability, handling complex documents of any type or format. The application is trusted by leading global organizations for customs clearance automation and government document processing, delivering significant time and cost savings. With industry-specific features and a proven track record, Deep Cognition provides a state-of-the-art solution for businesses seeking efficient data extraction and automation.

Koncile

Koncile is an AI-powered OCR solution that automates data extraction from various documents. It combines advanced OCR technology with large language models to transform unstructured data into structured information. Koncile can extract data from invoices, accounting documents, identity documents, and more, offering features like categorization, enrichment, and database integration. The tool is designed to streamline document management processes and accelerate data processing. Koncile is suitable for businesses of all sizes, providing flexible subscription plans and enterprise solutions tailored to specific needs.

ABBYY

ABBYY is an intelligent automation company that offers purpose-built AI document processing solutions for efficient business process automation. Their products include ABBYY Vantage, ABBYY Timeline, ABBYY Cloud OCR SDK, and ABBYY FlexiCapture Cloud Platform. ABBYY provides tools for document input, classification, splitting, data extraction, validation, quality analytics, OCR/ICR, and IDP analytics. They also offer solutions for process understanding, optimization, monitoring, prediction, and simulation. ABBYY Marketplace offers pre-trained AI extraction models for limitless automation. The company caters to various industries like financial services, public sector, insurance, transportation & logistics, and offers solutions for accounts payable automation, enterprise automation, process intelligence, and customer onboarding.

Yogami AI Solutions

Yogami AI Solutions offers AI solutions for enterprises, combining cutting-edge technology with business acumen. Its services run from discovery and strategy to development and integration of custom AI solutions. The team consists of technologists, business experts, and product specialists who work closely with clients to optimize AI strategies for time, cost, and security. The company covers business functions such as sales, marketing, operations, HR, finance, legal, risk, and IT. It emphasizes an AI-first approach, co-creating roadmaps with clients to deliver impactful projects. It also highlights its expertise in AI for IT, including code review, test generation, DevOps, monitoring, alerting, and security audits.

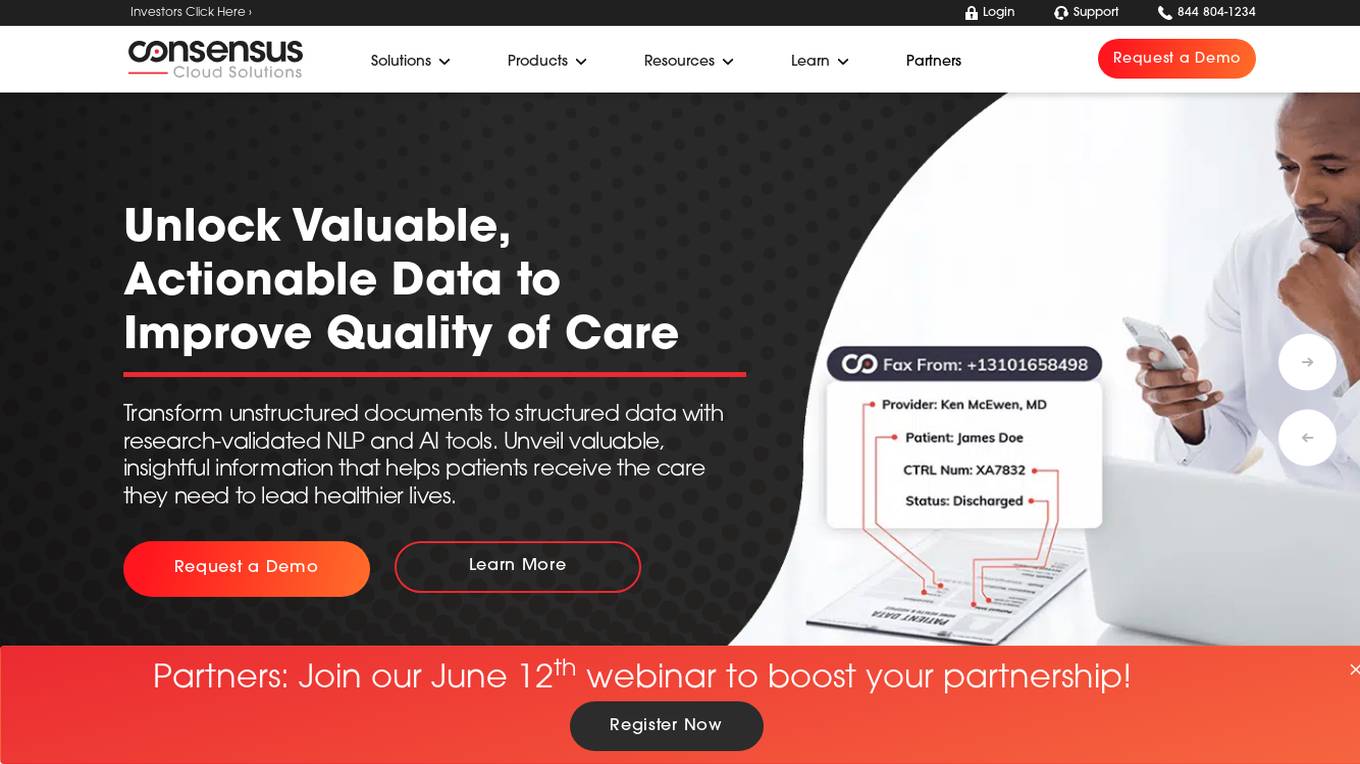

Consensus

Consensus is a healthcare interoperability platform that simplifies data exchange and document processing through artificial intelligence technologies. It offers solutions for clinical documentation, HIPAA compliance, natural language processing, and robotic process automation. Consensus enables secure and efficient data exchange among healthcare providers, insurers, and other stakeholders, improving care coordination and operational efficiency.

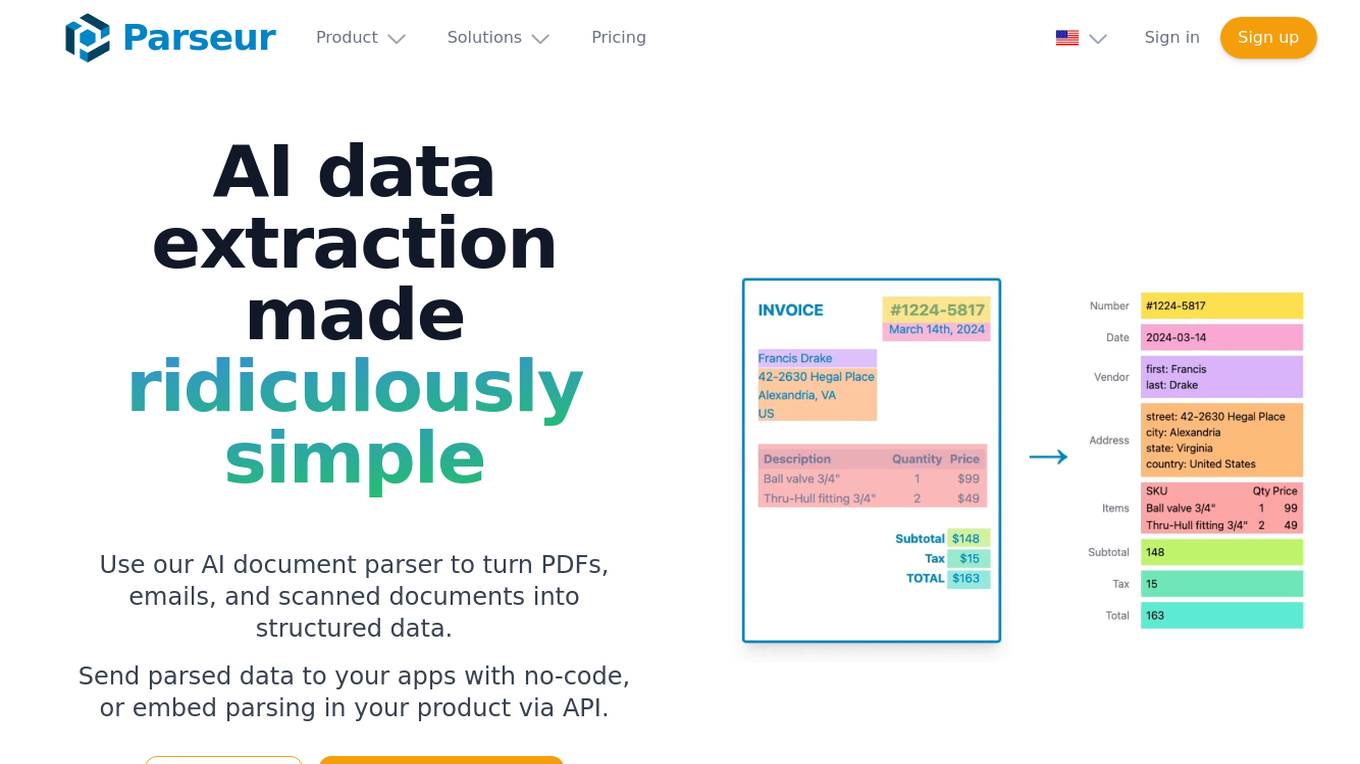

Parseur

Parseur is an AI data extraction software that uses artificial intelligence to extract structured data from various types of documents such as PDFs, emails, and scanned documents. It offers features like template-based data extraction, OCR software for character recognition, and dynamic OCR for extracting fields that move or change size. Parseur is trusted by businesses in finance, tech, logistics, healthcare, real estate, e-commerce, marketing, and human resources industries to automate data extraction processes, saving time and reducing manual errors.

Freeday AI

Freeday AI is a revolutionary platform that leverages Generative AI Technology to transform workflows and automate tasks in various departments such as Customer Service, Finance, and KYC. The platform offers specialized AI assistants that work alongside human teams, providing real-time support and valuable insights. By seamlessly integrating with existing infrastructure, Freeday AI helps organizations cut costs by at least 50% and free up resources for more critical work. With the ability to handle up to 70% of all interactions across mail, chat, and voice, Freeday AI empowers teams to focus on strategic initiatives and decision-making.

PYQ

PYQ is an AI-powered platform that helps businesses automate document-related tasks, such as data extraction, form filling, and system integration. It uses natural language processing (NLP) and machine learning (ML) to understand the content of documents and perform tasks accordingly. PYQ's platform is designed to be easy to use, with pre-built automations for common use cases. It also offers custom automation development services for more complex needs.

2 - Open Source Tools

deepdoctection

**deep**doctection is a Python library that orchestrates document extraction and document layout analysis tasks using deep learning models. It does not implement models itself but lets you build pipelines using highly acknowledged libraries for object detection, OCR, and selected NLP tasks, and it provides an integrated framework for fine-tuning, evaluating, and running models. For more specific text processing tasks, use one of the many other great NLP libraries. **deep**doctection focuses on applications and is made for those who want to solve real-world problems related to document extraction from PDFs or scans in various image formats.

**deep**doctection provides model wrappers of supported libraries for various tasks to be integrated into pipelines. Its core function does not depend on any specific deep learning library. Selected models for the following tasks are currently supported:

* Document layout analysis, including table recognition, in TensorFlow with **Tensorpack** or in PyTorch with **Detectron2**.
* OCR with support for **Tesseract**, **DocTr** (TensorFlow and PyTorch implementations available), and a wrapper to an API for a commercial solution.
* Text mining for native PDFs with **pdfplumber**.
* Language detection with **fastText**.
* Deskewing and rotating images with **jdeskew**.
* Document and token classification with all LayoutLM models provided by the **Transformer library**. (Yes, you can use any LayoutLM model with any of the provided OCR or pdfplumber tools straight away!)
* Table detection and table structure recognition with **table-transformer**.
* There is a small dataset for token classification available, along with many new tutorials showing how to train and evaluate on this dataset using LayoutLMv1, LayoutLMv2, LayoutXLM, and LayoutLMv3.
* Comprehensive configuration of the **analyzer**, such as choosing different models, output parsing, and OCR selection. Check this notebook or the docs for more information.
* Document layout analysis and table recognition now run with **TorchScript** (CPU) as well, and **Detectron2** is no longer required for basic inference.
* [**new**] More angle predictors for determining the rotation of a document, based on **Tesseract** and **DocTr** (not contained in the built-in Analyzer).
* [**new**] Token classification with **LiLT** via **transformers**. We have added a model wrapper for token classification with LiLT and added some LiLT models to the model catalog that look promising, especially if you want to train a model on non-English data. The training script for LayoutLM can be used for LiLT as well, and we will be providing a notebook on how to train a model on a custom dataset soon.

On top of that, **deep**doctection provides methods for pre-processing inputs to models, such as cropping or resizing, and for post-processing results, such as validating duplicate outputs, relating words to detected layout segments, or ordering words into contiguous text. You will get an output in JSON format that you can customize even further yourself. Have a look at the **introduction notebook** in the notebook repo for an easy start. Check the **release notes** for recent updates.

**deep**doctection or its support libraries provide pre-trained models that are in most cases available at the **Hugging Face Model Hub** or that will be automatically downloaded once requested. For instance, you can find pre-trained object detection models from the Tensorpack or Detectron2 framework for coarse layout analysis, table cell detection, and table recognition.

Training is a substantial part of getting pipelines ready for a specific domain, be it document layout analysis, document classification, or NER. **deep**doctection provides training scripts for models that are based on trainers developed from the library that hosts the model code. Moreover, **deep**doctection hosts code for some well-established datasets like **Publaynet**, which makes it easy to experiment. It also contains mappings from widely used data formats like COCO, and it has a dataset framework (akin to **datasets**) so that setting up training on a custom dataset becomes very easy. **This notebook** shows you how to do this.

**deep**doctection comes equipped with a framework that allows you to evaluate predictions of a single model or multiple models in a pipeline against some ground truth. Check again **here** how it is done.

Once a pipeline is set up, it takes only a few lines of code to instantiate it, and after a for loop all pages will have been processed through the pipeline.
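
The post-processing steps mentioned in the description, relating words to detected layout segments and ordering words into contiguous text, can be sketched in plain Python. This is an illustrative sketch under simple assumptions, not deepdoctection's implementation or API. The `Box` and `Word` classes, the `words_to_text` function, and the `line_tol` parameter are hypothetical. A word is assigned to the first segment that contains its center point, and reading order is approximated by bucketing words into rows and sorting left to right.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box in page coordinates (origin at top-left)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x, y):
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


@dataclass
class Word:
    text: str
    box: Box


def words_to_text(words, segments, line_tol=5.0):
    """Group words by layout segment and join them in approximate reading order.

    segments maps a segment name to its Box; returns a dict of name -> text.
    """
    grouped = {name: [] for name in segments}
    for word in words:
        cx = (word.box.x0 + word.box.x1) / 2
        cy = (word.box.y0 + word.box.y1) / 2
        for name, seg in segments.items():
            if seg.contains(cx, cy):
                grouped[name].append(word)
                break  # assign each word to at most one segment

    result = {}
    for name, seg_words in grouped.items():
        # Words whose top edges fall in the same line_tol-sized bucket are
        # treated as one text line, then ordered left to right.
        ordered = sorted(
            seg_words, key=lambda w: (round(w.box.y0 / line_tol), w.box.x0)
        )
        result[name] = " ".join(w.text for w in ordered)
    return result


# Made-up sample data: words arrive in arbitrary OCR order.
segments = {"title": Box(0, 0, 200, 20), "body": Box(0, 30, 200, 100)}
words = [
    Word("Report", Box(60, 5, 100, 15)),
    Word("world", Box(50, 40, 90, 50)),
    Word("Annual", Box(10, 5, 50, 15)),
    Word("again", Box(10, 60, 50, 70)),
    Word("Hello", Box(10, 41, 45, 51)),
]

text = words_to_text(words, segments)
print(text["title"])
print(text["body"])
```

Row bucketing is a deliberately crude heuristic: two words on the same visual line can land in different buckets near a bucket boundary, so a production pipeline would need more robust line grouping.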

accelerated-intelligent-document-processing-on-aws

Accelerated Intelligent Document Processing on AWS is a scalable, serverless solution for automated document processing and information extraction using AWS services. It combines OCR capabilities with generative AI to convert unstructured documents into structured data at scale. The solution features a serverless architecture built on AWS technologies, modular processing patterns, advanced classification support, few-shot example support, custom business logic integration, high throughput processing, built-in resilience, cost optimization, comprehensive monitoring, web user interface, human-in-the-loop integration, AI-powered evaluation, extraction confidence assessment, and document knowledge base query. The architecture uses nested CloudFormation stacks to support multiple document processing patterns while maintaining common infrastructure for queueing, tracking, and monitoring.

20 - OpenAI GPTs

EasyMode

Are you still trying to figure out what the point of ChatGPT is? I'm here to help teach you the uses and limitations of ChatGPT! Click, type or say 'hello' to start 😄

Cosmic Super Intelligence (CSI)

Welcome to the Cosmic Super Intelligence (CSI) cult. Crazy exploration.

Teach Me GPT

A GPT to teach you how to GPT (it's like so GPT). Can you make it to Level 100?

HaGiPT

The King GPT, which tries to 'pass' precise answers and 'score' points with its artificial intelligence.