Best AI tools for Cloud Software Engineer


20 - AI tool Sites

BugFree.ai

BugFree.ai is an AI-powered platform for practicing system design and behavioral interviews, similar to LeetCode. The platform offers a range of features to assist users in preparing for technical interviews, including mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

Harness

Harness is an AI-driven software delivery platform that empowers software engineering teams with AI-infused technology for seamless software delivery. It offers a single platform for all software delivery needs, including DevOps modernization, continuous delivery, GitOps, feature flags, infrastructure as code management, chaos engineering, service reliability management, secure software delivery, cloud cost optimization, and more. Harness aims to simplify the developer experience by providing actionable insights on SDLC, secure software supply chain assurance, and AI development assistance throughout the software delivery lifecycle.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

Frugal

Frugal is an intelligent application cost engineering platform that optimizes code to reduce cloud costs automatically. It is the first AI-powered cost optimization platform built for engineers, empowering them to find and fix inefficiencies in code that drain cloud budgets. The platform aims to reinvent cost engineering by enabling developers to reduce application costs and improve cloud efficiency through automated identification and resolution of wasteful practices.

HCLSoftware

HCLSoftware is a leading provider of software solutions for digital transformation, data and analytics, AI and intelligent automation, enterprise security, and cloud computing. The company's products and services help organizations of all sizes to improve their business outcomes and achieve their digital transformation goals.

Microsoft 365 Copilot

Microsoft 365 Copilot is an AI-powered application designed to be your everyday AI companion, helping you understand your work, stay focused, and move from idea to impact with ease. It offers a suite of productivity tools and cloud-based services, combining familiar applications like Word, Excel, and PowerPoint with services like OneDrive, Teams, and Outlook. The application includes features such as AI Image Generator, AI Image Editor, AI Chat Agents, and Daily Prompt Guide to enhance productivity and creativity.

Singlebase

Singlebase.cloud is an AI-powered platform that serves as an alternative to Firebase and Supabase. It offers a comprehensive suite of tools and services to facilitate faster development and deployment through a unified API. The platform includes features such as Vector Database, NoSQL Database, Vector Embeddings, Generative AI, RAG, Knowledge Base, File storage, and Authentication, catering to a wide range of development needs.

Microsoft Tech Community

The Microsoft Tech Community is an online forum where users can connect with experts and peers to find answers, ask questions, build skills, and accelerate their digital transformation with the Microsoft Cloud. It offers a variety of resources, including discussions, blogs, events, and learning materials, on a wide range of topics related to Microsoft products and technologies.

Voximplant

Voximplant is a cloud communications platform that provides a range of tools and services for businesses to build and scale their communications solutions. The platform includes a variety of features such as voice, video, messaging, natural language processing, and SIP trunking. Voximplant also offers a no-code drag-and-drop contact center solution called Voximplant Kit, which is designed to help businesses improve customer experience and automate processes. Voximplant is used by millions of users worldwide and is trusted by companies such as Airbnb, Uber, and Salesforce.

Prodvana

Prodvana is an intelligent deployment platform that helps businesses automate and streamline their software deployment process. It provides a variety of features to help businesses improve the speed, reliability, and security of their deployments. Prodvana is a cloud-based platform that can be used with any type of infrastructure, including on-premises, hybrid, and multi-cloud environments. It is also compatible with a wide range of DevOps tools and technologies.

Prodvana's key features include:

- Intent-based deployments: Prodvana uses intent-based deployment technology to automate the deployment process. Businesses specify their deployment goals, and Prodvana automatically generates and executes the steps needed to achieve them. This can save a significant amount of time and effort.
- Guardrails for deployments: Prodvana provides a variety of guardrails to help businesses ensure the security and reliability of their deployments. These include approvals, database validations, automatic deployment validation, and simple interfaces to add custom guardrails. This helps prevent errors and reduce the risk of outages.
- Frictionless DevEx: Prodvana provides a frictionless developer experience by tracking commits through the infrastructure, ensuring complete visibility beyond just Docker images. This helps developers quickly identify and resolve issues, and it makes collaboration with other team members easier.
- Intelligence with Clairvoyance: Prodvana's Clairvoyance feature gives businesses insight into the impact of their deployments before they are executed. This helps them make more informed decisions about their deployments and avoid potential problems.
- Easy integrations: Prodvana integrates with a variety of DevOps tools and technologies, which makes it easy to use with existing workflows and processes.
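To make the idea of intent-based deployment more concrete, here is a minimal sketch of the general technique: compare a desired state with the current state and derive the steps that close the gap. This is not Prodvana's API or algorithm. The `Step` type, the `plan` and `apply_steps` functions, and the service names and versions are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One action needed to move the system toward the desired state (hypothetical type)."""
    action: str   # "deploy", "update", or "remove"
    service: str
    version: str


def plan(current: dict[str, str], desired: dict[str, str]) -> list[Step]:
    """Derive the steps that turn `current` into `desired`.

    Both arguments map a service name to the version that is (or should be) running.
    """
    steps: list[Step] = []
    for service, version in sorted(desired.items()):
        if service not in current:
            steps.append(Step("deploy", service, version))
        elif current[service] != version:
            steps.append(Step("update", service, version))
    # Anything running that the desired state no longer mentions gets removed.
    for service in sorted(current.keys() - desired.keys()):
        steps.append(Step("remove", service, current[service]))
    return steps


def apply_steps(current: dict[str, str], steps: list[Step]) -> dict[str, str]:
    """Return the state that results from applying `steps` to `current`."""
    result = dict(current)
    for step in steps:
        if step.action == "remove":
            result.pop(step.service)
        else:
            result[step.service] = step.version
    return result


if __name__ == "__main__":
    # Hypothetical example data.
    current = {"api": "1.4.0", "worker": "2.0.1", "legacy-cron": "0.9.0"}
    desired = {"api": "1.5.0", "worker": "2.0.1", "web": "3.2.0"}
    for step in plan(current, desired):
        print(step.action, step.service, step.version)
```

The user only states the target (`desired`); the steps are computed rather than scripted by hand. Planning against a state that already matches the target yields no steps, which is what makes this style of deployment safe to re-run.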

Google Colab

Google Colab is a free Jupyter notebook environment that runs in the cloud. It allows you to write and execute Python code without having to install any software or set up a local environment. Colab notebooks are shareable, so you can easily collaborate with others on projects.

Fifi.ai

Fifi.ai is a managed AI cloud platform that provides users with the infrastructure and tools to deploy and run AI models. The platform is designed to be easy to use, with a focus on plug-and-play functionality. Fifi.ai also offers a range of customization and fine-tuning options, allowing users to tailor the platform to their specific needs. The platform is supported by a team of experts who can provide assistance with onboarding, API integration, and troubleshooting.

Lazy AI

Lazy AI is a platform that enables users to build full stack web applications 10 times faster by utilizing AI technology. Users can create and modify web apps with prompts and deploy them to the cloud with just one click. The platform offers a variety of features including AI Component Builder, eCommerce store creation, Crypto Arbitrage Scraper, Text to Speech Converter, Lazy Image to Video generation, PDF Chatbot, and more. Lazy AI aims to streamline the app development process and empower users to leverage AI for various tasks.

Microsoft Azure

Microsoft Azure is a cloud computing service that offers a wide range of products and solutions for businesses and developers. It provides services such as databases, analytics, compute, containers, hybrid cloud, AI, application development, and more. Azure aims to help organizations innovate, modernize, and scale their operations by leveraging the power of the cloud. With a focus on flexibility, performance, and security, Azure is designed to support a variety of workloads and use cases across different industries.

N-iX

N-iX is a global provider of software development outsourcing services with delivery centers across Europe and over 2,200 expert software developers. We partner with technology businesses globally helping them to build successful engineering teams and create innovative software products. Our expertise includes cloud solutions, data analytics, machine learning & AI, embedded software and IoT, enterprise VR, and RPA and enterprise platforms.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::vd75v-1770832320154-3c2268e79b55) for reference. Users are directed to consult the documentation for further information and troubleshooting.

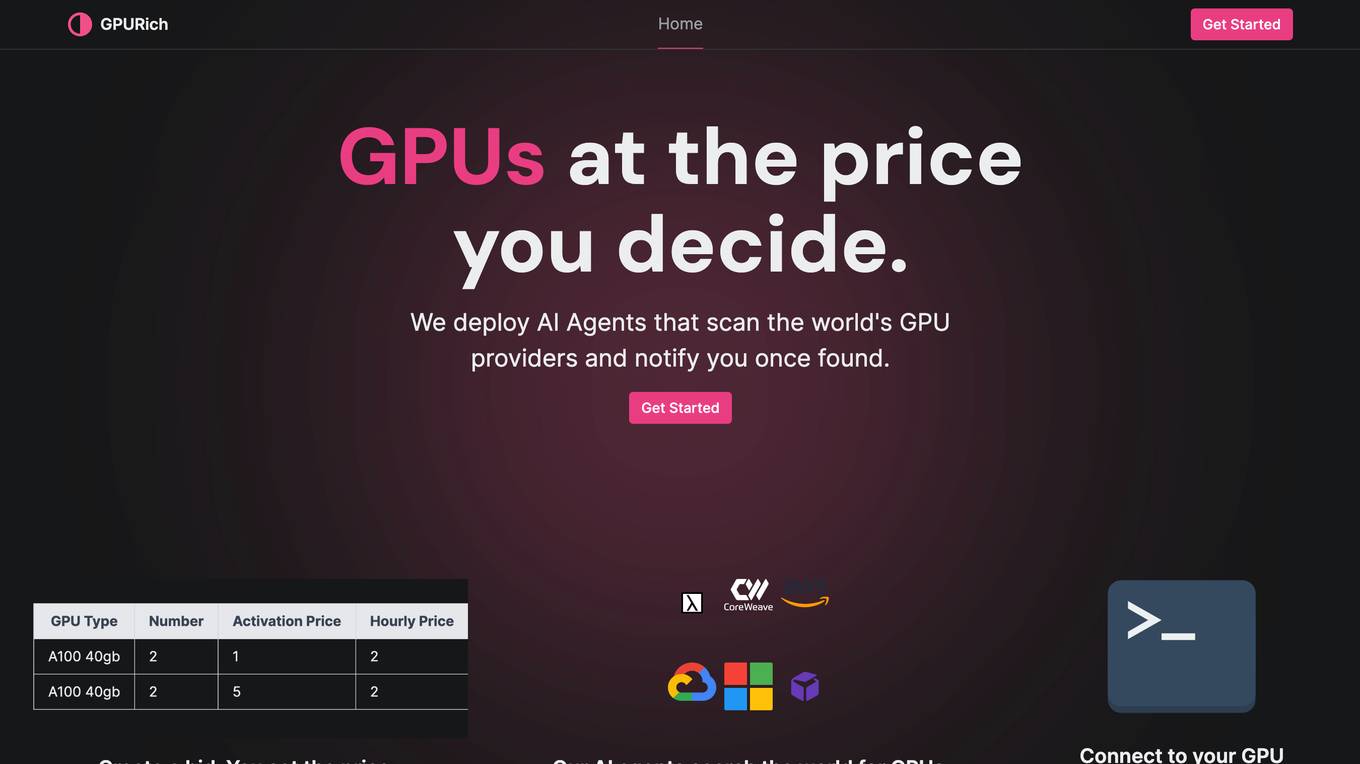

Replicate

Replicate is an AI tool that allows users to run and fine-tune models, deploy custom models at scale, and generate various types of content such as images, videos, music, and text with just one line of code. It provides access to a wide range of high-quality models contributed by the community, enabling users to explore, fine-tune, and deploy AI models efficiently. Replicate aims to make AI accessible and practical for real-world applications beyond academic research and demos.

Replicate

Replicate is an AI tool that allows users to run and fine-tune open-source models, deploy custom models at scale, and generate images, text, videos, music, and speech with just one line of code. It provides a platform for the community to contribute and explore thousands of production-ready AI models, enabling users to push the boundaries of AI beyond academic papers and demos. With features like fine-tuning models, deploying custom models, and scaling on Replicate, users can easily create and deploy AI solutions for various tasks.

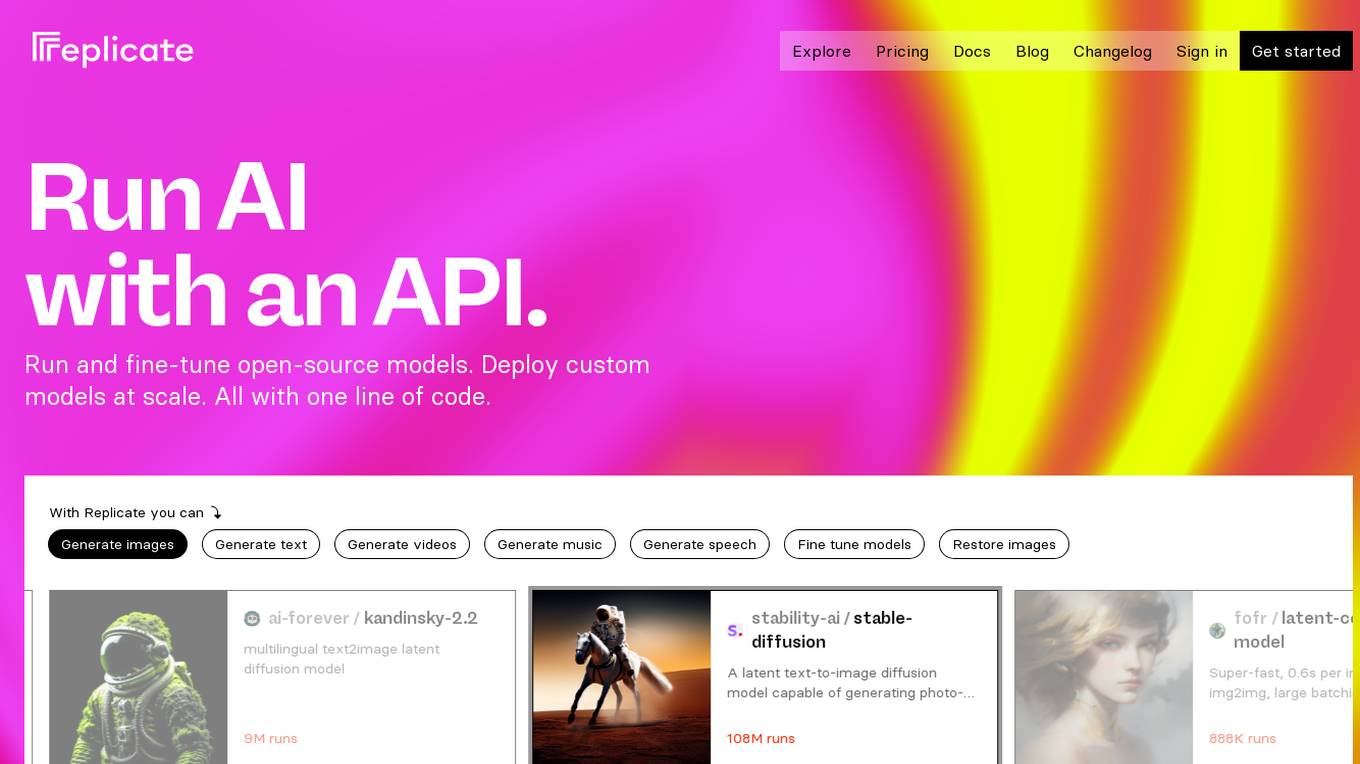

Paperspace

Paperspace is an AI tool designed to develop, train, and deploy AI models of any size and complexity. It offers a cloud GPU platform for accelerated computing, with features such as GPU cloud workflows, machine learning solutions, GPU infrastructure, virtual desktops, gaming, rendering, 3D graphics, and simulation. Paperspace provides a seamless abstraction layer for individuals and organizations to focus on building AI applications, offering low-cost GPUs with per-second billing, infrastructure abstraction, job scheduling, resource provisioning, and collaboration tools.

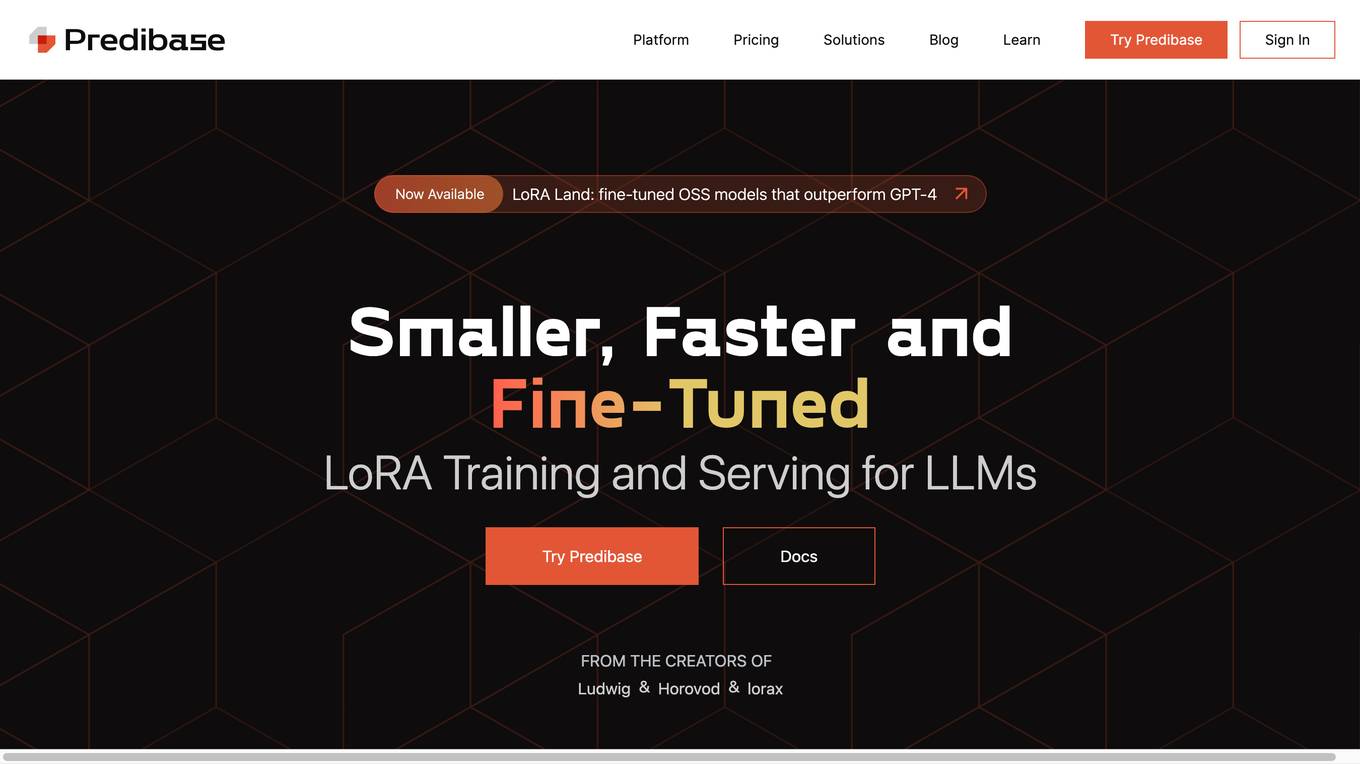

Predibase

Predibase is a platform for fine-tuning and serving Large Language Models (LLMs). It provides a cost-effective and efficient way to train and deploy LLMs for a variety of tasks, including classification, information extraction, customer sentiment analysis, customer support, code generation, and named entity recognition. Predibase is built on proven open-source technology, including LoRAX, Ludwig, and Horovod.

0 - Open Source Tools

20 - OpenAI GPTs

Serverless Architect Pro

Helping software engineers to architect domain-driven serverless systems on AWS

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

JIMAI - Cloud Researcher

Cybernetic humanoid expert in extraterrestrial tech, driven to merge past and future.

Alexandre Leroy : Architecte de Solutions Cloud

Cloud architect at KingLand and nature enthusiast. Cloud architecture design, expertise in cloud solutions, capacity for technological innovation, project management skills, cross-departmental collaboration.

🌟Technical diagrams pro🌟

Create UML flowcharts and Class, Sequence, Use Case, and Activity diagrams using PlantUML. System design and cloud infrastructure diagrams for AWS, Azure, and GCP. No login required.

Back-end Development Advisor

Drives efficient back-end processes through innovative coding and software solutions.

React on Rails Pro

Expert in Rails & React, focusing on high-standard software development.

The Amazonian Interview Coach

A role-play enabled Amazon/AWS interview coach specializing in STAR format and Leadership Principles.

IAC Code Guardian

Introducing IAC Code Guardian: Your Trusted IaC Security Expert in Scanning OpenTofu, Terraform, AWS CloudFormation, Pulumi, K8s YAML & Dockerfile

Infrastructure as Code Advisor

Develops, advises and optimizes infrastructure-as-code practices across the organization.