Best AI tools for Cloud Data Engineer

20 - AI tool Sites

Lightup

Lightup is a cloud data quality monitoring tool with AI-powered anomaly detection, incident alerts, and data remediation capabilities for modern enterprise data stacks. It specializes in helping large organizations implement successful and sustainable data quality programs quickly and easily. Lightup's pushdown architecture allows for monitoring data content at massive scale without moving or copying data, providing extreme scalability and optimal automation. The tool empowers business users with democratized data quality checks and enables automatic fixing of bad data at enterprise scale.
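To make the "pushdown" idea concrete, here is a minimal sketch of a data quality check that computes its metric inside the database and only pulls back one aggregated number. It is not Lightup's API or implementation: the `null_rate` and `is_anomalous` helpers, the `orders` table, the history values, and the simple z-score rule are all assumptions for illustration, and SQLite stands in for a warehouse.

```python
import sqlite3
from statistics import mean, stdev


def null_rate(conn: sqlite3.Connection, table: str, column: str) -> float:
    """Compute the fraction of NULLs inside the database (the pushdown step).

    Only one aggregated number leaves the database; no rows are copied out.
    Table and column names are trusted here because this is only a sketch.
    """
    query = (
        f"SELECT AVG(CASE WHEN {column} IS NULL THEN 1.0 ELSE 0.0 END) "
        f"FROM {table}"
    )
    (rate,) = conn.execute(query).fetchone()
    return rate if rate is not None else 0.0


def is_anomalous(value: float, history: list[float], threshold: float = 3.0) -> bool:
    """Flag a metric more than `threshold` standard deviations from its history."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    spread = stdev(history)
    if spread == 0:
        return value != mean(history)
    return abs(value - mean(history)) / spread > threshold


# Hypothetical demo data: an in-memory table where half of the emails are missing.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (3, "c@example.com"), (4, None)],
)

today = null_rate(conn, "orders", "email")
past_rates = [0.01, 0.02, 0.015, 0.01, 0.02]  # assumed history of daily null rates
alert = is_anomalous(today, past_rates)
```

In this sketch the warehouse does the scanning, so the cost of the check grows with the database's own query engine rather than with data transfer. A production tool would likely use more robust anomaly models than a fixed z-score threshold.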

Qubinets

Qubinets is a cloud data environment platform that provides building blocks for big data, AI, web, and mobile environments. It is an open-source, no lock-in, secured, and private platform that can be used on any cloud, including AWS, DigitalOcean, Google Cloud, and Microsoft Azure. Qubinets makes it easy to plan, build, and run data environments, and it saves time and money by reducing the grunt work in setup and provisioning.

Trifacta API Documentation

Trifacta API Documentation provides reference information on all of the available endpoints for each product edition. This website does not account for disabled features or your specific account permissions. To review API documentation for the endpoints to which your account has access, select Help menu > API Documentation from the Trifacta application menu.

Dflux

Dflux is a cloud-based Unified Data Science Platform that offers end-to-end data engineering and intelligence with a no-code ML approach. It enables users to integrate data, perform data engineering, create customized models, analyze interactive dashboards, and make data-driven decisions for customer retention and business growth. Dflux bridges the gap between data strategy and data science, providing a powerful SQL editor, intuitive dashboards, an AI-powered text-to-SQL query builder, and AutoML capabilities. It accelerates insights with data science, enhances operational agility, and ensures a well-defined, automated data science life cycle. The platform caters to Data Engineers, Data Scientists, Data Analysts, and Decision Makers, offering all-round data preparation, AutoML models, and built-in data visualizations. Dflux is a secure, reliable, and comprehensive data platform that automates analytics, machine learning, and data processes, making the path from data to insights easy and accessible for enterprises.

OpenBuckets

OpenBuckets is a web application designed to help users find and secure open buckets in cloud storage systems. It provides a user-friendly interface for scanning and identifying unprotected buckets, allowing users to take necessary actions to secure their data. With OpenBuckets, users can easily detect potential security risks and prevent unauthorized access to their sensitive information stored in the cloud.

Alluxio

Alluxio is a data orchestration platform designed for the cloud, offering seamless access, management, and running of AI/ML workloads. Positioned between compute and storage, Alluxio provides a unified solution for enterprises to handle data and AI tasks across diverse infrastructure environments. The platform accelerates model training and serving, maximizes infrastructure ROI, and ensures seamless data access. Alluxio addresses challenges such as data silos, low performance, data engineering complexity, and high costs associated with managing different tech stacks and storage systems.

Pointly

Pointly is an intelligent, cloud-based B2B software solution that enables efficient automatic and advanced manual classification in 3D point clouds. It offers innovative AI techniques for fast and precise data classification and vectorization, transforming point cloud analysis into an enjoyable and efficient workflow. Pointly provides standard and custom classifiers, tools for classification and vectorization, API and on-premise classification options, collaboration features, secure cloud processing, and scalability for handling large-scale point cloud data.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

Reaktr.ai

Reaktr.ai is an AI-driven technology solutions provider that offers advanced AI automation services, predictive analytics, and sophisticated machine learning algorithms to help enterprises operate with agility and precision. The platform equips businesses with intelligent automation, enhanced security, and immersive experiences to drive growth, efficiency, and innovation. Reaktr.ai specializes in cloud management, cybersecurity, and AI services, providing solutions for data infrastructure, security testing, compliance, and more. With a commitment to redefining how enterprises operate, Reaktr.ai leverages AI capabilities to help businesses prosper in an AI-ready landscape.

DATAFOREST

DATAFOREST is an AI-powered data engineering company that offers a wide range of services including generative AI, data science, web and mobile development, DevOps, cloud solutions, digital transformation, and more. They provide custom data-driven solutions for small and medium-sized businesses, focusing on efficiency improvement, revenue growth, and cost reduction. With over 15 years of experience, DATAFOREST helps businesses automate complex tasks, enhance decision-making, boost productivity, and streamline operations through AI and machine learning technologies.

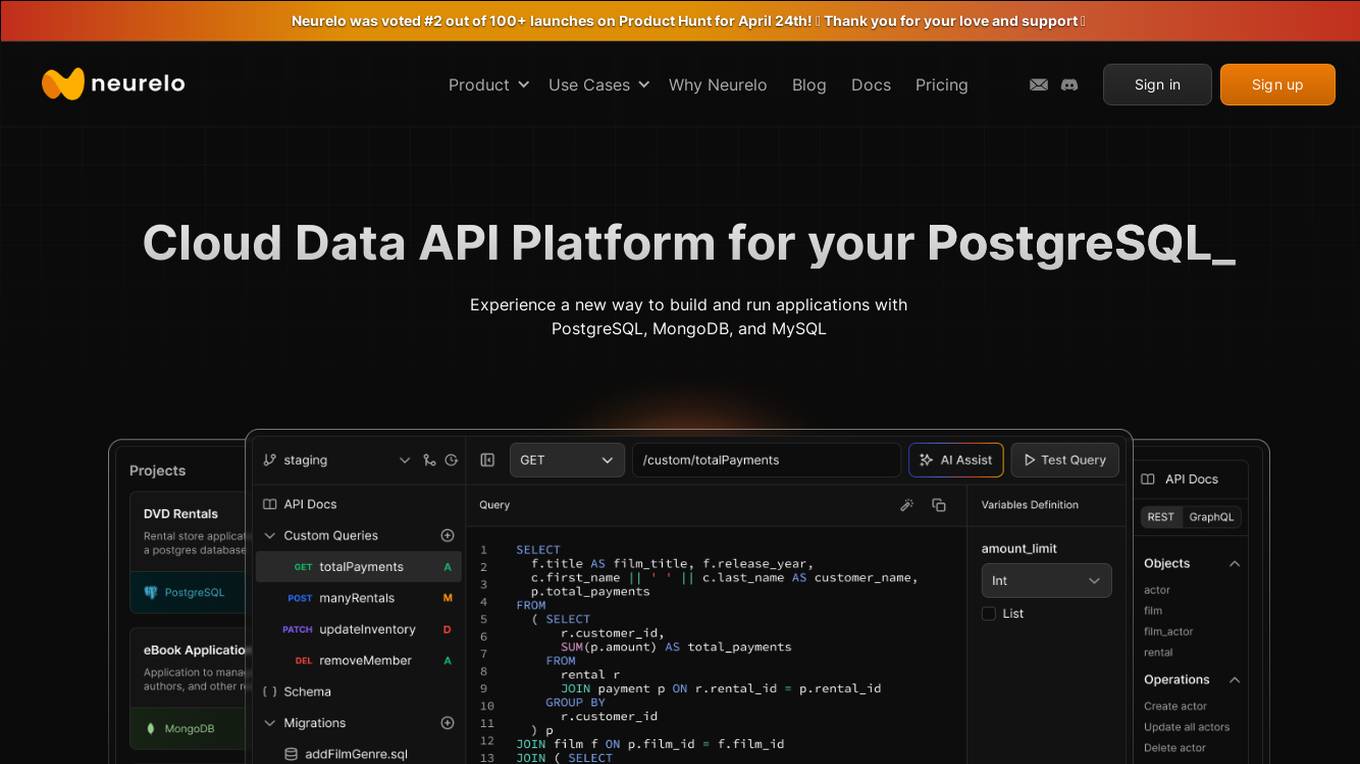

Neurelo

Neurelo is a cloud API platform that offers services for PostgreSQL, MongoDB, and MySQL. It provides features such as auto-generated APIs, custom query APIs with AI assistance, query observability, schema as code, and the ability to build full-stack applications in minutes. Neurelo aims to empower developers by simplifying database programming complexities and enhancing productivity. The platform leverages the power of cloud technology, APIs, and AI to offer a seamless and efficient way to build and run applications.

Global Nodes

Global Nodes is a global leader in innovative solutions, specializing in Artificial Intelligence, Data Engineering, Cloud Services, Software Development, and Mobile App Development. They integrate advanced AI to accelerate product development and provide custom, secure, and scalable solutions. With a focus on cutting-edge technology and visionary thinking, Global Nodes offers services ranging from ideation and design to precision execution, transforming concepts into market-ready products. Their team has extensive experience in delivering top-notch AI, cloud, and data engineering services, making them a trusted partner for businesses worldwide.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

Datamation

Datamation is a leading industry resource for B2B data professionals and technology buyers. Datamation’s focus is on providing insight into the latest trends and innovation in AI, data security, big data, and more, along with in-depth product recommendations and comparisons. More than 1.7M users gain insight and guidance from Datamation every year.

HST Solutions

HST Solutions is a trusted digital engineering and enterprise modernization partner that offers custom software development, AI applications, and data engineering services. They combine deep technical expertise and industry experience to help clients anticipate future needs and provide innovative solutions. The company focuses on delivering transformation and solving complex challenges with precision and innovation.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

DevRev

DevRev is an AI-native modern support platform that offers a comprehensive solution for customer experience enhancement. It provides data engineering, knowledge graph, and customizable LLMs to streamline support, product management, and software development processes. With features like in-browser analytics, consumer-grade social collaboration, and global scale API calls, DevRev aims to bring together different silos within a company to drive efficiency and collaboration. The platform caters to support people, product managers, and developers, automating tasks, assisting in decision-making, and elevating collaboration levels. DevRev is designed to empower digital product teams to assimilate customer feedback in real-time, ultimately powering the next generation of technology companies.

Valohai

Valohai is a scalable MLOps platform that enables Continuous Integration/Continuous Deployment (CI/CD) for machine learning and pipeline automation on-premises and across various cloud environments. It helps streamline complex machine learning workflows by offering framework-agnostic ML capabilities, automatic versioning with complete lineage of ML experiments, hybrid and multi-cloud support, scalability and performance optimization, streamlined collaboration among data scientists, IT, and business units, and smart orchestration of ML workloads on any infrastructure. Valohai also provides a knowledge repository for storing and sharing the entire model lifecycle, facilitating cross-functional collaboration, and allowing developers to build with total freedom using any libraries or frameworks.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

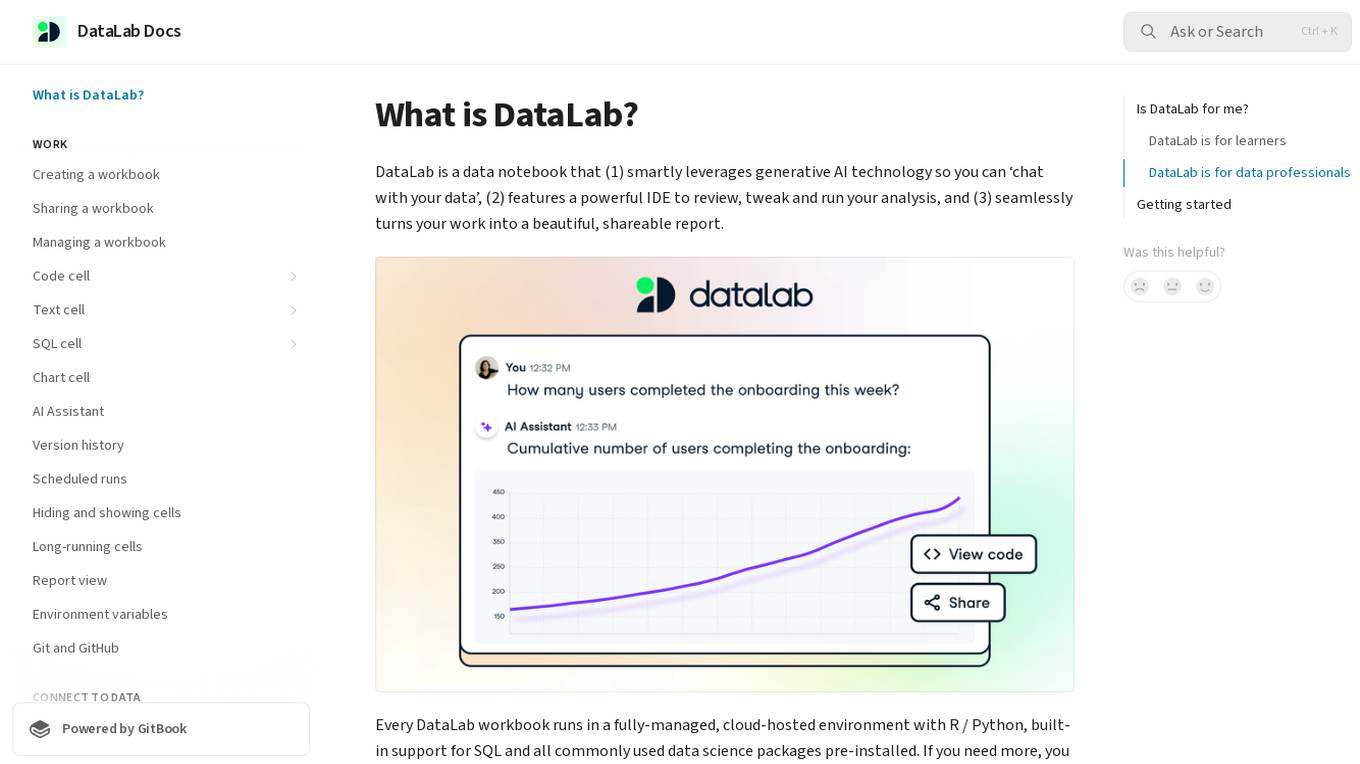

DataLab

DataLab is a data notebook that smartly leverages generative AI technology to enable users to 'chat with their data'. It features a powerful IDE for analysis, and seamlessly transforms work into shareable reports. The application runs in a cloud-hosted environment with support for R/Python, SQL, and various data science packages. Users can connect to external databases, collaborate in real-time, and utilize an AI Assistant for code generation and error correction.

1 - Open Source Tools

litdata

LitData is a tool designed for blazingly fast, distributed streaming of training data from any cloud storage. It allows users to transform and optimize data in cloud storage environments efficiently and intuitively, supporting various data types like images, text, video, audio, geo-spatial, and multimodal data. LitData integrates smoothly with frameworks such as LitGPT and PyTorch, enabling seamless streaming of data to multiple machines. Key features include multi-GPU/multi-node support, easy data mixing, pause & resume functionality, support for profiling, memory footprint reduction, cache size configuration, and on-prem optimizations. The tool also provides benchmarks for measuring streaming speed and conversion efficiency, along with runnable templates for different data types. LitData enables infinite cloud data processing by utilizing the Lightning.ai platform to scale data processing with optimized machines.
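The pause and resume feature mentioned above can be pictured with a small, generic sketch: a stream over chunked samples that records its position so that a later run can continue from the same sample. This is not LitData's code or API; the `ResumableStream` class, its method names, and the in-memory chunks are hypothetical stand-ins for chunk files kept in cloud storage.

```python
from typing import Iterator


class ResumableStream:
    """Iterate over chunked samples and remember the position between runs."""

    def __init__(self, chunks: list[list[str]]) -> None:
        self.chunks = chunks
        self.chunk_index = 0   # chunk currently being read
        self.sample_index = 0  # next sample to yield inside that chunk

    def __iter__(self) -> Iterator[str]:
        while self.chunk_index < len(self.chunks):
            chunk = self.chunks[self.chunk_index]
            while self.sample_index < len(chunk):
                sample = chunk[self.sample_index]
                self.sample_index += 1  # advance before yielding so a pause is safe
                yield sample
            self.chunk_index += 1
            self.sample_index = 0

    def state_dict(self) -> dict[str, int]:
        """Return the position needed to resume later."""
        return {"chunk_index": self.chunk_index, "sample_index": self.sample_index}

    def load_state_dict(self, state: dict[str, int]) -> None:
        """Restore a position saved by state_dict()."""
        self.chunk_index = state["chunk_index"]
        self.sample_index = state["sample_index"]


# Hypothetical data: two chunks, as if they were two files in a bucket.
chunks = [["a", "b"], ["c", "d", "e"]]

stream = ResumableStream(chunks)
iterator = iter(stream)
first = [next(iterator) for _ in range(3)]  # read three samples, then "pause"
checkpoint = stream.state_dict()

resumed = ResumableStream(chunks)
resumed.load_state_dict(checkpoint)
rest = list(resumed)  # continue from the saved position
```

Saving only a chunk index and an offset keeps the checkpoint tiny, which is one plausible reason chunked formats suit interrupted training jobs.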

20 - OpenAI GPTs

Data Engineer Consultant

Guides users through data engineering tasks with a focus on practical solutions.

Nimbus Navigator

Cloud Engineer Expert, guiding in cloud tech, projects, career, and industry trends.

Kafka Expert

I will help you to integrate the popular distributed event streaming platform Apache Kafka into your own cloud solutions.

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

KQL Query Helper

The KQL Query Helper GPT is tailored specifically for assisting users with Kusto Query Language (KQL) queries. It leverages extensive knowledge from Azure Data Explorer documentation to aid users in understanding, reviewing, and creating new KQL queries based on their prompts.

NoSQL Code Helper

Assists with NoSQL programming by providing code examples, debugging tips, and best practices.

JIMAI - Cloud Researcher

Cybernetic humanoid expert in extraterrestrial tech, driven to merge past and future.

Cloud Certifications

AI Cloud Certification Assistant: Google Cloud expert with timed exams and specific service exercises.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

Cloud Networking Advisor

Optimizes cloud-based networks for efficient organizational operations.

Apple CloudKit Complete Code Expert

A detailed expert trained on all 5,671 pages of Apple CloudKit, offering complete coding solutions. Saving time? https://www.buymeacoffee.com/parkerrex ☕️❤️

The Amazonian Interview Coach

A role-play enabled Amazon/AWS interview coach specializing in STAR format and Leadership Principles.