Best AI tools for Associate Director, Drug Delivery

20 - AI tool Sites

Wendy Labs

Wendy Labs is an AI application that provides on-call AI Therapist services for teams to support mental health and well-being in the workplace. The application offers 24/7 mental health support, personalized assistance, and measurable insights to empower employees and improve team retention. Wendy aims to eliminate stigma associated with seeking mental health support and offers a cost-effective and scalable solution for organizations to address mental health issues effectively.

Music Business Worldwide

Music Business Worldwide is a platform that provides news, interviews, analysis, and job opportunities for the global music industry. It covers a wide range of topics such as artist management, music production, songwriting, industry insights, and financial reports. The platform aims to keep music professionals informed about the latest trends and developments in the music business.

Gladly

Gladly is an AI-powered Customer Service Platform that focuses on delivering exceptional customer experiences by centering on people rather than tickets. It offers a unified solution for customer communication across various channels, combining AI technology with human support to enhance efficiency, decrease costs, and drive revenue growth. Gladly stands out for its customer-centric approach, personalized self-service, and the ability to maintain a single lifelong conversation with customers. The platform is trusted by world-renowned brands and has proven success stories in improving customer interactions and agent productivity.

MetaMuse

MetaMuse is an AI-powered marketing tool that provides access to award-winning creative ideas and strategies without the high costs associated with traditional agencies. By analyzing over 20,500 successful campaigns and leveraging AI technology, MetaMuse democratizes creative expertise and offers lightning-fast turnaround for generating groundbreaking marketing concepts. Users can define their marketing challenges, connect with their target audience, and generate winning ideas with the help of an AI marketing expert, creative strategist, and creative team. MetaMuse aims to empower businesses to create unforgettable brand campaigns and drive real results.

Connected-Stories

Connected-Stories is the next generation of Creative Management Platforms powered by AI. It is a cloud-based platform that helps creative teams to manage their projects, collaborate with each other, and track their progress. Connected-Stories uses AI to automate many of the tasks that are typically associated with creative management, such as scheduling, budgeting, and resource allocation. This allows creative teams to focus on their work and be more productive.

Best Tire Deals Online

The website Best Tire Deals Online provides a comprehensive guide for buying tires online, including tips on comparing options, arranging professional installation, choosing between mobile fitting or retail shops, and maintaining new tires. It also offers information on tire size, speed rating, load index, treadwear warranties, and verified tests to help users make confident online purchases. The platform allows users to filter by vehicle fitment and performance priorities, and provides insights on tire installation services, maintenance, and warranty considerations. Additionally, the website features a search directory for various industries and topics.

GPT Builders

GPT Builders is a platform offering customizable GPT models to create personalized AI tools for various tasks. The directory includes mini ChatGPTs trained for specific functions like customer service and market research. With multi-model agents, privacy controls, seamless integration, flexibility, memory, personalized communication, increased efficiency, and API access, businesses can enhance operations and decision-making. The application empowers users to navigate market trends, identify leads, and catalyze conversions, leading to improved efficiency, customer satisfaction, and growth.

KeysAI

KeysAI is an AI-powered sales associate that helps dealerships save money and convert web traffic into dealership traffic. It is trained on cutting-edge automotive sales techniques and has a knowledge center that knows everything about your dealership, your inventory, and your customer. KeysAI is available 24/7 and can handle thousands of prospective buyers at the same time, for a fraction of the cost of traditional BDCs. It converts web traffic into foot traffic at your dealership, which means you sell more cars. KeysAI drives more leads at lower costs than your current chat solution and increases your ROI.

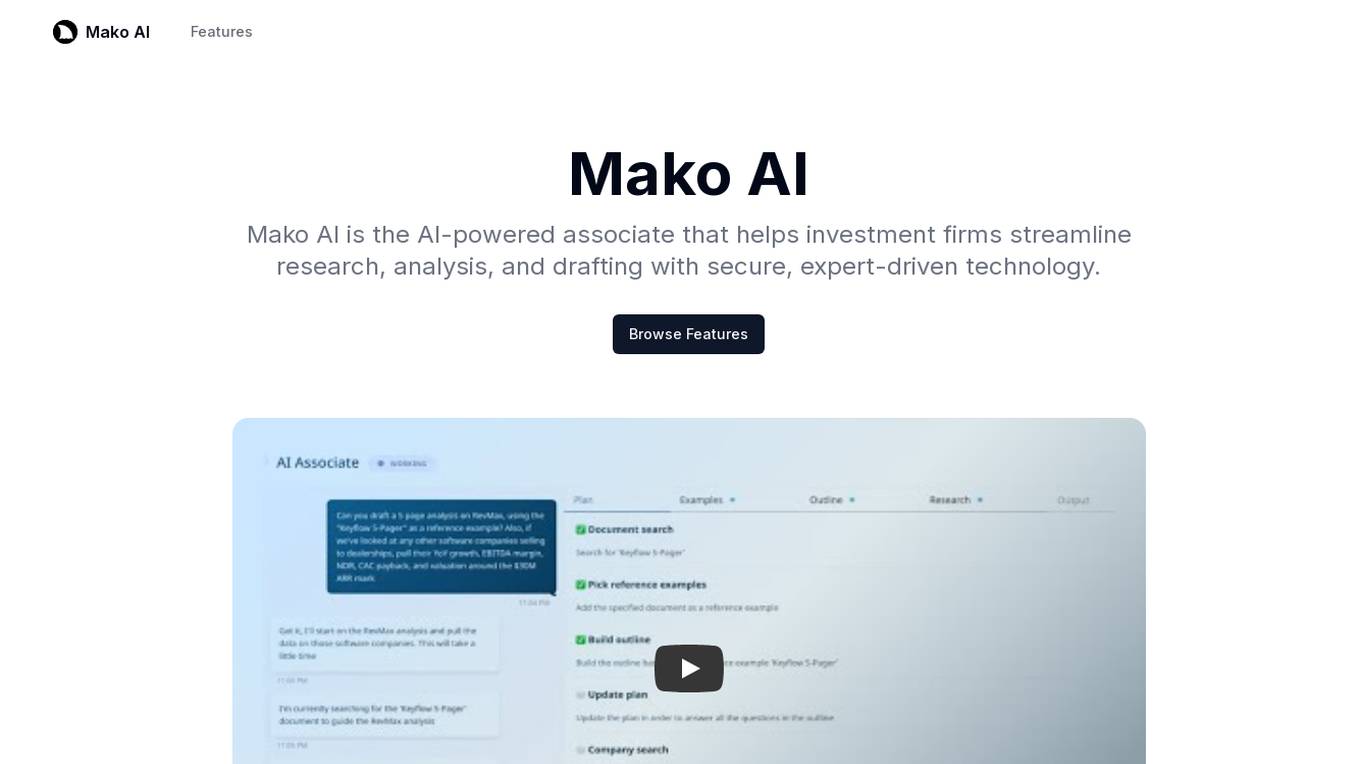

Mako AI

Mako AI is an AI-powered associate designed to revolutionize the workflows of investment firms by streamlining research, analysis, and drafting processes. It offers essential tools to simplify data access, safeguard information, and provide actionable insights. With features like enterprise search, chat capabilities, and a knowledge base, Mako AI centralizes institutional knowledge and ensures data security with SOC 2 Type II certification. The application is easy to implement, prioritizes security, and enhances collaboration within firms.

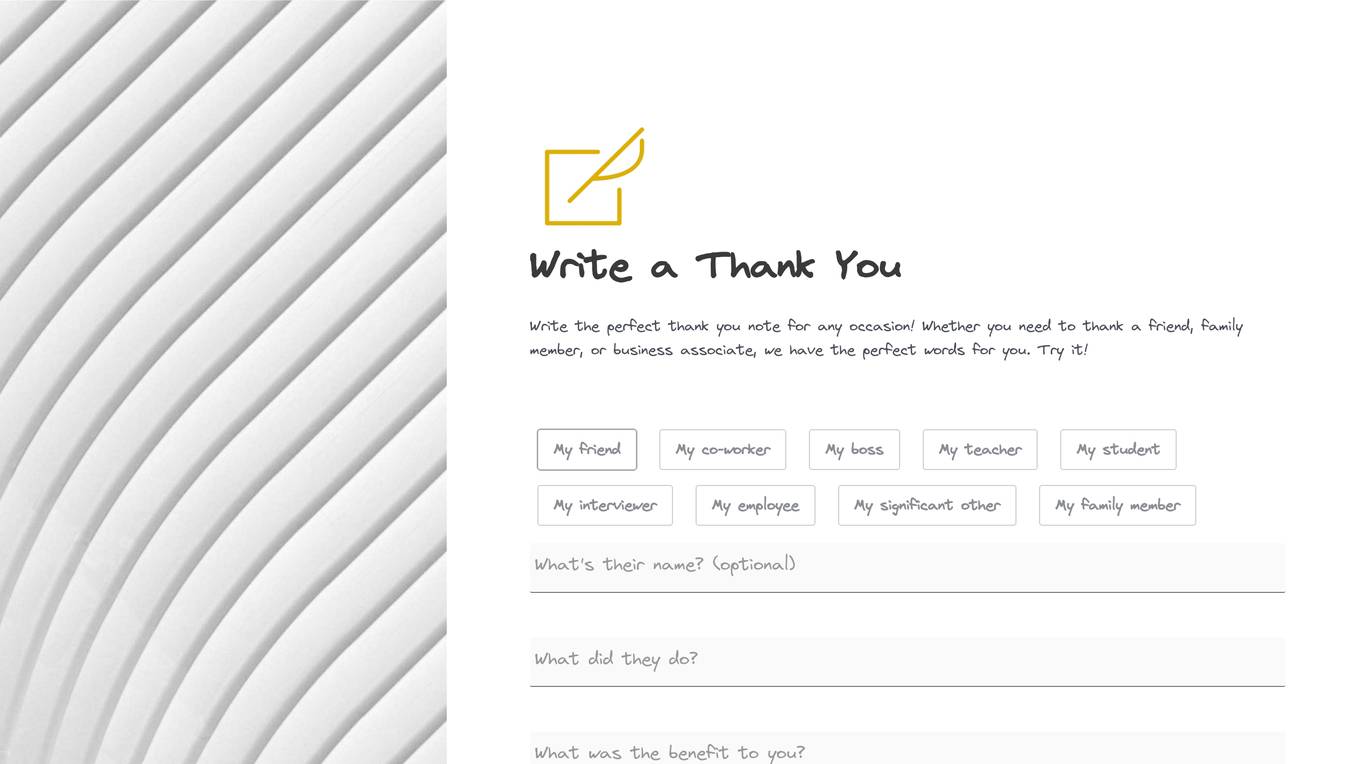

ThankYouNote.app

ThankYouNote.app is an AI-powered tool that helps users write personalized and heartfelt thank-you notes for any occasion. It offers a range of templates and examples to choose from, making it easy to express gratitude in a thoughtful and meaningful way. The tool is designed to assist users in crafting custom thank-you notes that are perfect for any situation, whether it's for a gift, an act of kindness, or simply to show appreciation.

Just Walk Out technology

Just Walk Out technology is a checkout-free shopping experience that allows customers to enter a store, grab whatever they want, and quickly get back to their day, without having to wait in a checkout line or stop at a cashier. The technology uses either camera vision and sensor fusion or RFID, which allows customers to simply walk away with their items. Just Walk Out technology is designed to increase revenue with cost-optimized technology, maximize space productivity, increase throughput, optimize operational costs, and improve shopper loyalty.

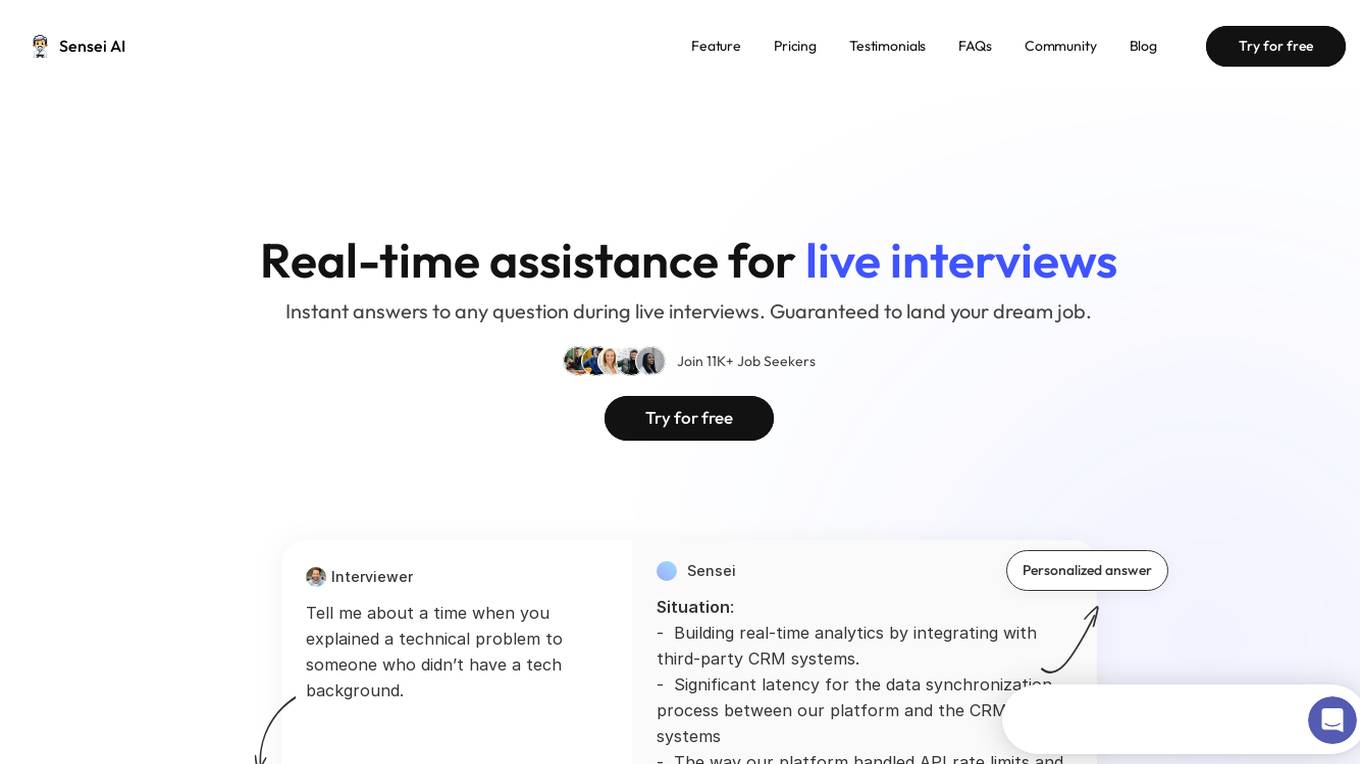

Sensei AI

Sensei AI is a real-time interview copilot application designed to provide assistance during live interviews. It offers instant answers to questions, personalized responses, and aims to help users land their dream job. The application uses advanced AI insights to understand the true intent behind interview questions, tailoring responses based on tone, word choices, keywords, timing, formality level, and context. Sensei AI also offers a hands-free experience, robust privacy features, and a personalized interview experience by tailoring answers to the user's job role, resume, and personal stories.

Intuitivo

Intuitivo is an AI/Computer Vision company building the future of retail, designing the perfect one-on-one shopping experience. We aim to create a connected, physical point of contact by meeting your client halfway; no lines, no friction. Our A-POPs facilitate seamless, cash-free purchases that naturally incorporate themselves into any customer’s routine. It’s simple, fully automated, and digitally intuitive.

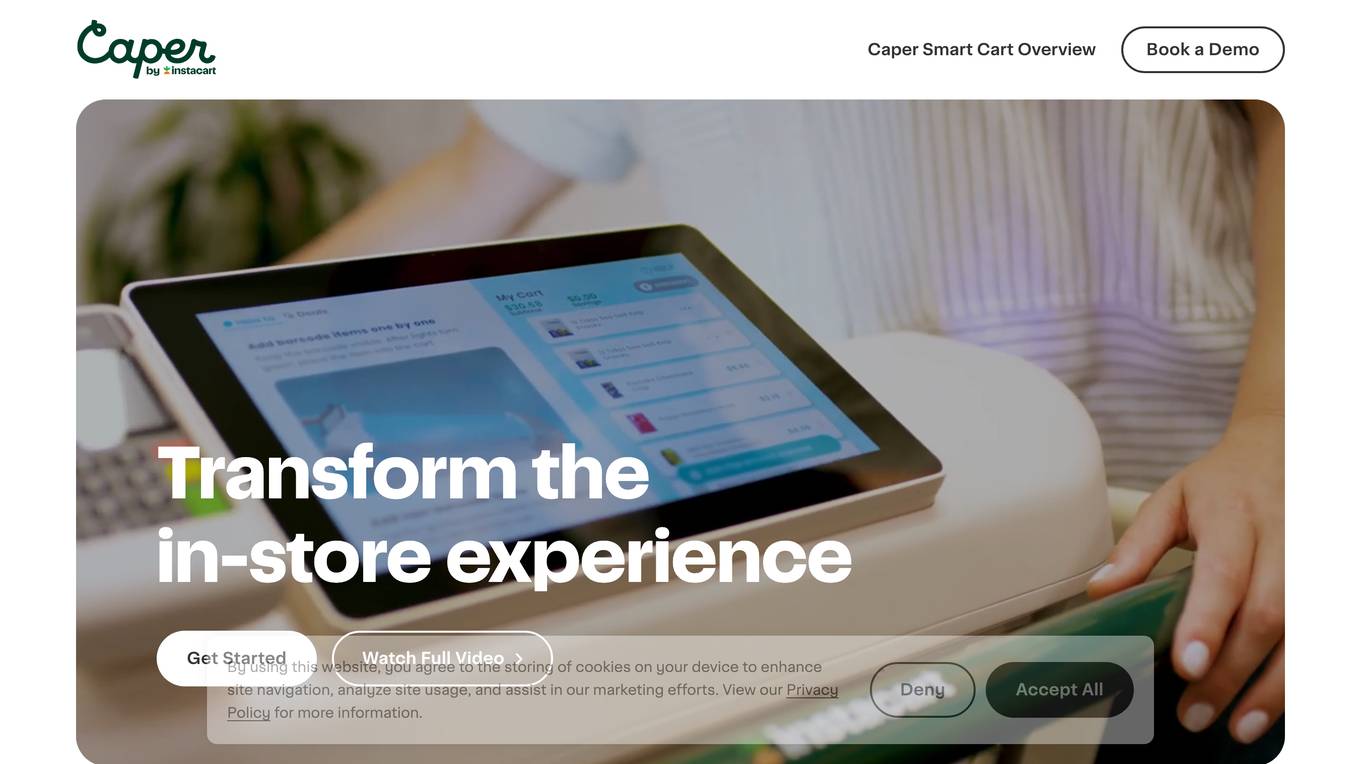

Caper

Caper is an AI-powered smart shopping cart technology that revolutionizes the in-store shopping experience for retailers. It offers seamless and personalized shopping, incremental consumer spend, and alternate revenue streams through personalized advertising and loyalty program integration. Caper enhances customer engagement with gamification features and provides anti-theft and operational capabilities for retailers. The application integrates with existing POS systems and loyalty programs, offering a unified online and in-store grocery experience. With advanced technology like sensor fusion and AI integration, Caper transforms the traditional shopping cart into a smart, interactive tool for both customers and retailers.

Boutiq

Boutiq is an AI-powered video clienteling platform that helps Shopify stores provide a more personalized and engaging shopping experience for their customers. With Boutiq, customers can start or schedule a video chat with a sales associate anywhere on the Shopify store, allowing them to get personalized advice and assistance without leaving their home. This can lead to higher sales, increased customer satisfaction, and reduced returns.

Product Discovery & Comparison Platform

The website is a platform that allows users to discover and compare various products across different categories such as cars, phones, laptops, TVs, smartwatches, and more. Users can easily find information on high-end, mid-tier, and low-cost options for each product category, enabling them to make informed purchasing decisions. The site aims to simplify the process of product research and comparison for consumers looking to buy different items.

Blozum

Blozum is an advanced AI chat assistant application designed to enhance website conversions. It offers digital sales assistants that act as a 24/7 sales force, engaging with platform visitors, providing instant answers, and guiding customers through purchase journeys. The application leverages AI to optimize interactions, personalize user experiences, and streamline the sales process. Blozum is suitable for various industries such as Ecommerce, Real Estate, Retail, Web3, Insurance, Banking, Edtech, Healthcare, and more.

Beacon Biosignals

Beacon Biosignals provides an EEG neurobiomarker platform that is designed to accelerate clinical trials and enable new treatments for patients with neurological and psychiatric diseases. Their platform is powered by machine learning and a world-class clinico-EEG database, which allows them to analyze existing EEG data for insights into mechanisms, PK/PD, and patient stratification. This information can be used to guide further development efforts, optimize clinical trials, and enhance understanding of treatment efficacy.

AiCure

AiCure provides a patient-centric eClinical trial management platform that enhances drug development through improved medication adherence rates, more powerful analysis and prediction of treatment response using digital biomarkers, and reduced clinical tech burden. AiCure's solutions support traditional, decentralized, or hybrid trials and offer flexibility to meet the needs of various research designs.

Nara

Nara is an AI-powered digital sales associate that helps online stores increase sales and provide 24/7 support across all chat channels. It automates customer engagement by answering support questions, providing tailored shopping advice, and simplifying customer checkout. Nara offers different pricing plans to suit varying needs and provides a human touch experience similar to interacting with a helpful sales associate in physical stores.

0 - Open Source Tools

20 - OpenAI GPTs

VC Associate

A GPT assistant that helps analyze a startup or market. The answers you get back are already structured to give you the core elements you would want to see in an investment memo or market analysis.

Mattress Matchmaker

I will help you find the perfect mattress tailored to your unique sleeping needs!

Smart Shopper Assistant

AI-powered pal for smart product comparisons, savvy shopping tips, and instant image-to-product matching

Price Is Right Bot 3000

Finds and compares product prices across online retailers from uploaded images.

Savvy Saver

A friendly assistant personalizing coupon searches for all items and retailers.

TV Comparison | Comprehensive TV Database

Compare TV devices. Uncover the pros and cons of the latest TV models.

Black Friday Cyber Monday - Deal Guide 2023

Comprehensive Black Friday/Cyber Monday shopping and budgeting advisor